Lecture 11

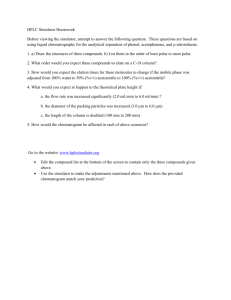

ICOM 5995: Performance Instrumentation and Visualization for High Performance Computer Systems
Lecture 11
November 27, 2002
Nayda G. Santiago

Announcement
- Today
- Due: Course Project
- Final Presentations (15 minutes)
- See email for details on points
- December 2 and 4, 2002
- Emailed evaluation form for oral presentations
- Grades

Overview
- Current research in HPC and performance
- Applications
- Architectures
- Grid
- MPPs
- Automatic Performance Evaluation
- Programming Paradigms
- Insights
- Distributed Shared Memory
- UPC

Reference
- Supercomputing 2002

Current Research Areas
- Conference: Supercomputing 2002
- November 16-22, 2002
- Baltimore, Maryland
- From Terabytes to Insights

Computing: Getting Us on the Path to Wisdom
Dr. Rita R. Colwell, Director, National Science Foundation

With dramatic advances in computation, we are poised to enter a new era. Supercomputation can help us transform the deluge of data being generated into valuable nuggets of knowledge. Knowledge is the significant step to wisdom, which ultimately distinguishes enduring and enlightened societies from others.

TOP500 Supercomputers
Erich Strohmaier, NERSC

The TOP500 project has tracked supercomputer installations around the world since 1993. The twentieth TOP500 list was published in November 2002 at SC2002. Various experts presented detailed analyses of the TOP500 and discussed the changes in the HPC marketplace over recent years. The analysis covered the number of systems installed, their performance, the locations of the supercomputers, the architectures of HPC systems, and the applications that run on them.

Earth Simulator: fastest computer

The Earth Simulator Project aims to create a "virtual planet earth" on a very high performance computer by processing the vast volume of data sent from satellites, buoys, and other observation points worldwide.
The system will help analyze and predict environmental changes on the earth by simulating global-scale environmental phenomena such as global warming, the El Niño effect, atmospheric and marine pollution, torrential rainfall, and other complicated environmental effects. It will also provide an outstanding research tool for explaining terrestrial phenomena such as tectonics and earthquakes.
- 35.6 trillion mathematical operations per second

Earth Simulator

The Earth Simulator came into operation in March 2002, after a five-year development stage. It achieved 35.86 TF on the Linpack benchmark. More important, a global atmospheric circulation code has been optimized to reach 26.58 TF. This supports the feasibility of using the Earth Simulator for reliable prediction of global environmental changes.
Tetsuya Sato, Director-General, The Earth Simulator Center, Japan Marine Science and Technology Center (JAMSTEC)
- $400 million
- 87% of peak performance
- 5104 processors

Architectures

Grids
- Connect multiple regional and national computational grids to create a universal source of computing power.
- Cluster a wide variety of geographically distributed resources, such as supercomputers, storage systems, data sources, and special devices and services, so that they can be used as a unified resource.

Text Captioning for the Grid
- Trace R&D Center of the University of Wisconsin-Madison
- Speech-to-text translation
- Speaker-independent speech recognition systems running on Grid resources
- Speaker at Baltimore
- Translation at Franklin Park, Illinois (Trace)
- Correction by persons in the audience (Baltimore)
- Technical terms that are not common in everyday use

Speech-to-text management service. Red indicates corrected words.

Cray X1
- 12.8 gigaflops processors
- 52.4 teraflops peak computing power
- Tightly coupled MPP architecture
- Vector processing and distributed shared memory architecture
- UPC

Itanium
- Co-developed by Hewlett-Packard (HP) and Intel
- Architecture
- Two FMAC (floating-point multiply-add) units
- Two SIMD FMACs for 3-D graphics
- Eight single-precision floating-point operations per cycle, for a 6.4 GFLOPS single-precision rating on an 800 MHz processor
- Pipelined functional units
- Dual-function arithmetic units
- Large register sets, 82 bits wide
- Internal parallelism: can issue up to six instructions per cycle

Gelato
www.gelato.org

The Gelato Federation is a worldwide federation of research organizations dedicated to collaboration on scalable, open-source Linux-based computing on Intel Itanium Processor Family platforms.
- Gelato in association with HP

Automatic Performance Analysis
Michael Gerndt, Technische Universitaet Muenchen

The Esprit Working Group APART (Automatic Performance Analysis: Real Tools) is a group of 8 European and 3 American partners (www.fz-juelich.de/apart). The working group explores all issues in automatic performance analysis support for parallel machines and grids.
- Software Engineering
- Formal Definitions

Automatic Performance Analysis Tools
- Autopilot
- Finesse
- Kappa-PI
- KOJAK
- Paradyn
- Peridot
- S-Check
- Virtual Adrian

Distributed Shared Memory
- Independent threads operating in a shared space.
- The shared space is logically partitioned among threads.
- Each thread, and the portion of the shared space that has affinity to it, is mapped to the same physical node.

[Figure: global address space for Thread 0, Thread 1, Thread 2, ..., Thread N-1, showing the shared space partitioned among the threads and the private spaces Private 0, Private 1, Private 2, ..., Private N-1.]

Unified Parallel C (UPC)
- Researcher: Tarek El-Ghazawi, The George Washington University
- Consortium of government, industry, and academia.
- UPC is an explicit parallel extension of ANSI C.
- Consortium members: GWU, IDA, DoD, ARSC, Compaq, CSC, Cray Inc., Etnus, HP, IBM, Intrepid Technologies, LBNL, LLNL, MTU, SGI, Sun Microsystems, UC Berkeley, and US DoE.
- All language features of C.
- Distributed shared memory programming model.

Pointers
Four distinct possibilities:
- private pointers pointing to the private space,
- private pointers pointing to the shared space,
- shared pointers pointing to the shared space, and
- shared pointers pointing into the private space.

New NAS Parallel Benchmark
- NPB2.4: larger problems, I/O benchmark, Class D size
- NPB3.0: new parallelizations, new languages (OpenMP, High Performance Fortran, Java threads)
- GridNPB3.0: benchmarking for grid computing

SC 2003
- Phoenix, Arizona
- November 15-21, 2003
- Igniting Innovation