Quality Control: data processing


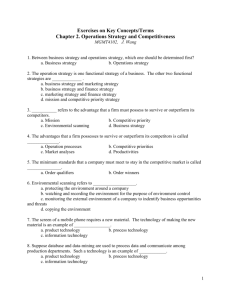

Quality Control

Data Processing Operations

Scanning, data capture and quality assurance

Quality in the Data Process

Geoffrey Greenwell, Data Processing Advisor, IPC

Quality in the Data PROCESS

P-R-O-C-E-S-S

- Personal Process
- Automated Process

Conceptual Overview

P-R-O (TQM)

- Personal Commitment to Excellence
- Reject Cynicism or Satisfaction
- Own the process

C-E-S-S (Six Sigma, Lean Manufacturing)

- Careful Design
- Evaluate Continually
- Shift Perspectives
- Shelf Life

Conceptual Overview

- Form Flow: Information, Exformation
- Data Entry: Manual, Scanning
- Edits: Structure, Pre-edit, Consistency, Tabulation controls
- Data Archive

Form Flow

- Flow charting is a fundamental tool for careful design.
- Flow charting is the mapping of the process.
- A flow chart is defined as a pictorial representation describing a process being studied, or even used to plan stages of a project. Flow charts tend to provide people with a common language or reference point when dealing with a project or process. (Source: deming.eng.clemson.edu)

Example: Process Flow Chart, Finding the Best Way Home

This is a simple case of the processes and decisions involved in finding the best route home at the end of the working day.

[Figure: primary flowchart symbols]

Form Flow

Software for flowcharting:

- ABC Flowcharter
- Visio
- Corel Flow
- (Microsoft Word has basic flow chart symbols)

Form Flow

- External information flow (Exformation): all the processes involved in managing questionnaires and producing progress reports. This is the paper flow.
- Internal information flow (Information): all the processes involved in managing the electronic processes and producing FEEDBACK. This is the data flow.

Ex-Form Flow

Look at the “nodes”. In this case, the exchange of forms is a “pressure drop.”

[Figure: form-flow diagram with nodes 1, 2A, 2B, 2C, 3, 4, 5 and 6]

1. Field office to data entry center.
2. Center to data entry group supervisor.
3. Supervisor to data entry person.
4. Problems/troubleshooting.
5. Storage.
6. Retrieval.

Every node is a potential loss of control in the process.
Ways to control:

- Operational control forms (electronic systems)
- Progress reports
- Assign responsible persons

Ex-Form Monitoring

Control forms: input and output. Define a fundamental unit based on geographic criteria:

- Census: enumeration area = a box of forms = an electronic batch
- Survey: a region = a time cycle = an electronic batch

With that unit in place:

- All forms are traced and verified against a master control form.
- The flow of each form is followed through the phases of the data process.
- The process has an audit trail all the way to the individual data entry station.

Ex-Form Flow

A division of labor into logical, efficient and affordable processes designed to optimize the efficiency of the production line.

P = f(K, L): the classic production function, with capital (K) and labor (L) as inputs.

Labor-intensive solutions:

- Space
- Trained personnel (division of labor)
- Low-tech supplies: boxes, paper, labels

In-Form Flow

An electronic management system for:

- A. Processing the primary survey or census instrument.
- B. Providing a tool for managing the ex-formation.
- C. Providing feedback in the form of reports (the end point of the information flow).

Capital-intensive solutions:

- Network servers
- Data entry stations
- Cabling
- Printers

Form Flow (In or Ex?)

- Transferring the box from the project vehicle to the forms depot.
- Designing a flow chart for a data entry program.
- Assigning a form to a data entry operator.
- Scanning or keying in a form.
- Two EA boxes are not closed well and the forms scatter during transport.
- Backing up data on the central server.
- A supply clerk checking the inventory of paper, folders and labels and filling out a form to re-stock needed items.

Data Entry

Manual Entry vs. Scanning: The Great Debate… The deciding measure is $ per unit of time to process.

Manual Data Entry

Cost considerations:

- A. Training for all clerks
- B. Monitoring systems and supervisory time
- C. Verification of clerks’ work
- D. Keying costs

Design considerations:

- Data file structure: levels; multiple items vs. records
- Data dictionary (critical through all stages!): good and consistent variable labels; logical and efficient variable names; well-defined value sets (several value sets)
- Screen formats: font size and type; soft backgrounds; field and text positioning; field size; physical form emulation
- Path: logical path; items vs. fields; levels
- Skips: to skip or not to skip; census vs. surveys
- On-line edits, error messages and warnings: census vs. survey

CAPI (Computer Assisted Personal Interviewing) and CATI (Computer Assisted Telephone Interviewing):

- Control the process of carrying out the interview by the enumerator.
- Remove the ex-form process by keying responses directly into a portable computer.

Controlling the data entry personnel:

- Establish objective measures (extract information from log files).
- Speed and accuracy (8,000 keystrokes/hour).
- Heads-up vs. heads-down keying.
- Census vs. survey constraints:
  - Censuses: high volumes (time is the primary quality constraint).
  - Surveys: low volume (place is the primary constraint).

Verification procedures:

Verification duplicates the data entry process so that two keyings of the same record can be compared for inconsistencies.

- Dependent vs. independent: dependent verification duplicates the data entry process, compares the data files “on-line” and corrects the files when an error is encountered. Independent verification is a complete re-entry of a form followed by a full comparison of the two data files.
- Verification is dynamic and is adjusted to the learning curve: 100% at the outset, possibly dropping to 2-3% at the end.

Scanning

Refer to: A Comparison of Data Capture Methods, by Sauer.

Machine quality is very important:

- Glass, color-corrected optics instead of plastic optics (light sensors and diffusion)
- Resolution: 300 DPI
- Bit depth: the higher the bit depth, the better the ability to interpret grayscales
- Scanning speed
- Optimal environment: 60-85 °F and 40-60% relative humidity
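The independent verification described above (a complete re-entry of each form followed by a full comparison of the two data files) can be sketched in Python. This is a minimal illustration, not a production verifier. The record layout (`{form_id: {field: value}}`), the function names and the error-rate thresholds in `next_verification_rate` are all hypothetical. They were chosen only to mirror the idea that verification starts at 100% and tapers to about 2-3% as keyers move up the learning curve.

```python
# Sketch of independent verification: compare two separate keyings of the same forms.
# Hypothetical layout for each keying: {form_id: {field_name: keyed_value}}.


def compare_keyings(first, second):
    """List (form_id, field, first_value, second_value) for every disagreement.

    A form keyed only once is reported with the field name "<missing form>".
    """
    mismatches = []
    for form_id in sorted(set(first) | set(second)):
        if form_id not in first or form_id not in second:
            mismatches.append((form_id, "<missing form>", first.get(form_id), second.get(form_id)))
            continue
        rec_a, rec_b = first[form_id], second[form_id]
        for field in sorted(set(rec_a) | set(rec_b)):
            if rec_a.get(field) != rec_b.get(field):
                mismatches.append((form_id, field, rec_a.get(field), rec_b.get(field)))
    return mismatches


def field_error_rate(first, second):
    """Fraction of fields, on forms keyed twice, whose two keyings disagree."""
    compared = errors = 0
    for form_id in set(first) & set(second):
        rec_a, rec_b = first[form_id], second[form_id]
        for field in set(rec_a) | set(rec_b):
            compared += 1
            errors += rec_a.get(field) != rec_b.get(field)
    return errors / compared if compared else 0.0


def next_verification_rate(error_rate):
    """Hypothetical thresholds: verify everything while errors are frequent,
    then taper toward the 2-3% sampling level mentioned in the text."""
    if error_rate > 0.01:
        return 1.00
    if error_rate > 0.005:
        return 0.10
    return 0.03


if __name__ == "__main__":
    keying_1 = {"EA01-001": {"age": "34", "sex": "2"}, "EA01-002": {"age": "7", "sex": "1"}}
    keying_2 = {"EA01-001": {"age": "43", "sex": "2"}, "EA01-002": {"age": "7", "sex": "1"}}
    print(compare_keyings(keying_1, keying_2))
    rate = field_error_rate(keying_1, keying_2)
    print(rate, next_verification_rate(rate))
```

Each mismatch would still be resolved against the paper questionnaire. The comparison only tells the supervisor where to look.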
Rotary scanners move the form; they are best for censuses and surveys.

Other options to consider:

- Automatic document feeder
- Multi-feed detector
- Exit hoppers
- Color bulbs
- Image processing

Character reading: OCR, ICR and OMR

- OMR (Optical Mark Reading): reads a mark from a questionnaire.
- OCR (Optical Character Recognition): converts characters through photosensitive sensors and software enhancements.
- ICR (Intelligent Character Recognition): pattern-based character recognition, also known as hand-print recognition. The difference lies in the software, which remembers patterns.

Note: OCR and ICR usually require “constrained handwriting” or BLOCK capital letters.

Scanning: Advantages and Disadvantages

Advantages:

- Speed of process
- Technological innovation
- Minimizes human error

Disadvantages:

- Loss of process control
- Minimal technological transfer
- Maximizes machine error
- Cost

Example: World Bank CWIQ (Core Welfare Indicators Questionnaire)

Scanning Quality Issues

- Pencil type, paper jams, damaged forms
- Accurate character recognition depends on form quality and image quality
- Field-level accuracy vs. character-level accuracy
- Machine maintenance
- Software deficiencies (voting)
- KFI (key from image) for character correction
- KFP (key from paper) for form and field correction
- Confidence level reports (recognition rates)
- See page 18 of Sauer for quality control issues

Coding

Open-ended questions, such as occupation and activity, require coding. In scanning this can be done from the image; in manual entry it is usually the first step. Coding requires its own process and supervision. In scanning it can be run as a parallel operation; in manual entry it is linear.
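Two scanning quality issues listed above can be illustrated together with a small Python sketch. The first is the difference between character-level and field-level accuracy. The second is the use of confidence levels to decide which fields go to key-from-image (KFI) correction. The data layout, the `RecognizedField` class and the 0.90 threshold are assumptions for illustration only; real recognition software reports confidence in its own format.

```python
# Sketch: route low-confidence fields to key-from-image (KFI) correction and
# compare character-level with field-level accuracy.
# Assumptions (hypothetical): one confidence score (0-1) per recognized
# character; the 0.90 threshold is illustrative only.
from dataclasses import dataclass


@dataclass
class RecognizedField:
    """One field as returned by a (hypothetical) recognition step."""
    form_id: str
    name: str
    text: str
    char_confidences: list  # one score per recognized character


def needs_kfi(rec_field, threshold=0.90):
    """True if any character's confidence is below the threshold."""
    return any(score < threshold for score in rec_field.char_confidences)


def accuracy(recognized, expected):
    """Return (character_accuracy, field_accuracy) for parallel lists of strings.

    `expected` holds the verified values (e.g. keyed from paper). Characters are
    compared position by position; a field is correct only if it matches exactly.
    """
    chars_total = chars_ok = fields_ok = 0
    for got, want in zip(recognized, expected):
        chars_total += len(want)
        chars_ok += sum(a == b for a, b in zip(got, want))
        fields_ok += got == want
    char_acc = chars_ok / chars_total if chars_total else 1.0
    field_acc = fields_ok / len(expected) if expected else 1.0
    return char_acc, field_acc


if __name__ == "__main__":
    fields = [
        RecognizedField("EA01-001", "birth_year", "1973", [0.98, 0.97, 0.95, 0.62]),
        RecognizedField("EA01-001", "name", "MARIA", [0.99, 0.98, 0.97, 0.99, 0.96]),
    ]
    print("Send to KFI:", [f.name for f in fields if needs_kfi(f)])
    print(accuracy(["1973", "MARIA", "042"], ["1975", "MARIA", "042"]))
```

A single misread digit in a four-digit year leaves character accuracy high while the whole field is wrong. That is why the two measures are worth reporting separately.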
Now that you have:

- a well-designed dictionary with the simplest and most efficient structure, well-defined variable labels, easy-to-use variable names and well-defined value sets;
- clearly designed, simple and user-friendly screens that emulate the forms, flow logically with programmed skips and, if you use them, show clear interactive messages;
- defined productivity targets for your census or survey, and a system to measure productivity objectively and reward accordingly;
- verified the work; and finally
- rigorously tested it against the PROCESS rule of quality…

you still need to check for errors.

Edit Flow

[Figure: edit flow diagram. Stages: Consolidate; I. Verified files; II. Structure; II. Pre-consolidate; III. Consistency; IV. Pre-tabulation. Roles: data processor, statistician, analyst.]

Structure Edit Controls:

- Performed after verification
- Control totals used to check completeness: file totals compared with manual counts
- Corrections done with questionnaires
- Limit checks to rendering questionnaires clear enough for computer processing (processability) only
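The completeness check in the structure edit (file totals compared with manual counts) might look like the following Python sketch. It rests on three hypothetical assumptions. Every keyed record carries an enumeration-area code (`ea`). Every keyed record also carries a form identifier (`form_id`). The manual counts from the control forms are available as a dictionary keyed by EA code.

```python
# Sketch: structure-edit completeness check, comparing file totals per EA with
# the manual counts recorded on the control forms.
# Assumptions (hypothetical): records are dicts with "ea" and "form_id" keys;
# manual counts are {ea_code: number_of_questionnaires}.
from collections import Counter


def completeness_report(records, manual_counts):
    """Return {ea_code: (file_count, manual_count)} for each EA whose totals differ."""
    file_counts = Counter(rec["ea"] for rec in records)
    mismatches = {}
    for ea in sorted(set(file_counts) | set(manual_counts)):
        keyed, counted = file_counts[ea], manual_counts.get(ea, 0)
        if keyed != counted:
            mismatches[ea] = (keyed, counted)
    return mismatches


def duplicate_forms(records):
    """Form IDs keyed more than once; a duplicate can hide a missing form in the totals."""
    counts = Counter(rec["form_id"] for rec in records)
    return sorted(form_id for form_id, n in counts.items() if n > 1)


if __name__ == "__main__":
    records = [
        {"ea": "001", "form_id": "001-01"},
        {"ea": "001", "form_id": "001-01"},  # keyed twice by mistake
        {"ea": "002", "form_id": "002-01"},
    ]
    manual = {"001": 2, "002": 1, "003": 4}  # counts from the control forms
    print(completeness_report(records, manual))
    print(duplicate_forms(records))
```

The duplicate check is included because a form keyed twice can offset a missing form and leave the EA total looking correct.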
Pre-edit Consolidation Controls:

- Follow pre-established geographical priorities
- Check control totals
- Use the operational control database
- Avoid geographic coding (geocode) conflicts in joining files

Consistency Edit Controls:

- Develop consistency specifications
- Prioritize variables
- Monitor corrections
- Use control tabulations
- Re-run output file

Pre-tabulation Consolidation Controls:

- Consolidate to facilitate tabulation
- Check control totals for each record type
- Use standardized forms for operational control
- Avoid geocode conflicts in joining files

Tabulation Controls:

- Computer program specifications
- User approval of tables
- Tables grouped by characteristics
- Tables checked against control totals
- Control tables show weighted and unweighted numbers
- Geographic subtotals match
- Standardized control forms for production control
- Final table review
- Data dissemination to user community

Data Archiving

Data and metadata: metadata are often called “codebooks”. Metadata is the data that defines the data.

DDI (Data Documentation Initiative), http://www.icpsr.umich.edu/DDI/ORG/index.html: the definition of an international standard for metadata in the social sciences.

The DDI defines a hierarchy of information related to a census or survey, expressed in XML (Extensible Markup Language). XML marks up documents with tags much as HTML does, but lets a standard such as the DDI define its own tags. Example of codebook structure: http://www.icpsr.umich.edu/DDI/CODEBOOK/codedtd.html

NESSTAR is an example of a web-based, distributed, DDI-compliant system.

All processes need to be documented. This includes:

- Codebooks
- Data entry manuals
- Program documentation
- Edit programs
- Imputation rules
- Tracking/process manuals

Final Concepts

- Lean Manufacturing: a quality control system for monitoring process flow.
- Six Sigma: a statistical system developed by Motorola to identify process problems, set error tolerances and establish methods to correct them.
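The Six Sigma figure of 3.4 error events per million can be reproduced from the normal distribution. The Python sketch below assumes the standard Six Sigma convention of a 1.5-sigma long-term shift in the process mean. Under that convention, 3.4 defects per million opportunities (DPMO) is the one-sided tail beyond 4.5 standard deviations, which is reported as a six-sigma level. What counts as an “opportunity” (for example, one keyed field) is an assumption each project would have to define. The example figures in the code are hypothetical.

```python
# Sketch: convert observed error counts into defects per million opportunities
# (DPMO) and a conventional sigma level.
# Assumes the usual 1.5-sigma shift convention, under which 3.4 DPMO (the
# one-sided tail beyond 4.5 sigma) is reported as "six sigma".
from statistics import NormalDist


def dpmo(errors, units, opportunities_per_unit):
    """Defects per million opportunities."""
    return errors / (units * opportunities_per_unit) * 1_000_000


def sigma_level(dpmo_value, shift=1.5):
    """Sigma level implied by a DPMO value, including the conventional shift.

    Requires 0 < dpmo_value < 1_000_000, since NormalDist.inv_cdf rejects p of 0 or 1.
    """
    return NormalDist().inv_cdf(1 - dpmo_value / 1_000_000) + shift


if __name__ == "__main__":
    # Hypothetical example: 12 keying errors found in 500 forms of 40 fields each.
    rate = dpmo(12, 500, 40)
    print(f"DPMO: {rate:.0f}, sigma level: {sigma_level(rate):.2f}")
    print(f"3.4 DPMO -> sigma level {sigma_level(3.4):.2f}")
```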
Lean Manufacturing:

- Workplace organization
- Standardizing work/work stations
- Division of labor to increase process flow
- JIT: Just-in-Time delivery
- Pull systems

Six Sigma:

- Means a tolerance of six standard deviations for error: 3.4 error events per million opportunities
- A measurement (quantifiable) system for process improvement

Conceptual Summary

P-R-O (TQM)

- Personal Commitment to Excellence
- Reject Cynicism or Satisfaction
- Own the process

C-E-S-S (Six Sigma, Lean Manufacturing)

- Careful Design (Flow Charts)
- Evaluate Continually (Six Sigma)
- Shift Perspectives (Lean Manufacturing)
- Shelf Life (Data Archive)

From “John Henry”

The man that invented the steam drill
Thought he was mighty fine
But John Henry made fifteen feet
The steam drill only nine, Lord, Lord
The steam drill made only nine.

John Henry hammered in the mountain
His hammer was striking fire
But he worked so hard, he broke his poor heart
He laid down his hammer and he died, Lord, Lord.
He laid down his hammer and he died