Optimization Methods in Data Mining

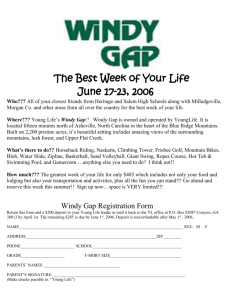



Fall 2004


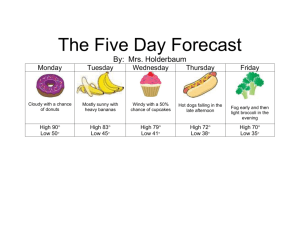

Overview

Optimization branches into three families of methods:

Mathematical Programming: Support Vector Machines (classification, clustering, etc.)

Steepest Descent Search: Neural Nets, Bayesian Networks (optimize parameters)

Combinatorial Optimization: Genetic Algorithm (feature selection, classification, clustering)

What is Optimization?

Formulation: decision variables, objective function, constraints

Solution: iterative algorithm, improving search

Problem → (formulation) → Model → (algorithm) → Solution

Combinatorial Optimization

Finitely many solutions to choose from

Select the best rule from a finite set of rules

Select the best subset of attributes

Too many solutions to consider all

Solutions: branch-and-bound (better than Weka exhaustive search), random search

Random Search

Select an initial solution x(0) and let k = 0

Loop:

Consider the neighbors N(x(k)) of x(k)

Select a candidate x' from N(x(k))

Check the acceptance criterion

If accepted then let x(k+1) = x', otherwise let x(k+1) = x(k)

Let k = k + 1

Until stopping criterion is satisfied
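The random search loop can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the bit-flip neighborhood, the "never worse" acceptance rule, and the iteration budget used as stopping criterion are all assumptions chosen for the example.

```python
import random

def random_search(x0, neighbors, accept, max_iters=200, rng=None):
    """Improving random search; x0 plays the role of x(0)."""
    rng = rng or random.Random()
    x = x0
    for k in range(max_iters):                # stopping criterion: iteration budget
        candidate = rng.choice(neighbors(x))  # select x' from N(x(k))
        if accept(candidate, x, k):           # acceptance criterion
            x = candidate                     # x(k+1) = x'
        # otherwise x(k+1) = x(k)
    return x

def flip_one_bit(bits):
    """Hypothetical neighborhood: all strings that differ in exactly one bit."""
    return [bits[:i] + (1 - bits[i],) + bits[i + 1:] for i in range(len(bits))]

def never_worse(candidate, current, k):
    """Hypothetical acceptance rule for maximizing the number of ones."""
    return sum(candidate) >= sum(current)

result = random_search((0,) * 6, flip_one_bit, never_worse, rng=random.Random(0))
```

Swapping `never_worse` for a rule that sometimes accepts worse candidates, with a probability that shrinks as `k` grows, would turn the same loop into simulated annealing.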

Common Algorithms

Simulated Annealing (SA)

Idea: accept inferior solutions with a given probability that decreases as time goes on

Tabu Search (TS)

Idea: restrict the neighborhood with a list of solutions that are tabu (that is, cannot be visited) because they were visited recently

Genetic Algorithm (GA)

Idea: neighborhoods based on 'genetic similarity'

Most used in data mining applications

Genetic Algorithms

Maintain a population of solutions rather than a single solution

Members of the population have certain fitness (usually just the objective)

Survival of the fittest through selection, crossover, and mutation

GA Formulation

Use binary strings (or bits) to encode solutions: 011010010

Terminology

Chromosome = solution

Parent chromosome

Children or offspring

Problems Solved

Data mining problems that have been addressed using Genetic Algorithms: classification, attribute selection, clustering

Classification Example

| Attribute | Value | Encoding |
|---|---|---|
| Outlook | Sunny | 100 |
| Outlook | Overcast | 010 |
| Outlook | Rainy | 001 |
| Windy | Yes | 10 |
| Windy | No | 01 |

Representing a Rule

If windy=yes then play=yes

| outlook | windy | play |
|---|---|---|
| 111 | 10 | 10 |

If outlook=overcast and windy=yes then play=no

| outlook | windy | play |
|---|---|---|
| 010 | 10 | 01 |

Single-Point Crossover

| | outlook | windy | play |
|---|---|---|---|
| Parent 1 | 111 | 10 | 10 |
| Parent 2 | 010 | 01 | 01 |
| Offspring 1 | 111 | 01 | 01 |
| Offspring 2 | 010 | 10 | 10 |

Crossover point: between the outlook bits and the windy bits

Two-Point Crossover

| | outlook | windy | play |
|---|---|---|---|
| Parent 1 | 111 | 10 | 10 |
| Parent 2 | 010 | 01 | 01 |
| Offspring 1 | 111 | 01 | 10 |
| Offspring 2 | 010 | 10 | 01 |

Crossover points: before and after the windy bits

Uniform Crossover

| | outlook | windy | play |
|---|---|---|---|
| Parent 1 | 111 | 10 | 10 |
| Parent 2 | 010 | 01 | 01 |
| Offspring 1 | 110 | 01 | 00 |
| Offspring 2 | 011 | 10 | 11 |

Problem? (Look at the play bits of the offspring: 00 and 11.)

Mutation

| | outlook | windy | play |
|---|---|---|---|
| Parent | 010 | 01 | 01 |
| Offspring | 010 | 11 | 01 |

Mutated bit: the first windy bit
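The crossover and mutation operators can be sketched on the rule strings used in these slides (for instance 111 10 10 and 010 01 01, written without spaces). The function names, the string representation, and the explicit `mask` argument for uniform crossover are assumptions made for this illustration; in a real GA the crossover points, mask, and mutated position would be drawn at random.

```python
def single_point_crossover(a, b, point):
    """Swap the tails of two bit strings after position `point`."""
    return a[:point] + b[point:], b[:point] + a[point:]

def two_point_crossover(a, b, first, second):
    """Swap the middle segment a[first:second] with b[first:second]."""
    return (a[:first] + b[first:second] + a[second:],
            b[:first] + a[first:second] + b[second:])

def uniform_crossover(a, b, mask):
    """Where mask is '1' child 1 copies parent a, otherwise parent b; child 2 is the mirror image."""
    child1 = "".join(x if m == "1" else y for x, y, m in zip(a, b, mask))
    child2 = "".join(y if m == "1" else x for x, y, m in zip(a, b, mask))
    return child1, child2

def mutate(bits, index):
    """Invert the bit at position `index`."""
    flipped = "1" if bits[index] == "0" else "0"
    return bits[:index] + flipped + bits[index + 1:]

parent1 = "1111010"  # outlook 111, windy 10, play 10
parent2 = "0100101"  # outlook 010, windy 01, play 01
offspring = single_point_crossover(parent1, parent2, 3)
```

Nothing in these operators checks that an offspring still encodes a meaningful rule, so a repair or rejection step may be needed after uniform crossover or mutation.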

Selection

Which strings in the population should be operated on?

Rank and select the n fittest ones

Assign probabilities according to fitness and select probabilistically, say

$$P[\text{select } x_i] = \frac{\text{Fitness}(x_i)}{\sum_j \text{Fitness}(x_j)}$$
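Fitness-proportional selection is often implemented as a "roulette wheel". The sketch below is one simple way to do it, assuming non-negative fitness values with a positive sum; the function names are illustrative only.

```python
import random

def selection_probabilities(fitnesses):
    """P[select x_i] = Fitness(x_i) / sum_j Fitness(x_j)."""
    total = sum(fitnesses)
    return [f / total for f in fitnesses]

def roulette_select(population, fitnesses, rng=random):
    """Draw one individual with probability proportional to its fitness."""
    threshold = rng.random() * sum(fitnesses)
    running = 0.0
    for individual, fitness in zip(population, fitnesses):
        running += fitness
        if threshold < running:
            return individual
    return population[-1]  # guard against floating-point round-off

probabilities = selection_probabilities([1.0, 3.0])
picked = roulette_select(["a", "b"], [0.0, 2.0], random.Random(0))
```

The standard library call `random.choices(population, weights=fitnesses)` performs the same kind of weighted draw.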

Creating a New Population

Create a population Pnew with p individuals

Survival

Allow individuals from the old population to survive intact

Rate: (1-r)% of the population

How to select the individuals that survive: deterministic/random

Crossover

Select fit individuals and create new ones

Rate: r% of the population. How to select?

Mutation

Slightly modify any of the above individuals

Mutation rate: m

Fixed number of mutations versus probabilistic mutations

GA Algorithm

Randomly generate an initial population P

Evaluate the fitness f(xi) of each individual in P

Repeat:

Survival: Probabilistically select (1-r)p individuals from P and add to Pnew, according to

$$P[\text{select } x_i] = \frac{f(x_i)}{\sum_j f(x_j)}$$

Crossover: Probabilistically select rp/2 pairs from P and apply the crossover operator. Add the offspring to Pnew

Mutation: Uniformly choose m percent of the members of Pnew and invert one randomly selected bit in each

Update: P ← Pnew

Evaluate: Compute the fitness f(xi) of each individual in P

Once the stopping criterion is satisfied, return the fittest individual from P
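The GA loop above can be sketched as follows. This is a minimal illustration under stated assumptions: individuals are lists of bits, crossover is single-point, the stopping criterion is a fixed number of generations, and the parameter names (`p`, `r`, `m`, `generations`) and the toy fitness (count of ones) are chosen for the example rather than taken from the slides.

```python
import random

def genetic_algorithm(fitness, n_bits, p=20, r=0.6, m=0.1, generations=50, rng=None):
    rng = rng or random.Random()
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(p)]
    for _ in range(generations):
        # Tiny offset keeps the weights valid even if every fitness is zero.
        weights = [fitness(x) + 1e-9 for x in population]
        n_pairs = round(r * p / 2)
        # Survival: fitness-proportional choice of (1-r)p individuals, copied intact.
        survivors = rng.choices(population, weights=weights, k=p - 2 * n_pairs)
        new_population = [x[:] for x in survivors]
        # Crossover: rp/2 pairs, each producing two offspring.
        for _ in range(n_pairs):
            a, b = rng.choices(population, weights=weights, k=2)
            point = rng.randrange(1, n_bits)
            new_population.append(a[:point] + b[point:])
            new_population.append(b[:point] + a[point:])
        # Mutation: invert one random bit in a fraction m of the new population.
        for x in rng.sample(new_population, round(m * p)):
            i = rng.randrange(n_bits)
            x[i] = 1 - x[i]
        population = new_population  # Update: P <- Pnew
    return max(population, key=fitness)

best = genetic_algorithm(sum, n_bits=10, rng=random.Random(1))
```

Because survivors are drawn probabilistically, the fittest individual of one generation is not guaranteed to reach the next; keeping it explicitly (elitism) is a common variation.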

Analysis of GA: Schemas

Does GA converge?

Does GA move towards a good solution?

Local optima?

Holland (1975): Analysis based on schemas

Schema: string combination of 0s, 1s, *s

Example: 0*10 represents {0010,0110}


The Schema Theorem

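(all the theory on one slide)

$$E[m(s,t+1)] \ge \frac{\hat{u}(s,t)}{\bar{f}(t)}\, m(s,t) \left(1 - p_c \frac{d(s)}{l-1}\right) (1 - p_m)^{o(s)}$$

$m(s,t)$: number of instances of schema s at time t

$\hat{u}(s,t)$: average fitness of individuals in schema s at time t

$\bar{f}(t)$: average fitness of the population at time t

$p_c$: probability of crossover

$p_m$: probability of mutation

$d(s)$: distance between defined bits in s

$o(s)$: number of defined bits in schema s

$l$: length of the bit strings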

Interpretation

Fit schemas grow in influence

What is missing

Crossover?

Mutation?

How about time t+1 ?

Other approaches: Markov chains, statistical mechanics

GA for Feature Selection

Feature selection:

Select a subset of attributes (features)

Reason: too many, redundant, or irrelevant attributes

Set of all subsets of attributes is very large

Little structure to search

Random search methods

Encoding

Need a bit code representation

Have some n attributes

Each attribute is either in (1) or out (0) of the selected set

| outlook | temperature | humidity | windy |
|---|---|---|---|
| 1 | 0 | 1 | 0 |

Fitness

Wrapper approach

Apply learning algorithm, say a decision tree, to the individual x = {outlook, humidity}

Let fitness equal error rate (minimize)

Filter approach

Let fitness equal the entropy (minimize)

Other diversity measures can also be used

Simplicity measure?

Crossover

Figure: single-point crossover applied to two attribute-subset bit strings over (outlook, temperature, humidity, windy), showing both parents, both offspring, and the crossover point.

In Weka


Clustering Example

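Create two clusters for:

| ID | Outlook | Temperature | Humidity | Windy | Play |
|---|---|---|---|---|---|
| 10 | Sunny | Hot | High | True | No |
| 20 | Overcast | Hot | High | False | Yes |
| 30 | Rainy | Mild | High | False | Yes |
| 40 | Rainy | Cool | Normal | False | Yes |

Each bit states whether the corresponding instance (10, 20, 30, 40) belongs to the first cluster.

| | Bit string | Clusters |
|---|---|---|
| Parent 1 | 1 1 0 0 | {10,20} {30,40} |
| Parent 2 | 0 1 0 1 | {20,40} {10,30} |
| Offspring 1 | 1 1 0 1 | {10,20,40} {30} |
| Offspring 2 | 0 1 0 0 | {20} {10,30,40} |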

Discussion

GA is a flexible and powerful random search methodology

Efficiency depends on how well you can encode the solutions in a way that will work with the crossover operator

In data mining, attribute selection is the most natural application

Attribute Selection in Unsupervised Learning

Attribute selection typically uses a measure, such as accuracy, that is directly related to the class attribute

How do we apply attribute selection to unsupervised learning such as clustering?

Need a measure: compactness of cluster, separation among clusters

Multiple measures

Quality Measures

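Compactness

$$F_{within} = 1 - \frac{1}{Z_{within}} \frac{1}{d} \sum_{k=1}^{K} \sum_{i=1}^{n} \alpha_{ik} \sum_{j \in J} (x_{ij} - \gamma_{kj})^2$$

Membership indicator: $\alpha_{ik} = 1$ if $x_i$ belongs to cluster k, and 0 otherwise

Centroid:

$$\gamma_{kj} = \frac{\sum_{i=1}^{n} \alpha_{ik} x_{ij}}{\sum_{i=1}^{n} \alpha_{ik}}$$

The outer sums run over the K clusters and the n instances, J is the set of selected attributes, and d is the number of attributes

$Z_{within}$ is a normalization constant to make $F_{within} \in [0,1]$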

More Quality Measures

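Cluster Separation

$$F_{between} = \frac{1}{Z_{bet}} \frac{1}{d} \frac{1}{K-1} \sum_{k=1}^{K} \sum_{i=1}^{n} (1 - \alpha_{ik}) \sum_{j \in J} (x_{ij} - \gamma_{kj})^2$$

Here $\alpha_{ik}$ is the cluster-membership indicator and $\gamma_{kj}$ is the centroid of cluster k on attribute j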

Final Quality Measures

Adjustment for bias

$$F_{clusters} = 1 - \frac{K - K_{min}}{K_{max} - K_{min}}$$

Complexity

$$F_{complexity} = 1 - \frac{d - 1}{D - 1}$$

Wrapper Framework

Loop:

Obtain an attribute subset

Apply k-means algorithm

Evaluate cluster quality

Until stopping criterion satisfied


Problem

What is the optimal attribute subset?

What is the optimal number of clusters?

Try to find simultaneously


Example

Find an attribute subset and optimal number of clusters (Kmin = 2, Kmax = 3) for

| ID | Sepal Length | Sepal Width | Petal Length | Petal Width |
|---|---|---|---|---|
| 10 | 5.0 | 3.5 | 1.6 | 0.6 |
| 20 | 5.1 | 3.8 | 1.9 | 0.4 |
| 30 | 4.8 | 3.0 | 1.4 | 0.3 |
| 40 | 5.1 | 3.8 | 1.6 | 0.2 |
| 50 | 4.6 | 3.2 | 1.4 | 0.2 |
| 60 | 6.5 | 2.8 | 4.6 | 1.5 |
| 70 | 5.7 | 2.8 | 4.5 | 1.3 |
| 80 | 6.3 | 3.3 | 4.7 | 1.6 |
| 90 | 4.9 | 2.4 | 3.3 | 1.0 |
| 100 | 6.6 | 2.9 | 4.6 | 1.3 |

Formulation

Define an individual: four bits for the selected attributes followed by one bit for the number of clusters

* * * * | *

Initial Population

0 1 0 1 1

1 0 0 1 0

Evaluate Fitness

Start with 0 1 0 1 1

Three clusters and {Sepal Width, Petal Width}

| ID | Sepal Width | Petal Width |
|---|---|---|
| 10 | 3.5 | 0.6 |
| 20 | 3.8 | 0.4 |
| 30 | 3.0 | 0.3 |
| 40 | 3.8 | 0.2 |
| 50 | 3.2 | 0.2 |
| 60 | 2.8 | 1.5 |
| 70 | 2.8 | 1.3 |
| 80 | 3.3 | 1.6 |
| 90 | 2.4 | 1.0 |
| 100 | 2.9 | 1.3 |

Apply k-means with k=3

K-Means

Start with random centroids: 10, 70, 80

Figure: scatter plot of Petal Width against Sepal Width for the ten instances, labeled by ID.

New Centroids

Figure: the same scatter plot of Petal Width against Sepal Width, with the recalculated centroids marked.

No change in assignment so terminate k-means algorithm
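The k-means runs in this example can be reproduced with a short script. This is a plain sketch of the standard algorithm (assign each instance to the nearest centroid, recompute centroids, stop when assignments no longer change), using squared Euclidean distance; the function and variable names are assumptions for this illustration, and the data are the ten instances from the example table.

```python
# Columns: sepal length, sepal width, petal length, petal width
iris = {
    10: (5.0, 3.5, 1.6, 0.6), 20: (5.1, 3.8, 1.9, 0.4), 30: (4.8, 3.0, 1.4, 0.3),
    40: (5.1, 3.8, 1.6, 0.2), 50: (4.6, 3.2, 1.4, 0.2), 60: (6.5, 2.8, 4.6, 1.5),
    70: (5.7, 2.8, 4.5, 1.3), 80: (6.3, 3.3, 4.7, 1.6), 90: (4.9, 2.4, 3.3, 1.0),
    100: (6.6, 2.9, 4.6, 1.3),
}

def project(data, columns):
    """Keep only the selected attribute columns."""
    return {pid: tuple(row[c] for c in columns) for pid, row in data.items()}

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, initial_ids, max_iters=100):
    centroids = [points[pid] for pid in initial_ids]
    assignment = None
    for _ in range(max_iters):
        new_assignment = {
            pid: min(range(len(centroids)),
                     key=lambda k: squared_distance(p, centroids[k]))
            for pid, p in points.items()
        }
        if new_assignment == assignment:  # no change in assignment: terminate
            break
        assignment = new_assignment
        for k in range(len(centroids)):
            members = [points[pid] for pid, c in assignment.items() if c == k]
            if members:
                centroids[k] = tuple(sum(col) / len(members) for col in zip(*members))
    clusters = [sorted(pid for pid, c in assignment.items() if c == k)
                for k in range(len(centroids))]
    return centroids, clusters

# Individual 0 1 0 1 1: {Sepal Width, Petal Width}, k = 3, start at 10, 70, 80
centroids3, clusters3 = kmeans(project(iris, (1, 3)), [10, 70, 80])
# Individual 1 0 0 1 0: {Sepal Length, Petal Width}, k = 2, start at 20, 90
centroids2, clusters2 = kmeans(project(iris, (0, 3)), [20, 90])
```

Printing `centroids3`, `clusters3`, `centroids2`, and `clusters2` lets the cluster memberships and centers be compared with the values quoted on the "Quality of Clusters" slides.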

Quality of Clusters

Centers

Center 1 at (2.73,1.28): {60,70,90,100}

Center 2 at (3.30,1.60): {80}

Center 3 at (3.46,0.34): {10,20,30,40,50}

Evaluation

| Measure | Value |
|---|---|
| Fwithin | 0.55 |
| Fbetween | 6.60 |
| Fclusters | 0.00 |
| Fcomplexity | 0.67 |

Next Individual

Now look at 1 0 0 1 0

Two clusters and {Sepal Length, Petal Width}

| ID | Sepal Length | Petal Width |
|---|---|---|
| 10 | 5.0 | 0.6 |
| 20 | 5.1 | 0.4 |
| 30 | 4.8 | 0.3 |
| 40 | 5.1 | 0.2 |
| 50 | 4.6 | 0.2 |
| 60 | 6.5 | 1.5 |
| 70 | 5.7 | 1.3 |
| 80 | 6.3 | 1.6 |
| 90 | 4.9 | 1.0 |
| 100 | 6.6 | 1.3 |

Apply k-means with k=2

K-Means

Say we select 20 and 90 as initial centroids:

Figure: scatter plot of Petal Width against Sepal Length for the ten instances, labeled by ID.

Recalculate Centroids

Figure: scatter plot of Petal Width against Sepal Length, with the recalculated centroids C1 and C2 marked.

Recalculate Again

Figure: scatter plot of Petal Width against Sepal Length, with the centroids C1 and C2 after the second recalculation.

No change in assignment so terminate k-means algorithm

Quality of Clusters

Centers

Center 1 at (4.92,0.45): {10,20,30,40,50,90}

Center 2 at (6.28,1.43): {60,70,80,100}

Evaluation

| Measure | Value |
|---|---|
| Fwithin | 0.39 |
| Fbetween | 14.59 |
| Fclusters | 1.00 |
| Fcomplexity | 0.67 |

Compare Individuals

| Individual | Fwithin | Fbetween | Fclusters | Fcomplexity |
|---|---|---|---|---|
| 0 1 0 1 1 | 0.55 | 6.60 | 0.00 | 0.67 |
| 1 0 0 1 0 | 0.39 | 14.59 | 1.00 | 0.67 |

Which is fitter?

Evaluating Fitness

Can scale (if necessary)

Then weight them together, e.g.,

$$\text{fitness}(0\,1\,0\,1\,1) = \frac{1}{4}\left(\frac{0.55}{0.55} + \frac{6.60}{14.59} + 0 + 0.67\right) = 0.53$$

$$\text{fitness}(1\,0\,0\,1\,0) = \frac{1}{4}\left(\frac{0.39}{0.55} + \frac{14.59}{14.59} + 1 + 0.67\right) = 0.84$$

Alternatively, we can use Pareto optimization
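The combined fitness values 0.53 and 0.84 can be checked with a few lines of Python. The sketch assumes that Fwithin and Fbetween are scaled by the largest value observed across the two individuals (0.55 and 14.59) and that the four measures are weighted equally; that reading reproduces the numbers on the slide, but the function name and the weighting scheme are assumptions of this example.

```python
def combined_fitness(measures, scales):
    """Scale each measure (default scale 1.0) and average them with equal weights."""
    scaled = [value / scales.get(name, 1.0) for name, value in measures.items()]
    return sum(scaled) / len(scaled)

# Largest observed value of each unnormalized measure
scales = {"within": 0.55, "between": 14.59}

individual_01011 = {"within": 0.55, "between": 6.60, "clusters": 0.00, "complexity": 0.67}
individual_10010 = {"within": 0.39, "between": 14.59, "clusters": 1.00, "complexity": 0.67}

fitness_01011 = combined_fitness(individual_01011, scales)
fitness_10010 = combined_fitness(individual_10010, scales)
```

Unequal weights would express a preference for, say, compact clusters over simple attribute subsets; Pareto optimization avoids choosing weights altogether.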

Mathematical Programming

Continuous decision variables

Constrained versus non-constrained

Form of the objective function: Linear Programming (LP), Quadratic Programming (QP), General Mathematical Programming (MP)

Linear Program

max

s.t

2 0.5 x

0 x 15

f ( x) 2 0.5 x

x 15

0 x

Optimal solution

x

10


Two Dimensional Problem

$$\max \; 12 x_1 + 9 x_2$$

$$\text{s.t.} \quad x_1 \le 1000, \quad x_2 \le 1500, \quad x_1 + x_2 \le 1750, \quad 4 x_1 + 2 x_2 \le 4800, \quad x_1, x_2 \ge 0$$

Figure: the feasible region in the (x1, x2) plane bounded by the constraint lines, with the objective contours 12x1 + 9x2 = 6000 and 12x1 + 9x2 = 12000 and the optimal solution marked.

Optimum is always at an extreme point
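Because the optimum of a linear program lies at an extreme point, a problem this small can be solved by brute force: intersect every pair of constraint lines, keep the feasible intersections, and evaluate the objective at each. The sketch below does that for the two-dimensional problem; it is an illustration of the extreme-point property, not the simplex method, and the helper names are assumptions.

```python
from itertools import combinations

# Each constraint is (a1, a2, b), meaning a1*x1 + a2*x2 <= b
constraints = [
    (1, 0, 1000),   # x1 <= 1000
    (0, 1, 1500),   # x2 <= 1500
    (1, 1, 1750),   # x1 + x2 <= 1750
    (4, 2, 4800),   # 4*x1 + 2*x2 <= 4800
    (-1, 0, 0),     # x1 >= 0
    (0, -1, 0),     # x2 >= 0
]

def intersection(c1, c2):
    """Solve the 2x2 system where both constraints hold with equality (Cramer's rule)."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None  # parallel lines
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)

def feasible(point, tol=1e-9):
    return all(a1 * point[0] + a2 * point[1] <= b + tol for a1, a2, b in constraints)

def objective(point):
    return 12 * point[0] + 9 * point[1]

extreme_points = []
for c1, c2 in combinations(constraints, 2):
    point = intersection(c1, c2)
    if point is not None and feasible(point):
        extreme_points.append(point)

best = max(extreme_points, key=objective)
```

Enumeration grows combinatorially with the number of constraints and variables, which is why the simplex method moves from one extreme point to a better adjacent one instead of listing them all.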

Simplex Method

Figure: the feasible region of the two-dimensional problem in the (x1, x2) plane, bounded by the constraint lines and the axes, with its extreme points.

Quadratic Programming

$$f(x) = 0.2 + (x - 1)^2$$

Figure: plot of f(x) for x between 0 and 2.

$$f'(x) = 2(x - 1)$$

$$2(x - 1) = 0 \;\Rightarrow\; x = 1$$

General MP

Figure: a nonlinear function with several points where $f'(x) = 0$.

Derivative being zero is a necessary but not sufficient condition

Constrained Problem?

f ' ( x) 0

x 10


General MP

We write a general mathematical program in matrix notation as:

$$\min \; f(x) \quad \text{s.t.} \quad h(x) = 0, \quad g(x) \le 0$$

$x$: vector of decision variables

$h(x) = (h_1(x), h_2(x), \ldots, h_m(x))$

$g(x) = (g_1(x), g_2(x), \ldots, g_m(x))$

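Karush-Kuhn-Tucker (KKT) Conditions

If $x^*$ is a relative minimum for

$$\min \; f(x) \quad \text{s.t.} \quad h(x) = 0, \quad g(x) \le 0$$

then there exist $\lambda$ and $\mu \ge 0$ such that

$$\nabla f(x^*) + \lambda^T \nabla h(x^*) + \mu^T \nabla g(x^*) = 0$$

$$\mu^T g(x^*) = 0$$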

Convex Sets

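A set C is convex if any line segment connecting two points in the set lies completely within the set, that is,

$$\forall x_1, x_2 \in C, \; \forall \alpha \in (0,1): \quad \alpha x_1 + (1 - \alpha) x_2 \in C$$

Figure: an example of a convex set and an example of a set that is not convex.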

Convex Hull

The convex hull co(S) of a set S is the intersection of all convex sets containing S

A set $V \subseteq R^n$ is a linear variety if

$$\forall x_1, x_2 \in V, \; \forall \alpha \in R: \quad \alpha x_1 + (1 - \alpha) x_2 \in V$$

Hyperplane

A hyperplane in $R^n$ is an (n-1)-dimensional linear variety

Figures: a hyperplane in $R^2$ (a line) and a hyperplane in $R^3$ (a plane).

Convex Hull Example

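Figure: Play and No Play instances plotted by Temperature and Humidity, each class enclosed in its convex hull. The points c and d are the closest points in the two convex hulls, and the separating hyperplane bisects the segment joining them.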

Finding the Closest Points

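Formulate as QP:

$$\min \; \frac{1}{2} \lVert c - d \rVert^2$$

$$\text{s.t.} \quad c = \sum_{i:\,\text{Play}=\text{Yes}} \alpha_i x_i, \qquad d = \sum_{i:\,\text{Play}=\text{No}} \alpha_i x_i$$

$$\sum_{i:\,\text{Play}=\text{Yes}} \alpha_i = 1, \qquad \sum_{i:\,\text{Play}=\text{No}} \alpha_i = 1, \qquad \alpha_i \ge 0$$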

Support Vector Machines

Figure: Play and No Play instances plotted by Temperature and Humidity, with the separating hyperplane and the support vectors marked.

Example

| ID | Sepal Width | Petal Width |
|---|---|---|
| 10 | 3.5 | 0.6 |
| 20 | 3.8 | 0.4 |
| 30 | 3.0 | 0.3 |
| 40 | 3.8 | 0.2 |
| 50 | 3.2 | 0.2 |
| 60 | 2.8 | 1.5 |
| 70 | 2.8 | 1.3 |
| 80 | 3.3 | 1.6 |
| 90 | 2.4 | 1.0 |
| 100 | 2.9 | 1.3 |

Separating Hyperplane

Figure: plot of the example instances (horizontal axis from 2 to 4, vertical axis from 0 to 1.8) with a separating hyperplane.

Assume Separating Planes

Constraints:

$$x_i \cdot w + b \ge +1, \quad \forall i: y_i = +1$$

$$x_i \cdot w + b \le -1, \quad \forall i: y_i = -1$$

Distance to each plane:

$$\frac{1}{\lVert w \rVert}$$

Optimization Problem

$$\max_{w,b} \; \frac{2}{\lVert w \rVert}$$

subject to

$$x_i \cdot w + b \ge +1, \quad \forall i: y_i = +1$$

$$x_i \cdot w + b \le -1, \quad \forall i: y_i = -1$$

How Do We Solve MPs?

Figure: plot with both axes ranging from -5 to 5.

Improving Search

Direction-step approach

$$x^{k+1} = x^k + \alpha \, \Delta x^k$$

$x^{k+1}$: new solution

$x^k$: current solution

$\alpha$: step size

$\Delta x^k$: search direction

Steepest Descent

Search direction equal to negative gradient

$$\Delta x^k = -\nabla f(x^k)$$

Finding the step size $\alpha$ is a one-dimensional optimization problem of minimizing

$$\varphi(\alpha) = f(x^k + \alpha \, \Delta x^k)$$
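Steepest descent can be sketched in a few lines. One deliberate substitution: instead of solving the one-dimensional minimization exactly, this sketch uses a backtracking line search that halves the step until the objective decreases sufficiently. The function names, tolerances, and the use of the quadratic f(x) = 0.2 + (x - 1)^2 from the Quadratic Programming slide as a test function are choices made for this illustration.

```python
def steepest_descent(f, grad, x, tol=1e-8, max_iters=1000):
    """Minimize f starting from the list x, moving along the negative gradient."""
    for _ in range(max_iters):
        g = grad(x)
        g_norm_sq = sum(gi * gi for gi in g)
        if g_norm_sq ** 0.5 < tol:          # gradient (almost) zero: stop
            break
        direction = [-gi for gi in g]       # search direction = negative gradient
        step, fx = 1.0, f(x)
        # Backtracking line search (assumption: replaces the exact 1-D minimization)
        while f([xi + step * di for xi, di in zip(x, direction)]) > fx - 1e-4 * step * g_norm_sq:
            step *= 0.5
        x = [xi + step * di for xi, di in zip(x, direction)]
    return x

def f(x):
    return 0.2 + (x[0] - 1.0) ** 2

def grad_f(x):
    return [2.0 * (x[0] - 1.0)]

minimizer = steepest_descent(f, grad_f, [3.0])
```

On poorly scaled problems steepest descent tends to zigzag, which is one motivation for Newton and quasi-Newton directions.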

Newton’s Method

Taylor series expansion

$$f(x) \approx f(x_k) + \nabla f(x_k)(x - x_k) + \frac{1}{2}(x - x_k)^T F(x_k)(x - x_k)$$

The right hand side is minimized at

$$x_{k+1} = x_k - F(x_k)^{-1} \nabla f(x_k)$$
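In one dimension the Newton step reduces to dividing the first derivative by the second. The sketch below shows this for two functions: the quadratic f(x) = 0.2 + (x - 1)^2 from the Quadratic Programming slide, where a single step lands on the minimizer, and f(x) = x - ln(x), a hypothetical test function chosen here because it is not quadratic. Function names and tolerances are assumptions.

```python
def newton_minimize(first_derivative, second_derivative, x, tol=1e-10, max_iters=50):
    """One-dimensional Newton iteration: x <- x - f'(x) / f''(x)."""
    for _ in range(max_iters):
        step = first_derivative(x) / second_derivative(x)
        x = x - step
        if abs(step) < tol:
            break
    return x

# f(x) = 0.2 + (x - 1)^2: f'(x) = 2(x - 1), f''(x) = 2
quadratic_min = newton_minimize(lambda x: 2.0 * (x - 1.0), lambda x: 2.0, 5.0)

# Hypothetical example f(x) = x - ln(x): f'(x) = 1 - 1/x, f''(x) = 1/x^2
log_min = newton_minimize(lambda x: 1.0 - 1.0 / x, lambda x: 1.0 / x ** 2, 0.5)
```

In higher dimensions the division becomes a linear solve with the Hessian F(x_k), which is the expensive part that quasi-Newton methods approximate.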

Discussion

Computing the inverse Hessian is difficult

Quasi-Newton

$$x_{k+1} = x_k - \alpha_k S_k \nabla f(x_k)$$

Conjugate gradient methods

Does not account for constraints

Penalty methods

Lagrangian methods, etc.

Non-separable

Add an error term to the constraints:

$$x_i \cdot w + b \ge +1 - \xi_i, \quad \forall i: y_i = +1$$

$$x_i \cdot w + b \le -1 + \xi_i, \quad \forall i: y_i = -1$$

$$\xi_i \ge 0, \quad \forall i$$

Wolfe Dual

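$$\max_{\alpha} \; \sum_i \alpha_i - \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j y_i y_j \, x_i \cdot x_j$$

subject to

$$0 \le \alpha_i \le C, \qquad \sum_i \alpha_i y_i = 0$$

The dot product $x_i \cdot x_j$ is the only place the data appears

The constraints are simple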

Extension to Non-Linear

Kernel functions

$$K(x, y) = \Phi(x) \cdot \Phi(y)$$

K takes the place of the dot product in the Wolfe dual

Mapping

$$\Phi : R^n \to H$$

H is a high-dimensional Hilbert space

Some Possible Kernels

$$K(x, y) = (x \cdot y + 1)^p$$

$$K(x, y) = e^{-\lVert x - y \rVert^2 / 2\sigma^2}$$

$$K(x, y) = \tanh(\kappa \, x \cdot y - \delta)$$
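The three kernels are straightforward to compute directly. The sketch below assumes the usual forms of the polynomial, Gaussian (radial basis function), and sigmoid kernels; the parameter names `p`, `sigma`, `kappa`, and `delta` and their default values are assumptions for this illustration.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def polynomial_kernel(x, y, p=2):
    """K(x, y) = (x . y + 1)^p"""
    return (dot(x, y) + 1) ** p

def gaussian_kernel(x, y, sigma=1.0):
    """K(x, y) = exp(-||x - y||^2 / (2 sigma^2))"""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2 * sigma ** 2))

def sigmoid_kernel(x, y, kappa=1.0, delta=0.0):
    """K(x, y) = tanh(kappa * x . y - delta)"""
    return math.tanh(kappa * dot(x, y) - delta)

value = polynomial_kernel((1, 2), (3, 4), p=2)
```

Each function only needs dot products or distances between the two inputs, so the mapping into the high-dimensional space never has to be computed explicitly.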

In Weka

Weka.classifiers.smo

Support vector machine for nominal data only

Does both linear and non-linear models


Optimization in DM

Optimization branches into three families of methods:

Mathematical Programming: Support Vector Machines (classification, clustering, etc.)

Steepest Descent Search: Neural Nets, Bayesian Networks (optimize parameters)

Combinatorial Optimization: Genetic Algorithm (feature selection, classification, clustering)

Bayesian Classification

Naïve Bayes assumes independence between

attributes

Simple computations

Best classifier if assumption is true

Bayesian Belief Networks

Joint probability distributions

Directed acyclic graphs

Nodes are random variables (attributes)

Arcs represent the dependencies


Example: Bayesian Network

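Lung Cancer depends on Family History and Smoker

Nodes: Family History, Smoker, Lung Cancer, Emphysema, Positive X-Ray, Dyspnea

Conditional probability table for Lung Cancer (LC) given Family History (FH) and Smoker (S):

| | FH,S | FH,~S | ~FH,S | ~FH,~S |
|---|---|---|---|---|
| LC | 0.8 | 0.5 | 0.7 | 0.1 |
| ~LC | 0.2 | 0.5 | 0.3 | 0.9 |

Lung Cancer is conditionally independent of Emphysema, given Family History and Smoker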

Conditional Probabilities

$$\Pr(\text{LungCancer} = \text{"yes"} \mid \text{FamilyHistory} = \text{"yes"}, \text{Smoker} = \text{"yes"}) = 0.8$$

$$\Pr(\text{LungCancer} = \text{"no"} \mid \text{FamilyHistory} = \text{"no"}, \text{Smoker} = \text{"no"}) = 0.9$$

$$\Pr(z_1, \ldots, z_n) = \prod_{i=1}^{n} \Pr(z_i \mid \text{Parents}(Z_i))$$

$Z_i$: random variable

$z_i$: outcome of the random variable

The node representing the class attribute is called the output node
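The product formula can be tried out on the three nodes Family History, Smoker, and Lung Cancer. The conditional probability table below is the one from the example network; the prior probabilities for Family History and Smoker are not given in the slides, so the values 0.5 passed in the usage line are made up purely for illustration, as are the function names.

```python
# Pr(LungCancer = yes | FamilyHistory, Smoker), keyed by (family_history, smoker)
cpt_lung_cancer = {
    (True, True): 0.8,
    (True, False): 0.5,
    (False, True): 0.7,
    (False, False): 0.1,
}

def pr_lung_cancer(lung_cancer, family_history, smoker):
    """Look up Pr(LungCancer = lung_cancer | FamilyHistory, Smoker)."""
    p_yes = cpt_lung_cancer[(family_history, smoker)]
    return p_yes if lung_cancer else 1.0 - p_yes

def joint(lung_cancer, family_history, smoker, pr_fh, pr_s):
    """Pr(fh, s, lc) = Pr(fh) * Pr(s) * Pr(lc | fh, s); the root nodes have no parents."""
    p_fh = pr_fh if family_history else 1.0 - pr_fh
    p_s = pr_s if smoker else 1.0 - pr_s
    return p_fh * p_s * pr_lung_cancer(lung_cancer, family_history, smoker)

# Hypothetical priors: Pr(FamilyHistory = yes) = 0.5, Pr(Smoker = yes) = 0.5
p = joint(True, True, True, pr_fh=0.5, pr_s=0.5)
```

Summing `joint` over all eight combinations of the three variables gives 1, which is a quick sanity check on any table entered by hand.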

How Do we Learn?

Network structure: given/known, or inferred or learned from the data

Variables: observable, or hidden (missing values / incomplete data)

Case 1: Known Structure and

Observable Variables

Straightforward

Similar to Naïve Bayes

Compute the entries of the conditional

probability table (CPT) of each variable


Case 2: Known Structure and

Some Hidden Variables

Still need to learn the CPT entries

Let S be a set of s training instances $X_1, X_2, \ldots, X_s$.

Let $w_{ijk}$ be the CPT entry for variable $Y_i = y_{ij}$ having parents $U_i = u_{ik}$.

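CPT Example

$Y_i$ = LungCancer, $y_{ij}$ = "yes"

$U_i$ = {FamilyHistory, Smoker}, $u_{ik}$ = {"yes", "yes"}

| | FH,S | FH,~S | ~FH,S | ~FH,~S |
|---|---|---|---|---|
| LC | 0.8 | 0.5 | 0.7 | 0.1 |
| ~LC | 0.2 | 0.5 | 0.3 | 0.9 |

Here $w_{ijk}$ is the table entry for LC under FH,S, that is, 0.8

$w = (w_{ijk})_{i,j,k}$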

Objective

Must find the value of $w = (w_{ijk})_{i,j,k}$

The objective is to maximize the likelihood of the data, that is,

$$\Pr\nolimits_w(S) = \prod_{d=1}^{s} \Pr\nolimits_w(X_d)$$

How do we do this?

Non-Linear MP

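Compute gradients of the log-likelihood (the probabilities on the right are obtained from the training data):

$$\frac{\partial \ln \Pr_w(S)}{\partial w_{ijk}} = \sum_{d=1}^{s} \frac{\Pr(Y_i = y_{ij}, U_i = u_{ik} \mid X_d)}{w_{ijk}}$$

Move in the direction of the gradient

$$w_{ijk} \leftarrow w_{ijk} + l \, \frac{\partial \ln \Pr_w(S)}{\partial w_{ijk}}$$

$l$: learning rate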

Case 3: Unknown Network

Structure

Need to find/learn the optimal network

structure for the data

What type of optimization problem is this?

Combinatorial optimization (GA etc.)
