data_mining_text_mining - Donald Bren School of Information

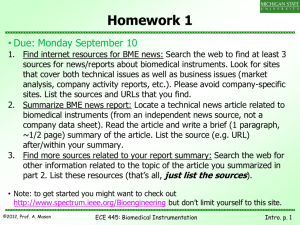

advertisement

Introduction to Biomedical Informatics

Text Mining

© Hayes/Smyth: Introduction to Biomedical Informatics: 1

Outline

• Introduction and Motivation

• Techniques

–

–

–

–

Document classification

Document clustering

Topic discovery from text

Information extraction

• Additional Resources and Recommended Reading

© Hayes/Smyth: Introduction to Biomedical Informatics: 2

Motivation for Text Mining

• PubMed

– PubMed approximately 1 million new articles per year

– Human annotation cannot keep up – increased demand for automation

– Problem is even greater in other domains (e.g., Web search in general)

© Hayes/Smyth: Introduction to Biomedical Informatics: 3

From Jensen, Saric, Bork,

Nature Reviews Genetics, 2006

© Hayes/Smyth: Introduction to Biomedical Informatics: 4

Text Mining Problems

• Classification: automatically assign a document into 1 or more categories

– “easy” problem: is an email spam or non-spam?

– “hard” problem: assign MesH terms to new PubMed articles

• Clustering: Group a set of documents into clusters

– e.g., automatically group docs in search results by theme

• Topic Discovery: Discover themes in docs and index docs by theme

– E.g., discover new scientific concepts not yet covered by MeSH terms

• Information Extraction: Extract mentions of entities from documents

– “easy” problem: extract all gene names mentioned in a document

– “hard” problem: extract a set of facts relating genes in a document, e.g.,

statements such as “gene A activates gene B”

© Hayes/Smyth: Introduction to Biomedical Informatics: 5

Classification and Clustering

• We already discussed these methods in the context of general data

mining in earlier lectures. Now we want to apply these techniques

specifically to text data

• Recall:

– Given a vector of features x, a classifier maps x to a target variable y,

where y is categorical, e.g., y = {has cancer, does not have cancer}

– Learning a classifier consists of being given a training data set of pairs of

x’s and y’s, and learning how to map x to y

– Clustering is similar, but our target data doesn’t have any target y values

– we have to discover the target values (the “clusters”) automatically

© Hayes/Smyth: Introduction to Biomedical Informatics: 6

Classification and Clustering for Text Documents

• Document representation

– Most classification and clustering algorithms assume that each object

(here a document) to be classified can be represented as a fixed length

vector of variables/feature/attribute values

– So how do we convert documents into fixed-length vectors?

– “Bag of Words” representation

• Each vector entry represents whether term j occurred in a document, or how

often it occurred

• Same idea as for information retrieval

• Ignores word order, document structure….but found to work well in practice

and has considerable computational advantages over working with the

document directly

Once we have our vector (bag of words) representation we can use any

classification or clustering method on our documents

© Hayes/Smyth: Introduction to Biomedical Informatics: 7

Document Classification

© Hayes/Smyth: Introduction to Biomedical Informatics: 8

Document Classification

•

Document classification has many applications

– Spam email detection

– Automated tagging of biomedical articles (e.g., in PubMed)

– Automated creation of Web-page taxonomies

•

Data Representation

– “Bag of words” most commonly used: either counts or binary

– Can also use “phrases” for commonly occurring combinations of words

•

Classification Methods

– Naïve Bayes widely used (e.g., for spam email)

• Fast and reasonably accurate

– Support vector machines (SVMs)

• Often the most accurate method in research studies

• But more complex computationally than other methods

– Logistic Regression (regularized)

• Widely used in industry, often competitive with SVMs

© Hayes/Smyth: Introduction to Biomedical Informatics: 9

Trimming the Vocabulary

•

Stopword removal:

– remove “non-content” words

• very frequent “stop words” such as “the”, “and”….

– remove very rare words, e.g., that only occur a few times in 1 million documents

• Often results in removal of 30% or more of the original unique words

•

Stemming:

– Reduce all variants of a word to a single term

– e.g., {draw, drawing, drawings} -> “draw”

– Can use Porter stemming algorithm (1980)

•

This still often leaves us with 10000 to 1 million unique terms

=> a very high-dimensional classification problem!

© Hayes/Smyth: Introduction to Biomedical Informatics: 10

Classifying Term Vectors

• Typically multiple different terms or words may be helpful

– Class = “finance”

– Words = “stocks”, “return”, “interest”, “rate”, etc.

– Thus, classifiers that combine multiple features often do well, e.g,

• Naïve Bayes, Logistic regression, SVMs, etc

(compared to decision trees, for example, which would branch on 1 word at a time)

• Linear classifiers often perform well in high-dimensions

– Typically we have a large number of features/dimensions in text classification

– Theory and experiments tell us linear classifiers do well in high dimensions

– So naïve Bayes, logistic regression, linear SVMS, are all useful

• Main questions in practice are:

– which terms to use in the classifier?

– which linear classifier to select?

© Hayes/Smyth: Introduction to Biomedical Informatics: 11

Feature Selection

• Performance of text classification algorithms can be optimized by

selecting only a subset of the discriminative terms

– See classification results later in these slides

• Greedy search

– Start from empty set or full set and add/delete one at a time

– Heuristics for adding/deleting

• Information gain (mutual information of term with class)

• Chi-square

• Other ideas

– Methods tend not to be particularly sensitive to the specific heuristic

used for feature selection, but some form of feature selection often

improves performance

© Hayes/Smyth: Introduction to Biomedical Informatics: 12

Example of Role of Feature Selection

(from Chakrabarti, Chapter 5)

9600 documents from US Patent database

20,000 raw features (terms)

© Hayes/Smyth: Introduction to Biomedical Informatics: 13

Types of Classifiers

Let c be the class label and let x be a vector of features

• Generative/Probabilistic

– Model p(x | c) for each class, then estimate p(c | x)

– e.g., naïve Bayes model

• Conditional Probability/Regression

– Model p(c | x) directly, e.g.,

– e.g., logistic regression

• Discriminative

– Look for decision boundaries in input space x directly

• No probabilities

– e.g., perceptron, linear discriminants, SVMs, etc

© Hayes/Smyth: Introduction to Biomedical Informatics: 14

Probabilistic “Generative” Classifiers

• Model p( x | ck ) for each class and perform classification via Bayes rule,

c = arg max { p( ck | x ) } = arg max { p( x | ck ) p(ck) }

• How to model p( x | ck )?

– p( x | ck ) = probability of a “bag of words” x given a class ck

• Two commonly used approaches (for text):

– Naïve Bayes: treat each term xj as being conditionally independent, given ck

– Multinomial: model a document with N words as N tosses of a p-sided die

© Hayes/Smyth: Introduction to Biomedical Informatics: 15

Naïve Bayes Classifier for Text

• Naïve Bayes classifier = conditional independence model

– Assumes conditional independence assumption given the class:

p( x | ck ) = P p( xj | ck )

– Note that we model each term xj as a discrete random variable

– Binary terms (Bernoulli):

p( x | ck ) = P p( xj = 1 | ck ) P p( xj = 0 | ck )

© Hayes/Smyth: Introduction to Biomedical Informatics: 16

Multinomial Classifier for Text

• Multinomial Classification model

Assume that the data are generated by a p-sided die (multinomial model)

N

p(x | ck ) p( xj | ck )

nj

j 1

where N = total number of terms

nj = number of times term j occurs in the document

Here we have a single random variable for each class, and the p( xj = i | ck )

probabilities sum to 1 over i (i.e., a multinomial model)

© Hayes/Smyth: Introduction to Biomedical Informatics: 17

Highest Probability Terms in Multinomial Distributions

© Hayes/Smyth: Introduction to Biomedical Informatics: 18

Common Data Sets used for Evaluation

•

Reuters

– 10700 labeled documents

– 10% documents with multiple class labels

•

Yahoo! Science Hierarchy

– 95 disjoint classes with 13,598 pages

•

20 Newsgroups data

– 18800 labeled USENET postings

– 20 leaf classes, 5 root level classes

•

WebKB

– 8300 documents in 7 categories such as “faculty”, “course”, “student”.

•

Industry

– 6449 home pages of companies partitioned into 71 classes

© Hayes/Smyth: Introduction to Biomedical Informatics: 19

Comparing Naïve Bayes and Multinomial models

McCallum and Nigam (1998)

Found that multinomial outperformed naïve Bayes (with binary features) in text

classification experiments

© Hayes/Smyth: Introduction to Biomedical Informatics: 20

Comparing Multinomial and Bernoulli on Reuter’s Data

(from McCallum and Nigam, 1998)

© Hayes/Smyth: Introduction to Biomedical Informatics: 21

Comparing Bernoulli and Multinomial

(slide from Chris Manning, Stanford)

Results from classifying 13,589 Yahoo! Web pages in Science subtree

of hierarchy into 95 different classes

© Hayes/Smyth: Introduction to Biomedical Informatics: 22

WebKB Data Set

• Train on ~5,000 hand-labeled web pages

– Cornell, Washington, U.Texas, Wisconsin

• Crawl and classify a new site (CMU)

• Results:

Student

Extracted

180

Correct

130

Accuracy:

72%

Faculty

66

28

42%

Person

246

194

79%

Project

99

72

73%

Course

28

25

89%

Departmt

1

1

100%

© Hayes/Smyth: Introduction to Biomedical Informatics: 23

Comparing Bernoulli and Multinomial on Web KB Data

© Hayes/Smyth: Introduction to Biomedical Informatics: 24

Comments on Generative Models for Text

(Comments applicable to both Naïve Bayes and Multinomial classifiers)

•

Simple and fast => popular in practice

– e.g., linear in p, n, M for both training and prediction

• Training = “smoothed” frequency counts, e.g.,

p(ck | xj 1)

nk , j k

nk m

– e.g., easy to use in situations where classifier needs to be updated regularly (e.g., for

spam email)

•

Numerical issues

– Typically work with log p( ck | x ), etc., to avoid numerical underflow

– Useful trick:

• when computing S log p( xj | ck ) , for sparse data, it may be much faster to

– precompute S log p( xj = 0| ck )

– and then subtract off the log p( xj = 1| ck ) terms

•

Note: both models are “wrong”: but for classification are often sufficient

© Hayes/Smyth: Introduction to Biomedical Informatics: 25

optional

Beyond independence

•

Naïve Bayes and multinomial both assume conditional independence of words

given class

•

Alternative approaches try to account for higher-order dependencies

– Bayesian networks:

•

•

•

•

•

p(x | c) = Px p(x | parents(x), c)

Equivalent to directed graph where edges represent direct dependencies

Various algorithms that search for a good network structure

Useful for improving quality of distribution model

….however, this does not always translate into better classification

– Maximum entropy models

•

•

•

•

•

•

p(x | c) = 1/Z Psubsets f( subsets(x) | c)

Equivalent to undirected graph model

Estimation is equivalent to maximum entropy assumption

Feature selection is crucial (which f terms to include) –

can provide high accuracy classification

…. however, tends to be computationally complex to fit (estimating Z is difficult)

© Hayes/Smyth: Introduction to Biomedical Informatics: 26

Basic Concepts of Support Vector Machines

Circles = support vectors

= points on convex hull

that are closest to

hyperplane

M = margin = distance of

support vectors from hyperplane

Goal is to find weight vector

that maximizes M

© Hayes/Smyth: Introduction to Biomedical Informatics: 27

Reuters Data Set

•

•

•

21578 documents, labeled manually

9603 training, 3299 test articles

118 categories

– An article can be in more than one category

– Learn 118 binary category distinctions

•

Example “interest rate” article

2-APR-1987 06:35:19.50

west-germany

b f BC-BUNDESBANK-LEAVES-CRE 04-02 0052

FRANKFURT, March 2

The Bundesbank left credit policies unchanged after today's regular meeting of its council, a spokesman said in

answer to enquiries. The West German discount rate remains at 3.0 pct, and the Lombard emergency

financing rate at 5.0 pct.

Common categories

(#train, #test)

•

•

•

•

•

Earn (2877, 1087)

Acquisitions (1650, 179)

Money-fx (538, 179)

Grain (433, 149)

Crude (389, 189)

•

•

•

•

•

Trade (369,119)

Interest (347, 131)

Ship (197, 89)

Wheat (212, 71)

Corn (182, 56)

© Hayes/Smyth: Introduction to Biomedical Informatics: 28

Dumais et al. 1998: Reuters - Accuracy

earn

acq

money-fx

grain

crude

trade

interest

ship

wheat

corn

Avg Top 10

Avg All Cat

NBayes

Trees

LinearSVM

95.9%

97.8%

98.2%

87.8%

89.7%

92.8%

56.6%

66.2%

74.0%

78.8%

85.0%

92.4%

79.5%

85.0%

88.3%

63.9%

72.5%

73.5%

64.9%

67.1%

76.3%

85.4%

74.2%

78.0%

69.7%

92.5%

89.7%

65.3%

91.8%

91.1%

81.5%

75.2% na

88.4%

91.4%

86.4%

© Hayes/Smyth: Introduction to Biomedical Informatics: 29

Precision-Recall for SVM (linear),

Naïve Bayes, and NN (from Dumais 1998)

using the Reuters data set

© Hayes/Smyth: Introduction to Biomedical Informatics: 30

Comparing Text Classifiers

• Naïve Bayes or Multinomial models

– Low time complexity (training = single linear pass through the data)

– Generally good, but not always best performance

– Widely used for spam email filtering

• Linear SVMs, Logistic Regression

– Often produce best results in research studies

– But more computationally complex to train (particularly SVMs)

• Others

– decision trees: less widely used, but can be useful

© Hayes/Smyth: Introduction to Biomedical Informatics: 31

optional

Learning with Labeled and Unlabeled documents

• In practice, obtaining labels for documents is time-consuming, expensive,

and error prone

– Typical application: small number of labeled docs and a very large number of

unlabeled docs

• Idea:

– Build a probabilistic model on labeled docs

– Classify the unlabeled docs, get p(class | doc) for each class and doc

• This is equivalent to the E-step in the EM algorithm

– Now relearn the probabilistic model using the new “soft labels”

• This is equivalent to the M-step in the EM algorithm

– Continue to iterate until convergence (e.g., class probabilities do not change

significantly)

– This EM approach to classification shows that unlabeled data can help in

classification performance, compared to labeled data alone

© Hayes/Smyth: Introduction to Biomedical Informatics: 32

Learning with Labeled and Unlabeled Data

(from “Semi-supervised text classification using EM”, Nigam, McCallum, and Mitchell, 2006)

© Hayes/Smyth: Introduction to Biomedical Informatics: 33

Other issues in text classification

• Real-time constraints:

– Being able to update classifiers as new data arrives

– Being able to make predictions very quickly in real-time

• Document length

– Varying document length can be a problem for some classifiers

– Multinomial tends to be better than Bernoulli for example

• Multi-labels and multiple classes

– Text documents can have more than one label

– SVMs for example can only handle binary data

© Hayes/Smyth: Introduction to Biomedical Informatics: 34

Other issues in text classification (continued)

• Feature selection

– Experiments have shown that feature selection (e.g., by greedy

algorithms using information gain) can often improve results

• Linked documents

– Can view Web documents as nodes in a directed graph

– Classification can now be performed that leverages the link structure,

• Heuristic = class labels of linked pages are more likely to be the same

– Optimal solution is to classify all documents jointly rather than

individually

– Resulting “global classification” problem is typically computationally

complex

© Hayes/Smyth: Introduction to Biomedical Informatics: 35

Background Resources: Document Classification

•

S. Chakrabarti, Mining the Web: Discovering Knowledge from Hypertext Data,

Morgan Kaufmann, 2003.

– See chapter 5 for discussion of text classification

•

C. D. Manning, P. Raghavan, H. Schutze, Introduction to Information

Retrieval, Cambridge University Press, 2008

– Chapters 13 to 15 on text classification

– (and chapters 16 and 17 on text clustering)

– http://nlp.stanford.edu/IR-book/information-retrieval-book.html

•

SVMs for text classification

– T. Joachims, Learning to Classify Text using Support Vector Machines: Methods,

Theory and Algorithms, Kluwer, 2002

© Hayes/Smyth: Introduction to Biomedical Informatics: 36

Document Clustering

© Hayes/Smyth: Introduction to Biomedical Informatics: 37

Document Clustering

• For clustering we can use either

– Vectors to represent each document (E.g., bag of words)

• Useful for clustering algorithms such as k-means, probabilistic clustering, or

– For N documents, define an N x N similarity matrix

• Doc-doc similarity can be defined in different ways (e.g., TF-IDF)

• Useful for clustering methods such as hierarchical clustering

• Unlike classification, there is typically less concern with selecting the

“best” vocabulary for clustering

– remove common stop words and infrequent words

© Hayes/Smyth: Introduction to Biomedical Informatics: 38

Case Study: Clustering of 2 Million PubMed Articles

Reference: Boyack et al, Clustering more than Two Million Biomedical

Publications…, PLoS One, 6(3), March 2011

• Data Set : 2.15 million articles in PubMed

– all articles published between 2004 and 2008, with at least 5 MeSH terms

• Data for each document

– MeSH terms

– Words from titles and abstracts

• Preprocessing

– MEDLINE stopword list of 132 words + 300 words commonly used at NIH

– Terms appearing in less than 4 documents were removed

– 272,926 unique terms and 175 million document-term pairs

© Hayes/Smyth: Introduction to Biomedical Informatics: 39

Methodology

• Methods compared

– Data sources: MeSH only versus Title/Abstract words only

– Similarity metrics

•

•

•

•

•

Tf-idf cosine (see earlier lectures)

Latent semantic indexing/analysis (see earlier lectures)

Topic modeling (discussed later in these slides)

Self-organizing map (neural network method)

Poisson-based model

– 25 million similarity pairs computed

• Approximately top-12 most similar documents for each document

• 9 sets of clusters compared

• 9 combinations of clustering data-source+similarity metric evaluated

• Hierarchical (single-link) clustering applied to each of the 9 similarity sets

• Heuristics used to determine when clusters can no longer be merged

© Hayes/Smyth: Introduction to Biomedical Informatics: 40

Evaluation Methods and Results

• Evaluation metrics

– Textual coherence within a cluster (see paper)

– Coherence of papers within a cluster in terms of funding source

(Question: how reliable are these metrics?)

• Conclusions (from the paper)

– PubMed’s own related article approach (PMRA) generated the most

coherent and most concentrated cluster solution of the nine text-based

similarity approaches tested, followed closely by the BM25 approach

using titles and abstracts.

– Approaches using only MeSH subject headings were not competitive with

those based on titles and abstracts.

© Hayes/Smyth: Introduction to Biomedical Informatics: 41

Textual

Coherence

Results

© Hayes/Smyth: Introduction to Biomedical Informatics: 42

Two-dimensional map of

the highest-scoring cluster

solution, representing

nearly 29,000 clusters and

over two million articles.

© Hayes/Smyth: Introduction to Biomedical Informatics: 44

Background Resources: Document Clustering

•

Papers

– Boyack KW, Newman D, Duhon RJ, Klavans R, Patek M, et al. 2011

Clustering More than Two Million Biomedical Publications: Comparing the

Accuracies of Nine Text-Based Similarity Approaches.

PLoS ONE 6(3): e18029. doi:10.1371/journal.pone.0018029

– Douglass R. Cutting, David R. Karger, Jan O. Pedersen and John W. Tukey,

Scatter/Gather: a cluster-based approach to browsing large document collections,

Proceedings of ACM SIGIR '92.

– Ying Zhao and George Karypis (2005) Hierarchical clustering algorithms for

document data sets, Data Mining and Knowledge Discovery, Vol. 10, No. 2, pp.

141 - 168, 2005.

•

MALLET (Software)

– Java-based package for classification, clustering, topic modeling, and more…

– http://mallet.cs.umass.edu/

© Hayes/Smyth: Introduction to Biomedical Informatics: 45

Topic Modeling

Some slides courtesy of David Newman, UC Irvine

© Hayes/Smyth: Introduction to Biomedical Informatics: 46

Topics = Multinomial Distributions

© Hayes/Smyth: Introduction to Biomedical Informatics: 47

What is Topic Modeling?

• Topic = probability distribution (multinomial) over words

• Document is assumed to be a mixture of topics

– Each document is represented by a probability distribution over topics

– Note that this is different to clustering, which assigns each doc to 1 cluster

• Learning algorithm is completely unsupervised

– No labels required

• Output of learning algorithm

– T topics, each represented by a probability distribution over words

– For each document, what its mixture of topics is

– For each word in each document, what topic it is assigned to

© Hayes/Smyth: Introduction to Biomedical Informatics: 48

What is Topic Modeling useful for?

• Summarize large document collections

• Retrieve documents

• Automatically index documents

• Analyze text

• Measure trends

• Generate topic-based networks

© Hayes/Smyth: Introduction to Biomedical Informatics: 49

Topic Modeling vs. Other Approaches

• Clustering (summarization)

– Topic model typically outperforms clustering

• Clustering: document belongs to single cluster

• Topic modeling: document is composed of multiple topics

• Latent semantic indexing (theme discovery)

– Topics tend to be more interpretable than LSI “vectors”

• TF-IDF (information retrieval)

– Keywords are often too specific. Topics (or a combination of topics and

keywords) are usually better

© Hayes/Smyth: Introduction to Biomedical Informatics: 50

Topic Modeling > Theory

Topic Modeling vs. Clustering

The Topic Model has the advantage over clustering that it can assign multiple topics per document.

Hidden Markov Models in

Molecular Biology: New

Algorithms and Applications

Pierre Baldi, Yves Chauvin, Tim Hunkapiller, Marcella A.

McClure

Hidden Markov Models (HMMs) can be applied to

several important problems in molecular biology.

We introduce a new convergent learning

algorithm for HMMs that, unlike the classical

Baum-Welch algorithm is smooth and can be

applied on-line or in batch mode, with or without

the usual Viterbi most likely path approximation.

Left-right HMMs with insertion and deletion states

are then trained to represent several protein

families including immunoglobulins and kinases.

In all cases, the models derived capture all the

important statistical properties of the families and

can be used efficiently in a number of important

tasks such as multiple alignment, motif detection,

and classification.

One Cluster

[cluster 88]

model data

models time

neural figure

state learning set

parameters

network

probability

number networks

training function

system algorithm

hidden markov

Multiple Topics

[topic 10] state hmm

markov sequence models

hidden states probabilities

sequences parameters

transition probability training

hmms hybrid model

likelihood modeling

[topic 37] genetic structure

chain protein population

region algorithms human

mouse selection fitness

proteins search evolution

generation function

sequence sequences genes

© Hayes/Smyth: Introduction to Biomedical Informatics: 51

Topic Modeling

History

1990

Latent Semantic Analysis (Deerwester et al)

1999

Probabilistic Latent Semantic Analysis (Hoffman)

2003

Latent Dirichlet Allocation (Blei, Ng, Jordan)

2004

Gibbs sampling (Griffiths & Steyvers)

2004+

A variety of extensions and applications…….

© Hayes/Smyth: Introduction to Biomedical Informatics: 52

How are the Topics Learned?

• Most widely used algorithm is based on Gibbs Sampling

– This is essentially a stochastic search technique from statistics

• Sketch of algorithm, for learning T topics

– Initialize each word in each document to a number from 1 to T

– Cycle through each word and resample its assignment, based on

• Which other topics are assigned to words in this document

• Which other words are assigned to this topic

– Continue to cycle through the data until convergence or for a fixed number

of iterations

– Typically takes 20 to 100 iterations to converge

– Each iteration is linear in the number of word tokens in the corpus (good!)

– Algorithm can be easily parallelized

(Parallelization algorithm invented at UCI, now used by Google, Yahoo, etc)

© Hayes/Smyth: Introduction to Biomedical Informatics: 53

optional

Equation for Sampling the Topic for each Word

The topic modeling algorithm uses a probabilistic model to stochastically and

iteratively assign topics to every word in the corpus.

count of topic t

assigned to doc d

count of word w

assigned to topic t

ntdi

nwti

p( zi t | zi )

i

i

n

T

n

t ' t 'd

w' w't W

probability that word i is

assigned to topic t

© Hayes/Smyth: Introduction to Biomedical Informatics: 54

Word/Document counts

for 16 Artificial Documents

stream

documents

River

river

Stream

bank

Bank

money

Money

loan

Loan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

Can we recover the original topics and topic mixtures from this data?

P. Smyth: Fraunhofer IAIS, May 2010: 55

Example of Gibbs Sampling

• Assign word tokens randomly to 2 topics:

stream

River

river

Stream

bank

Bank

money

Money

loan

Loan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

P. Smyth: Fraunhofer IAIS, May 2010: 56

After 1 iteration

stream

River

river

Stream

bank

Bank

money

Money

loan

Loan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

P. Smyth: Fraunhofer IAIS, May 2010: 57

After 4 iterations

stream

River

river

Stream

bank

Bank

money

Money

loan

Loan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

P. Smyth: Fraunhofer IAIS, May 2010: 58

After 32 iterations

topic 1

stream .40

bank .35

river .25

stream

River

river

Stream

bank

Bank

topic 2

bank .39

money .32

loan .29

money

Money

loan

Loan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

P. Smyth: Fraunhofer IAIS, May 2010: 59

Explaining Topic Modeling with New York Times Documents

Topic Modeling begins with the text from a corpus of documents…

About a month after the attacks of Sept. 11, firefighters widows began showing up at an obscure

investment firm on Long...¶ To hear Raymond W. Baker tell it, companies in the United States and

throughout the West have it exactly backward when i...¶ You dont have to be honest to use the Internet.

E-mail scams are a growing problem. Youve almost certainly heard from ...¶ In a city where banks sprout

like mushrooms, and die just as fast, the money-moving network described in the unfolding B...¶ The

federal governments chief witness against Robert E. Brennan, the former penny-stock promoter,

concluded his testimo...¶ From the well-appointed offices of Lehman Brothers in Manhattan, federal

prosecutors say, Consuelo Marquez, a Barnard Co...¶ Appearing calm and rested, and smiling occasionally

at one another and their lawyers, Edwards and Berlin described the o. Cary Stayner was sentenced

Thursday to die for committing three murders near Yosemite National Park in 1999. For FBI age ...¶ As

murders go, it was the sort guaranteed to fetch modest attention. Two men were held up at gunpoint in a

restaurant pa ...¶ Teachers loaded some of the elementary school children onto buses. Another group

was hustled down the hill to the old wo ...¶ It started as a whisper from nowhere. Then the whispers

became voices. James Michael Brown had no choice but to listen. ...¶ A day is worth more than 24 hours

to Coral Eugene Watts. Its worth 72, a three-for-one bargain, a triple-time countdown ....¶ The middleaged man and the teenager were footloose traveling companions on a fathomless mission of horror. For

three we ...¶ A jury convicted Michael C. Skakel on Friday of murder in the 1975 bludgeoning death of

Martha Moxley, using more than a ...¶ On July 13, 1990, an expert testified in a Manhattan court that

DNA analysis of semen found in the victim in the Central ...¶ As the man suspected of killing as many as

18 women sat in a downtown prison cell, investigators fielded calls from othe ...¶ For Johnson, an exMarine, stucco specialist and associate pastor of a small Kansas City church, the next couple of hour ...¶

© Hayes/Smyth: Introduction to Biomedical Informatics: 60

Initially, topics are randomly assigned to the

words in each document

For this example, we’ve set the number of topics to 10.

19757 bludgeoning2 convicted4 death10 Friday6 jury2 Martha9 Michael3

more3 Moxley9 murder6 Skakel5 using2 ¶ against4 Brennan3 chief1

concluded3 federal8 former2 governments4 penny-stock6 promoter3

Robert9 testimony4 witness1...

© Hayes/Smyth: Introduction to Biomedical Informatics: 62

Words get assigned probabilistically to topics

19757 bludgeoning2 convicted4 death10 Friday6 jury2 Martha9 Michael3

more3 Moxley9 murder6 Skakel5 using2 ¶ against4 Brennan3 chief1

concluded3 federal8 former2 governments4 penny-stock6 promoter3

Robert9 testimony4 witness1...

turns into

19753 bludgeoning3 convicted3 death3 Friday5 jury3 Martha1 Michael9

more5 Moxley3 murder3 Skakel3 using6 ¶ against6 Brennan8 chief8

concluded5 federal8 former8 governments9 penny-stock8 promoter8

Robert1 testimony8 witness3...

© Hayes/Smyth: Introduction to Biomedical Informatics: 63

Topics are expressed as lists of words likely to cooccur in individual documents

#3: murder police victim killing shot killed death crime violence shooting found

suspect killer kill woman told case night scene investigator

#8: money million business dollar account cash paid pay scandal financial trust

fraud corruption bank scheme laundering illegal deal profit transfer

19753 bludgeoning3 convicted3 death3 Friday5 jury3 Martha1

Michael9 more5 Moxley3 murder3 Skakel3 using6 ¶ against6

Brennan8 chief8 concluded5 federal8 former8 governments9

penny-stock8 promoter8 Robert1 testimony8 witness3...

© Hayes/Smyth: Introduction to Biomedical Informatics: 64

Humans Interpret Topics

After the model produces the topics, a human domain expert interprets the list of most

likely words and chooses a topic name.

topic #3:

murder police victim killing shot killed death

crime violence shooting found suspect killer kill

woman …

topic #3 =

“Crime”

topic #8 =

“Financial

Corruption”

topic #8:

money million business dollar account cash paid pay

scandal financial trust fraud corruption bank scheme

laundering illegal …

© Hayes/Smyth: Introduction to Biomedical Informatics: 65

Topic Modeling can be applied to various types of text …

Collection

New York

Times

# docs

300,000

Description

News articles from New York Times and other newspapers 2000-2002

Austen

1,400

The six Jane Austen novels, broken up into 100-line sections

Blogs

4,000

Blog entries harvested from Daily Kos

Bible

1,200

Chapters in the bible (KJV)

Police

Reports

250,000

Police accident reports from North Carolina

CiteSeer

750,000

Abstracts from research publications in computer science and engineering

Search

Queries

1,000,000

Queries issued to web search engine

Enron

250,000

Enron emails seized by the US Government for the federal case against

the company

Library of

Congress

240,000

Metadata records from Library of Congress American Memory Collection

© Hayes/Smyth: Introduction to Biomedical Informatics: 66

… sample topics

Collection

Sample Topic

New York

Times

[WMD] IRAQ iraqi weapon war SADDAM_HUSSEIN SADDAM resolution

UNITED_STATES military inspector U_N UNITED_NATION BAGHDAD inspection

action SECURITY_COUNCIL

Austen

[SENTIMENT] felt comfort feeling feel spirit mind heart ill evil fear impossible hope poor

distress end loss relief suffering concern dreadful misery unhappy

Blogs

[ELECTIONS] november poll house electoral governor polls account ground

republicans trouble

Bible

[COMMANDS] thou thy thee shalt thine lord god hast unto not shall

Police

Reports

[RAN OFF ROAD] v1 off road ran came rest ditch traveling struck side shoulder tree

overturned control lost

CiteSeer

[GRAPHS] graph edge vertices edges vertex number directed connected degree

coloring subgraph set drawing

Search

Queries

[CREDIT] credit card loans bill loan report bad visa debt score

Enron

[ENERGY CRISIS] state california power electricity utilities davis energy prices

generators edison public deregulation billion governor federal consumers commission

plants companies electric wholesale crisis summer

Library of

Congress

[ARMY] military camp army war officer personnel british regiment tent crimean crimea

soldier guard general infantry

© Hayes/Smyth: Introduction to Biomedical Informatics: 67

Case Study: Analysis of DNA Microarray Literature

• Government-sponsored study of > 50,000 papers from PubMed that

are related to DNA microarrays

–

–

–

–

–

–

Papers date from early 1990’s to 2012

48,872 documents

59,408 unique words

6.1 million word tokens

191,569 unique authors

4,455 different journals

• Ran a topic model with T=100 topics to gain insight into what the

different sub-areas are within the field of DNA microarrays and how

these have changed over time

© Hayes/Smyth: Introduction to Biomedical Informatics: 68

Examples of Topics Learned

Displayed below are the top 5 highest probability words for 5 selected topics

Microarray Chip

Technology

Classification

Methods

Databases

and

Annotation

Regulatory

Networks

Patients and

Survival

detection

classification

databases

network

patient

surface

selection

tool

regulatory

tumor

fluorescence

cancer

annotation

pathway

cancer

hybridization

algorithm

data set

interaction

survival

array

feature

web

transcriptional

prognostic

From basic technology to applications

© Hayes/Smyth: Introduction to Biomedical Informatics: 69

How Topics Change over Time

Basic Technology

microarray technology

hybridization

probe technology

sequencing

0.16

0.12

0.1

0.08

0.06

0.04

patients and treatment

classification

pathways and networks

stem cells

0.025

FRACTION OF WORDS PER TOPIC

0.14

FRACTION OF WORDS PER TOPIC

Applications

0.02

0.015

0.01

Base Rate

0.005

0.02

Base Rate

0

1990

1995

2000

YEAR

2005

2010

0

1990

1995

2000

YEAR

2005

2010

Selected topics with largest changes in words assigned to the topic:

negative (left plot) and positive (right plot)

© Hayes/Smyth: Introduction to Biomedical Informatics: 70

New York Times 2000-02: Sample Topics

Basketball

Tour de France

Holidays

team

play

game

season

final

games

point

series

player

coach

playoff

championship

playing

win

0.028

0.015

0.013

0.012

0.011

0.011

0.011

0.011

0.010

0.009

0.009

0.007

0.006

0.006

tour

rider

riding

bike

team

stage

race

won

bicycle

road

hour

scooter

mountain

place

0.039

0.029

0.017

0.016

0.016

0.014

0.013

0.012

0.010

0.009

0.009

0.008

0.008

0.008

holiday

gift

toy

season

doll

tree

present

giving

special

shopping

family

celebration

card

tradition

0.071

0.050

0.023

0.019

0.014

0.011

0.008

0.008

0.007

0.007

0.007

0.007

0.007

0.006

LAKERS

SHAQUILLE-O-NEAL

KOBE-BRYANT

PHIL-JACKSON

NBA

SACRAMENTO

RICK-FOX

PORTLAND

ROBERT-HORRY

DEREK-FISHER

0.062

0.028

0.028

0.019

0.013

0.007

0.007

0.006

0.006

0.006

LANCE-ARMSTRONG

FRANCE

JAN-ULLRICH

LANCE

U-S-POSTAL-SERVICE

MARCO-PANTANI

PARIS

ALPS

PYRENEES

SPAIN

0.021

0.011

0.003

0.003

0.002

0.002

0.002

0.002

0.001

0.001

CHRISTMAS

THANKSGIVING

SANTA-CLAUS

BARBIE

HANUKKAH

MATTEL

GRINCH

HALLMARK

EASTER

HASBRO

0.058

0.018

0.009

0.004

0.003

0.003

0.003

0.002

0.002

0.002

© Hayes/Smyth: Introduction to Biomedical Informatics: 71

New York Times 2000-02: Sample Topics

September 11 Attacks

FBI Investigation

DC Sniper

attack

tower

firefighter

building

worker

terrorist

victim

rescue

floor

site

disaster

twin

ground

center

fire

plane

0.033

0.025

0.020

0.018

0.013

0.012

0.012

0.012

0.011

0.009

0.008

0.008

0.008

0.008

0.007

0.007

agent

investigator

official

authorities

enforcement

investigation

suspect

found

police

arrested

search

law

arrest

case

evidence

suspected

0.029

0.028

0.027

0.021

0.018

0.017

0.015

0.014

0.014

0.012

0.012

0.011

0.011

0.010

0.009

0.008

sniper

shooting

area

shot

police

killer

scene

white

victim

attack

case

left

public

suspect

killed

car

0.024

0.019

0.010

0.009

0.007

0.006

0.006

0.006

0.006

0.005

0.005

0.005

0.005

0.005

0.005

0.005

WORLD-TRADE-CTR

NEW-YORK-CITY

LOWER-MANHATTAN

PENTAGON

PORT-AUTHORITY

RED-CROSS

NEW-JERSEY

RUDOLPH-GIULIANI

PENNSYLVANIA

CANTOR-FITZGERALD

0.035

0.020

0.005

0.005

0.003

0.002

0.002

0.002

0.002

0.001

FBI

MOHAMED-ATTA

FEDERAL-BUREAU

HANI-HANJOUR

ASSOCIATED-PRESS

SAN-DIEGO

U-S

FLORIDA

0.034

0.003

0.001

0.001

0.001

0.001

0.001

0.001

WASHINGTON

VIRGINIA

MARYLAND

D-C

JOHN-MUHAMMAD

BALTIMORE

RICHMOND

MONTGOMERY-CO

MALVO

ALEXANDRIA

0.053

0.019

0.013

0.012

0.008

0.006

0.006

0.005

0.005

0.003

© Hayes/Smyth: Introduction to Biomedical Informatics: 72

Topic Trends (New York Times data set)

kwords

kwords

kwords

kwords

40

200

Basketball

Sept-11-Attacks

20

100

0

0

20

200

Tour-de-France

Anthrax

10

100

0

0

40

100

Oscars

DC-Sniper

20

50

0

0

40

100

Quarterly-Earnings

20

0

Jan00

Enron

50

Jan01

Jan02

Jan03

0

Jan00

Jan01

Jan02

Jan03

© Hayes/Smyth: Introduction to Biomedical Informatics: 73

From Dr. David Newman

Computer Science Department

UC Irvine

https://app.nihmaps.org/nih/browser/

© Hayes/Smyth: Introduction to Biomedical Informatics: 74

Background Resources: Topic Modeling

•

D. Blei, Introduction to Probabilistic topic models, Communications of the

ACM, preprint, 2011

– http://www.cs.princeton.edu/~blei/papers/Blei2011.pdf

•

D. Blei and J. Lafferty, Topic models, in A. Srivastava and M. Sahami,

editors, Text Mining: Classification, Clustering, and Applications . Chapman &

Hall/CRC Data Mining and Knowledge Discovery Series, 2009.

– http://www.cs.princeton.edu/~blei/papers/BleiLafferty2009.pdf

•

Steyvers, M. & Griffiths, T. (2007) Probabilistic topic models. In T. Landauer, D

McNamara, S. Dennis, and W. Kintsch (eds), Latent Semantic Analysis: A Road

to Meaning. Laurence Erlbaum

•

General resources (papers, code, newsgroup, etc)

– http://www.cs.princeton.edu/~blei/topicmodeling.html

© Hayes/Smyth: Introduction to Biomedical Informatics: 75

Additional Backup Slides on Application of Topic

Modeling to Domain-Specific Browsing

© Hayes/Smyth: Introduction to Biomedical Informatics: 76

Application: Domain-Specific Browsers

• Collect a large set of documents in a particular domain

– Build a domain-specific topic model for the documents

– Build a browser based on the topic model

• Example: Schizophrenia Research

– 40,000 abstracts from PubMed mention schizophrenia

– We built a topic model with 200 topics

– Also automatically extracted

• Gene names/symbols

• Brain regions

– Developed a browser/search-tool that uses the topics to

allow a user to “navigate” through the world schizophrenia

– Combines statistical model with real-time search on

documents

– Master’s thesis work by Vasanth Kumar, ICS MS 2006

P. Smyth: Fraunhofer IAIS, May 2010: 77

P. Smyth: Fraunhofer IAIS, May 2010: 78

Topics are discovered

automatically, and span the “space” of

schizophrenia research.

Only topic naming is done manually

P. Smyth: Fraunhofer IAIS, May 2010: 79

P. Smyth: Fraunhofer IAIS, May 2010: 80

P. Smyth: Fraunhofer IAIS, May 2010: 81

P. Smyth: Fraunhofer IAIS, May 2010: 82

P. Smyth: Fraunhofer IAIS, May 2010: 83

P. Smyth: Fraunhofer IAIS, May 2010: 84

Information Extraction

© Hayes/Smyth: Introduction to Biomedical Informatics: 85

Information Extraction

• Broad goal:

– Convert unstructured text into structured form (e.g., database)

– Many applications, e.g.,

• Mining of free-form narratives in clinical diagnoses

• Extraction of scientific information from biomedical papers

© Hayes/Smyth: Introduction to Biomedical Informatics: 86

Information Extraction

• Broad goal:

– Convert unstructured text into structured form (e.g., database)

– Many applications, e.g.,

• Mining of free-form narratives in clinical diagnoses

• Extraction of scientific information from biomedical papers

• Main Categories:

– Named entity recognition (NER)

• E.g., recognize disease names, genes, proteins, drugs, in text

• Can be challenging: e.g., genes are referred to in multiple ways

– Relation extraction

• Given named entities, detect how they are related, e.g.,

– Gene A regulates Gene B

– Drug X can cause adverse symptoms Y and Z

• Harder than named entity recognition

– Much variety and subtlety in how we describe relational information

© Hayes/Smyth: Introduction to Biomedical Informatics: 87

Example of Information Extraction

From Sarawagi,

Foundations and Trends in Databases, 2008

© Hayes/Smyth: Introduction to Biomedical Informatics: 88

Information Extraction Techniques

• Dictionaries

– E.g., lists of names of genes, proteins, diseases, drugs

• Natural Language Processing

– High-quality parsers can parse sentences into parts of speech

– E.g., noun-phrases

– Subject-object-verb triples useful for relation extraction

• Rule-based Algorithms

– E.g., if word is capitalized and a noun, then its an entity

• Machine learning approaches

– e.g., use human-labeled gene name mentions to train a classifier

– Features can be parts of speech, nearby words, capitalization, etc

© Hayes/Smyth: Introduction to Biomedical Informatics: 89

Example of Part of Speech (POS) Tagging

(from Chris Manning, Stanford)

RB

NNP

NNP

RB

VBD

,

JJ

NNS

When

Mr.

Holly

last

wrote

,

many

years

© Hayes/Smyth: Introduction to Biomedical Informatics: 90

Example of Phrase Parsing

(from Chris Manning, Stanford)

S

VP

NP

VP

VBD

PP

NP

VBN

IN

NP

NP

IN

NNS

Bills

PP

on

NN

NNS

CC

ports

and immigration

NNP

were

submitted

by

Senator

NNP

Brownback

© Hayes/Smyth: Introduction to Biomedical Informatics: 91

From Krallinger, Valencia, Hirschman,

Genome Biology, 2008

© Hayes/Smyth: Introduction to Biomedical Informatics: 92

From Savova et al,

J. Am. Med. Inform. Assoc, 2010

© Hayes/Smyth: Introduction to Biomedical Informatics: 93

From Erhadt, Schneider, Blaschke,

Drug Discovery Today, 2006

© Hayes/Smyth: Introduction to Biomedical Informatics: 94

From Jensen, Saric, Bork,

Nature Reviews Genetics, 2003

© Hayes/Smyth: Introduction to Biomedical Informatics: 95

From Feldman, Regev, Hurvitz, and Finkelstein-Landau,

Biosilico, 2003

© Hayes/Smyth: Introduction to Biomedical Informatics: 96

From Feldman, Regev, Hurvitz, and Finkelstein-Landau,

Biosilico, 2003

© Hayes/Smyth: Introduction to Biomedical Informatics: 97

“Buzzword Hunting”

From Jensen, Saric, Bork,

Nature Reviews Genetics, 2003

© Hayes/Smyth: Introduction to Biomedical Informatics: 98

Commercial Software, e.g., IBM Medical Record Text Analytics

© Hayes/Smyth: Introduction to Biomedical Informatics: 99

Challenges in Information Extraction

• Fundamentally hard

– Ambiguity in natural language is difficult for computers to handle

– Current systems are reasonably accurate …. but room for improvement

• Validation

– Measuring recall for example can be difficult

• Calibration

– How do we calibrate a method so that we know how reliable it is?

• Integration

– How should text-based indicators be combined with actual experimental

data?

© Hayes/Smyth: Introduction to Biomedical Informatics: 100

Resources in Information Extraction

• Review paper on basic technology:

– Information Extraction, by Sunita Sarawagi, in Foundations and Trends in

Databases, 2008

• Reviews with a biomedical focus:

– Erhardt, Schneider, and Blaschke, Status of text-mining techniques

applied to biomedical text. Drug Discovery Today, 11(7/8), pp.315-325,

2006

– Jensen, Saric, Bork, Literature mining for the biologist: from information

to biological discovery, Nature Reviews: Genetics, 7, Feb 2006.

• Additional papers on the class Web site

© Hayes/Smyth: Introduction to Biomedical Informatics: 101

Software for Natural Language Processing

(lists from Chris Manning, Stanford)

•

NLP Packages

–

–

–

–

•

NLTK Python

• http://www.nltk.org/

OpenNLP

• http://incubator.apache.org/opennlp/

Stanford NLP

• http://nlp.stanford.edu/software/

LingPipe

• http://alias-i.com/lingpipe/

NLP Frameworks

–

GATE – General Architecture for Text Engineering (U. Sheffield)

• http://gate.ac.uk/

• Java, quite well maintained (now)

–

UIMA – Unstructured Information Management Architecture. Originally IBM; now Apache

project

• http://uima.apache.org/

• Professional, scalable, etc.

–

NLTK – Natural Language To0lkit (started by Steven Bird)

• http://www.nltk.org/

• Big community; large Python package; corpora and books about it

© Hayes/Smyth: Introduction to Biomedical Informatics: 102