Stability Yields a PTAS for

k-Median and k-Means Clustering

Pranjal Awasthi, Avrim Blum, Or Sheffet

Carnegie Mellon University

November 3rd, 2010

1

Stability Yields a PTAS for

k-Median and k-Means Clustering

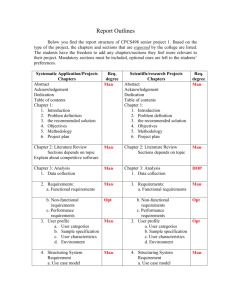

1. Introduce k-Median / k-Means problems.

2. Define stability

I. Previous notion [ORSS06]

II. Weak Deletion Stability

III. $\beta$-distributed instances

3. The algorithm for k-Median

4. Conclusion + open problems.

2

Clustering In Real Life

Clustering: come up with desired partition

3

Clustering in a Metric Space

Clustering: come up with desired partition

Input:

• n points

• A distance function $d : n \times n \to \mathbb{R}_{\ge 0}$ satisfying:

– Reflexive: $\forall p,\ d(p,p) = 0$

– Symmetry: $\forall p,q,\ d(p,q) = d(q,p)$

– Triangle Inequality: $\forall p,q,r,\ d(p,q) \le d(p,r) + d(r,q)$

• k-partition

[Figure labels: points p, q, r]

4

Clustering in a Metric Space

Clustering: come up with desired partition

Input:

• n points

• A distance function $d : n \times n \to \mathbb{R}_{\ge 0}$ satisfying:

– Reflexive: $\forall p,\ d(p,p) = 0$

– Symmetry: $\forall p,q,\ d(p,q) = d(q,p)$

– Triangle Inequality: $\forall p,q,r,\ d(p,q) \le d(p,r) + d(r,q)$

• k-partition

k is large, e.g. k=polylog(n)

5

k-Median

• Input:

1. n points in a finite metric space

2. k

• Goal:

– Partition into k disjoint subsets: $C^*_1, C^*_2, \ldots, C^*_k$

– Choose a center per subset

– Cost: $\mathrm{cost}(C^*_i) = \sum_{x \in C^*_i} d(x, c^*_i)$

– Cost of partition: $\sum_i \mathrm{cost}(C^*_i)$

• Given centers $\Rightarrow$ Easy to get best partition

• Given partition $\Rightarrow$ Easy to get best centers
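The two "easy" directions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`assign_to_nearest`, `best_center`, `kmedian_cost`) are hypothetical, `dist` is any metric supplied by the caller, and candidate centers are restricted to a list the caller passes in.

```python
def assign_to_nearest(points, centers, dist):
    """Given centers, the best partition sends each point to its nearest center."""
    clusters = [[] for _ in centers]
    for x in points:
        nearest = min(range(len(centers)), key=lambda j: dist(x, centers[j]))
        clusters[nearest].append(x)
    return clusters


def best_center(cluster, candidates, dist):
    """Given a cluster, the best center minimizes the sum of distances to it."""
    return min(candidates, key=lambda c: sum(dist(x, c) for x in cluster))


def kmedian_cost(clusters, centers, dist):
    """Cost of a partition: sum over clusters of distances to the cluster's center."""
    return sum(dist(x, c) for cluster, c in zip(clusters, centers) for x in cluster)


if __name__ == "__main__":
    line_metric = lambda a, b: abs(a - b)  # points on a line, for illustration
    pts = [0, 1, 2, 10, 11, 12]
    parts = assign_to_nearest(pts, [1, 11], line_metric)
    print(parts, kmedian_cost(parts, [1, 11], line_metric))
```

Both helpers run in polynomial time; the hardness of k-median lies in choosing the partition and the centers simultaneously.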

6

k-Means

• Input:

1. n points in Euclidean space

2. k

• Goal:

– Partition into k disjoint subsets: $C^*_1, C^*_2, \ldots, C^*_k$

– Choose a center per subset

– Cost: $\mathrm{cost}(C^*_i) = \sum_{x \in C^*_i} d^2(x, c^*_i)$

– Cost of partition: $\sum_i \mathrm{cost}(C^*_i)$

• Given centers $\Rightarrow$ Easy to get best partition

• Given partition $\Rightarrow$ Easy to get best centers
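For k-means, "given partition, easy to get best centers" has a closed form: the center of mass of a cluster minimizes the sum of squared Euclidean distances to it. The sketch below illustrates that fact; the helper names (`centroid`, `sq_dist`, `kmeans_cost`) are hypothetical and points are assumed to be equal-length tuples of floats.

```python
def centroid(cluster):
    """Center of mass of a non-empty list of equal-length tuples."""
    dim = len(cluster[0])
    return tuple(sum(x[t] for x in cluster) / len(cluster) for t in range(dim))


def sq_dist(x, y):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def kmeans_cost(clusters):
    """Sum over clusters of squared distances to each cluster's centroid."""
    total = 0.0
    for cluster in clusters:
        c = centroid(cluster)
        total += sum(sq_dist(x, c) for x in cluster)
    return total


if __name__ == "__main__":
    print(kmeans_cost([[(0.0, 0.0), (2.0, 0.0)], [(10.0, 0.0)]]))
```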

7

We Would Like To…

• Solve k-median / k-means problems.

• NP-hard to get OPT (= cost of optimal partition)

• Find a c-approximation algorithm

– A poly-time algorithm guaranteed to output a clustering whose cost $\le c \cdot \mathrm{OPT}$

• Ideally, find a PTAS (Polynomial Time Approximation Scheme)

– Get a c-approximation algorithm where $c = (1+\varepsilon)$, for any $\varepsilon > 0$.

– Runtime can be $\exp(1/\varepsilon)$

[Figure: cost axis marked 0, OPT, 1.1 OPT, 1.5 OPT, 2 OPT, c OPT, with the outputs of Alg marked on it]

8

Related Work

Small k

• k-Median: easy (try all centers) in time $n^k$

• k-Means: PTAS, exponential in $(k/\varepsilon)$ [KSS04]

General k

• k-Median: $(3+\varepsilon)$-apx [GK98, CGTS99, AGKMMP01, JMS02, dlVKKR03]

• k-Median: (1.367...)-apx hardness [GK98, JMS02]

• k-Means: 9-apx [OR00, BHPI02, dlVKKR03, ES04, HPM04, KMNPSW02]

• No PTAS!

Special case

• Euclidean k-Median [ARR98], PTAS if dimension is small ($(\log\log n)^c$)

• k-Means: [ORSS06]

• We focus on large k (e.g. k=polylog(n))

• Runtime goal: poly(n,k)

9

World

All possible instances

10

ORSS Result (k-Means)

11

ORSS Result (k-Means)

Why use 5 sites?

12

ORSS Result (k-Means)

• Instance is stable if

$\mathrm{OPT}(k-1) > (1/\alpha)^2\,\mathrm{OPT}(k)$ (require $1/\alpha > 10$)

• Give a $(1+O(\alpha))$-approximation.

Our Result (k-Means)

• Instance is stable if

$\mathrm{OPT}(k-1) > (1+\alpha)\,\mathrm{OPT}(k)$ (require $\alpha > 0$)

• Give a PTAS ($(1+\varepsilon)$-approximation).

• Runtime: $\mathrm{poly}(n,k) \cdot \exp(1/\alpha, 1/\varepsilon)$

16

Philosophical Note

• Stable instances:

$\exists \alpha > 0$ s.t. $\mathrm{OPT}(k-1) > (1+\alpha)\,\mathrm{OPT}(k)$

• Not stable instances:

$\forall \alpha > 0$: $\mathrm{OPT}(k-1) \le (1+\alpha)\,\mathrm{OPT}(k)$

• A (1+®)-approximation can return a (k-1)-clustering.

• Any PTAS can return a (k-1)-clustering.

• It is not a k-clustering problem,

• It is a (k-1)-clustering problem!

• If we believe our instance inherently has k clusters, stability is a

“necessary condition” to guarantee that a

PTAS will return a “meaningful” clustering.

• Our result:

It’s a sufficient condition to get a PTAS.
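To make the stability condition concrete, here is a brute-force check on a tiny instance. It is a sketch under several assumptions: it uses the k-median objective with centers restricted to input points, it computes OPT(k) by trying all k-subsets (exponential time, so only for toy inputs), and the names `opt_kmedian` and `is_stable` are hypothetical.

```python
from itertools import combinations


def opt_kmedian(points, k, dist):
    """Exact k-median optimum by exhaustive search over all k-subsets of centers."""
    return min(
        sum(min(dist(x, c) for c in centers) for x in points)
        for centers in combinations(points, k)
    )


def is_stable(points, k, alpha, dist):
    """Check OPT(k-1) > (1 + alpha) * OPT(k); requires k >= 2."""
    return opt_kmedian(points, k - 1, dist) > (1 + alpha) * opt_kmedian(points, k, dist)


if __name__ == "__main__":
    line_metric = lambda a, b: abs(a - b)
    pts = [0, 1, 2, 10, 11, 12]
    # Two natural clusters: k=2 is stable, k=3 is not (for alpha = 1).
    print(is_stable(pts, 2, 1.0, line_metric), is_stable(pts, 3, 1.0, line_metric))
```

On this instance OPT(1) = 30, OPT(2) = 4 and OPT(3) = 3, so dropping from two centers to one is very costly while dropping from three to two is not, which matches the intuition that the data "inherently" has two clusters.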

17

World

All possible instances

Any (k-1) clustering is

significantly costlier than OPT(k)

ORSS Stable

18

A Weaker Guarantee

Why use 5 sites?

19

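$(1+\alpha)$-Weak Deletion Stability

• Consider OPT(k).

• Take any cluster $C^*_i$, associate its points with $c^*_j$.

• This increases the cost to at least $(1+\alpha)\,\mathrm{OPT}(k)$.

[Figure: two clusters with centers $c^*_i$ and $c^*_j$ $\Rightarrow$ one cluster with center $c^*_j$]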

1. An obvious relaxation of ORSS-stability.

2. Our result: suffices to get a PTAS.
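The definition can be checked directly when an optimal clustering is already in hand. The sketch below assumes the k-median objective, takes the optimal clusters and centers as given inputs (it does not compute them), and follows the slide's "at least" wording for the comparison; the name `is_weak_deletion_stable` is hypothetical.

```python
def is_weak_deletion_stable(clusters, centers, alpha, dist):
    """For every i != j, reassigning all of cluster i to center j must raise
    the total cost to at least (1 + alpha) * OPT."""
    cost = [sum(dist(x, c) for x in cl) for cl, c in zip(clusters, centers)]
    opt = sum(cost)
    for i, cluster in enumerate(clusters):
        for j, c_j in enumerate(centers):
            if i == j:
                continue
            # Cost after deleting center i and serving its points from center j.
            reassigned = opt - cost[i] + sum(dist(x, c_j) for x in cluster)
            if reassigned < (1 + alpha) * opt:
                return False
    return True


if __name__ == "__main__":
    line_metric = lambda a, b: abs(a - b)
    print(is_weak_deletion_stable([[0, 1, 2], [10, 11, 12]], [1, 11], 1, line_metric))
```

In the example OPT = 4 and either reassignment costs 32, so the instance passes for any $\alpha \le 7$.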

22

World

All possible instances

ORSS Stable

Weak-Deletion Stable

Merging any two clusters in OPT(k)

increases the cost significantly

23

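$\beta$-Distributed Instances

For every cluster $C^*_i$, and every p not in $C^*_i$, we have:

$$d(p, c^*_i) \ge \beta \cdot \frac{\mathrm{OPT}}{|C^*_i|}$$

We show that:

• k-median:

$(1+\alpha)$-weak deletion stability $\Rightarrow$ $(\alpha/2)$-distributed.

• k-means:

$(1+\alpha)$-weak deletion stability $\Rightarrow$ $(\alpha/4)$-distributed.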
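A small check of the distributed property, written for the k-median case. It is a sketch that assumes the condition reads "every point outside cluster i is at distance at least $\beta \cdot \mathrm{OPT}/|C^*_i|$ from $c^*_i$" and that the optimal clusters and centers are supplied by the caller; the name `is_beta_distributed` is hypothetical.

```python
def is_beta_distributed(clusters, centers, beta, dist):
    """Every point outside cluster i must be at distance at least
    beta * OPT / |cluster i| from center i."""
    opt = sum(dist(x, c) for cl, c in zip(clusters, centers) for x in cl)
    for i, (cluster, c_i) in enumerate(zip(clusters, centers)):
        threshold = beta * opt / len(cluster)
        for j, other in enumerate(clusters):
            if j != i and any(dist(p, c_i) < threshold for p in other):
                return False
    return True


if __name__ == "__main__":
    line_metric = lambda a, b: abs(a - b)
    print(is_beta_distributed([[0, 1, 2], [10, 11, 12]], [1, 11], 3.5, line_metric))
```

The example instance has OPT = 4 and is $(1+\alpha)$-weak deletion stable for $\alpha = 7$, so the k-median implication predicts it is $3.5$-distributed, which the check confirms.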

24

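Claim: $(1+\alpha)$-Weak Deletion Stability $\Rightarrow$ $(\alpha/2)$-Distributed

Let p be a point of some cluster $C^*_j$, $j \ne i$. All sums range over $x \in C^*_i$.

$$\begin{aligned}
\alpha\,\mathrm{OPT} &\le \sum_x d(x, c^*_j) - \sum_x d(x, c^*_i) \\
&\le \sum_x \left[ d(x, c^*_i) + d(c^*_i, c^*_j) \right] - \sum_x d(x, c^*_i) \\
&= \sum_x d(c^*_i, c^*_j) = |C^*_i|\, d(c^*_i, c^*_j)
\end{aligned}$$

$$\Rightarrow\ \alpha\,\frac{\mathrm{OPT}}{|C^*_i|} \le d(c^*_i, c^*_j) \le d(c^*_i, p) + d(p, c^*_j) \le 2\,d(c^*_i, p)$$

The last step uses $d(p, c^*_j) \le d(p, c^*_i)$, since p is assigned to its nearest center $c^*_j$.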

25

World

All possible instances

ORSS Stable

Weak-Deletion Stable

In optimal solution: large

distance between a center and

any “outside” point

$\beta$-Distributed

26

Main Result

• We give a PTAS for $\beta$-distributed k-median and k-means instances.

• Running time:

• There are NP-hard $\beta$-distributed instances.

(Superpolynomial dependence on $1/\varepsilon$ is unavoidable!)

27

Stability Yields a PTAS for

k-Median and k-Means Clustering

1. Introduce k-Median / k-Means problems.

2. Define stability

3. PTAS for k-Median

I. High level description

II. Intuition (“had only we known more…”)

III. Description

4. Conclusion + open problems.

28

k-Median Algorithm’s Overview

Input: Metric, k, $\beta$, OPT

0. Handle “extreme” clusters

(Brute-force guessing of some clusters’ centers)

1. Populate L with components

2. Pick best center in each component

3. Try all possible k-centers

L := List of “suspected” clusters’ “cores”

• Right definition of “core”.

• Get the core of each cluster.

• L can’t get too big.

31

Intuition: “Mind the Gap”

• We know:

• In contrast, an “average” cluster contributes:

• So for an “average” point p, in an “average”

cluster C*i,

32

Intuition: “Mind the Gap”

• We know:

• Denote the core of a cluster C*i

• Formally, call cluster C*i cheap if

• Assume all clusters are cheap.

• In general: we brute-force guess $O(1/(\beta\varepsilon))$ centers of expensive clusters in Stage 0.

34

Intuition: “Mind the Gap”

• We know:

• Denote the core of a cluster C*i

• Formally, call cluster C*i cheap if

• Markov: At most an $(\varepsilon/4)$ fraction of the points of a cheap cluster lie outside the core.

35

Intuition: “Mind the Gap”

• We know:

• Denote the core of a cluster C*i

• Formally, call cluster C*i cheap if

• Markov: At least half of the points of a cheap

cluster lie inside the core.

37

Magic (r/4) Ball

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

If p belongs to the core $\Rightarrow$ $B(p, r/4)$ contains $\ge |C^*_i|/2$ pts.

“Heavy”: mass $\ge |C^*_i|/2$

[Figure labels: $c^*_i$, $p$, $r/4$, $\le r/8$, $> r$]

38

Magic (r/4) Ball

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

[Figure labels: $c^*_i$, $\le r/8$, $> r$]

All points in the core are merged into one set!

39

Magic (r/4) Ball

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

[Figure labels: $c^*_i$, $\le r/8$, $> r$]

Could we merge core pts with pts from other clusters?

40

Magic (r/4) Ball

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

[Figure labels: $c^*_i$, $p$, $x$, $\le r/8$, $> r$]

$r/2 \le d(p, c^*_i) \le 3r/4$

$r/4 = r/2 - r/4 \le d(x, c^*_i) \le 3r/4 + r/4 = r$

41

Magic (r/4) Ball

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

[Figure labels: $c^*_i$, $p$, $x$, $\le r/8$, $> r$]

$r/4 \le d(x, c^*_i) \le r$

x falls outside the core

x belongs to $C^*_i$

42

Magic (r/4) Ball

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

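Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

[Figure labels: $c^*_i$, $p$, $x$, $\le r/8$, $> r$]

$r/4 \le d(x, c^*_i) \le r$

More than $|C^*_i|/2$ pts fall outside the core!

$\Rightarrow\Leftarrow$ (contradiction)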

43

Finding the Right Radius

1. Draw a ball of radius r/4 around all points.

2. Unite “heavy” balls whose centers overlap.

Denote $r = \beta(\mathrm{OPT}/|C^*_i|)$.

• Problem: we don’t know $|C^*_i|$

• Solution: Try all sizes, in order!

• Set s = n, n-1, n-2, …, 1

• Set $r_s = \beta(\mathrm{OPT}/s)$

• Complication:

• When s gets small (s=4,3,2,1) we collect many “leftovers” of one cluster.

• Solution: once we add a subset to L, we remove close-by points.

44

Population Stage

• Set s = n, n-1, n-2, …, 1

• Set $r_s = \beta(\mathrm{OPT}/s)$

• Draw a ball of radius $r_s/4$ around each point

• Unite balls containing $\ge s/2$ pts whose centers overlap

• Once a set of size $\ge s/2$ is found

– Put this set in L

– Remove all points in an $(r_s/2)$-“buffer zone” around L.
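The loop above can be sketched in Python as follows. This is a rough illustration, not the authors' implementation, and it makes several assumptions: OPT is given as input (as in the algorithm overview), "balls whose centers overlap" is read as "two heavy points within $r_s/4$ of each other", a component consists of the heavy points themselves, and the buffer zone removes every remaining point within $r_s/2$ of the component just added. The names `populate` and `_find_component` are hypothetical.

```python
def _find_component(points, dist, remaining, r, s):
    """Return one connected set of heavy points of size >= s/2, or None."""
    alive = sorted(remaining)
    # A point is heavy if its (r/4)-ball holds at least s/2 remaining points.
    heavy = [p for p in alive
             if sum(dist(points[p], points[q]) <= r / 4 for q in alive) >= s / 2]
    seen = set()
    for start in heavy:
        if start in seen:
            continue
        comp, stack = {start}, [start]
        while stack:  # unite heavy balls whose centers are within r/4
            a = stack.pop()
            for b in heavy:
                if b not in comp and dist(points[a], points[b]) <= r / 4:
                    comp.add(b)
                    stack.append(b)
        seen |= comp
        if len(comp) >= s / 2:
            return comp
    return None


def populate(points, dist, beta, opt):
    """Population stage sketch: returns L, a list of index sets (suspected cores)."""
    remaining = set(range(len(points)))
    L = []
    for s in range(len(points), 0, -1):
        r = beta * opt / s
        while True:
            comp = _find_component(points, dist, remaining, r, s)
            if comp is None:
                break
            L.append(comp)
            # Buffer zone: drop every remaining point within r/2 of the new set.
            remaining = {q for q in remaining
                         if all(dist(points[q], points[p]) > r / 2 for p in comp)}
    return L


if __name__ == "__main__":
    pts = [0.0, 0.1, 0.2, 100.0, 100.1, 100.2]
    print(populate(pts, lambda a, b: abs(a - b), beta=100, opt=0.4))
```

On the toy instance both clusters are found already at s = n, because the clusters are tight relative to $r_s$; the smaller values of s only matter when cluster sizes differ.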

Remainder of the proof:

1. Even with “buffer zones” - still collect cores.

2. #{Components without core pts} in L is $O(1/\beta)$

3. cost(k centers from cores) $\le (1+\varepsilon)\,\mathrm{OPT}$

55

A Note About k-Means

• Roughly the same algorithm, consts $\approx$ squared.

• Problem:

– Can’t guess centers for expensive clusters!

• Solution:

– A random sample of $O(1/\varepsilon)$ pts from each cluster approximates the center of mass.

– Brute force guess $O(1/\varepsilon)$ pts from $O(1/(\beta\varepsilon))$ expensive clusters.

• Better solution:

– Randomly sample $O(1/\varepsilon)$ pts from expensive clusters whose size $\ge$ poly(1/k) fraction of the instance.

– Slight complication: introduce intervals.

• Expected runtime:

56

Conclusion

• World:

ORSS Stable

Weak-Deletion Stable

$\beta$-Distributed

• $\forall \varepsilon > 0$, a $(1+\varepsilon)$-approximation algorithm for $\beta$-distributed instances of k-median / k-means.

• Improve constants?

• Other clustering objectives (k-centers)?

57

Take Home Message

Life gives you a k-median instance.

- “Can you solve it?”

- “NO!!!”

But that’s not new!

Stability = A belief that a PTAS is meaningful

This allows us to introduce a PTAS!

• Stability gives us an “Archimedean Point” that allows us to bypass NP-hardness.

• To what other NP-hard problems does similar logic apply?

58

Thank you!

59

World

All possible instances

BBG Stable+

ORSS Stable

Weak-Deletion Stable

$\beta$-Distributed

60

BBG Result

• We have target clustering.

• k-median is a proxy:

• Target is close to OPT(k).

• Problem:

k-median is NP-hard.

• Solution:

Use approximation alg.

• We would like:

Our $(1+\alpha)$-approx algorithm

outputs a meaningful

k-clustering

61

BBG Result

• We have target clustering.

• k-median is a proxy:

• Target is close to OPT(k).

• Problem:

k-median is NP-hard.

• Solution:

Use approximation alg.

• Implicit assumption:

Any k-clustering with cost at most $(1+\alpha)\,\mathrm{OPT}$ is $\delta$-close (pointwise) to target

63

BBG Result

• Instance is (BBG) stable:

Any two k-partitions with cost $\le (1+\alpha)\,\mathrm{OPT}(k)$ differ over no more than a $(2\delta)$-fraction of the input

• Give algorithm to get $O(\delta/\alpha)$-close to the target.

• Additionally (k-median):

if all clusters’ sizes are $\Omega(\delta n/\alpha)$ then get $\delta$-close to the target.

• Our result:

if BBG-stability & clusters are $> 2\delta n$ then PTAS for k-median (implies: get $\delta$-close to the target).

64

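Claim: BBG-Stability & Large Clusters $\Rightarrow$ $(1+\alpha)$-Weak Deletion Stability

• We know:

(i) Any two k-partitions with cost $\le (1+\alpha)\,\mathrm{OPT}(k)$ differ over $\le 2\delta$ fraction of the input

(ii) All clusters contain $> 2\delta n$ points

• Take optimal k-clustering.

• Take $C^*_i$, move all points but $c^*_i$ to $C^*_j$.

[Figure: clusters with centers $c^*_i$ and $c^*_j$ $\Rightarrow$ $c^*_i$ left on its own, the rest of $C^*_i$ joined to $C^*_j$]

• New partition and OPT differ on $> 2\delta n$ pts $\Rightarrow$

$\mathrm{cost}(\mathrm{OPT}^{i \to j}) \ge \mathrm{cost}(\text{new partition}) \ge (1+\alpha)\,\mathrm{OPT}(k)$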

65

World

All possible instances

BBG Stable+

ORSS Stable

Weak-Deletion Stable

$\beta$-Distributed

66