Ambiguity


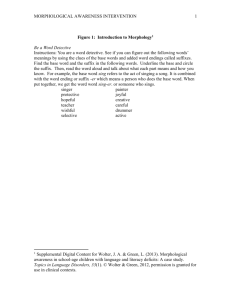

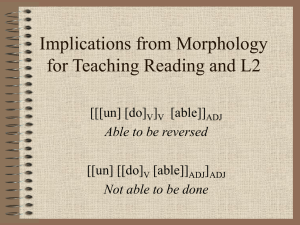

CS60057 Speech & Natural Language Processing
Autumn 2007
Lecture 2, 26 July 2007

Lecture 1, 7/21/2005 Natural Language Processing 1

Why is NLP difficult?

Because natural language is highly ambiguous.

- Syntactic ambiguity: “The president spoke to the nation about the problem of drug use in the schools from one coast to the other.” has 720 parses.
- Ex: “to the other” can attach to any of the previous NPs (e.g. “the problem”) or to the head verb: 6 places.
- “from one coast” has 5 places to attach.
- …

Why is NLP difficult?

- Word category ambiguity: book --> verb? or noun?
- Word sense ambiguity: bank --> financial institution? building? or river side?
- Words can mean more than the sum of their parts: make up a story
- Fictitious worlds: People on Mars can fly.
- Defining scope: People like ice-cream. Does this mean that all (or some?) people like ice cream?
- Language is changing and evolving: I’ll email you my answer. This new S.U.V. has a compartment for your mobile phone. Googling, …

Dealing with Ambiguity

Four possible approaches:

- Tightly coupled interaction among processing levels; knowledge from other levels can help decide among choices at ambiguous levels.
- Pipeline processing that ignores ambiguity as it occurs and hopes that other levels can eliminate incorrect structures.

Resolve Ambiguities

We will introduce models and algorithms to resolve ambiguities at different levels.

- part-of-speech tagging -- deciding whether duck is a verb or a noun.
- word-sense disambiguation -- deciding whether make means create or cook.
- lexical disambiguation -- resolving part-of-speech and word-sense ambiguities are two important kinds of lexical disambiguation.
- syntactic ambiguity -- her duck is an example of syntactic ambiguity, and can be addressed by probabilistic parsing.

Resolve Ambiguities (cont.)
I made her duck

Two parse trees for the sentence (figure):

- [S [NP I] [VP [V made] [NP her] [NP duck]]]
- [S [NP I] [VP [V made] [NP [DET her] [N duck]]]]

Dealing with Ambiguity

Three approaches:

- Tightly coupled interaction among processing levels; knowledge from other levels can help decide among choices at ambiguous levels.
- Pipeline processing that ignores ambiguity as it occurs and hopes that other levels can eliminate incorrect structures (the “syntax proposes, semantics disposes” approach).
- Probabilistic approaches based on making the most likely choices.

Models and Algorithms

- By models I mean the formalisms that are used to capture the various kinds of linguistic knowledge we need.
- Algorithms are then used to manipulate the knowledge representations needed to tackle the task at hand.

Models to Represent Linguistic Knowledge

Different formalisms (models) are used to represent the required linguistic knowledge.

- State machines -- FSAs, HMMs, ATNs, RTNs
- Formal rule systems -- Context Free Grammars, Unification Grammars, Probabilistic CFGs
- Logic-based formalisms -- first order predicate logic, some higher order logic
- Models of uncertainty -- Bayesian probability theory

Algorithms

- Many of the algorithms that we’ll study will turn out to be transducers: algorithms that take one kind of structure as input and output another.
- Unfortunately, ambiguity makes this process difficult. This leads us to employ algorithms that are designed to handle ambiguity of various kinds.

Algorithms

In particular:
- State-space search: to manage the problem of making choices during processing when we lack the information needed to make the right choice
- Dynamic programming: to avoid having to redo work during the course of a state-space search
  - CKY, Earley, Minimum Edit Distance, Viterbi, Baum-Welch

State Space Search

- States represent pairings of partially processed inputs with partially constructed representations.
- Goals are inputs paired with completed representations that satisfy some criteria.
- As with most interesting problems, the spaces are normally too large to explore exhaustively.
  - We need heuristics to guide the search
  - Criteria to trim the space

Dynamic Programming

- Don’t do the same work over and over.
- Avoid this by building and making use of solutions to sub-problems that must be invariant across all parts of the space.

Languages

- Languages: 39,000 languages and dialects (22,000 dialects in India alone)
- Top languages: Chinese/Mandarin (885M), Spanish (332M), English (322M), Bengali (189M), Hindi (182M), Portuguese (170M), Russian (170M), Japanese (125M)
  - Source: www.sil.org/ethnologue, www.nytimes.com
- Internet: English (128M), Japanese (19.7M), German (14M), Spanish (9.4M), French (9.3M), Chinese (7.0M)
- Usage: English (1999: 54%, 2001: 51%, 2003: 46%, 2005: 43%)
  - Source: www.computereconomics.com

The Description of Language

Language = Words and Rules

Dictionary (vocabulary) + Grammar

- Dictionary: set of words defined in the language.
  - Traditional: paper-based
  - Electronic: machine-readable dictionaries; can be obtained from paper-based ones
- Grammar: set of rules which describe what is allowable in a language
  - Classic grammars
    - open (dynamic)
    - meant for humans who know the language
    - definitions and rules are mainly supported by examples
    - no (or almost no) formal description tools; cannot be programmed
  - Explicit grammars (CFG, Dependency Grammars, Link Grammars, ...)
    - formal description
    - can be programmed and tested on data (texts)

Levels of (Formal) Description

6 basic levels (more or less explicitly present in most theories):

- and beyond (pragmatics/logic/...)
- meaning (semantics)
- (surface) syntax
- morphology
- phonology
- phonetics/orthography

Each level has an input and an output representation:

- output from one level is the input to the next (upper) level
- sometimes levels might be skipped (merged) or split

Phonetics/Orthography

- Input: acoustic signal (phonetics) / text (orthography)
- Output: phonetic alphabet (phonetics) / text (orthography)
- Deals with:
  - Phonetics: consonant and vowel (and other) formation in the vocal tract; classification of consonants, vowels, ... in relation to frequencies, shape and position of the tongue and various muscles; intonation
  - Orthography: normalization, punctuation, etc.

Phonology

- Input: sequence of phones/sounds (in a phonetic alphabet); or “normalized” text (sequence of (surface) letters in one language’s alphabet) [NB: phones vs. phonemes]
- Output: sequence of phonemes (~ (lexical) letters; in an abstract alphabet)
- Deals with: relation between sounds and phonemes (units which might have some function on the upper level)
- e.g.: [u] ~ oo (as in book), [æ] ~ a (cat); i ~ y (flies)

Morphology

- Input: sequence of phonemes (~ (lexical) letters)
- Output: sequence of pairs (lemma, (morphological) tag)
- Deals with: composition of phonemes into word forms and their underlying lemmas (lexical units) + morphological categories (inflection, derivation, compounding)

e.g.:
quotations ~ quote/V + -ation (der. V->N) + NNS

(Surface) Syntax

- Input: sequence of pairs (lemma, (morphological) tag)
- Output: sentence structure (tree) with annotated nodes (all lemmas, (morphosyntactic) tags, functions), of various forms
- Deals with: the relation between lemmas and morphological categories and the sentence structure; uses syntactic categories such as Subject, Verb, Object, ...
- e.g.: I/PP1 see/VB a/DT dog/NN ~ ((I/sg)SB ((see/pres)V (a/ind dog/sg)OBJ)VP)S

Meaning (semantics)

- Input: sentence structure (tree) with annotated nodes (lemmas, (morphosyntactic) tags, surface functions)
- Output: sentence structure (tree) with annotated nodes (semantic lemmas, (morphosyntactic) tags, deep functions)
- Deals with: relation between categories such as “Subject”, “Object” and (deep) categories such as “Agent”, “Effect”; adds other categories
- e.g.: ((I)SB ((was seen)V (by Tom)OBJ)VP)S ~ (I/Sg/Pat/t (see/Perf/Pred/t) Tom/Sg/Ag/f)

...and Beyond

- Input: sentence structure (tree) with annotated nodes (autosemantic lemmas, (morphosyntactic) tags, deep functions)
- Output: logical form, which can be evaluated (true/false)
- Deals with: assignment of objects from the real world to the nodes of the sentence structure
- e.g.: (I/Sg/Pat/t (see/Perf/Pred/t) Tom/Sg/Ag/f) ~ see(Mark-Twain[SSN:...],Tom-Sawyer[SSN:...])[Time:bef 99/9/27/14:15][Place:39°19’40”N 76°37’10”W]

Three Views

Three equivalent formal ways to look at what we’re up to (not including tables):

- Regular Expressions
- Finite State Automata
- Regular Languages

Transition

Finite-state methods are particularly useful in dealing with a lexicon. Lots of devices, some with limited memory, need access to big lists of words.
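As a rough illustration of why finite-state methods suit a lexicon, the sketch below compiles a small word list into a deterministic letter-by-letter acceptor (a trie), so that words with a common prefix share states. The word list and the names `build_acceptor` and `accepts` are assumptions made for this example, not part of the lecture.

```python
from typing import Dict, Iterable, Set, Tuple

# transitions[state][letter] -> next state
Transitions = Dict[int, Dict[str, int]]


def build_acceptor(words: Iterable[str]) -> Tuple[Transitions, Set[int]]:
    """Compile a word list into a deterministic finite-state acceptor."""
    transitions: Transitions = {0: {}}  # state 0 is the start state
    finals: Set[int] = set()
    for word in words:
        state = 0
        for letter in word:
            if letter not in transitions[state]:
                # Allocate a fresh state only when the prefix is new.
                new_state = len(transitions)
                transitions[state][letter] = new_state
                transitions[new_state] = {}
            state = transitions[state][letter]
        finals.add(state)  # the state reached at the end of a word accepts
    return transitions, finals


def accepts(transitions: Transitions, finals: Set[int], word: str) -> bool:
    """Follow one transition per letter; accept only in a final state."""
    state = 0
    for letter in word:
        next_state = transitions[state].get(letter)
        if next_state is None:
            return False
        state = next_state
    return state in finals


# Hypothetical toy lexicon.
transitions, finals = build_acceptor(["cat", "cats", "catch", "fox"])
print(accepts(transitions, finals, "cats"))  # True
print(accepts(transitions, finals, "ca"))    # False: a prefix, not a word
print(len(transitions))                      # 10 states for 15 letters of input
```

Because "cat", "cats" and "catch" share the states for c-a-t, the machine grows more slowly than the raw word list, which is the property that matters on devices with limited memory.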
So we’ll switch to talking about some facts about words and then come back to computational methods.

MORPHOLOGY

Morphology

Morphology is the study of the ways that words are built up from smaller meaningful units called morphemes (morph = shape, logos = word).

We can usefully divide morphemes into two classes:

- Stems: the core meaning-bearing units
- Affixes: bits and pieces that adhere to stems to change their meanings and grammatical functions
  - Prefix: un-, anti-, etc.
  - Suffix: -ity, -ation, etc.
  - Infix: inserted inside the stem. Tagalog: um + hingi --> humingi
  - Circumfix: precedes and follows the stem

English doesn’t stack many affixes, but Turkish can have words with a lot of suffixes. Languages that tend to string affixes together, such as Turkish, are called agglutinative languages.

Surface and Lexical Forms

The surface level of a word represents the actual spelling of that word. The lexical level of a word represents a simple concatenation of the morphemes making up that word.

- geliyorum ~ gel +PROG +1SG
- eats ~ eat +AOR
- cats ~ cat +PLU
- kitabım ~ kitap +P1SG

Morphological processors try to find correspondences between lexical and surface forms of words.
- Morphological recognition/analysis: surface to lexical
- Morphological generation/synthesis: lexical to surface

Morphology: Morphemes & Order

- Handles what is an isolated form in written text
- Grouping of phonemes into morphemes
  - sequence deliverables ~ deliver, able and s (3 units)
- Morpheme combination
  - certain combinations/sequencing are possible, others not: deliver+able+s, but not able+derive+s; noun+s, but not noun+ing
  - typically fixed (in any given language)

Inflectional & Derivational Morphology

We can also divide morphology up into two broad classes:

- Inflectional
- Derivational

Inflectional morphology concerns the combination of stems and affixes where the resulting word

- has the same word class as the original
- serves a grammatical/semantic purpose different from the original

After a combination with an inflectional morpheme, the meaning and class of the actual stem usually do not change.

- eat / eats
- pencil / pencils

After a combination with a derivational morpheme, the meaning and the class of the actual stem usually change.

- compute / computer
- do / undo
- friend / friendly
- Uygar / uygarlaş
- kapı / kapıcı

Irregular changes may happen with derivational affixes.

Morphological Parsing

Morphological parsing is finding the lexical form of a word from its surface form.

- cats -- cat +N +PLU
- cat -- cat +N +SG
- goose -- goose +N +SG or goose +V
- geese -- goose +N +PLU
- gooses -- goose +V +3SG
- catch -- catch +V
- caught -- catch +V +PAST or catch +V +PP

There can be more than one lexical-level representation for a given word (ambiguity).

Morphological Analysis

Analyzing words into their linguistic components (morphemes). Morphemes are the smallest meaningful units of language.
- cars ~ car+PLU
- giving ~ give+PROG
- AsachhilAma ~ AsA+PROG+PAST+1st (I/we was/were coming)
- Ambiguity: more than one alternative
  - flies ~ fly(VERB)+PROG or fly(NOUN)+PLU
  - mAtAla kare

Examples:

- fly + s --> flys --> flies (y --> i rule)
- duckling
- go-getter ~ get + er
- doer ~ do + er
- beer ~ ?

What knowledge do we need? How do we represent it? How do we compute with it?

Knowledge needed

- Knowledge of stems or roots
  - duck is a possible root, not duckl
  - we need a dictionary (lexicon)
- Only some endings go on some words
  - do + er: ok
  - be + er: not ok
- In addition, spelling change rules that adjust the surface form
  - get + er: double the t --> getter
  - fox + s: insert e --> foxes
  - fly + s: insert e, y to i --> flies
  - chase + ed: drop e --> chased

Put all this in a big dictionary (lexicon)

- Turkish: approx. 600 × 10^6 forms
- Finnish: 10^7
- Hindi, Bengali, Telugu, Tamil?
- Besides, novel forms can always be constructed: anti-missile, anti-anti-missile, anti-anti-anti-missile, ...
- Compounding of words: Sanskrit, German

Morphology: From Morphemes to Lemmas & Categories

- Lemma: lexical unit, “pointer” to lexicon
  - typically represented as the “base form”, or “dictionary headword”
  - possibly indexed when ambiguous/polysemous: state_1 (verb), state_2 (state-of-the-art), state_3 (government)
  - formed from one or more morphemes (“root”, “stem”, “root+derivation”, ...)
- Categories: non-lexical
  - small number of possible values (< 100, often < 5-10)

Morphology Level: The Mapping

Formally: $A^+ \to 2^{(L, C_1, C_2, \ldots, C_n)}$

- $A$ is the alphabet of phonemes ($A^+$ denotes any non-empty sequence of phonemes)
- $L$ is the set of possible lemmas, uniquely identified
- $C_i$ are morphological categories, such as:
  - grammatical number, gender, case
  - person, tense, negation, degree of comparison, voice, aspect, ...
  - tone, politeness, ...
  - part of speech (not quite a morphological category, but...)
- $A$, $L$ and $C_i$ are obviously language-dependent

Morphological Analysis (cont.)

- Relatively simple for English, but for many Indian languages it may be more difficult.
- Examples: inflectional and derivational morphology.
- Common tools: finite-state transducers

Simple Rules

Adding in the Words

Derivational Rules

Parsing/Generation vs. Recognition

- Recognition is usually not quite what we need.
  - Usually if we find some string in the language we need to find the structure in it (parsing)
  - Or we have some structure and we want to produce a surface form (production/generation)
- Example: from “cats” to “cat +N +PL” and back

Finite State Transducers

The simple story:

- Add another tape
- Add extra symbols to the transitions
- On one tape we read “cats”, on the other we write “cat +N +PL”, or the other way around.

FSTs

Transitions

c:c a:a t:t +N:ε +PL:s

- c:c means read a c on one tape and write a c on the other
- +N:ε means read a +N symbol on one tape and write nothing on the other
- +PL:s means read +PL and write an s

Typical Uses

- Typically, we’ll read from one tape using the first symbol on the machine transitions (just as in a simple FSA).
- And we’ll write to the second tape using the other symbols on the transitions.

Ambiguity

Recall that in non-deterministic recognition multiple paths through a machine may lead to an accept state.
- It didn’t matter which path was actually traversed.
- In FSTs the path to an accept state does matter, since different paths represent different parses and different outputs will result.

Ambiguity

What’s the right parse for “unionizable”?

- union-ize-able
- un-ion-ize-able

Each represents a valid path through the derivational morphology machine.

Ambiguity

There are a number of ways to deal with this problem:

- Simply take the first output found
- Find all the possible outputs (all paths) and return them all (without choosing)
- Bias the search so that only one or a few likely paths are explored

The Gory Details

- Of course, it’s not as easy as “cat +N +PL” <-> “cats”
- As we saw earlier there are geese, mice and oxen
- But there are also a whole host of spelling/pronunciation changes that go along with inflectional changes
  - cats vs. dogs
  - fox and foxes

Multi-Tape Machines

- To deal with this we can simply add more tapes and use the output of one tape machine as the input to the next.
- So to handle irregular spelling changes we’ll add intermediate tapes with intermediate symbols.

Generativity

Nothing is really privileged about the directions. We can write from one and read from the other or vice versa.
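A small sketch of this symmetry, built on the transition labels c:c a:a t:t +N:ε +PL:s from the Transitions slide: one arc table is searched while reading either the lexical or the surface side. The state numbers, the extra +SG:ε arc, and the function names are assumptions made for the illustration.

```python
from typing import List, Tuple

# (source state, lexical symbol, surface symbol, target state); "" stands for ε.
ARCS: List[Tuple[int, str, str, int]] = [
    (0, "c", "c", 1),
    (1, "a", "a", 2),
    (2, "t", "t", 3),
    (3, "+N", "", 4),
    (4, "+PL", "s", 5),
    (4, "+SG", "", 5),  # assumed arc, not on the slide
]
START, FINALS = 0, {5}


def transduce(symbols: List[str], read_side: int,
              state: int = START, pos: int = 0) -> List[List[str]]:
    """Return every output for `symbols`; read_side 0 = lexical, 1 = surface.

    Plain depth-first search; it terminates because this machine has no ε-cycles.
    """
    results: List[List[str]] = []
    if pos == len(symbols) and state in FINALS:
        results.append([])
    for src, lex, surf, dst in ARCS:
        if src != state:
            continue
        read, write = (lex, surf) if read_side == 0 else (surf, lex)
        if read == "":
            next_pos = pos              # ε on the read side consumes nothing
        elif pos < len(symbols) and symbols[pos] == read:
            next_pos = pos + 1
        else:
            continue
        for rest in transduce(symbols, read_side, dst, next_pos):
            results.append(([write] if write else []) + rest)
    return results


def tokenize(text: str) -> List[str]:
    """Split into single letters, keeping tags such as +N as one symbol."""
    tokens: List[str] = []
    for chunk in text.split():
        tokens.extend([chunk] if chunk.startswith("+") else list(chunk))
    return tokens


def generate(lexical: str) -> List[str]:
    return ["".join(out) for out in transduce(tokenize(lexical), 0)]


def analyze(surface: str) -> List[str]:
    return ["".join(" " + s if s.startswith("+") else s for s in out)
            for out in transduce(tokenize(surface), 1)]


print(generate("cat +N +PL"))  # ['cats']
print(analyze("cats"))         # ['cat +N +PL']
print(analyze("cat"))          # ['cat +N +SG']
```

Both functions return lists because an ambiguous machine can yield several outputs for one input.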
One way is generation, the other way is analysis.

Multi-Level Tape Machines

We use one machine to transduce between the lexical and the intermediate level, and another to handle the spelling changes to the surface tape.

Lexical to Intermediate Level

Intermediate to Surface

The add-an-“e” rule, as in fox^s# <-> foxes#

Foxes

Note

- A key feature of this machine is that it doesn’t do anything to inputs to which it doesn’t apply, meaning that they are written out unchanged to the output tape.
- It turns out the multiple tapes aren’t really needed; they can be compiled away.

Overall Scheme

- We now have one FST that has explicit information about the lexicon (actual words, their spelling, facts about word classes and regularity): lexical level to intermediate forms.
- We have a larger set of machines that capture orthographic/spelling rules: intermediate forms to surface forms.
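The overall scheme can be sketched as a two-step cascade. Plain Python functions and a regular expression stand in for the compiled transducers here, so this is an illustration of the data flow rather than an FST implementation. The mini-lexicon, the function names, and the choice of x, s, z as the e-insertion context are assumptions; the slides only show the fox example.

```python
import re

# Hypothetical mini-lexicon: stem -> word class. Only regular nouns are covered.
LEXICON = {"cat": "reg-noun", "dog": "reg-noun", "fox": "reg-noun"}


def lexical_to_intermediate(lexical: str) -> str:
    """Lexicon step: 'fox +N +PL' -> 'fox^s#'.

    '^' marks a morpheme boundary and '#' the end of the word.
    """
    stem, *tags = lexical.split()
    if LEXICON.get(stem) != "reg-noun":
        raise ValueError(f"stem not in the toy lexicon: {stem!r}")
    if tags == ["+N", "+PL"]:
        return f"{stem}^s#"
    if tags == ["+N", "+SG"]:
        return f"{stem}#"
    raise ValueError(f"unsupported tags: {tags}")


def intermediate_to_surface(intermediate: str) -> str:
    """Spelling step: apply e-insertion, then delete the boundary symbols."""
    # e-insertion (assumed context): after x, s or z, the boundary before
    # a word-final s is realised as e, so fox^s# becomes foxes#.
    with_e = re.sub(r"(?<=[xsz])\^(?=s#)", "e", intermediate)
    # Inputs the rule does not apply to pass through unchanged; the
    # remaining boundary symbols are simply dropped.
    return with_e.replace("^", "").replace("#", "")


def generate_surface(lexical: str) -> str:
    """Run the two steps in sequence: lexical -> intermediate -> surface."""
    return intermediate_to_surface(lexical_to_intermediate(lexical))


for form in ["fox +N +PL", "cat +N +PL", "dog +N +SG"]:
    print(form, "->", lexical_to_intermediate(form), "->", generate_surface(form))
# fox +N +PL -> fox^s# -> foxes
# cat +N +PL -> cat^s# -> cats
# dog +N +SG -> dog# -> dog
```

Keeping the lexicon step and the spelling step separate mirrors the split between the lexicon FST and the spelling-rule machines; a real system would compose them into a single transducer.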