
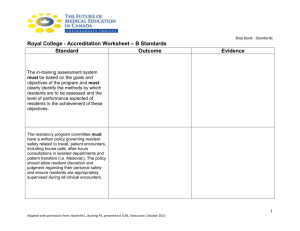

advertisement

ADVANCES IN GME: Mastering Accreditation, Learner Assessment, and the Learning Environment
Robert B. Baron, MD MS
Associate Dean, Graduate and Continuing Medical Education
Designated Institutional Official (DIO), UCSF
Disclosure: No relevant financial relationships or conflicts of interest

Today’s Agenda
• Understanding ACGME Accreditation
• Understanding the Clinical Learning Environment Review (CLER)
• Best Practices in Learner Assessment: Drs. Hung, Rosenbluth, Coffa

Accreditation
• Challenges: extra work, risk of poor outcome
• Opportunity to identify and build on assets and strengthen weaknesses

Definitions
• Accreditation: whether a residency or fellowship is in substantial compliance with established educational standards. Responsibility of the ACGME and its Residency Review Committees (RRCs).
• Certification: whether an individual physician has met the requirements of a particular specialty. Responsibility of the member boards of the American Board of Medical Specialties (ABMS).

Accreditation Basics
• Each Sponsoring Institution is accredited by the ACGME.
  – At UCSF the Sponsoring Institution is the School of Medicine (at many other institutions, including half of medical schools, it is the “Teaching Hospital”).
• Each Program is accredited by the ACGME.

2014–2015 UCSF Demographics
• 1,471 Trainees
  – 931 Residents
  – 279 ACGME/ABMS Fellows
  – 244 Non-ACGME Fellows
  – 17 Non-MD Trainees
• 176 Programs
  – 26 Residencies
  – 60 ACGME/ABMS Fellowships
  – 84 Non-ACGME Fellowships
  – 6 Non-MD Training Programs

27 ACGME Residency Review Committees (RRCs)
• Two major responsibilities:
  – Develop and approve training standards
  – Review and accredit residency and fellowship programs
• Remember: they are us!

ACCREDITATION RESOURCES
• Office of GME staff and faculty
• Other UCSF program directors and coordinators
• Other program directors in your specialty
• Attend regional and national meetings
• Call the RRC staff (but call us first to discuss)

Why Did ACGME Create a New (“Next”) Accreditation System (NAS)?
• Reduce the burden of accreditation
• Free good programs to innovate
• Assist poor programs to improve
• Realize the promise of the Outcomes Project
• Provide public accountability for GME outcomes

NAS Big Picture
• Less prescriptive program requirements that promote curricular innovation
• Continuous accreditation model
• Annual monitoring of programs based on performance indicators/outcomes
• Holding sponsoring institutions responsible for oversight of educational and clinical systems

The Building Blocks of the Next Accreditation System
• Program Self-Study Visits: every 10 years
• Institutional Self-Study Visits: every 10 years
• Additional Site Visits as needed
• Continuous RRC Oversight and Accreditation
• Core Program Oversight of Subspecialty Programs
• Sponsor Oversight for All
• CLER Visits every 18 months

How Is the Burden Reduced?
• No Program Information Forms (PIFs)
• Scheduled program visits from ACGME every 10 years
• Focused site visits when “issues” are identified
• Formal mid-cycle internal reviews no longer required
• Most data elements used in NAS are already in place in the Accreditation Data System (ADS)
• Streamlined ADS annual update:
  – Removed 33 questions
  – 14 questions simplified
  – Faculty CVs removed (except for Program Director)
  – 11 multiple-choice or yes/no questions added

Categorized Program Requirements
Program requirements (PRs) are now categorized as core, detail, and outcome.
• Core: statements that define structure, resource, or process elements essential to every program
• Detail: statements that describe a specific structure, resource, or process for achieving compliance with a Core Requirement
• Outcome: statements that specify expected measurable or observable attributes (knowledge, abilities, skills, or attitudes) of trainees at key stages of their education

Categorization of Program Requirements: Reduce Burden + Promote Innovation
• Why is this important?
  – Programs in good standing can innovate; they are not asked whether they adhere to detailed PRs.
  – But: detailed PRs do not go away.
    PDs will not need to demonstrate compliance with these PRs unless it becomes evident that a particular outcome or core PR is not being met.
• Read your requirements…

10-Year Self-Study
• Addresses:
  1) Citations, areas for improvement, and other information from ACGME
  2) Strengths and areas for improvement identified by:
     – Annual Program Evaluation (APE)
     – Other program/institutional sources
     – Compliance with core requirements, faculty development, etc.
• Data from the entire period will be used

Outcome Data for Annual Review
• Program attrition
• Program changes
• Scholarly activity (faculty and trainees)
• Board pass rate (from Boards)
• Clinical experience (case logs, survey data)
• Resident survey
• Faculty survey
• Milestones
• (CLER visit data)

2014 ACGME Resident/Fellow and Faculty Surveys: Overall Evaluation of the Program
Rating           Resident/Fellow Survey   Faculty Survey
Very Positive    66%                      89%
Positive         27%                      10%
Neutral          6%                       1%
Negative         <1%                      0%
Very Negative    <1%                      0%

UCSF Areas for Improvement, 2014 Aggregated Survey
• Duty hours: 93%
• Confidential evaluations: 81%
• Use evaluations to improve: 72%
• Feedback after assignments: 61%
• Education compromised: 66%
• Data about practice habits: 48%
• Transition care if fatigued: 78%
• Raise concerns without fear: 82%

RRC Letters of Notification
• Citations
  – Levied by RRC without a site visit
  – Linked to program requirements
  – Reviewed annually by RRC
  – Reviewed during site visits
  – Removed (quickly) based on a progress report, site visit, or new annual data; older ones removed after two years
• Areas for Improvement (AFI)
  – Annual data raises an issue.
  – “General concerns”
  – May be given by staff
  – Not linked to a program requirement
  – No response required
  – Slate remains clean, based on each year’s submissions
  – Not the same as citations

Special Reviews
• Replace the time-based, formal mid-cycle Internal Review
• Three types:
  – Initial review (prior to first site visit)
  – Periodic review (1–2 years prior to first self-study)
  – Special review (programs with relative underperformance as reviewed by the Graduate Medical Education Committee, GMEC)

ACGME Update
• ACGME resident, fellow, and faculty surveys
• ACGME RRC notifications, especially site visits
• UCSF duty hour reports
• UCSF Program Directors Annual Update

Subspecialty (Fellowship) Programs
• In NAS, core residency and subspecialty programs are reviewed together
• Self-study visits will assess both together
• Letters of Notification will include both
• Ensures that core residency and subspecialty programs use resources effectively

New ACGME Program Requirement
“The specialty-specific Milestones must be used as one of the tools to ensure residents are able to practice core professional activities without supervision upon completion of the program.” (Core)

ACGME Reporting Milestones (example: Internal Medicine)

Milestones Defined
• Milestones are NOT an evaluation tool. Milestones are a reporting instrument.
• The Clinical Competency Committee (CCC) of each program will review assessment data.
• The CCC will take the data and apply them to the milestones to mark the progress of a resident.

Milestones Defined
• Meaningful, measurable markers of progression of competence
  – What abilities does the trainee possess at a given stage?
  – What can the trainee be entrusted with?
Learner Assessment Skeleton
• In-training exam (or other knowledge tests)
• End-of-rotation assessments (global assessment; fewer, more focused)
• Direct observations (CEX, on-the-fly, checklists, procedures, etc.)
• Multi-source feedback (self, peers, students, other professional staff, patients)
• Learner portfolio (clinical experience such as case logs, conference presentations, QI work, scholarship, teaching, reflection, learning plans, etc.)

Neurology Milestones: Milestones Related to Competencies (and Subcompetencies)

Entrustable Professional Activities (EPAs)
• Define important clinical activities
• Link competencies/milestones
• Include professional judgment of competence by clinicians

EPA Defined
A core unit of work reflecting a responsibility that should only be entrusted upon someone with adequate competencies.
Ole ten Cate, Medical Teacher 2010;32:669-675

Competencies versus EPAs
• Competencies are person-descriptors (knowledge, skills, attitudes, values):
  – content expertise
  – collaboration ability
  – communication ability
  – management ability
  – professional attitude
  – scholarly habits
• EPAs are work-descriptors (essential parts of professional practice):
  – discharge patients
  – counsel patients
  – design treatment plans
  – lead family meetings
  – perform paracenteses
  – resuscitate if needed

EPA Examples
• Caring for an acute stroke patient
• Discharging a patient from the hospital and preventing readmissions
• Conducting a family meeting about withdrawal of support
• Driving a car at night (in the rain, on the freeway)

The Competency-EPA Framework
(Matrix relating EPA1 through EPA5 to the competencies Medical Knowledge, Patient Care, Communication, Professionalism, Systems-Based Practice (SBP), and Practice-Based Learning and Improvement (PBLI), with cells marked “+” or “++”.)

Clinical Competency Committee (CCC)
• Each program/program director will be required to form a Clinical Competency Committee (CCC)
• Composition: a minimum of three faculty; non-physician members and senior residents are also acceptable
• Reviews all evaluations
• Reports milestones to ACGME
• Recommends to the PD regarding promotion, remediation, and dismissal

CCC Processes
• Consensus-based recommendations
• Respect personal privacy
• Objective, behavior-based assessments
• Summary minutes taken by the program coordinator
• Various trainee review strategies will work
• Identify areas of CCC and program weakness for annual review

Program Evaluation Committee
• Required for each residency and fellowship
• Must have a written description
• Appointed by the Program Director (PD)
• Oversees curriculum development and program evaluation (APE)
• PD may be chair or appoint a chair
• At least two faculty and one resident or fellow
• Must meet (at least) annually

Program Evaluation Committee
• Review and revise goals and objectives
• Address areas of ACGME non-compliance
• Review the program using evaluations of faculty, residents, and others
• Write an Annual Program Evaluation (APE), with 3–5 action items
• Track: resident performance, faculty performance, graduate performance (including Boards), program quality, and progress on the previous year’s action plans

CLER Visit: December 2–4, 2014
• Team of four visitors
• Met with:
  – Senior leadership
  – 70 residents and fellows
  – 70 program directors
  – 70 teaching faculty
  – Walking rounds of 30 clinical areas
• Spoke to residents, fellows, nurses, techs, etc.
• Observed three end-of-shift hand-offs

In the findings below, three values are given in the order Residents/Fellows | Program Directors | Faculty.

Findings: Patient Safety
• Knew UCSF Medical Center patient safety priorities: 55% | 82% | 83%
• Received formal education/training about patient safety: 92%*
• Believed UCSF Medical Center provides a safe, nonpunitive environment for reporting errors, near misses, and unsafe conditions: 90%
• Experienced an adverse event or near miss: 75%** 86% 84% 82% 70%
• Believed less than half of trainees have reported a patient safety event using the incident reporting (IR) system
• Opportunity to participate in a root cause analysis (RCA): 41%
* On walking rounds, knowledge of terminology and principles varied.
** Of those, 44% reported the event; 13% relied on a nurse to report; 31% relied on a physician supervisor; 11% didn’t submit a report.

Findings: Healthcare Quality
• Knew UCSF Medical Center healthcare QI priorities: 59% | 67% | 75%
• Engaged with Medical Center leadership in developing and advancing quality strategy: 12%
• Participated in a QI activity directed by Medical Center administration: 78%
• Participated in a QI project of their own design or one designed by their program/department: 88%
• Residents/fellows have access to organized systems for collecting/analyzing data for the purpose of QI: 63% | 83% | 72%

Findings: Transitions in Care
• Knew UCSF Medical Center priorities for improving transitions of care: 62% | 73% | 87%
• Use a standardized process for sign-off and transfer of patient care during change of duty: 83%
• Use written templates of patient information to facilitate the hand-off process: 65%
• Use standardized processes for transfers of patients between floors/units: 53%
• Use standardized processes for transfers from inpatient to outpatient care: 57%
• During walking rounds:
  – Nurses and trainees expressed concerns about patient transfers from one level of care to another
  – Observed hand-offs varied in use of templates, style of template, and format/level of information detail relayed
  – Faculty were present in only one observed hand-off

Findings: Supervision
• Residents/fellows always know what they are allowed to do with and without direct supervision: 97% | 100% | 100%
• Have been placed in a situation, or witnessed a peer in a situation, at UCSF Medical Center where they believed there was inadequate supervision: 22%
• Have an objective way of knowing which procedures a particular resident/fellow is allowed to perform with or without direct supervision: 93%, 92%
• In the past year, had to manage an issue of resident/fellow supervision that resulted in a patient safety event: 27%
• Perception of patients’ awareness of the different roles of residents/fellows and attending physicians: 22% | 56% | 23%

Findings: Duty Hours, Fatigue Management, and Mitigation
• Received education on fatigue management and mitigation: 90% | 92% | 73%
• Scenario: a maximally fatigued resident two hours before the end of a shift; what would you (or residents in general) do?
  – “Power through”: 37% | 10% | 24%
• Underreporting of moonlighting time by residents and fellows: 20%
• Recalled a patient safety event related to trainee fatigue: 8%

Findings: Professionalism
• Received education on various professionalism topics during orientation: 78%
• Received education on various professionalism topics throughout training: 74%
• Believe UCSF Medical Center provides a supportive, non-punitive environment for bringing forward concerns regarding honesty in reporting: 88%
• While at UCSF Medical Center, felt pressure on at least one occasion to compromise integrity to satisfy an authority figure: 20%
• Documented a history or physical finding in a patient chart that they did not personally elicit: 64%
• Believe the majority of residents/fellows have documented a history or physical finding in a patient chart that they did not personally elicit: 51%; faculty: 48%

Summary: UCSF CLER Opportunities
• Continued work on MD incident reporting
• Better feedback and dissemination of IR results
• Increase participation in RCAs
• Greater engagement of housestaff in QI strategy
• More analysis and dissemination of clinical outcomes in vulnerable populations
• More standardization of handoffs (all clinicians)
• Better fatigue mitigation
• Enhanced EHR professionalism

Summary: ACGME NAS
• The NAS started in July 2013
• More work early. Less burden long term?
• Greater opportunity for innovation for high-functioning programs
• Better learner assessment and outcome measurement
• Much higher expectations regarding learner engagement in the clinical environment
• Ten-year cycles and self-studies (PDSA, SWOT, etc.)
• Greater public accountability

Keeping an “E” in GME
• Meet your program requirements, but be innovative
• Collaborate at UCSF and nationally
• Work hard on your annual program evaluation and continuous improvement processes
• Support our residents and fellows