Experimental Methods in Management Accounting Research

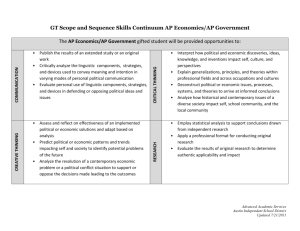

Critiquing Your Own and Others’ Experiments

What is the purpose of an experiment?
• To test theory under highly controlled conditions, and thus . . .
• . . . to decide among alternative explanations for observed phenomena.
  – “Real world” data often does not support decisive tests of plausible competing theories

Critiques of experiments should be based on . . .
• Quality of theory on which experiment is based
• Quality of operationalization of theory
• Quality of experimental control and analysis

Critiques of experiments often are based on . . .
• Observations of differences between experimental setting and real world.
• Such criticism may be
  – (a) devastating, or
  – (b) vacuous

Re-creating the real world
• Someone asked an American poet: “Should literature try to re-create the real world?”
• Answer:
  – “No. One of the damned things is enough.”

What features of the real management-decision environment must be captured in experiments?

Shoes don’t matter . . .
• How do we know what does matter?
• What factors should be present, manipulated, measured, controlled for . . . ?
• Answer must be theory-based.

Management accounting research draws on two experimental traditions
• Experimental economics
• Experimental psychology
• Different traditions of “theory” and “control”
  – but both stress value of artificiality for control purposes.

Common errors to avoid . . .
• Don’t “work with underspecified, vague, or nonexistent theories and try to generalize anyway by applying findings directly.”
  – Swieringa and Weick, JAR 1982

Example
• The purpose of an experiment is to test theory. Statements like this are not theories:
  – “More accurate information is better.”
  – “Teamwork is better.”
  – “Fair reward systems are better.”

By contrast . . .
Two theory-based experimental studies on cost information quality

1.
Callahan and Gabriel, CAR 1998
– Accuracy: less noise in reports of marginal cost (expected marginal cost is known)
– Subjects choose production quantity (price) in Cournot (Bertrand) duopolies.
– Greater accuracy increased profits in Cournot markets but decreased profits in Bertrand markets

2. Drake 1999
• Cost information = breakdown of overhead costs by activity/feature of product (vs. lump-sum overhead for product)
• Subjects negotiate contract for sale of components (price and component features).
• More detailed cost info ==> increase in buyer/seller common surplus when data shared, but also less information sharing.

Callahan & Gabriel study is unrealistic . . .
• Nineteen-year-olds interacting via computer networks are not representative of important interactions in the economy.
• Small sums of money involved.
• Individual, not institutional, decisions.
• Expected marginal costs known and identical across firms
• One-period world.

Does the lack of realism matter?
• Does the experiment misrepresent the underlying theory by (e.g.) using nineteen-year-olds and small sums of money?
• Does the theory misrepresent important real-world phenomena?

Theories
• Economics
  – A theory of the domain
  – Other social sciences don’t tell us much about production functions, cost functions, market structures . . .
• Psychology
  – A theory of the people in the domain
  – Economics does not have very plausible theories of cognition or preferences.

Control
• Experimental Economics: control through specification
  – If it’s not in the theory, find ways of keeping it out of the lab!
• Experimental Psychology: control through comparisons
  – If it’s not in the theory, find ways of holding it constant!

Experimental Economics
• Start with a model that specifies (e.g.)
  – Actions
  – Outcomes & probability distributions of outcomes conditional on actions
  – Payoffs
  – Utility functions
  – Information & communication structure
  – Resulting equilibrium

Control through specification
• If it’s in the model, operationalize it in the lab!
  – Induce utility functions in model
• If it’s not in the model, keep it out of the lab!
  – Keep task and information abstract

Example: concrete vs. abstract
• U = U(w, a)
• where w = wealth and a = effort,
• U’(w) > 0, U’(a) < 0
• Some experiments use monetary payoffs to represent both w and a. Is this a problem?
  – Operationalize the math, not the words.

A few problems . . .
• Most models have simple utility functions, U = U(w, a). People bring other preferences to the lab with them.
• Experimental econ solution: be sure monetary payoffs dominate other considerations.

How easy (possible) is it to achieve dominance in the lab?
– Baiman and Lewis 1989
  • Easy
– Evans et al. 1999
  • Not easy.
– Kachelmeier and Towry 1999
  • Depends on context.
– What game do people think they’re playing? This probably matters in both lab and ‘real world.’

A few problems, cont. . . .
• We want to test interesting models
• Interesting models often have
  – Surprising (unintuitive) solutions, or
  – Solutions that are too hard to work out intuitively

This means . . .
• People in the lab will not (at first) do what interesting models say they will do.
• They may do so eventually, with appropriate incentives and learning opportunities, but . . .

Lab tests must allow for
• Difficulty of problem, time & practice to figure it out, “costs of thinking . . .”
  – Multiple trials used to test one-period models.
• But these are auxiliary assumptions tacked onto the model, with little theoretical basis.

Experimental Psychology: Control through Comparisons
• Economics-based experiments can in principle have just one experimental condition and test for equilibrium in the given model.
• Psychology doesn’t do one-condition experiments. Structured designs:
  – 1 x 4, 3 x 3,
  – 2 x 2 x 2 x 2 . . .

Assumption: we can’t convincingly specify everything . . .
• We won’t succeed in restricting people’s utility functions to one argument and clearing everything out of their brains except the conditional probability distribution of payoff exactly as represented with the bingo cage.
• So . . .

Control through comparisons
• Rather than try to eliminate nuisance factors, hold them constant. Create multiple experimental conditions that differ only on the variables of interest.
• Test for differences between conditions, and for differences in differences (interactions), to deal with factors that cannot be held constant.

Example: Vera-Muñoz, TAR 1998
• Is “thinking accounting” different from “thinking management”? Is a financial-statement-based model of the firm a poor basis for management decision-making?
• Too much accounting training ingrains in people mental models of business based on financial statements, leading them to think (e.g.) in historical-cost, not opportunity-cost, terms.

Experimental Design
• Task. Make a recommendation about when to relocate a store that will lose its lease next year.
• Difference: Subjects who have taken more accounting courses omit more opportunity costs.

• Problem!
  – People who have taken more accounting courses (M.S. students vs. MBAs) might not only be more financial-statement-minded.
  – They might be stupider, less motivated, have less understanding of business . . .
• Solution
  – Differences in differences: an interaction test.
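
The logic of a differences-in-differences test can be sketched with a small calculation. The Python sketch below is illustrative only: it assumes two groups (high vs. low accounting training) and two identically structured tasks (a focal task and a comparison task), and every number in it is invented for illustration rather than taken from any study. The names `omitted`, `simple_difference`, and `difference_in_differences` are hypothetical. If the high-training group were simply less able or less motivated, the gap between groups should appear in both tasks and cancel in the contrast; a gap that appears only in the focal task survives it.

```python
from statistics import mean

# Hypothetical data: opportunity costs omitted by each subject in each cell.
# All values are invented for illustration; they are not results from any study.
omitted = {
    ("high_training", "focal_task"): [4, 5, 3, 4],
    ("low_training", "focal_task"): [2, 1, 2, 3],
    ("high_training", "comparison_task"): [1, 2, 1, 2],
    ("low_training", "comparison_task"): [1, 1, 2, 2],
}


def cell_mean(group, task):
    """Mean number of omitted opportunity costs in one cell of the 2 x 2 design."""
    return mean(omitted[(group, task)])


def simple_difference(task):
    """High-training mean minus low-training mean within a single task."""
    return cell_mean("high_training", task) - cell_mean("low_training", task)


def difference_in_differences():
    """Interaction contrast: the group gap in the focal task minus the gap in the comparison task."""
    return simple_difference("focal_task") - simple_difference("comparison_task")


if __name__ == "__main__":
    print("Gap in focal task:", simple_difference("focal_task"))
    print("Gap in comparison task:", simple_difference("comparison_task"))
    print("Difference in differences:", difference_in_differences())
```

This computes only the interaction contrast from cell means. Judging whether such a contrast is reliable would require a statistical test (for example, the interaction term in a two-way ANOVA), which is not shown here.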
Interaction: 2 x 2 design
• Two identically structured tasks given to subjects with high or low accounting training
  – Recommend when a store that will lose its lease should move (business context)
  – Recommend when an individual who will lose his data processing job should move (personal context)

Differences in differences
• Subjects pick up most of the opportunity costs in the personal context, regardless of how many accounting courses they’ve taken, but
• Subjects with many accounting courses omit opportunity costs in the business context.

Control through comparisons . . .
• . . . Often requires the inclusion of conditions that are not ‘realistic’ or interesting in themselves.
• Criticisms like this are inappropriate:
  – “$18,000-a-year data clerks don’t go to professional accountants or consultants for advice about when to leave a job. This condition is unrealistic and shouldn’t be in the experiment.”

The value of artificiality (again) . . .
• “Situations which are rare in the natural world are often ideal to test theoretical derivations.”
  – Swieringa and Weick 1982

Another interaction example . . .
• Luft & Shields 1999
  – Cognitive value of nonfinancial reporting of quality measures (vs. cost of quality)
  – Field-based literature sometimes argues that relations of NF measures are more transparent, easier for ordinary employees to understand.

Experiment
– Subjects examine data on % defects (rework & spoilage expense) and profits.
– r(defects, profit) = r(rework $$, profit)
– Ss detect the relation between past quality and future profits more accurately with the nonfinancial measure.
– Profit prediction task used to measure detection of relation.

But . . .
• Maybe people bring different priors about % defects and rework $$ to the task, and so they make different profit predictions even though they see the same relations in the sample data provided.
• Maybe the problem isn’t what people can or can’t ‘see’ in eyeballing the data.
  Other factors are affecting their judgments.

So there’s an additional experimental condition . . .
• . . . In which Ss are given a statistical analysis of the defects (NF) - profit, or rework (F) - profit relation in the sample data.
• If people make worse profit predictions in the rework condition because they can’t see the correlation in the raw data as well in this condition, then providing the stats should solve the problem. If people have other reasons for predicting differently in this condition, providing the stats shouldn’t solve the problem.

Experiments, models, and the ‘real world’
• Empirical research outside the lab defines important problems, documents prevailing practices, and provides limited evidence for or against theory.
• Analytical modeling develops theories about how key variables affect each other.
• Experimental research tests (competing) theories under highly controlled conditions.