Slides

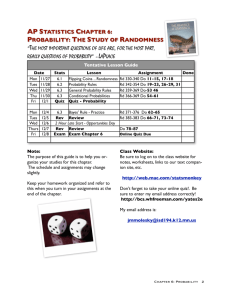

Machine Learning in Practice, Lecture 7
Carolyn Penstein Rosé
Language Technologies Institute / Human-Computer Interaction Institute

Plan for the Day
- Announcements
  - No new homework this week
  - No quiz this week
  - Project proposal due by the end of the week
- Naïve Bayes Review
- Linear Model Review
- Tic Tac Toe across models
- Weka Helpful Hints

Project proposals
- If you are using one of the prefabricated projects on Blackboard, let me know which one
- Otherwise, tell me what data you are using
  - Number of instances
  - What you're predicting
  - What features you are working with
- 2 sentence description of what your ideas are for improving performance
- If convenient, let me know what the baseline performance is

Example of ideas: How could you expand on what's here?
- Add features that describe the source
- Add features that describe things that were going on during the time when the poll was taken
- Add features that describe personal characteristics of the candidates

Getting the Baseline Performance
- Percent correct
- Percent correct, controlling for correct by chance
- Performance on individual categories
- Confusion matrix

* Right click in Result list and select Save Result Buffer to save performance stats.

Clarification about Cohen's Kappa

Assume 2 coders were assigning instances to category A or category B, and you want to measure their agreement.

| Coder 1's codes | Coder 2: A | Coder 2: B | Total |
|---|---|---|---|
| A | 5 | 2 | 7 |
| B | 1 | 8 | 9 |
| Total | 6 | 10 | 16 (OverallTotal) |

Total agreements = 5 + 8 = 13

Percent agreement = 13/16 = .81

$$\text{Agreement by chance} = \sum_i \frac{\text{Row}_i \times \text{Col}_i}{\text{OverallTotal}} = \frac{7 \times 6}{16} + \frac{9 \times 10}{16} = 2.63 + 5.63 \approx 8.3$$

$$\text{Kappa} = \frac{\text{TotalAgreement} - \text{Agreement by chance}}{\text{OverallTotal} - \text{Agreement by chance}} = \frac{13 - 8.3}{16 - 8.3} = \frac{4.7}{7.7} = .61$$

Naïve Bayes Review

Naïve Bayes Simulation
You can modify the class counts and the counts for each attribute value within each class.
You can also turn smoothing on or off. Finally, you can manipulate the attribute values for the instance you want to classify with your model.

Linear Model Review

What do linear models do?
- Notice that what you want to predict is a number
- You use the number to order instances
- Result = 2*A - B - 3*C
- Actual values between 2 and -4, rather than between 1 and 5, but order is the same
- Order affects correlation, actual value affects absolute error
- You want to learn a function that can get the same ordering
- Linear models literally add evidence

What do linear models do?
- If what you want to predict is a category, you can assign values to ranges
- Sort instances based on predicted value
- Cut based on threshold, i.e., Val1 where f(x) < 0, Val2 otherwise

What do linear models do?
$$F(x) = X_0 + C_1 X_1 + C_2 X_2 + C_3 X_3$$
- $X_1$ to $X_n$ are our attributes
- $C_1$ to $C_n$ are coefficients
- We're learning the coefficients, which are weights
- Think of linear models as imposing a ranking on instances
  - Features associated with one class get negative weights
  - Features associated with the other class get positive weights

More on Linear Regression
- Linear regressions try to minimize the sum of the squares of the differences between predicted values and actual values for all training instances

$$\sum_{\text{instances}} (\text{predicted value of instance} - \text{actual value of instance})^2$$

- Note that this is different from back propagation for neural nets, which minimizes the error at the output nodes considering only one training instance at a time
- What is learned is a set of weights (not probabilities!)

Limitations of Linear Regressions
- Can only handle numeric attributes
- What do you do with your nominal attributes?
  - You could turn them into numeric attributes
    - For example: red = 1, blue = 2, orange = 3
    - But is red really less than blue? Is red closer to blue than it is to orange?
  - If you treat your attributes in an unnatural way, your algorithms may make unwanted inferences about relationships between instances
  - Another option is to turn nominal attributes into sets of binary attributes

Performing well with skewed class distributions
- Naïve Bayes has trouble with skewed class distributions because of the contribution of prior probabilities
- Linear models can compensate for this
  - They don't have any notion of prior probability per se
  - If they can find a good split on the data, they will find it wherever it is
  - Problem if there is not a good split

Skewed but clean separation

Skewed but no clean separation

Tic Tac Toe

O X X
X O O
X O X

- What algorithm do you think would work best?
- How would you represent the feature space?
- What cases do you think would be hard?
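
One possible way to connect the linear model formula, the "sets of binary attributes" option, and the feature-space question is sketched below. It is only an illustration under stated assumptions: the value names `x`/`o`/`b`, the helper names, and the weights (+1 for an X, -1 for an O) are all hypothetical and were not learned from data or taken from the lecture. Only the board and the Val1/Val2 threshold rule come from the slides.

```python
# Hypothetical illustration: helper names, value names, and weights are
# assumptions made for this sketch, not part of the lecture materials.

SQUARE_VALUES = ["x", "o", "b"]  # assumed nominal values; "b" means blank


def one_hot(value, values=SQUARE_VALUES):
    """Turn one nominal value into a list of 0/1 binary attributes."""
    return [1 if value == v else 0 for v in values]


def encode_board(board):
    """Encode a 9-square board (string of x/o/b) as 27 binary attributes."""
    features = []
    for square in board:
        features.extend(one_hot(square))
    return features


def linear_score(features, weights, intercept=0.0):
    """F(x) = X0 + C1*X1 + ... + Cn*Xn, with X0 as the intercept."""
    return intercept + sum(c * x for c, x in zip(weights, features))


def classify(score, threshold=0.0):
    """Cut on a threshold: Val1 where the score is below it, Val2 otherwise."""
    return "Val1" if score < threshold else "Val2"


def sum_squared_error(predicted, actual):
    """The quantity a linear regression tries to minimize over training data."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual))


board = "oxxxooxox"  # the board from the slides, read row by row
features = encode_board(board)
weights = [1, -1, 0] * 9  # assumed weights: +1 per x, -1 per o, 0 per blank
score = linear_score(features, weights)
print(len(features), score, classify(score))
```

With binary attributes, no ordering such as "x is less than o" is implied; each value of each square simply gets its own weight.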
Tic Tac Toe

O X X
X O O
X O X

- Decision Trees: .67 Kappa
- SMO: .96 Kappa
- Naïve Bayes: .28 Kappa
- What do you think is different about what these algorithms are learning?

Decision Trees

Naïve Bayes
- Each conditional probability is based on each square in isolation
- Can you guess which square is most informative?

Linear Function
- Counts every X as evidence of winning
- If there are more X's, then it's a win for X
- Usually right, except in the case of a tie

Take Home Message
- Naïve Bayes is affected by prior probabilities in two places
  - Note that prior probabilities have an indirect effect on all conditional probabilities
- Linear functions are not directly affected by prior probabilities
  - So sometimes they can perform better on skewed data sets
- Even with the same data representation, different algorithms learn something different
  - Naïve Bayes learned that the center square is important
  - Decision trees memorized important trees
  - Linear function counted Xs

Weka Helpful Hints
- Use the visualize tab to view 3-way interactions
- Click in one of the boxes to zoom in
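
The Kappa values quoted for the Tic Tac Toe comparison use the same statistic worked through in the Cohen's Kappa clarification earlier in the lecture. As a check on that arithmetic, here is a minimal sketch that recomputes it from the 2x2 agreement table. The function name `cohens_kappa` and the table layout (rows for one coder, columns for the other) are assumptions made for this example.

```python
def cohens_kappa(table):
    """Cohen's kappa from a square agreement table.

    table[i][j] is the number of instances one coder assigned to
    category i and the other coder assigned to category j.
    """
    total = sum(sum(row) for row in table)
    agreements = sum(table[i][i] for i in range(len(table)))
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    # Agreement expected by chance: sum over categories of Row_i * Col_i / total
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / total
    return (agreements - chance) / (total - chance)


# Counts from the clarification slide: A/A = 5, A/B = 2, B/A = 1, B/B = 8
table = [[5, 2], [1, 8]]
kappa = cohens_kappa(table)
print(round(kappa, 2))  # 0.61
```

Without intermediate rounding, chance agreement is 8.25 and kappa is 4.75 / 7.75, which still rounds to the .61 shown on the slide.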