Neurocognitive inspirations in Natural Language Processing

Cognitive Architectures:

Where do we go from here?

Włodek Duch

Department of Informatics,

Nicolaus Copernicus University, Poland

Google: W. Duch

Richard J. Oentaryo and Michel Pasquier

School of Computer Engineering,

Nanyang Technological University, Singapore

AGI, Memphis, 1-2 March 2007

Plan

Overview of cognitive architectures suitable for AGI.

• AI failures

• Grand challenges for AGI

• Symbolic cognitive architectures

• Emergent cognitive architectures

• Hybrid cognitive architectures

• Where do we go from here?

Failures of AI

Many ambitious general AI projects failed, for example:

A. Newell, H. Simon, General Problem Solver (1957).

Eduardo Caianiello (1961) – mnemonic equations explain everything.

5th generation computer project 1982-1994.

AI has failed in many areas:

• problem solving, reasoning

• flexible control of behavior

• perception, computer vision

• language ...

Why?

• Too naive?

• Not focused on applications?

• Not addressing real challenges?

Ambitious approaches…

CYC, started by Douglas Lenat in 1984, commercial since 1995.

Developed by CyCorp, with 2.5 million assertions linking over

150,000 concepts and using thousands of micro-theories (2004).

Cyc-NL is still a “potential application”, knowledge representation in

frames is quite complicated and thus difficult to use.

HAL baby brain – developmental approach, www.a-i.com

Open Mind Common Sense Project (MIT): a WWW collaboration with over

14,000 authors, who contributed 710,000 sentences; used to generate

ConceptNet, very large semantic network.

Some interesting projects are being developed now around this network but

no systematic knowledge has been collected.

Other such projects:

HowNet (Chinese Academy of Science),

FrameNet (Berkeley), various large-scale ontologies,

MindNet (Microsoft) project, to improve translation.

Mostly focused on understanding all relations in text/dialogue.

Challenges: language

• Turing test – original test is too difficult.

• Loebner Prize competition, for almost two decades

played by chatterbots based on template or contextual

pattern matching – cheating can get you quite far ...

• A “personal Turing test” (Carpenter and Freeman), with programs

trying to impersonate real personally known individuals.

• Question/answer systems; Text Retrieval Conf. (TREC) competitions.

• Word games, 20-questions game - knowledge of objects/properties,

but not about complex relations between objects. Success in learning

language depends on automatic creation, maintenance and the ability

to use large-scale knowledge bases.

• Intelligent tutoring systems? How to define milestones?

Challenges: reasoning

• Super-expert system in a narrow domain (Feigenbaum), needs a lot

of general intelligence to communicate, should reason in math,

bioscience or law, experts will pose problems, probe understanding.

• Same direction, but without NLP: Automated Theorem Proving (ATP)

System Competitions (CASC) in many sub-categories.

• General AI in math: general theorem provers, perhaps using metalearning techniques with specialized modules + NLP.

• Automatic curation of genomic/pathways databases, creation of

models of genetic and metabolic processes for bioorganisms.

• Partners that advise humans in their work, evaluating their reasoning

(theorem checking), adding creative ideas, interesting associations.

Real AGI?

• General purpose systems that can be taught skills needed to

perform human jobs, and to measure which fraction of these jobs can

be done by AI systems (Nilsson, Turing’s “child machine”).

• Knowledge-based information processing jobs – progress measured by

passing a series of examinations, ex. accounting.

• Manual labor requires sensorimotor coordination, harder to do?

• DARPA Desert & Urban Challenge competitions (2005/07), old

technology, integration of vision, signal processing, control, reasoning.

• Humanoid robotics: understanding of perception, attention, learning

causal models from observations, hierarchical learning with different

temporal scales.

• “Personal Assistants that Learn” (PAL), DARPA 2007 call, SRI+21 inst.

5-year project to create partners/personal assistants, rather than

complete replacements for human workers (also CMU RADAR).

• Many jobs in manufacturing, financial services, printing houses etc

have been automated by alternative organization of work, not AI.

Cognitive architectures

• CA frequently created to model human performance in

multimodal multiple task situations, rather than AGI.

• Newell, Unified Theories of Cognition (1990), 12 criteria for CS:

behavioral: adaptive, dynamic, flexible; development, evolution,

learning, knowledge integration, vast knowledge base, natural

language, real-time performance, and brain realization.

Cognitive architectures: taxonomy

• Symbolic. Memory: rule-based memory, graph-based memory. Learning: inductive learning, analytical learning.

• Emergent. Memory: globalist memory, localist memory. Learning: associative learning, competitive learning.

• Hybrid. Memory: localist-distributed, symbolic-connectionist. Learning: bottom-up learning, top-down learning.

Symbolic CA: remarks

• Type of architectures ~ type of problems.

• Physical symbol system (Newell & Simon): input, output & manipulate

symbolic entities, carry out actions in order to reach its goals.

• The majority of symbolic CA: centralized control over the information

flow from sensory inputs through memory to motor outputs; logical

reasoning; rule-based representations of perception-action memory;

stress working memory executive functions + semantic memory.

• Graph-based representations: semantic networks and conceptual

graphs, frames/schemata, reactive action packages (RAPs).

• Analytical & inductive learning techniques.

• Analytical: infer other facts that they entail logically, ex.

explanation-based learning (EBL) and analogical learning.

• Inductive learning: examples => general rules; ex. knowledge-based

inductive learning (KBIL), delayed reinforcement learning.

• A few potential candidates for AGI.

SOAR

• SOAR (State, Operator And Result): classic example (>20 y)

of an expert rule-based CA designed to model general intelligence;

a knowledge-based system, an approximation to physical symbol systems.

• Knowledge = production rules, operators act in problem spaces.

• Learning through chunking, analytical EBL technique for formulating

rules and macro-operations from problem solving traces.

• Many high-level cognitive functions demonstrated: processing large

and complex rule sets in planning, problem solving & natural language

comprehension (NL-SOAR) in real-time distributed environments.

• Many extensions to basic SOAR CA, not yet fully integrated, include:

learning - reinforcement to adjust the preference values for operators,

episodic learning to retain history of system evolution,

semantic learning to describe more abstract, declarative knowledge,

visual imagery, emotions, moods and feelings used to speed up

reinforcement learning and direct reasoning.

• Still missing: memory decay/forgetting, attention & info selection,

learning hierarchical representations, handling uncertainty/imprecision.
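The idea that knowledge is held as production rules acting on working memory can be illustrated with a toy forward-chaining loop. This is only a minimal sketch and not SOAR itself: the `Rule` class, the `run` function and the blocks-world facts are hypothetical names chosen for illustration, and chunking, preferences and problem spaces are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A production: when all conditions are in working memory, add the action."""
    conditions: frozenset
    action: str


def run(rules, facts):
    """Fire matching rules repeatedly until working memory stops changing."""
    memory = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= memory and rule.action not in memory:
                memory.add(rule.action)
                changed = True
    return memory


# Hypothetical blocks-world rules, for illustration only.
rules = [
    Rule(frozenset({"block-A-clear", "hand-empty"}), "can-pick-A"),
    Rule(frozenset({"can-pick-A", "goal-hold-A"}), "pick-A"),
]
result = run(rules, {"block-A-clear", "hand-empty", "goal-hold-A"})
```

The second rule fires only after the first has added `can-pick-A`, which is the kind of rule chaining that a real production system performs at much larger scale.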

Other symbolic CA

• EPIC (Executive Process Interactive Control), D.E. Kieras, HCI model,

perceptual, cognitive and motor activities, parallel processors,

controlled by production rules + perceptual (visual, auditory, tactile) &

motor processors operating on symbolically coded features.

• EPIC-SOAR for problem solving, planning and learning, applied to air

traffic control simulation.

• ICARUS (P. Langley) integrated CA for physical agents, knowledge as

reactive skills, goal-relevant reactions to a class of problems (~2005).

Includes perceptual, planning, execution and several memory systems.

Percepts => Concepts; knowledge about general classes of objects.

Goals => Skills; procedural knowledge, hierarchical LTM & STM.

Hierarchical, incremental reinforcement learning of skills, attention

focus, fast reactions & search.

Applications to in-city driving, blocks world, games.

• OSCAR (J. Pollock, 1989), defeasible reasoning, logical approach.

Other symbolic CA 2

• NARS (Non-Axiomatic Reasoning System) (Wang, ~20 years),

reasoning based on experience-grounded semantics of the language, a

set of inference rules, a memory structure, and a control mechanism,

carrying out high-level cognitive tasks as different aspects of the same

underlying process. Non-axiomatic logic used for adaptation with

insufficient knowledge/resources, “truth-value” is evaluated according

to the system’s “experience” with using these patterns.

Working NARS prototypes solving relatively simple problems.

• SNePS (Semantic Network Processing System) (Shapiro ~30 y);

logic, frame and network-based knowledge representation, reasoning,

and acting; own inference scheme, logic formula, frame slots and

network path, are integrated in SNIP, the SNePS Inference Package.

Belief revision system handles contradictions.

• The SNePS Rational Engine controls plans and sequences of actions

using frames and believing/disbelieving propositions.

• Used for commonsense reasoning, NLP, cognitive agent, Q/A system

etc, but no large-scale real applications yet.

Emergent CA: general

Cognition emerging from connectionist models, networks

of simple processing elements.

• Globalist and localist memory organization:

learning complex logical functions requires both!

• Globalist: MLP networks use delocalized transfer functions, distributed

representations, outputs depend on all parameters. Good

generalization, learning may lead to catastrophic forgetting.

• Localist: basis set expansion networks use localized functions

(Gaussians, RBF); outputs depend on a small subset of parameters.

• Modular organization in connectionist models creates subgroups of

processing elements that react in a local way.

• Diverse learning methodologies: heteroassociative supervised and

reinforcement learning, competitive learning (WTA or WTM),

correlation-based learning (Hebb) creates internal models.

• In complex reasoning emergent CA lag behind symbolic architectures, but may be closer

to natural perception and reasoning based on perceptions.
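The contrast between delocalized and localized transfer functions mentioned above can be made concrete with two single units. This is a sketch under simple assumptions: a one-dimensional input, a logistic sigmoid standing in for an MLP unit and a Gaussian standing in for an RBF unit; the function names and parameter values are illustrative.

```python
import math


def sigmoid_unit(x, w=1.0, b=0.0):
    """Delocalized transfer function: stays active over half of the input space."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def gaussian_unit(x, center=0.0, width=1.0):
    """Localized transfer function: responds only near its center."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


# Far from the origin the sigmoid unit is still saturated near 1,
# while the Gaussian unit has practically no response.
far_sigmoid = sigmoid_unit(10.0)
far_gaussian = gaussian_unit(10.0)
```

Changing a sigmoid unit's weights therefore alters the output over a large region of input space, whereas changing a Gaussian unit affects only inputs near its center.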

Emergent CA: IBCA

• IBCA (Integrated Biologically-based Cognitive Architecture),

(O'Reilly, Y. Munakata 2000): 3 different types of memory.

• In posterior cortex (PC), overlapping, distributed localist

organization, sensory-motor + multi-modal, hierarchical processing.

• In frontal cortex (FC), non-overlapping, recurrent localist organization,

working memory units work in isolation, contribute combinatorially.

• In hippocampus (HC), sparse, conjunctive globalist organization,

binding all activation patterns across PC and FC (episodic memory).

• LEABRA learning algorithm includes error-driven learning of skills and

Hebbian learning with inhibitory competition dynamics.

• PC & FC modules: slow, integrative learning of regularities.

• HC module: fast learning, retains & discriminates individual events.

Cooperation HC - FC/PC reflects complementary learning in the brain.

• Higher-level cognition emerging from activation-based processing

(updating active representations for self-regulation) in the FC module.

• So far basic psychophysical tasks; scalability? emotions? goals?

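Spiking vs. mean field

Brain: $10^{11}$ neurons

Figure labels: networks of spiking neurons (spike trains of neurons 1, 2, 3 and membrane potential V(t) over time t); neuron pools of M neurons; linked pools A, B, C.

Mean-field model (linked pools):

$$\tau \frac{\partial I_B}{\partial t} = -I_B + a F(A) + I_{ext} + \dots$$

Integrate-and-fire model (single neuron):

$$C_m \frac{d V_i(t)}{dt} = -g_m \left(V_i(t) - V_L\right) - I_{syn}(t)$$

Pool activity (M neurons):

$$A(t) = \lim_{\Delta t \to 0} \frac{n_{spikes}(t, t + \Delta t)}{M \, \Delta t}$$

Synaptic Dynamics

Figure labels: synapses, soma, spike, EPSP, IPSP; equivalent circuit with $R_{syn}$, $C_{syn}$, $R_m$, $C_m$ and synaptic current $I_{syn}(t)$; Blue Brain Project.

$$I_{AMPA,ext}(t) = g_{AMPA,ext} \left(V_i(t) - V_E\right) \sum_j w_{ij}\, s_j^{AMPA,ext}(t)$$

$$I_{AMPA,rec}(t) = g_{AMPA,rec} \left(V_i(t) - V_E\right) \sum_j w_{ij}\, s_j^{AMPA,rec}(t)$$

$$I_{NMDA,rec}(t) = \frac{g_{NMDA,rec} \left(V_i(t) - V_E\right)}{1 + [Mg^{2+}] \exp\left(-0.062\, V_i(t)\right) / 3.57} \sum_j w_{ij}\, s_j^{NMDA,rec}(t)$$

$$I_{GABA,rec}(t) = g_{GABA,rec} \left(V_i(t) - V_E\right) \sum_j w_{ij}\, s_j^{GABA}(t)$$

$$\frac{d s_j^{AMPA}(t)}{dt} = -\frac{s_j^{AMPA}(t)}{\tau_{AMPA}} + \sum_k \delta\left(t - t_j^k\right)$$

$$\frac{d s_j^{NMDA}(t)}{dt} = -\frac{s_j^{NMDA}(t)}{\tau_{NMDA,decay}} + \alpha\, x_j(t) \left(1 - s_j^{NMDA}(t)\right)$$

$$\frac{d x_j^{NMDA}(t)}{dt} = -\frac{x_j^{NMDA}(t)}{\tau_{NMDA,rise}} + \sum_k \delta\left(t - t_j^k\right)$$

$$\frac{d s_j^{GABA}(t)}{dt} = -\frac{s_j^{GABA}(t)}{\tau_{GABA}} + \sum_k \delta\left(t - t_j^k\right)$$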
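A leaky integrate-and-fire neuron and the pool activity A(t) defined on this slide can be simulated in a few lines. The sketch below makes several assumptions: the synaptic term is replaced by a constant injected current `i_inj` that enters with a depolarizing sign, integration uses a simple Euler step, and all parameter values (capacitance, conductance, threshold, reset, refractory period) are illustrative defaults rather than values taken from the slides.

```python
def simulate_lif(i_inj, t_max=500.0, dt=0.1,
                 c_m=0.5, g_m=0.025, v_l=-70.0,
                 v_thr=-50.0, v_reset=-55.0, t_ref=2.0):
    """Euler integration of C_m dV/dt = -g_m (V - V_L) + I_inj.

    Units: ms, mV, nF, uS, nA. Returns the list of spike times in ms.
    """
    v = v_l
    spikes = []
    refractory_left = 0.0
    steps = int(round(t_max / dt))
    for step in range(steps):
        t = step * dt
        if refractory_left > 0.0:
            # The neuron is held at reset during the refractory period.
            refractory_left -= dt
            continue
        v += dt * (-g_m * (v - v_l) + i_inj) / c_m
        if v >= v_thr:
            spikes.append(t)
            v = v_reset
            refractory_left = t_ref
    return spikes


def pool_activity(spike_trains, t_start, t_end):
    """Finite-window estimate of A(t): spikes in the window / (M * window length)."""
    m = len(spike_trains)
    n_spikes = sum(1 for train in spike_trains for t in train
                   if t_start <= t < t_end)
    return n_spikes / (m * (t_end - t_start))


weak = simulate_lif(0.4)    # steady state below threshold
strong = simulate_lif(0.6)  # steady state above threshold
```

With these illustrative values the membrane potential settles at V_L + I/g_m, so the weaker current stays below threshold while the stronger one produces regular firing. The mean-field description replaces many such neurons by a single equation for the pool.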

Emergent CA: others

• NOMAD (Neurally Organized Mobile Adaptive Device)

(Edelman >20y) based on “neural Darwinism” theory,

emergent architectures for pattern recognition task in real

time. Large (~10^5 neurons with ~10^7 synapses) simulated nervous

system, development through behavioral tasks, value systems based

on reward mechanisms in adaptation and learning, importance of self-generated movement in development of perception, the role of

hippocampus in spatial navigation and episodic memory, invariant

visual object recognition, binding of visual features by neural

synchrony, concurrent, real-time control. Higher-level cognition?

• Cortronics (Hecht-Nielsen 2006), thalamocortical brain functions.

• Lexicons based on localist cortical patches with reciprocal connections

create symbols, with some neurons in patches overlapping.

• Items of knowledge = linked symbols, with learning and information

retrieval via confabulation, a competitive activation of symbols.

• Confabulation is involved in anticipation, imagination and creativity, on

a shorter time scale than reasoning processes.

Emergent CA: directions

• The NuPIC (Numenta Platform for Intelligent Computing) (J. Hawkins

2004), Hierarchical Temporal Memory (HTM) technology, each node

implementing learning and memory functions. Specific connectivity

between layers leads to invariant object representation. Stresses

temporal aspects of perception, memory for sequences, anticipation.

• Autonomous mental development (J. Weng, ~10 y).

• M.P. Shanahan, internal simulation with a global workspace (2006)

weightless neural network, control of simulated robot, very simple.

• P. Haikonen “conscious machines” (2007) is based on recurrent neural

architecture with WTA mechanisms in each module.

• J. Anderson, Ersatz Brain Project (2007), simple model of cortex.

• COLAMN (M. Denham, 2006), and Grossberg “laminar computing”.

• E. Korner & G. Matsumoto: CA controls constraints used to select a

proper algorithm from existing repertoire to solve a specific problem.

• DARPA Biologically-Inspired Cognitive Architectures (BICA) program

(2006), “TOSCA: Comprehensive brain-based model of human mind”.

Hybrid CA: ACT-R

• ACT-R (Adaptive Control of Thought-Rational) (Anderson, >20 y),

aims at simulations of full range of human cognitive tasks.

• Perceptual-motor modules, memory modules, pattern matcher.

• Symbolic-connectionist structures for declarative memory (DM), chunks

for facts; procedural memory (PM), production rules.

Symbolic construct has a set of sub-symbolic parameters that reflect its

past usage and control its operations, thus enabling an analytic

characterization of connectionist computations using numeric

parameters (associative activation) that measure the general

usefulness of a chunk or production in the past and current context.

• Buffers - WM for inter-module communications and pattern matcher

searching for production that matches the present state of buffers.

• Top-down learning approach, sub-symbolic parameters of most useful

chunks or productions are tuned using Bayesian approach.

• Rough mapping of ACT-R architecture on the brain structures.

• Used in a large number of psychological studies, intelligent tutoring

systems, no ambitious applications to problem solving and reasoning.
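The sub-symbolic parameters that reflect past usage can be illustrated with the base-level activation commonly given in the ACT-R literature, B = ln(Σ_j t_j^(-d)), where t_j is the time since the j-th use of a chunk and d is a decay parameter. The sketch below assumes this form with d = 0.5; the function name and the example time stamps are hypothetical, and the other activation components (associative spreading, noise) are omitted.

```python
import math


def base_level_activation(use_times, now, decay=0.5):
    """B = ln(sum_j (now - t_j) ** -decay) over past uses t_j < now."""
    lags = [now - t for t in use_times if t < now]
    if not lags:
        raise ValueError("chunk has no past uses before `now`")
    return math.log(sum(lag ** -decay for lag in lags))


# Hypothetical usage histories (arbitrary time units).
recent = base_level_activation([9.0], now=10.0)
old = base_level_activation([1.0], now=10.0)
frequent = base_level_activation([1.0, 5.0, 9.0], now=10.0)
```

A chunk used recently or often ends up with higher activation than one used once long ago, so the numeric parameter summarizes how useful the symbolic structure has been.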

Hybrid CA: CLARION

• CLARION (Connectionist Learning with Adaptive Rule Induction ON-line)

(R. Sun, ~15 y): develop agents for cognitive tasks, and to understand

learning and reasoning processes in these domains.

4 memory modules, each with explicit-implicit representation:

action-centered subsystem (ACS) to regulate the agent’s actions,

non-action-centered (NCS) to maintain system knowledge,

motivational subsystem (MS) for perception, action and cognition,

metacognitive subsystem (MCS) controlling all modules.

• Localist section encodes the explicit knowledge and the distributed

section (e.g. an MLP network) the implicit knowledge.

• Implicit learning using reinforcement learning or MLP network for

bottom-up reconstruction of implicit knowledge at the explicit level.

• Precoding/fixing some rules at the top level, and modifying them

bottom-up by observing actions guided by these rules.

• Software is available for experimentation; psychological data simulated

+ complex sequential decision-making for a minefield navigation task.

Hybrid CA: Polyscheme

• Polyscheme (N.L. Cassimatis, 2002) integrates multiple methods of

representation, reasoning and inference schemes in problem solving.

Polyscheme “specialist” models some aspects of the world.

• Scripts, frames, logical propositions, neural networks and constraint

graphs represent knowledge, interacting & learning from other

specialists; attention is guided by a reflective specialist, focus schemes

implement inferences via script matching, backtracking search, reason

maintenance, stochastic simulation and counterfactual reasoning.

• High-order reasoning is guided by policies for focusing attention.

Operations handled by specialists include forward inference,

subgoaling, grounding, with different representations but same focus,

may integrate lower-level perceptual and motor processes.

• Both for abstract and common sense physical reasoning in robots.

• Used to model infant reasoning including object identity, events,

causality, spatial relations. This is a meta-learning approach,

combining different approaches to problem solving.

• No ambitious larger-scale applications yet.

Hybrid CA: 4CAPS

• 4CAPS (M.A. Just 1992) is designed for complex tasks,

language comprehension, problem solving or spatial reasoning.

• Operating principle: “Thinking is the product of the concurrent activity of

multiple centers that collaborate in a large scale cortical network”.

• Used to model human behavioral data (response times and error rates)

for analogical problem solving, human–computer interaction, problem

solving, discourse comprehension and other complex tasks solved by

normal and mentally impaired people.

• Activity of 4CAPS modules correlates with fMRI and other data.

• Has number of centers (corresponding to particular brain areas) that

have different processing styles; ex. Wernicke’s area is constructing

and selectively accessing structured sequential & hierarchical reps.

Each center can perform and be a part of multiple cognitive functions,

but has a limited computational capacity constraining its activity.

Functions are assigned to centers depending on the resource

availability, therefore the topology of the whole large-scale network is

not fixed. Interesting but not designed for AGI?

Hybrid CA: Others

• LIDA (The Learning Intelligent Distribution Agent) (S. Franklin, 1997),

framework for intelligent software agent, global workspace (GW) ideas.

• LIDA: partly symbolic and partly connectionist memory organization,

modules for perception, working memory, emotions, semantic memory,

episodic memory, action selection, expectation, learning procedural

tasks, constraint satisfaction, deliberation, negotiation, problem solving,

metacognition, and conscious-like behavior.

• Cooperation of codelets, specialized subnetworks.

• Perceptual, episodic, and procedural learning, bottom-up type.

• DUAL (B. Kokinov 1994), inspired by Minsky’s “Society of Mind”,

hybrid, multi-agent architecture, dynamic emergent computations,

interacting micro-agents for memory and processing, agents form

coalitions with emergent dynamics, at macrolevel psychological

interpretations may be used to describe model properties.

• Micro-frames used for symbolic representation of facts, relevance in a

particular context is represented by network connections/activations.

• Used in a model of reasoning and psychophysics. Scaling?

Hybrid CA: others 2

• Shruti (Shastri 1993), biologically-inspired model of human reflexive

inference, represents in connectionist architecture relations, types,

entities and causal rules using focal-clusters. These clusters encode

universal/existential quantification, degree of belief, and the query

status. The synchronous firing of nodes represents dynamic binding,

allowing for representations of complex knowledge and inferences.

Has great potential, but development is slow.

• The Novamente AI Engine (B. Goertzel, 1993), psynet model and

“patternist philosophy of mind”: self-organizing goal-oriented

interactions between patterns are responsible for mental states.

• Emergent properties of network activations lead to hierarchical and

relational (heterarchical) pattern organization.

• Probabilistic term logic (PTL) and the Bayesian Optimization Algorithm

(BOA) are used for flexible inference.

• Actions, perceptions, internal states represented by tree-like structures.

• New architecture, scaling properties are not yet known.

Where to go?

• Many architectures, some developed over ~ 30 y, others new.

• Used in very few real-world applications.

• Grand challenges + smaller steps that lead to human and super-human

levels of competence should be formulated to focus the research.

• Extend small demonstrations in which a cognitive system reasons in a

trivial domain to results that may be of interest to experts, or acting as

an assistant to human expert.

• What type of intelligence do we want?

H. Gardner (1993), at least seven kinds of intelligence:

logical-mathematical, linguistic, spatial, musical, bodily-kinesthetic,

interpersonal and intrapersonal intelligence, perhaps extended by

emotional intelligence and a few others.

• To some degree they are independent!

Perhaps AGI does not have to be very general ... just sufficiently broad

to achieve human-level competence in some areas and lower in others.

Behavioral intelligence?

• R. Brooks: elephants don’t play chess, robots need integrated vision,

hearing and dextrous manipulation to “learn to ‘think’ by building on its

bodily experiences to accomplish progressively more abstract tasks”.

• Cog project has many followers, but after 15 years it is a reactive agent

and there are no good ideas how to extend it to higher cognitive levels.

• EU 7th Framework priority in intelligence and cognition: deep

embodiment, new materials, physics doing computations.

• What may be expected from embodied cognitive robotics, what are the

limitations of symbolic approaches?

• Results in the last two decades not very encouraging for AGI.

• Elephants are intelligent, but don’t play chess, use language etc.

• General CA may not be sufficient for problems in computer vision

(cf. Poggio’s brain-based architecture for vision), or language,

quite specific models of some brain functions are needed to reach the

animal-level competence.

Progress evaluation

• How to measure progress? Depends on the area.

• Variants of Turing test, Loebner competition, 20Q and other word

games – methodology exists.

• Machine Intelligence Quotient (MIQ) can be systematically measured in

human-machine cooperative control tasks, ex. using Intelligence Task

Graph (ITG) as a modeling and analysis tool (Park, Kim, Lim 2001).

• HCI indicators of efficiency of various AI tools, ex. tutoring tools.

• Agent-Based Modeling and Behavior Representation (AMBR) Model

Comparison (2005), compared humans/CA performance in a simplified

air traffic controller environment.

• 2007 AAAI Workshop “Evaluating Architectures for Intelligence”

proposed several ideas: in-city driving environment as a testbed for

evaluating cognitive architectures, measuring incrementality and

adaptivity components of general intelligent behavior.

Cognitive age

• “Cognitive age” based on a set of problems that children

at a given age are able to solve, in several groups:

e.g. vision and auditory perception, understanding language, commonsense reasoning, abstract reasoning, probing general knowledge about

the world, learning, problem solving, imagination, creativity.

• Solving all problems from a given age group will qualify cognitive

system to pass to the next grade.

• Some systems will show advanced age in selected areas, and not in

the others – CA are very young in vision but quite advanced in

mathematical reasoning, at least compared to the typical population.

• General world knowledge may be probed using a Q/A system.

Compare CA answers with answers of a 5-year old child.

• Common sense knowledge bases are quite limited, except for CyC, but

it seems to be quite difficult to use. Common-sense ontologies are

missing, representation of concepts in dictionaries is minimal.

Trends

• Hybrid architectures dominate, but biological inspirations are

very important, expect domination of BICA architectures.

• Focus is mainly on the role of thalamo-cortical and limbic systems,

identified with cognitive and emotional aspects.

• Several key brain-inspired features should be preserved in all BICA:

hierarchical organization of information processing at all levels;

specific spatial localization of functions, flexible use of resources, time

scales; attention; role of different types of memory, imagination,

intuition, creativity.

Missing so far:

• Specific role of left and right hemisphere, brain stem etc.

• Many specific functions, ex. various aspects of self,

fear vs. apprehension, processed by different amygdala structures.

• Regulatory role of the brain stem which may provide overall metacontrol selecting different types of behavior is completely neglected.

Memory

Different types of memory are certainly important:

processing of speech or texts requires:

• recognition of tokens, or mapping from sounds or strings of letters to

unique terms;

• resolving ambiguities and mapping terms to concepts in some ontology

• a full semantic representation of text that facilitates understanding and

answering questions about its content.

• These 3 steps require several kinds of human memory.

• Recognition memory to focus attention when something is unexpected.

• Semantic memory that serves not only as hierarchical ontology, but

approximates spreading activation, associations, using both structural

properties of concepts and their relations.

• Episodic memory to store the topic/event/experience.

• Working memory to give space for instantiation.
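Spreading activation in a semantic memory can be approximated very roughly on a small graph. The sketch below is an assumption-laden toy: the network is a plain dictionary of directed links, activation is multiplied by a fixed `decay` at every hop, and a node keeps the strongest activation that reaches it. The concept names are hypothetical examples.

```python
def spread_activation(graph, sources, decay=0.5, steps=2):
    """Propagate activation from source nodes along links for a few steps.

    graph: dict mapping a node to a list of neighbouring nodes.
    Returns a dict mapping each reached node to its activation.
    """
    activation = {node: 1.0 for node in sources}
    frontier = dict(activation)
    for _ in range(steps):
        next_frontier = {}
        for node, value in frontier.items():
            for neighbour in graph.get(node, []):
                passed = value * decay
                # Keep only the strongest activation reaching a node.
                if passed > activation.get(neighbour, 0.0):
                    activation[neighbour] = passed
                    next_frontier[neighbour] = passed
        frontier = next_frontier
    return activation


# Hypothetical fragment of a semantic network.
semantic_net = {
    "canary": ["bird", "yellow"],
    "bird": ["animal", "wings"],
    "animal": ["living thing"],
}
act = spread_activation(semantic_net, ["canary"])
```

Concepts two links away receive weaker activation than direct associates, and concepts beyond the step limit are not reached at all, which gives a crude notion of associative distance.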

BICA as approximation

• Significant progress has been made in drawing inspirations from

neuroscience in analysis of perception, less in higher cognition.

• For example, neurocognitive approach to linguistics has been used

only to analyze linguistic phenomena, but has no influence on NLP.

• “Brain pattern calculus” to approximate spreading neural activation in

higher cognitive functions is urgently needed! How to do it?

Neural template matching? Network-constrained quasi-stationary

waves describing global brain states (w,Cont)?

• Practical algorithms to discover “pathways of the brain” have been

introduced recently (Duch et al, in print) to approximate symbolic

knowledge & associations stored in human brain.

• Efforts to build concept descriptions from electronic dictionaries,

ontologies, encyclopedias, results of collaborative projects and active

searches in unstructured sources are under way.

• Architecture that uses large semantic memory to control an avatar

playing word games has been demonstrated.

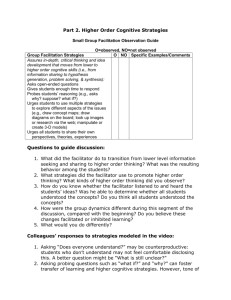

Figure labels: query; semantic memory; store; applications, e.g. 20 questions game; humanized interface; part-of-speech tagger & phrase extractor; manual verification; parser; on-line dictionaries; active search and dialogues with users.

Realistic goals?

Different applications may require different knowledge representation.

Start from the simplest knowledge representation for semantic memory.

Find where such representation is sufficient, understand limitations.

Drawing on such semantic memory an avatar may formulate and may

answer many questions that would require exponentially large number of

templates in AIML or other such language.

Adding intelligence to avatars involves two major tasks:

• building semantic memory model;

• provide interface for natural communication.

Goal:

create 3D human head model, with speech synthesis & recognition, use it to

interact with Web pages & local programs: a Humanized InTerface (HIT).

Control HIT actions using the knowledge from its semantic memory.

ICD-9 coding challenge

Words in the brain

Psycholinguistic experiments show that most likely categorical,

phonological representations are used, not the acoustic input.

Acoustic signal => phoneme => words => semantic concepts.

Phonological processing precedes semantic by 90 ms (from N200 ERPs).

F. Pulvermuller (2003) The Neuroscience of Language. On Brain Circuits of

Words and Serial Order. Cambridge University Press.

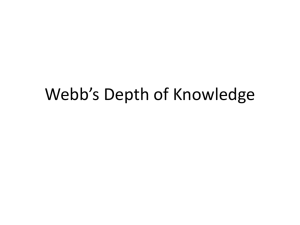

Action-perception networks inferred from ERP and fMRI

Phonological neighborhood density = the number of words that are similar

in sound to a target word. Similar = similar pattern of brain activations.

Semantic neighborhood density = the number of words that are similar in

meaning to a target word.
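Neighborhood density, as defined above, is a simple count once a similarity criterion is fixed. The sketch below assumes a common operationalization in which two words are neighbors if they differ by one substitution, insertion or deletion; it works on letters as a stand-in for phonemes, and the small lexicon is hypothetical.

```python
def one_edit_apart(a, b):
    """True if b differs from a by exactly one substitution, insertion or deletion."""
    if a == b or abs(len(a) - len(b)) > 1:
        return False
    if len(a) > len(b):
        a, b = b, a  # make sure a is the shorter (or equal-length) string
    i = 0
    while i < len(a) and a[i] == b[i]:
        i += 1
    if len(a) == len(b):
        return a[i + 1:] == b[i + 1:]  # one substitution at position i
    return a[i:] == b[i + 1:]          # one extra symbol in b at position i


def neighborhood_density(target, lexicon):
    """Number of words in the lexicon that are one edit away from the target."""
    return sum(1 for word in lexicon if one_edit_apart(target, word))


# Hypothetical mini-lexicon.
lexicon = ["cat", "bat", "hat", "cut", "cart", "at", "dog", "cats", "coat"]
density = neighborhood_density("cat", lexicon)
```

For a phonological measure the strings would be phoneme sequences from a pronunciation dictionary, and a semantic measure would need a different similarity criterion, for example shared features in a semantic memory.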

Insights and brains

Activity of the brain while solving problems that required insight and that

could be solved in schematic, sequential way has been investigated.

E.M. Bowden, M. Jung-Beeman, J. Fleck, J. Kounios, “New approaches to

demystifying insight”. Trends in Cognitive Sciences 2005.

After solving a problem presented in a verbal way subjects indicated

themselves whether they had an insight or not.

An increased activity of the right hemisphere anterior superior temporal

gyrus (RH-aSTG) was observed during initial solving efforts and insights.

About 300 ms before insight a burst of gamma activity was observed,

interpreted by the authors as “making connections across distantly related

information during comprehension ... that allow them to see connections

that previously eluded them”.

Insight interpreted

What really happens? My interpretation:

• LH-STG represents concepts, S=Start, F=final;

• understanding, solving = transition, step by step, from S to F;

• if no connection (transition) is found this leads to an impasse;

• RH-STG ‘sees’ LH activity on meta-level, clustering concepts into abstract categories (cosets, or constrained sets);

• connection between S and F is found in RH, leading to a feeling of vague understanding;

• gamma burst increases the activity of LH representations for S, F and intermediate configurations;

• stepwise transition between S and F is found;

• finding solution is rewarded by emotions during Aha! experience; they are necessary to increase plasticity and create permanent links.

Creativity

What features of our brain/minds are most mysterious?

Consciousness? Imagination? Intuition? Emotions, feelings?

Higher mental functions?

Masao Ito (director of RIKEN, neuroscientist) answered: creativity.

Still domain of philosophers, educators and a few psychologists, for ex.

Eysenck, Weisberg, or Sternberg (1999), who defined creativity as:

“the capacity to create a solution that is both novel and appropriate”.

MIT Encyclopedia of Cognitive Sciences has 1100 pages, 6 chapters

about logics & over 100 references to logics in the index.

Creativity: 1 page (+1 page about “creative person”).

Intuition: 0, not even mentioned in the index.

In everyday life we use intuition more often than logics.

Unrestricted fantasy? Creativity may arise from higher-order schemes!

Use templates for analytical thinking, J. Goldenberg & D. Mazursky,

Creativity in Product Innovation, CUP 2002

Words: experiments

A real letter from a friend:

I am looking for a word that would capture the following qualities: portal to

new worlds of imagination and creativity, a place where visitors embark on

a journey discovering their inner selves, awakening the Peter Pan within.

A place where we can travel through time and space (from the origin to the

future and back), so, its about time, about space, infinite possibilities.

FAST!!! I need it sooooooooooooooooooooooon.

creativital, creatival (creativity, portal), used in creatival.com

creativery (creativity, discovery), creativery.com (strategy+creativity)

discoverity = {disc, disco, discover, verity} (discovery, creativity, verity)

digventure ={dig, digital, venture, adventure, venue, nature} still new!

imativity (imagination, creativity); infinitime (infinitive, time)

infinition (infinitive, imagination), already a company name

learnativity (taken, see http://www.learnativity.com)

portravel (portal, travel); sportal (space, sport, portal), taken

quelion – lion of query systems! Web site

timagination (time, imagination); timativity (time, creativity)

tivery (time, discovery); trime (travel, time)
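Several of the invented words above look like blends that join the beginning of one word to the end of another at a shared letter. The sketch below generates such candidates; it is a hypothetical illustration of that single pattern and not the method actually used to produce the list, which would also need filtering for novelty and pronounceability.

```python
def blends(first, second, min_head=1, min_tail=2):
    """Join a prefix of `first` to a suffix of `second` at a shared letter.

    min_head: minimum number of letters kept from `first`.
    min_tail: minimum number of letters kept from `second`.
    """
    results = set()
    for i, ch in enumerate(first):
        if i < min_head:
            continue
        for j, other in enumerate(second):
            if ch == other and len(second) - j >= min_tail:
                candidate = first[:i] + second[j:]
                if candidate not in (first, second):
                    results.add(candidate)
    return results


time_words = blends("time", "imagination")
travel_words = blends("portal", "travel")
creative_words = blends("creativity", "discovery")
```

The candidate sets contain “timagination”, “portravel” and “creativery” from the list above, along with many less appealing strings, so the generation step is easy and the selection step is where knowledge is needed.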

Word games

Word games were popular before computer games.

They are essential to the development of analytical thinking.

Until recently computers could not play such games.

The 20 question game may be the next great challenge for AI,

because it is more realistic than the unrestricted Turing test;

a World Championship could involve human and software players.

Finding most informative questions requires knowledge and creativity.

Performance of various models of semantic memory and episodic

memory may be tested in this game in a realistic, difficult application.

Asking questions to understand precisely what the user has in mind is

critical for search engines and many other applications.

Creating large-scale semantic memory is a great challenge:

ontologies, dictionaries (Wordnet), encyclopedias, MindNet

(Microsoft), collaborative projects like Concept Net (MIT) …
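Finding the most informative question in the 20 questions game can be sketched as picking the yes/no question with the largest expected reduction in uncertainty. The example below assumes all remaining candidates are equally likely and answers are always correct; the tiny knowledge base and question wording are hypothetical.

```python
import math


def entropy(n):
    """Entropy in bits of a uniform choice among n candidates."""
    return math.log2(n) if n > 0 else 0.0


def expected_information(candidates, has_property):
    """Expected entropy reduction from asking one yes/no question."""
    total = len(candidates)
    yes = sum(1 for c in candidates if has_property(c))
    no = total - yes
    remaining = (yes / total) * entropy(yes) + (no / total) * entropy(no)
    return entropy(total) - remaining


def best_question(candidates, questions):
    """questions: dict mapping question text to a predicate on candidates."""
    return max(questions,
               key=lambda q: expected_information(candidates, questions[q]))


# Hypothetical object/property knowledge base.
objects = {
    "dog": {"animal", "pet"},
    "cat": {"animal", "pet"},
    "cow": {"animal"},
    "car": {"machine"},
}
questions = {
    "is it an animal?": lambda name: "animal" in objects[name],
    "is it a pet?": lambda name: "pet" in objects[name],
}
choice = best_question(list(objects), questions)
```

Here the pet question splits the four candidates evenly and is preferred over the animal question, which splits them three to one. A large semantic memory plays the role of `objects` in a realistic system.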

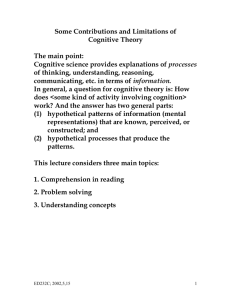

HIT – larger view …

Figure labels: HIT projects, surrounded by: T-T-S synthesis, speech recognition, talking heads, graphics, VR avatars, lingu-bots, A-Minds, info-retrieval, robotics, AI, cognitive architectures, cognitive science, knowledge modeling, affective computing, learning, brain models, behavioral models, semantic memory, episodic memory, working memory.

DREAM architecture

Figure labels: Web/text/databases interface; NLP functions; natural input modules; cognitive functions; affective functions; specialized agents; behavior control; control of devices; text to speech; talking head.

DREAM is concentrated on the cognitive functions + real time control, we plan to

adopt software from the HIT project for perception, NLP, and other functions.

Exciting times are coming!

Thank you for lending your ears

Google: W Duch => Papers