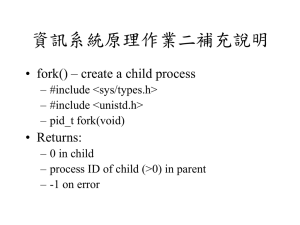

Chapter 3: Processes(PPT)

advertisement

Chapter 3 – Processes (Pgs 101 – 141)

CSCI 3431: OPERATING SYSTEMS

Processes

Pretty much identical to a “job”

A task that a computer is performing

Consists of:

1. Text Section – Program code

2. Data – Global variables (data section), heap, stack, & the free space between them

3. Current State – CPU register values, including the program counter

Program: Passive entity on disk

Process: Active entity in memory

Thread: A part of a process (Ch. 4)

Process Layout (x86)

cf. Text Fig. 3.1
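To see the layout for yourself, a short C sketch can record one address from each region of a running process: a function (text), initialized and uninitialized globals (data/bss), a malloc() block (heap), and a local variable (stack). The names `sample_layout` and `print_layout` are hypothetical, and the exact addresses depend on the compiler, linker, and address-space layout randomization, so treat the output as illustrative.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

int initialized_global = 42;  /* data section */
int uninitialized_global;     /* bss: the zero-filled part of the data section */

/* One sample address from each region of the process image. */
struct layout {
    uintptr_t text, data, bss, heap, stack;
};

/* Hypothetical helper: record where each region lives in this process. */
struct layout sample_layout(void) {
    int local = 0;                           /* stack */
    int *dynamic = malloc(sizeof *dynamic);  /* heap (NULL if malloc fails) */
    struct layout l = {
        .text  = (uintptr_t)&sample_layout,  /* code of this very function */
        .data  = (uintptr_t)&initialized_global,
        .bss   = (uintptr_t)&uninitialized_global,
        .heap  = (uintptr_t)dynamic,
        .stack = (uintptr_t)&local,
    };
    free(dynamic);                           /* only the address was needed */
    return l;
}

/* Print the sampled addresses in the order the regions usually appear, low to high. */
void print_layout(void) {
    struct layout l = sample_layout();
    printf("text  %#jx\n", (uintmax_t)l.text);
    printf("data  %#jx\n", (uintmax_t)l.data);
    printf("bss   %#jx\n", (uintmax_t)l.bss);
    printf("heap  %#jx\n", (uintmax_t)l.heap);
    printf("stack %#jx\n", (uintmax_t)l.stack);
}
```

On a typical x86-64 Linux system, print_layout() shows the text address lowest and the stack address highest, matching the usual picture of a process in memory; the values change between runs when randomization is on.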

Process States

New: Being created

Running: Has a CPU, being executed

Suspended: Not running (covers both ready and waiting)

Ready: Could be running if assigned to a CPU

Waiting: Needs something (e.g., I/O, signal)

Terminated: Done

Fig. 3.2 Process State
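The allowed moves between these states can be captured as a small transition check. This C sketch assumes the five-state model (new, ready, running, waiting, terminated), with "suspended" split into ready and waiting; the names are hypothetical, and real kernels track extra states.

```c
#include <stdbool.h>

enum proc_state { NEW, READY, RUNNING, WAITING, TERMINATED };

/* Returns true if the five-state model allows moving from `from` to `to`. */
bool valid_transition(enum proc_state from, enum proc_state to) {
    switch (from) {
    case NEW:        return to == READY;        /* admitted */
    case READY:      return to == RUNNING;      /* scheduler dispatch */
    case RUNNING:    return to == READY         /* interrupt / time slice ends */
                         || to == WAITING       /* waits for I/O or an event */
                         || to == TERMINATED;   /* exit */
    case WAITING:    return to == READY;        /* I/O or event completes */
    case TERMINATED: return false;              /* no way back */
    }
    return false;
}
```

For example, valid_transition(WAITING, RUNNING) is false: a process whose I/O completes goes back to being ready and must be picked by the scheduler again.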

Process Control Block (PCB)

O/S (kernel) data structure for a process

State - ready, etc.

Registers – current values, includes PC

Scheduling Info – priority, queue address

Memory Info – page tables, base address

Accounting Info – owner, parent, CPU use, pid

I/O Status Info – open files, devices assigned
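A PCB is just a struct the kernel keeps for each process. The sketch below groups the fields listed above; every field name, size, and type is a hypothetical simplification (Linux's real PCB, `struct task_struct`, is far larger).

```c
#include <stddef.h>
#include <stdint.h>
#include <sys/types.h>

enum pcb_state { PCB_NEW, PCB_READY, PCB_RUNNING, PCB_WAITING, PCB_TERMINATED };

#define MAX_OPEN_FILES 16   /* hypothetical per-process limit */

/* Hypothetical, simplified PCB; field names are illustrative only. */
struct pcb {
    enum pcb_state state;               /* state: ready, running, ... */
    struct {
        uint64_t pc;                    /* program counter */
        uint64_t sp;                    /* stack pointer */
        uint64_t general[16];           /* general-purpose registers */
    } regs;                             /* register values saved while not running */
    int priority;                       /* scheduling info */
    struct pcb *next;                   /* scheduling info: next PCB in its queue */
    uintptr_t page_table;               /* memory info: page-table base address */
    pid_t pid, ppid;                    /* accounting info */
    uid_t owner;
    uint64_t cpu_time_used;
    int open_files[MAX_OPEN_FILES];     /* I/O status: file descriptors, -1 = unused */
};

/* Fill in a fresh PCB for a newly created process. */
void pcb_init(struct pcb *p, pid_t pid, pid_t ppid, uid_t owner) {
    *p = (struct pcb){0};               /* zero everything, next = NULL */
    p->state = PCB_NEW;
    p->pid = pid;
    p->ppid = ppid;
    p->owner = owner;
    for (int i = 0; i < MAX_OPEN_FILES; i++)
        p->open_files[i] = -1;
}
```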

The Unix ps command

tami@cs:0~]ps -l

F S UID PID PPID C PRI NI ADDR SZ WCHAN TTY TIME CMD

0 S 1021 17012 17011 0 80 0 - 9848 rt_sig pts/18 00:00:00 zsh

0 R 1021 17134 17012 0 80 0 - 1707 - pts/18 00:00:00 ps

tami@cs:0~]

F = Flags, S = State, UID = User ID, PID = Process ID

PPID = Parent's PID, C = CPU % use, PRI = Priority

NI = Nice value, ADDR = Swap Addr, SZ = Size (in pages)

WCHAN = Kernel function where sleeping

TTY = Controlling terminal device

TIME = Cumulative CPU time, CMD = Command (executable) name

Lots of other data is available using options!

Scheduling Queues

Happy little places for processes to wait (just like the lines at Starbucks)

Job Queue: All processes in the system

Ready Queue: All processes ready to run

Device Queue(s): Processes waiting for a particular device (each device has its own queue)

Often just a linked list of PCBs
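A ready queue built as a linked list of PCBs can be as simple as a head/tail pair plus a `next` field in each PCB. This sketch assumes a FIFO discipline and a stripped-down, hypothetical `struct pcb`.

```c
#include <stddef.h>

/* Minimal stand-in for a PCB: only what the queue needs. */
struct pcb {
    int pid;
    struct pcb *next;   /* link used by whichever queue currently holds this PCB */
};

/* FIFO queue of PCBs, e.g., the ready queue or one device queue. */
struct pcb_queue {
    struct pcb *head, *tail;
};

/* Append a PCB at the tail. */
void enqueue(struct pcb_queue *q, struct pcb *p) {
    p->next = NULL;
    if (q->tail)
        q->tail->next = p;
    else
        q->head = p;        /* queue was empty */
    q->tail = p;
}

/* Remove and return the PCB at the head, or NULL if the queue is empty. */
struct pcb *dequeue(struct pcb_queue *q) {
    struct pcb *p = q->head;
    if (p) {
        q->head = p->next;
        if (!q->head)
            q->tail = NULL; /* queue is now empty */
        p->next = NULL;
    }
    return p;
}
```

Because each PCB has one `next` field, it can sit in only one queue at a time; moving a process from a device queue to the ready queue is a dequeue() from one followed by an enqueue() on the other.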

Queueing Diagram (Fig 3.7)

Scheduler

O/S component that selects a process from a queue

Long term (job): select a process to be loaded into memory (make it ready)

Short term (CPU): select a ready process to run

I/O: select a process from the I/O queue for that device

Context Switch: Changing the executing process on a CPU; requires saving the state of the outgoing process and restoring the state (if any) of the incoming process
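A context switch can be modelled as two copies: the CPU's registers into the outgoing process's PCB, then the incoming PCB's saved registers back into the CPU. This is only a model with a hypothetical, tiny register set; a real kernel does this in assembly and also switches memory-management state.

```c
#include <stddef.h>
#include <stdint.h>

/* Simplified register file; a real CPU has many more registers. */
struct cpu_regs {
    uint64_t pc;        /* program counter */
    uint64_t sp;        /* stack pointer */
    uint64_t gpr[4];    /* a few general-purpose registers */
};

struct pcb {
    int pid;
    struct cpu_regs saved;  /* register values kept while not running */
};

/* Model of a context switch on one CPU. `outgoing` may be NULL
   (e.g., the CPU was idle, so there is no state to save). */
void context_switch(struct cpu_regs *cpu, struct pcb *outgoing,
                    const struct pcb *incoming) {
    if (outgoing)
        outgoing->saved = *cpu;   /* save state of the outgoing process */
    *cpu = incoming->saved;       /* restore state of the incoming process */
}
```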

Process Mix

Computers work best when a variety of processes with different needs exist

CPU-bound process: spends most of its life computing, very little I/O

I/O-bound process: spends most of its life doing I/O, minimal computation

Long term scheduling works best if a mix of these is in memory

Medium Term Scheduler

Monitors memory and removes some processes, writing them to disk (“swap out”)

Later moves the process back to memory so it can be executed (“swap in”)

Will cover this more in Ch. 8

Process Creation

All processes (except the first one, e.g., init) have a parent

Thus there is a process tree

Every process has a PID

Two-step process in Unix:

1. Create a new (duplicate) process: fork()

2. Overlay the duplicate with a new program: exec()

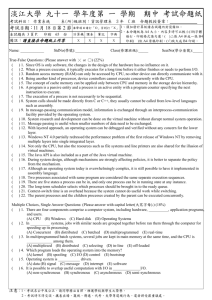

Fork() and Exec() (Fig 3.10)

pid_t pid = fork(); // Duplicate myself

if (pid < 0) { perror("fork"); exit(1); } // No child created

if (pid == 0) {

// Child: fork() returned 0; the child can call getpid() for its own PID

execlp("/bin/ls", "ls", NULL);

perror("execlp"); // Only reached if exec fails

exit(1);

} else {

// Parent: optionally wait for child completion

wait(NULL);

printf("Child complete.\n");

}
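The fork/exec/wait pattern can be wrapped into a complete, reusable helper. This sketch (the name `spawn_and_wait` is hypothetical) uses execvp(), which searches PATH, and waitpid(), which waits for one specific child and reports how it ended; it assumes a POSIX system.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Run argv[0] (searched on PATH) in a child process and wait for it.
   Returns the child's exit status (0-255), or -1 if fork()/waitpid()
   failed or the child was killed by a signal. */
int spawn_and_wait(char *const argv[]) {
    pid_t pid = fork();                 /* step 1: duplicate this process */
    if (pid < 0)
        return -1;                      /* no child created */
    if (pid == 0) {
        execvp(argv[0], argv);          /* step 2: overlay child with new program */
        _exit(127);                     /* only reached if exec failed */
    }
    int status;
    if (waitpid(pid, &status, 0) < 0)   /* parent: wait for this particular child */
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, spawn_and_wait((char *[]){"ls", "-l", NULL}) runs `ls -l` and returns its exit status. Exiting with 127 when exec fails follows the shell's convention for "command not found".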

fork(): Making a COPY

The copy is identical: same code, same heap, same stack, same variables with the same values

Differences: the child has a different PID and PPID, and fork() returns 0 in the child but the child's PID in the parent
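The copy really is separate: changing a variable in the child does not touch the parent's copy. In this hypothetical sketch the child increments a global and reports its value through its exit status (which carries only 8 bits, so it suits small numbers).

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 10;   /* a global: parent and child each get their own copy */

/* Fork; the child adds 1 to its copy of `counter` and exits with that value.
   Returns the child's view of counter and stores the parent's view (read
   after the child finished) in *parent_view. Returns -1 on error. */
int child_counter_after_fork(int *parent_view) {
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {            /* child: fork() returned 0 */
        counter += 1;          /* changes the child's copy only */
        _exit(counter);        /* report the child's value via the exit status */
    }
    int status;                /* parent: fork() returned the child's PID */
    if (waitpid(pid, &status, 0) < 0 || !WIFEXITED(status))
        return -1;
    *parent_view = counter;    /* unchanged: the parent's copy was never touched */
    return WEXITSTATUS(status);
}
```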

Forking

After fork(), either the child or the parent, or both, could be executing – no guarantees here!

wait() causes the parent to wait for a child to terminate

The exec family of syscalls overlays the child process with a new program

Additional code is needed to let parent and child communicate (IPC)

Termination

Processes terminate with the exit() syscall

If main() returns, the C runtime start-up code calls exit() with main's return value, so an explicit call is rarely needed

Some O/S terminate all children of a terminating process, “cascading termination”

Parents can terminate their children

Arbitrary processes cannot terminate each other

O/S can terminate a process for a variety of reasons (e.g., security, on errors)

Exit value of a terminated process is kept by the O/S (as a “zombie” entry) until the parent collects it with wait()
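A parent terminating its own child, and then collecting how it ended, can be sketched with kill() and waitpid(). The helper name is hypothetical and the sketch assumes a POSIX system; until waitpid() is called, the dead child stays around as a zombie holding its termination status.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork a child that just sleeps, terminate it with signal `sig`,
   then reap it. Returns the signal that killed the child, or -1. */
int kill_child(int sig) {
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        for (;;)
            pause();                    /* child: sleep until a signal arrives */
    }
    if (kill(pid, sig) < 0)             /* parent terminates its own child */
        return -1;
    int status;
    if (waitpid(pid, &status, 0) < 0)   /* reap the zombie and read its status */
        return -1;
    return WIFSIGNALED(status) ? WTERMSIG(status) : -1;
}
```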

Summary (Unix)

New processes are made by an existing process using FORK

New process is a copy of the old

Both parent and child are running after the FORK

New process must get new program and data using EXEC

Parent can WAIT for child or continue

Processes terminate using EXIT

Interprocess Communication

Efficiency: Avoid having 2 copies of something by letting it be shared

Performance: Sharing results of parallel computations

Co-ordination: Synchronising the transfer of results, access to resources, etc.

Two primary reasons:

1. Coordination/Synchronisation

2. Data transfer

IPC Models (SGG)

1. Shared Memory – common memory location for both processes to read and write

2. Message Passing – kernel acts as a go-between to receive and send messages (the shared memory is managed by the kernel instead of by the processes)

But, really, it’s always just some kind of shared memory, and there are more than two models

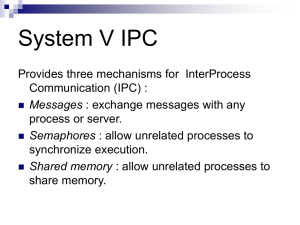

IPC Models

Shared memory

Message passing

Mailboxes & Ports

Remote Procedure Calls

Pipes

Interrupts (users to O/S)

Signals (limited information content)

Semaphore

Based on a railroad analogy

Semaphores (flags) determined which track a train could use so that only one train was on the track at a time

Used to create “mutual exclusion”

Usually requires hardware (CPU) support

Will look more carefully at semaphores in Chapter 6

Coordination and synchronisation is one of the most difficult tasks in multiprocess environments

Making a Semaphore

P1: Reads, sees it’s clear, decides to use

OS: Switches from P1 to P2 (time slice end)

P2: Reads, sees it’s clear, decides to use

P2: Writes, starts using

OS: Switches from P2 to P1 (time slice end)

P1: Writes, starts using

*** CRASH! P1 and P2 BOTH USING ***

Read/Write has to be atomic and not interruptible

Tricky to implement efficiently
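The usual fix is a CPU instruction that reads and writes in one indivisible step. C11's atomic_flag_test_and_set() exposes such an operation; this sketch uses it as a busy-waiting lock (a spinlock) protecting a counter that a parent and child share through an anonymous shared mapping. It is a swapped-in illustration, not the semaphore implementation covered in Chapter 6, and the helper names are hypothetical; spinning also wastes CPU time while waiting.

```c
#define _DEFAULT_SOURCE          /* for MAP_ANONYMOUS on glibc */
#include <stdatomic.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

struct shared {
    atomic_flag lock;   /* clear = track free, set = track taken */
    long counter;       /* the shared "track" both processes use */
};

static void acquire(atomic_flag *lock) {
    /* Test-and-set reads the old value AND sets the flag in one atomic step,
       so two processes can never both see "clear" and both proceed. */
    while (atomic_flag_test_and_set(lock))
        ;               /* spin until the holder clears it */
}

static void release(atomic_flag *lock) {
    atomic_flag_clear(lock);
}

/* Parent and child each add 1 to a shared counter `rounds` times under
   the lock. Returns the final counter value, or -1 on error. */
long locked_increments(long rounds) {
    struct shared *s = mmap(NULL, sizeof *s, PROT_READ | PROT_WRITE,
                            MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (s == MAP_FAILED)
        return -1;
    atomic_flag_clear(&s->lock);
    s->counter = 0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(s, sizeof *s);
        return -1;
    }
    for (long i = 0; i < rounds; i++) {  /* both processes run this loop */
        acquire(&s->lock);
        s->counter++;                    /* critical section: read + write */
        release(&s->lock);
    }
    if (pid == 0)
        _exit(0);                        /* child is done */

    waitpid(pid, NULL, 0);
    long result = s->counter;
    munmap(s, sizeof *s);
    return result;
}
```

Without acquire()/release(), the two processes can interleave exactly as in the P1/P2 trace above and lose increments.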

Shared Memory

O/S normally prevents one process from accessing the memory of another

Requires setup to be effective

Processes must somehow co-operate to share the memory effectively or else they can over-write each other’s data

Simultaneous writes cause a big mess!

Unix Shared Memory

A “key” is used to name the shared segment.

Any process with the key can access it.

The segment is created or located using shmget()

The segment is attached using shmat()

Generally “fast” for doing communication

Uses standard Unix permission system

Highly useful techniques are possible

Read/Write coordination is needed
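A minimal shmget()/shmat() round trip: the child writes a string into a shared segment and the parent reads it after the child exits. The sketch uses IPC_PRIVATE instead of a named key, which works here because the child inherits the attached segment through fork(); unrelated processes would have to agree on a key (for example one made with ftok()). Waiting for the child to exit stands in for real read/write coordination, and the helper name is hypothetical.

```c
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define SEG_SIZE 4096

/* Child writes msg into a System V shared-memory segment; the parent copies
   it into out (out_len bytes, at least 1). Returns 0 on success, -1 on error. */
int shm_roundtrip(const char *msg, char *out, size_t out_len) {
    int shmid = shmget(IPC_PRIVATE, SEG_SIZE, IPC_CREAT | 0600); /* new segment */
    if (shmid < 0)
        return -1;
    char *seg = shmat(shmid, NULL, 0);          /* attach into our address space */
    if (seg == (char *)-1) {
        shmctl(shmid, IPC_RMID, NULL);
        return -1;
    }
    seg[0] = '\0';

    pid_t pid = fork();
    if (pid == 0) {                             /* child: writer */
        strncpy(seg, msg, SEG_SIZE - 1);
        seg[SEG_SIZE - 1] = '\0';
        shmdt(seg);
        _exit(0);
    }
    int rc = -1;
    if (pid > 0 && waitpid(pid, NULL, 0) == pid) { /* crude coordination */
        strncpy(out, seg, out_len - 1);          /* parent: reader */
        out[out_len - 1] = '\0';
        rc = 0;
    }
    shmdt(seg);                                 /* detach */
    shmctl(shmid, IPC_RMID, NULL);              /* mark segment for deletion */
    return rc;
}
```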

Producer-Consumer Problem

Producer creates something

Consumer uses that product

Consumer must wait for it to be produced and fully put into memory

If the space fills up, the producer must wait for the consumer to make room for the next product

Requires careful communication and synchronisation
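When the shared space is a fixed-size circular buffer, "wait" boils down to two index checks. This single-process sketch shows only the full/empty logic (names such as `produce` and `consume` are hypothetical). It leaves one slot unused so that `in == out` can mean "empty", and it does no synchronisation, so real concurrent use still needs the coordination described above.

```c
#include <stdbool.h>

#define BUFFER_SIZE 8   /* holds at most BUFFER_SIZE - 1 items */

struct bounded_buffer {
    int items[BUFFER_SIZE];
    int in;    /* next free slot: producer writes here */
    int out;   /* next full slot: consumer reads here */
};

/* Producer side: returns false if the buffer is full (producer must wait). */
bool produce(struct bounded_buffer *b, int item) {
    if ((b->in + 1) % BUFFER_SIZE == b->out)
        return false;                       /* full */
    b->items[b->in] = item;
    b->in = (b->in + 1) % BUFFER_SIZE;
    return true;
}

/* Consumer side: returns false if the buffer is empty (consumer must wait). */
bool consume(struct bounded_buffer *b, int *item) {
    if (b->in == b->out)
        return false;                       /* empty */
    *item = b->items[b->out];
    b->out = (b->out + 1) % BUFFER_SIZE;
    return true;
}
```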

Message Passing

Uses the O/S to receive, buffer, and send a message

Uses the send() and receive() syscalls

What if a process tries to receive when nothing has been (or will be) sent?

Like shared memory, message passing requires careful coding and co-ordination by the two+ processes
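System V message queues are one concrete Unix form of kernel-buffered message passing: msgsnd() plays the role of send() and msgrcv() of receive(). In this sketch (helper name hypothetical) the child sends one message and the parent's msgrcv() blocks until it arrives.

```c
#include <string.h>
#include <sys/ipc.h>
#include <sys/msg.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define MSG_TEXT_MAX 64

/* Layout expected by msgsnd()/msgrcv(): a positive type, then the data. */
struct message {
    long mtype;
    char text[MSG_TEXT_MAX];
};

/* Child sends `text` through a kernel message queue; the parent receives it
   into out (out_len >= 1). Returns 0 on success, -1 on error. */
int send_receive(const char *text, char *out, size_t out_len) {
    int qid = msgget(IPC_PRIVATE, IPC_CREAT | 0600);   /* kernel-managed queue */
    if (qid < 0)
        return -1;

    pid_t pid = fork();
    if (pid == 0) {                                     /* child: sender */
        struct message m = {.mtype = 1};                /* text is zero-filled */
        strncpy(m.text, text, MSG_TEXT_MAX - 1);        /* so it stays terminated */
        _exit(msgsnd(qid, &m, sizeof m.text, 0) == 0 ? 0 : 1);
    }

    int rc = -1;
    if (pid > 0) {                                      /* parent: receiver */
        struct message m;
        /* msgtyp 0 = first message of any type; flags 0 = block until one arrives */
        if (msgrcv(qid, &m, sizeof m.text, 0, 0) >= 0) {
            strncpy(out, m.text, out_len - 1);
            out[out_len - 1] = '\0';
            rc = 0;
        }
        waitpid(pid, NULL, 0);
    }
    msgctl(qid, IPC_RMID, NULL);                        /* remove the queue */
    return rc;
}
```

For this API, the question above has a concrete answer: a plain msgrcv() on an empty queue blocks, while passing IPC_NOWAIT as the last argument makes it return -1 with errno set to ENOMSG.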

Direct Communication

Uses process names (PIDs)

Requires knowing the PID of everyone we communicate with

If a process terminates, must find its PID and delete it

Main drawback is maintaining the list of active PIDs we communicate with

Send goes to only one process, so multiple sends may be needed

Works great in many situations though

Indirect Communication

Uses mailboxes or ports

Mailbox is an O/S-maintained location where a message can be dropped off or picked up

All mailboxes have a name

Multiple processes can read from the mailbox

Mailbox can be owned by O/S or a process

Still have the problem of communicating the mailbox name/location, but at least now the O/S knows this information as well

Synchronicity

(Not the Police Album)

Message passing can be either:

1. Blocking / Synchronous

2. Non-Blocking / Asynchronous

Synchronous send() waits for receipt before returning

Synchronous receive() waits for the send to complete before returning

Asynchronous operations return even if no receipt occurs or if data is not available

When both are synchronous, we have a “rendezvous”
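A pipe can show the difference between a blocking and a non-blocking receive. Setting O_NONBLOCK with fcntl() makes read() return -1 with errno EAGAIN when no data is available, instead of waiting; without the flag, the same read() would block. The helper name `try_receive` is hypothetical.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Asynchronous receive: returns bytes read, 0 if every writer has closed
   the pipe, or -1 with errno == EAGAIN if nothing is available right now. */
ssize_t try_receive(int fd, void *buf, size_t len) {
    int flags = fcntl(fd, F_GETFL);
    if (flags < 0 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0)
        return -1;                      /* could not switch to non-blocking */
    return read(fd, buf, len);
}
```

Note that try_receive() leaves O_NONBLOCK set on the descriptor, so later reads on it are non-blocking too.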

Buffering

1. Zero Capacity: There is no buffer, so the sender must block until the receiver takes the message (communication must be synchronous)

2. Bounded Capacity: The buffer is fixed size and the sender blocks when the buffer fills

3. “Infinite” Capacity: O/S allocates more space to buffer as required
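A Unix pipe is a bounded buffer. This sketch fills a pipe that nobody reads, using a non-blocking write end so that write() reports EAGAIN when the buffer is full instead of blocking the writer; the byte count is the pipe's capacity. The helper name is hypothetical.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Keep writing into a pipe that is never read until the kernel says the
   buffer is full. Returns the number of bytes buffered, or -1 on error. */
long pipe_capacity(void) {
    int fds[2];
    if (pipe(fds) < 0)
        return -1;
    int flags = fcntl(fds[1], F_GETFL);
    if (flags < 0 || fcntl(fds[1], F_SETFL, flags | O_NONBLOCK) < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }

    char chunk[512] = {0};
    long total = 0;
    for (;;) {
        ssize_t n = write(fds[1], chunk, sizeof chunk);
        if (n > 0) {
            total += n;
        } else if (n < 0 && errno == EAGAIN) {
            break;          /* full: a blocking writer would sleep here */
        } else {
            total = -1;     /* unexpected error */
            break;
        }
    }
    close(fds[0]);
    close(fds[1]);
    return total;
}
```

The Linux pipe(7) manual page gives a default capacity of 65,536 bytes on systems with 4096-byte pages; other systems differ, so treat any specific number as platform-dependent.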

Remote Procedure Call (RPC)

A protocol designed so that a process on one machine can have computation performed by calling a procedure (function) that is executed by a different process on another machine

Issues with endianness, data encoding

Must define a common data representation

Issues with synchronisation of processes and returning of results
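One simple common data representation is to send multi-byte integers in big-endian ("network") byte order and decode them byte by byte, so both machines agree no matter what CPU they run on. This sketch assumes 32-bit unsigned values and hypothetical helper names; the standard htonl()/ntohl() functions from `<arpa/inet.h>` do the same conversion for values kept in memory.

```c
#include <arpa/inet.h>
#include <stdint.h>

/* Encode a 32-bit value as 4 bytes, most significant byte first,
   independent of the host CPU's endianness. */
void encode_u32_be(uint32_t v, unsigned char out[4]) {
    out[0] = (unsigned char)(v >> 24);
    out[1] = (unsigned char)(v >> 16);
    out[2] = (unsigned char)(v >> 8);
    out[3] = (unsigned char)v;
}

/* Decode 4 big-endian bytes back into a host-order 32-bit value. */
uint32_t decode_u32_be(const unsigned char in[4]) {
    return ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
           ((uint32_t)in[2] << 8)  |  (uint32_t)in[3];
}
```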

Sockets

Associated with Internet-based IPC

A socket is an IP address (a machine) and a specific port on that machine

We normally don’t see them because WWW services tend to have a default port, e.g., Port 80 for HTTP

Can use 127.0.0.1 (loopback) to test things, e.g., client and server on the same machine

http://en.wikipedia.org/wiki/List_of_TCP_and_UDP_port_numbers
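A loopback client and server in one program: the parent creates a TCP socket bound to 127.0.0.1, lets the O/S choose a free port (port 0), and accepts a connection; the forked child connects to that address and sends a message. This is a minimal POSIX sketch with little error handling (the parent would wait forever in accept() if the child failed to connect), and the helper name is hypothetical.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child (client) sends msg over TCP to the parent (server) on 127.0.0.1.
   The parent stores what it received in out (out_len >= 1).
   Returns the number of bytes received, or -1 on error. */
ssize_t loopback_send(const char *msg, char *out, size_t out_len) {
    int srv = socket(AF_INET, SOCK_STREAM, 0);
    if (srv < 0)
        return -1;

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);   /* 127.0.0.1 */
    addr.sin_port = htons(0);                        /* 0 = O/S picks a free port */
    socklen_t len = sizeof addr;
    if (bind(srv, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(srv, 1) < 0 ||
        getsockname(srv, (struct sockaddr *)&addr, &len) < 0) { /* learn the port */
        close(srv);
        return -1;
    }

    pid_t pid = fork();
    if (pid < 0) {
        close(srv);
        return -1;
    }
    if (pid == 0) {                                  /* child = client */
        close(srv);
        int c = socket(AF_INET, SOCK_STREAM, 0);
        if (c < 0 || connect(c, (struct sockaddr *)&addr, sizeof addr) < 0)
            _exit(1);
        ssize_t w = write(c, msg, strlen(msg));
        close(c);                                    /* server sees end of data */
        _exit(w < 0 ? 1 : 0);
    }

    ssize_t result = -1;                             /* parent = server */
    int conn = accept(srv, NULL, NULL);
    if (conn >= 0) {
        size_t total = 0;
        ssize_t n;
        while (total < out_len - 1 &&
               (n = read(conn, out + total, out_len - 1 - total)) > 0)
            total += (size_t)n;
        out[total] = '\0';
        result = (ssize_t)total;
        close(conn);
    }
    close(srv);
    waitpid(pid, NULL, 0);
    return result;
}
```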

Pipes

A connection (with buffer) for sending data

Data of any form can be sent

Best for one-directional communication

Can be used two ways if emptied between uses

Very common in Unix, considered the most basic form of IPC

Named pipes (called FIFOs) are file system entities in Unix
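The classic parent/child use of an ordinary pipe: pipe() returns a read end and a write end, fork() gives both processes copies of each, and each side closes the end it doesn't use. The helper name is hypothetical and the sketch assumes a POSIX system.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child writes msg into a pipe; the parent reads it into out (out_len >= 1).
   Returns the number of bytes read, or -1 on error. */
ssize_t pipe_message(const char *msg, char *out, size_t out_len) {
    int fds[2];                        /* fds[0] = read end, fds[1] = write end */
    if (pipe(fds) < 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {                    /* child: writer */
        close(fds[0]);                 /* close unused read end */
        ssize_t w = write(fds[1], msg, strlen(msg));
        close(fds[1]);                 /* signals end-of-file to the reader */
        _exit(w < 0 ? 1 : 0);
    }

    close(fds[1]);                     /* parent: close unused write end */
    size_t total = 0;
    ssize_t n;
    while (total < out_len - 1 &&
           (n = read(fds[0], out + total, out_len - 1 - total)) > 0)
        total += (size_t)n;
    out[total] = '\0';
    close(fds[0]);
    waitpid(pid, NULL, 0);
    return (ssize_t)total;
}
```

Closing the unused write end in the parent matters: read() only returns 0 (end of file) once every copy of the write end is closed. A named pipe works the same way after being created with mkfifo(path, mode) and opened by name with open().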

To Do:

Complete this week’s lab on chmod()

Finish reading Chapter 3 (pgs 101-141; this lecture) if you haven’t already