Regression - University of Toronto

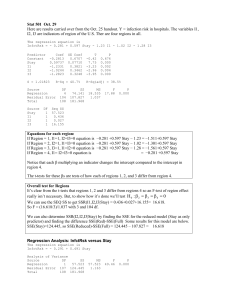

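Simple Linear Regression (SLR)

CHE1147, Saed Sayad, University of Toronto

Types of Correlation

[Figure: three scatter plots showing positive correlation, negative correlation, and no correlation.]

Simple linear regression describes the linear relationship between a predictor (independent) variable X, plotted on the x-axis, and a response (dependent) variable Y, plotted on the y-axis.

$$Y = \beta_0 + \beta_1 X$$

[Figure: a straight line with intercept $\beta_0$; the slope $\beta_1$ is the change in Y per 1.0 unit of X.]

Real observations scatter around the line, so an error term $\varepsilon$ is added:

$$Y = \beta_0 + \beta_1 X + \varepsilon$$

Fitting data to a linear model

$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$$

where $\beta_0$ is the intercept, $\beta_1$ is the slope, and $\varepsilon_i$ are the residuals.

How to fit data to a linear model? The Ordinary Least Squares (OLS) method.

Least Squares Regression

Model line: $\hat{Y} = \beta_0 + \beta_1 X$

Residual: $\varepsilon = Y - \hat{Y}$

Sum of squares of residuals: $\sum (Y - \hat{Y})^2$

We must find the values of $\beta_0$ and $\beta_1$ that minimise $\sum (Y - \hat{Y})^2$.

Regression Coefficients

$$b_1 = \frac{S_{xy}}{S_{xx}} = \frac{\sigma_{xy}}{\sigma_x^2}, \qquad b_0 = \bar{Y} - b_1 \bar{X}$$

Required Statistics

$$n = \text{number of observations}, \qquad \bar{X} = \frac{\sum X}{n}, \qquad \bar{Y} = \frac{\sum Y}{n}$$

Descriptive Statistics

$$S_{xx} = \sum_{i=1}^{n} (X_i - \bar{X})^2, \qquad \mathrm{Var}(X) = \frac{S_{xx}}{n-1}$$

$$S_{yy} = \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = SST, \qquad \mathrm{Var}(Y) = \frac{S_{yy}}{n-1}$$

$$S_{xy} = \sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y}), \qquad \mathrm{Covar}(X, Y) = \frac{S_{xy}}{n-1}$$

Regression Statistics

$$SST = \sum (Y - \bar{Y})^2, \qquad SSR = \sum (\hat{Y} - \bar{Y})^2, \qquad SSE = \sum (Y - \hat{Y})^2$$

[Figure: Venn diagram of Y and X1. SST is the variance to be explained by the predictors, SSR is the variance explained by X1, and SSE is the variance not explained by X1.]

$$SST = SSR + SSE$$

The coefficient of determination is used to judge the adequacy of the regression model:

$$R^2 = \frac{SSR}{SST}$$

Correlation measures the strength of the linear association between two variables:

$$R = \frac{S_{xy}}{\sqrt{S_{xx} S_{yy}}} = \frac{\sigma_{xy}}{\sigma_x \sigma_y}, \qquad |R| = \sqrt{R^2}$$

Standard error for the regression model:

$$S_e^2 = \hat{\sigma}_e^2 = \frac{SSE}{n-2} = MSE, \qquad SSE = \sum (Y - \hat{Y})^2$$

ANOVA

$$H_0: \beta_1 = 0, \qquad H_A: \beta_1 \neq 0$$

| Source | df | SS | MS | F | P-value |
|---|---|---|---|---|---|
| Regression | 1 | SSR | SSR / df | MSR / MSE | P(F) |
| Residual | n-2 | SSE | SSE / df | | |
| Total | n-1 | SST | | | |

If $P(F) < \alpha$, the regression model predicts Y significantly better than simply predicting the mean of Y.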
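The least-squares quantities for simple linear regression (slope, intercept, sums of squares, R², MSE, and the ANOVA F statistic) can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the course: the function name `fit_slr` and the five data points are made up, and the P-value is left out because it needs an F distribution that the standard library does not provide.

```python
def fit_slr(x, y):
    """Fit Y = b0 + b1*X by ordinary least squares and return summary statistics."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n

    # Sums of squares and cross-products about the means.
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b1 = s_xy / s_xx            # slope
    b0 = y_bar - b1 * x_bar     # intercept
    y_hat = [b0 + b1 * xi for xi in x]

    sst = sum((yi - y_bar) ** 2 for yi in y)                # total
    ssr = sum((yh - y_bar) ** 2 for yh in y_hat)            # explained
    sse = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))   # unexplained

    mse = sse / (n - 2)         # S_e^2, residual df = n - 2
    msr = ssr / 1               # regression df = 1
    return {
        "b0": b0, "b1": b1,
        "sst": sst, "ssr": ssr, "sse": sse,
        "r2": ssr / sst, "mse": mse, "f": msr / mse,
    }


# Made-up illustrative data (assumption, not from the slides).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.2, 4.1, 6.2, 7.9, 10.1]
fit = fit_slr(x, y)
print(fit)
```

To finish the ANOVA test, the returned `f` value would be compared with a tabulated F critical value for 1 and n-2 degrees of freedom.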
The ANOVA F-test assesses the significance of the regression as a whole. Individual coefficients are tested with t-tests.

Hypothesis Tests for Regression Coefficients

$$H_0: \beta_i = 0, \qquad H_1: \beta_i \neq 0$$

$$t_{(n-k-1)} = \frac{b_i - \beta_i}{S_{b_i}}$$

Here k is the number of predictors, so for simple linear regression k = 1 and the test has n-2 degrees of freedom.

For the slope:

$$H_0: \beta_1 = 0, \qquad H_A: \beta_1 \neq 0$$

$$t_{(n-k-1)} = \frac{b_1 - \beta_1}{S_e(b_1)} = \frac{b_1 - \beta_1}{\sqrt{S_e^2 / S_{xx}}}$$

Confidence interval for the slope $\beta_1$:

$$b_1 - t_{\alpha/2,(n-k-1)} \sqrt{\frac{S_e^2}{S_{xx}}} \;\le\; \beta_1 \;\le\; b_1 + t_{\alpha/2,(n-k-1)} \sqrt{\frac{S_e^2}{S_{xx}}}$$

For the intercept:

$$H_0: \beta_0 = 0, \qquad H_A: \beta_0 \neq 0$$

$$t_{(n-k-1)} = \frac{b_0 - \beta_0}{S_e(b_0)} = \frac{b_0 - \beta_0}{\sqrt{S_e^2 \left( \frac{1}{n} + \frac{\bar{X}^2}{S_{xx}} \right)}}$$

Confidence interval for the intercept $\beta_0$:

$$b_0 - t_{\alpha/2,(n-k-1)} \sqrt{S_e^2 \left( \frac{1}{n} + \frac{\bar{X}^2}{S_{xx}} \right)} \;\le\; \beta_0 \;\le\; b_0 + t_{\alpha/2,(n-k-1)} \sqrt{S_e^2 \left( \frac{1}{n} + \frac{\bar{X}^2}{S_{xx}} \right)}$$

Hypothesis Test on the Correlation Coefficient

$$H_0: \rho = 0, \qquad H_A: \rho \neq 0$$

$$T_0 = \frac{R \sqrt{n-2}}{\sqrt{1 - R^2}}$$

We reject the null hypothesis if $|t_0| > t_{\alpha/2,\,n-2}$.

Diagnostic Tests For Regressions

Plots of the residuals $\varepsilon_i$ against $\hat{Y}_i$ show whether the linear model is adequate:

- [Figure: expected distribution of residuals for a linear model with normally distributed residuals (errors).]
- [Figure: residuals for a non-linear fit.]
- [Figure: residuals for a quadratic function or polynomial.]
- [Figure: residuals that are not homogeneous (increasing in variance).]

Regression: important points

1. Ensure that the range of values sampled for the predictor variable is large enough to capture the full range of responses by the response variable.
2. Ensure that the distribution of predictor values is approximately uniform within the sampled range.

Assumptions of Regression

1. The linear model correctly describes the functional relationship between X and Y.
2. The X variable is measured without error.
3. For any given value of X, the sampled Y values are independent.
4. Residuals (errors) are normally distributed.
5. Variances are constant along the regression line.
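The t-tests and confidence intervals for the slope and intercept, and the t-test on the correlation coefficient, can be sketched as follows. This is an illustrative sketch under stated assumptions: `slr_inference` is a hypothetical helper, the data are made up, and the critical value 3.182 is the tabulated two-sided 5% value of Student's t for 3 degrees of freedom, passed in by hand because the standard library has no t distribution.

```python
import math


def slr_inference(x, y, t_crit):
    """t statistics and confidence intervals for a simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    s_e2 = sse / (n - 2)                      # S_e^2 = MSE

    # Standard errors of the slope and intercept.
    se_b1 = math.sqrt(s_e2 / s_xx)
    se_b0 = math.sqrt(s_e2 * (1 / n + x_bar ** 2 / s_xx))

    r = s_xy / math.sqrt(s_xx * s_yy)         # correlation coefficient
    return {
        "t_b1": b1 / se_b1,                   # tests H0: beta1 = 0
        "t_b0": b0 / se_b0,                   # tests H0: beta0 = 0
        "ci_b1": (b1 - t_crit * se_b1, b1 + t_crit * se_b1),
        "ci_b0": (b0 - t_crit * se_b0, b0 + t_crit * se_b0),
        "t_corr": r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2),
    }


# Made-up illustrative data (assumption); n = 5, so df = n - 2 = 3.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.2, 4.1, 6.2, 7.9, 10.1]
T_CRIT = 3.182  # tabulated t for alpha/2 = 0.025 and 3 degrees of freedom
res = slr_inference(x, y, T_CRIT)
print(res)
```

On this made-up data the slope's t statistic exceeds the critical value while the intercept's does not, so the intercept's interval includes zero. In simple linear regression the t statistic for the slope and the one for the correlation coefficient coincide, which makes a convenient sanity check.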
Multiple Linear Regression (MLR)

The linear model with a single predictor variable X can easily be extended to two or more predictor variables.

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \varepsilon$$

where $\beta_0$ is the intercept, $\beta_1, \dots, \beta_p$ are the partial regression coefficients, and $\varepsilon$ are the residuals. The statistics below write k for the number of predictors p.

[Figure: Venn diagram of Y, X1, and X2 showing the common variance explained by X1 and X2, the unique variance explained by X1, the unique variance explained by X2, and the variance not explained by X1 and X2.]

[Figure: a "good" model, with X1, X2, and Y.]

Partial regression coefficients (slopes): the regression coefficient of a predictor X after controlling for the influence of the other predictors on both X and Y, that is, holding all other predictors constant.

The matrix algebra of Ordinary Least Squares

Intercept and slopes:

$$\hat{\beta} = (X'X)^{-1} X'Y$$

Predicted values:

$$\hat{Y} = X \hat{\beta}$$

Residuals:

$$\varepsilon = Y - \hat{Y}$$

Regression Statistics

How good is our model?

$$SST = \sum (Y - \bar{Y})^2, \qquad SSR = \sum (\hat{Y} - \bar{Y})^2, \qquad SSE = \sum (Y - \hat{Y})^2$$

The coefficient of determination is used to judge the adequacy of the regression model:

$$R^2 = \frac{SSR}{SST}$$

Adjusted R², with n the sample size and k the number of independent variables:

$$R^2_{adj} = 1 - (1 - R^2) \frac{n-1}{n-k-1}$$

R² never decreases when a predictor is added. Adjusted R² penalises the extra predictors and so corrects this upward bias.

Standard error for the regression model:

$$S_e^2 = \hat{\sigma}_e^2 = \frac{SSE}{n-k-1} = MSE, \qquad SSE = \sum (Y - \hat{Y})^2$$

ANOVA

$$H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0, \qquad H_A: \beta_i \neq 0 \text{ for at least one } i$$

| Source | df | SS | MS | F | P-value |
|---|---|---|---|---|---|
| Regression | k | SSR | SSR / df | MSR / MSE | P(F) |
| Residual | n-k-1 | SSE | SSE / df | | |
| Total | n-1 | SST | | | |

If $P(F) < \alpha$, the regression model predicts Y significantly better than simply predicting the mean of Y.
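The matrix form of ordinary least squares and the regression statistics for multiple predictors can be sketched with NumPy. This is a minimal sketch, not the course's implementation: `fit_mlr` and the eight observations are made up for illustration, and the code solves the normal equations with `np.linalg.solve` instead of forming the inverse of X'X explicitly, a substitution made for numerical stability.

```python
import numpy as np


def fit_mlr(X, y):
    """OLS fit of y on the columns of X (an intercept column is added here)."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])

    # Solve (X'X) beta = X'Y; equivalent to beta = (X'X)^-1 X'Y.
    beta = np.linalg.solve(design.T @ design, design.T @ y)

    y_hat = design @ beta
    sst = np.sum((y - y.mean()) ** 2)
    ssr = np.sum((y_hat - y.mean()) ** 2)
    sse = np.sum((y - y_hat) ** 2)

    r2 = ssr / sst
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
    mse = sse / (n - k - 1)          # S_e^2
    f_stat = (ssr / k) / mse         # MSR / MSE
    return {"beta": beta, "sst": sst, "ssr": ssr, "sse": sse,
            "r2": r2, "adj_r2": adj_r2, "mse": mse, "f": f_stat}


# Made-up illustrative data (assumption): n = 8 observations, k = 2 predictors.
X = np.array([[1, 2], [2, 1], [3, 4], [4, 3],
              [5, 6], [6, 5], [7, 8], [8, 7]], dtype=float)
y = np.array([4.1, 5.4, 9.2, 10.3, 14.1, 15.6, 18.8, 20.6])

fit = fit_mlr(X, y)
print(fit["beta"], fit["r2"], fit["adj_r2"], fit["f"])
```

The first entry of `beta` is the intercept and the remaining entries are the partial regression coefficients, in the column order of `X`.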
The ANOVA F-test assesses the significance of the regression as a whole. Individual coefficients are tested with t-tests.

Hypothesis Tests for Regression Coefficients

$$H_0: \beta_i = 0, \qquad H_A: \beta_i \neq 0$$

$$t_{(n-k-1)} = \frac{b_i - \beta_i}{S_e(b_i)} = \frac{b_i - \beta_i}{\sqrt{S_e^2 C_{ii}}}$$

where $C_{ii}$ is the i-th diagonal element of $(X'X)^{-1}$, the matrix that appears in $\hat{\beta} = (X'X)^{-1} X'Y$.

Confidence interval for $\beta_i$:

$$b_i - t_{\alpha/2,(n-k-1)} \sqrt{S_e^2 C_{ii}} \;\le\; \beta_i \;\le\; b_i + t_{\alpha/2,(n-k-1)} \sqrt{S_e^2 C_{ii}}$$

Diagnostic Tests For Regressions

[Figure: residual plot of the residuals $\varepsilon_i$ against $X_i$, showing the expected distribution of residuals for a linear model with normally distributed residuals (errors).]

Standardized residuals:

$$d_i = \frac{e_i}{\sqrt{S_e^2}}$$

[Figure: plot of the standardized residuals.]

Model Selection

The aim is to avoid predictors (Xs) that do not contribute significantly to model prediction.

- Forward selection: the "best" predictor variables are entered, one by one.
- Backward elimination: the "worst" predictor variables are eliminated, one by one.

[Figures: Forward Selection; Backward Elimination.]

Model Selection: The General Case

$$H_0: \beta_{q+1} = \beta_{q+2} = \dots = \beta_k = 0, \qquad H_1: \text{at least one is not zero}$$

$$F = \frac{\left[ SSE(x_1, x_2, \dots, x_q) - SSE(x_1, x_2, \dots, x_q, x_{q+1}, \dots, x_k) \right] / (k - q)}{SSE(x_1, x_2, \dots, x_q, x_{q+1}, \dots, x_k) / (n - k - 1)}$$

Reject $H_0$ if $F > F_{\alpha,\,k-q,\,n-k-1}$.

Multicollinearity

Multicollinearity is the degree of correlation between the Xs. A high degree of multicollinearity produces unacceptable uncertainty (large variance) in the regression coefficient estimates, that is, large sampling variation. The consequences are:

- Imprecise estimates of the slopes; even the signs of the coefficients may be misleading.
- t-tests that fail to reveal significant factors.

[Figure: scatter plot.]

If the F-test for significance of regression is significant but the tests on the individual regression coefficients are not, multicollinearity may be present.

Variance Inflation Factors (VIFs) are very useful measures of multicollinearity. If any VIF exceeds 5, multicollinearity is a problem.
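A variance inflation factor can be computed for each predictor by regressing it on the remaining predictors and taking 1 / (1 - R²) of that auxiliary regression. The sketch below assumes NumPy; the function name `vif` and the simulated predictors are illustrative only, with the second column built as a noisy copy of the first so that the two should show strongly inflated values.

```python
import numpy as np


def vif(X):
    """VIF per column of X: 1 / (1 - R_i^2), R_i^2 from regressing X_i on the rest."""
    n, k = X.shape
    out = []
    for i in range(k):
        target = X[:, i]
        # Design matrix: intercept plus every predictor except column i.
        others = np.column_stack([np.ones(n), np.delete(X, i, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out.append(1.0 / (1.0 - r2))
    return np.array(out)


# Simulated predictors (assumption): x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(0)
x1 = rng.normal(size=30)
x2 = x1 + 0.1 * rng.normal(size=30)
x3 = rng.normal(size=30)
X = np.column_stack([x1, x2, x3])

vifs = vif(X)
print(vifs)
```

The same values can be read off the diagonal of the inverse of the predictors' correlation matrix, which is a cheaper route when there are many predictors.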
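$$VIF(\beta_i) = C_{ii} = \frac{1}{1 - R_i^2}$$

where $R_i^2$ is the coefficient of determination from regressing $X_i$ on the other predictors, and $C_{ii}$ here is the i-th diagonal element of the inverse of the predictors' correlation matrix.

Model Evaluation

Prediction Error Sum of Squares (PRESS, leave-one-out):

$$PRESS = \sum_{i=1}^{n} \left( y_i - \hat{y}_{(i)} \right)^2$$

where $\hat{y}_{(i)}$ is the prediction for observation i from a model fitted without that observation.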
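PRESS can be computed directly from its definition by refitting the model n times, each time leaving one observation out and predicting it from the rest. The following is a minimal sketch assuming NumPy; the function name `press` and the simulated data are illustrative and not taken from the slides.

```python
import numpy as np


def press(X, y):
    """Leave-one-out prediction error sum of squares, by refitting n times."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])   # add the intercept column
    total = 0.0
    for i in range(n):
        keep = np.arange(n) != i                # drop observation i
        coef, *_ = np.linalg.lstsq(design[keep], y[keep], rcond=None)
        y_pred_i = design[i] @ coef             # predict the held-out point
        total += (y[i] - y_pred_i) ** 2
    return float(total)


# Simulated data (assumption): two predictors and Gaussian noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(25, 2))
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(scale=0.5, size=25)

print(press(X, y))
```

For ordinary least squares the same number can be obtained from a single fit by dividing each residual by one minus its leverage, which avoids the loop; the explicit version is shown because it mirrors the definition. PRESS is never smaller than SSE, since each point is predicted by a model that did not see it.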