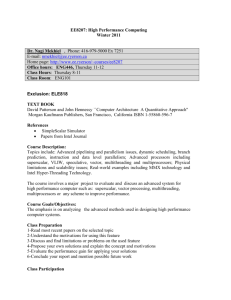

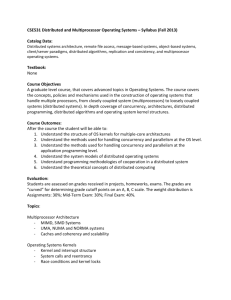

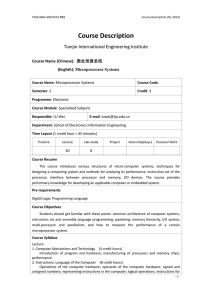

DEPARTMENT OF COMPUTER SCIENCE

SIR SYED UNIVERSITY OF ENGINEERING & TECHNOLOGY, KARACHI

COURSE LECTURE PLAN:

Course Title: Parallel Computing

Course Code: CS405

Credit Hours: 2 + 1

Instructor: Engr. Muhammad Nadeem

Consultation Time: Tuesday, 9:00 am to 4:00 pm

Semester: BS (CS) – VII (Sec A, B & C)

Contact e-mail: mnadeem79@hotmail.com

Course Website: http://Zjsolution/Courses/CS_PrallelComp_2012/

TEXT BOOK: Ananth Grama, George Karypis, Vipin Kumar, and Anshul Gupta. Introduction to Parallel Computing (2nd Edition), Addison Wesley, 2003.

REFERENCE BOOK: Fayez Gebali. Algorithms and Parallel Computing (1st Edition), Wiley, 2011.

COURSE DESCRIPTION: Parallel computing has become mainstream and very affordable today, mainly because hardware costs have come down rapidly. Processing voluminous datasets is highly computation-intensive, and parallel computing has been fruitfully employed in numerous application domains to process large datasets and handle other time-consuming operations of interest. The goal of CS405 is to introduce you to the foundations of parallel computing, including the principles of parallel algorithm design, analytical modeling of parallel programs, and parallel computer architectures. A key aim of the course is for you to gain hands-on knowledge of the fundamentals of parallel programming by writing efficient parallel programs in some of the programming models that you learn in class.

GRADING POLICY: The final grade for the class will be calculated as follows:

| Distribution | Marks |
|---|---|
| Assignment | 05 |
| Lab | 20 |
| Mid Term Examinations | 15 |
| Final Exams | 60 |
| Total | 100 |

THEORY

| Week No. | Lecture No. and Name | Lecture Details |
|---|---|---|
| 1 | 1. Introduction to Parallel Computing; 2. Parallel Computer Architectures | What is parallel computing? Why do we need parallel computing? Why is parallel computing more difficult? A few parallel computing metrics; Parallel Computing in Science and Engineering; Parallel Computing in Industrial and Commercial Applications; Examples of Parallel Computers; Flynn's Classical Taxonomy; Single Instruction, Single Data (SISD); Single Instruction, Multiple Data (SIMD); Multiple Instruction, Single Data (MISD); Multiple Instruction, Multiple Data (MIMD); Classification for MIMD Computers |
| 2 | 3. Concepts and Terminology of Parallel Computing; 4. Parallel Computing Metrics | Supercomputing / High Performance Computing; CPU / Socket / Processor / Core; Task; Symmetric Multi-Processor (SMP); Synchronization; Granularity; Parallel Overhead; Massively Parallel; Multiprogramming; Multiprocessing; Multitasking; Simultaneous Multithreading (SMT); Amdahl's Law; Gustafson's Law |
| 3 | 5. Intro to Pipelining; 6. Levels of Parallelism | Pipelining; Synchronous Pipelining; Asynchronous Pipelining; Types of Pipelines; Instruction Level Parallelism; Memory Level Parallelism; Thread Level Parallelism; Process Level Parallelism; Message Passing Parallelism |
| 4 | 7. Interconnection Networks - I; 8. Interconnection Networks - II | Interconnection Networks; Types of Interconnection Networks; Interconnection Media Types; Bus; Crossbar Network; Multistage Networks; Hypercube Networks; Mesh Network; Tree Networks; Butterfly; Pyramid; Star Network |
| 5 | 9. Multiprocessor Computing; 10. Multicore Computing | Multiprocessors; Multiprocessor Memory Types; Centralized Multiprocessor; Distributed Multiprocessor; General Context of Multiprocessors; Single-core Computer; Single-core CPU Chip; Multi-core Architectures; Multi-core CPU Chip; What applications benefit from multi-core? |
| 6 | 11. Multicomputer; 12. Cache Coherence in Parallel Computing | Multicomputer; Asymmetrical Multicomputer; Symmetrical Multicomputer; ParPar Cluster, A Mixed Model; Commodity Cluster; Network of Workstations; Cache Coherence; Solutions for Cache Coherence; Invalidation Protocol with Snooping; Update Protocol; Invalidation vs. Update Protocol |
| 7 | 13. Parallel Computer Memory Architectures; 14. Multithreading | Shared Memory; Distributed Memory; Hybrid Distributed-Shared Memory; Multithreading; Thread and Process Level Parallelism; Process vs. Thread; How can a thread be created? User Thread; Benefits of Threading; Threads in Applications; A Few Thread Examples; Reflection on Threading |
| 8 | Revision | Revision of Lectures 1-7; Revision of Lectures 8-16 |
| | MID TERM | |
| 9 | 15. Simultaneous Multithreading; 16. Symmetric Multiprocessors | Contemporary Forms of Parallelism; Simultaneous Multithreading; Multiprocessor vs. SMT; SMT Architecture; Parallelism on an SMT Processor; Symmetric Multiprocessors; Symmetric Multiprocessing; Typical SMP Organization; Multiprocessor OS Design Considerations; Example of Symmetric Multiprocessors |
| 10 | 17. GPU Computing; 18. Distributed Computing | What is GPU Computing? Comparison of a GPU and a CPU; GPU Methods; GPU Data Structure; GPU Programming; Distributed System; A Typical View of Distributed Environment; Goal; Characteristics; Architecture; Application; Advantages; Disadvantages; Issues and Challenges |
| 11 | 19. Virtualization; 20. Grid Computing | Introduction to Virtualization; Server Virtualization Approaches; Virtual Machines; Virtual Machine Monitor (VMM); Hypervisor; Introduction to Grid Computing; Criteria for a Grid; Benefits; Grid Applications; Grid Topologies; Methods of Grid Computing; A Typical View of Grid Environment |
| 12 | 21. Cloud Computing; 22. Mobile and Ubiquitous Computing | What is Cloud Computing? Cloud Architecture; Common Characteristics; Essential Characteristics; Cloud Service Models; Different Cloud Computing Layers; Cloud Computing Service Layers; Some Commercial Cloud Offerings; Advantages; Disadvantages; What is Pervasive or Ubiquitous Computing? Mobile Computing vs. Ubiquitous Computing; Ubiquitous Computing Example |
| 13 | 23. Introduction to Parallel Algorithms - I; 24. Introduction to Parallel Algorithms - II | What is a Parallel Algorithm? The PRAM Model; Memory Protocols; Parallel Search Algorithm; Merge Sort; Merge Sort Analysis; Parallel Design and Dynamic Programming; Current Applications that Utilize Parallel Architectures |
| 14 | 25. MapReduce Algorithm; 26. Rules for Parallel Programming | What is MapReduce? A Brief History of MapReduce; MapReduce Algorithm Analysis; Think parallel; Program using abstraction; Program in tasks; Design with the option to turn concurrency off; Avoid using locks; Use tools and libraries designed to help with concurrency; Use scalable memory allocators; Design to scale through increased workloads |
| 15 | 27. Parallelism and the Programmer; 28. Software Patterns in Parallel Programming | Types of Parallelism; Established Standards; Design Patterns; The Finding Concurrency Pattern; Task Decomposition Pattern; Data Decomposition Pattern; Group Task Pattern; Order Task Pattern |
| 16 | Revision | Revision of Lectures 15-21; Revision of Lectures 22-28 |

LABS:

| Lab No. | Lab Name |
|---|---|
| Lab 1 | Delegate |
| Lab 2 | Event |
| Lab 3 | LINQ - I |
| Lab 4 | LINQ - II |
| Lab 5 | Multithreading I |
| Lab 6 | Multithreading II |
| Lab 7 | Asynchronous Programming |
| Lab 8 | Task Parallelism |
| Lab 9 | Data Parallelism |
| Lab 10 | Parallel LINQ |
| | Lab Book Submission + VIVA |
| | Project Submission + VIVA |