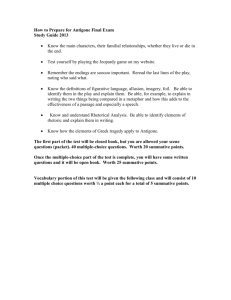

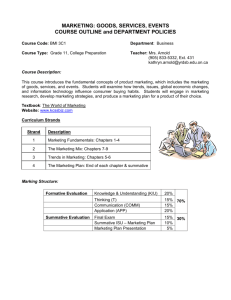

Elements of the Student Score Report


Assessment Update: June 2015

Join the conversation: todaysmeet.com/SB AC

Reconceptualizing assessment without at the same time reconceptualizing instruction will have little benefit. (Pullin, 2008)

Required State Data for Each of Eight State

February 2015 Draft LCAP Evaluation Rubric

Implementation Timeline for New State Assessments and Accountability
• English Language Arts and Mathematics
• Science and History / Social Studies
• English Language Development

https://goo.gl/7SlA20

Agenda
• Principles of Scoring
• Understanding the Reports
• Using the Online Reporting System
• Overview of the Reporting Timeline
• Interpreting, Using, and Communicating Results

2015 Post-Test Workshop: Reporting Summative Assessment Results

Principles of Scoring

Computer Adaptive Testing: Philosophy
“Computer adaptive testing (CAT) holds the potential for more customized assessment with test questions that are tailored to the students’ ability levels, and identification of students’ skills and weaknesses using fewer questions and requiring less testing time.”
Shorr, P. W. (2002, Spring). A look at tools for assessment and accountability. Administrator Magazine.

How Does a CAT Work?
• Each student is administered a set of test questions that is appropriately challenging.
• The student’s performance on the test questions determines whether subsequent questions are harder or easier.
• The test adapts to the student item by item, not in stages.
• Fewer test questions are needed than on a fixed form to obtain precise estimates of students’ ability.
• The test continues until the test content outlined in the blueprint is covered.

How Does a CAT Work?
Expanded Example: A Student of Average Ability
[Figure: ability estimate (Very Low, Low, Med-Low, Medium, Med-High, High, Very High) plotted against test questions 1–10; answers (R/W): R, R, W, R, W, W, W, W, R, R]

Computer Adaptive Testing: Behind the Scenes
• Requires a large pool of test questions statistically calibrated on a common scale with ability estimates, e.g., from the Field Test.
• Uses an algorithm to select questions based on a student’s responses, to score responses, and to iteratively estimate the student’s performance.
• Final scale scores are based on item pattern scoring.

Scoring the CAT
• As a student progresses through the test, his or her pattern of responses is tracked and revised estimates of the student’s ability are calculated.
• Successive test questions are selected to increase the precision of the achievement estimate, given the current estimate of his or her ability.
• Resulting scores from the CAT portion of the test are based on the specific test questions selected as a result of the student’s responses, NOT on the sum of the number answered correctly.
• The test question pools for a particular grade level are designed to include an enhanced pool of test questions that are more or less difficult for that grade but still match the test blueprint for that grade.

Human Scored Items in the CAT
• Some items administered on the Smarter Balanced adaptive test component require human scoring.
• The adaptive algorithm will select these items based on performance on prior items.
• Since these items cannot be scored in real time by a human, performance on these items will not impact later item selection.

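The item-by-item adaptation described above can be illustrated with a small sketch. It is not the Smarter Balanced algorithm: it assumes a Rasch (one-parameter) item model, a hypothetical pool of item difficulties, a standard normal prior on ability, and a fixed test length instead of blueprint coverage. Human-scored items and performance tasks are not modeled. All function names are illustrative.

```python
import math

def prob_correct(theta, b):
    """Rasch probability that a student of ability theta answers an item of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

# Candidate ability values from -4.0 to 4.0 used for the estimate.
GRID = [g / 10.0 for g in range(-40, 41)]

def estimate_theta(history):
    """Posterior-mean ability estimate from the full response pattern.

    history is a list of (difficulty, is_right) pairs; the prior is standard normal (an assumption).
    """
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalized prior weight
        for b, is_right in history:
            p = prob_correct(theta, b)
            w *= p if is_right else (1.0 - p)
        weights.append(w)
    return sum(t * w for t, w in zip(GRID, weights)) / sum(weights)

def next_item(theta, pool, used):
    """Choose the unused item whose difficulty is closest to the current estimate."""
    candidates = [i for i in range(len(pool)) if i not in used]
    return min(candidates, key=lambda i: abs(pool[i] - theta))

def run_cat(pool, answer_fn, n_items):
    """Administer n_items adaptively; answer_fn(difficulty) returns True for a right answer."""
    theta, used, history = 0.0, set(), []
    for _ in range(n_items):
        i = next_item(theta, pool, used)
        used.add(i)
        history.append((pool[i], answer_fn(pool[i])))
        theta = estimate_theta(history)  # revise the estimate after every response
    return theta, history

if __name__ == "__main__":
    pool = [x / 2.0 for x in range(-6, 7)]  # hypothetical difficulties from -3.0 to 3.0
    # Simulated student who answers correctly whenever the item is no harder than 0.6.
    theta, history = run_cat(pool, lambda b: b <= 0.6, 10)
    print(round(theta, 2), history)
```

The estimate depends on which items were answered right or wrong, not only on how many, which is the idea behind item pattern scoring. Under the Rasch assumption, the most informative item is the one whose difficulty is nearest the current estimate, so a right answer leads to a harder next question and a wrong answer to an easier one.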
Performance Tasks (PTs)
• In all Smarter Balanced tests, a PT and a set of stimuli on a given topic are administered as well as the CAT.
• PTs are administered at the classroom/group level, so they are not targeted to students’ specific ability levels.
• The items associated with the PTs may be scored by machine or by human raters.

Final Scoring
• For each student, the responses from the PT and CAT portions are merged for final scoring.
• Resulting ability estimates are based on the specific test questions that a student answered, not the total number of items answered correctly.
• Higher ability estimates are associated with test takers who correctly answer more difficult and more discriminating items.
• Lower ability estimates are associated with test takers who correctly answer easier and less discriminating items.
• Two students will have the same ability estimate if they have the same set of test questions with the same responses.
• It is possible for students to have the same ability estimate through different response patterns.
• This type of scoring is called “Item Pattern Scoring.”

Final Scoring: Contribution of CAT and PT Sections
Number of Items Defined by Test Blueprints

| Grade | ELA/Literacy CAT | ELA/Literacy PT | Mathematics CAT | Mathematics PT |
|---|---|---|---|---|
| 3–5 | 38–41 | 5–6 | 31–34 | 2–6 |
| 6–8 | 37–42 | 5–6 | 30–34 | 2–6 |
| 11 | 39–41 | 5–6 | 33–36 | 2–6 |

Final Scoring: Contribution of CAT and PT Sections (cont.)
• Based on the test blueprint, the CAT section is emphasized because there are more CAT items/points than PT items/points.
• Claims with more items/points are emphasized.
– Mathematics: Concepts and Procedures; Problem Solving/Modeling and Data Analysis; Communicating Reasoning
– ELA: Reading; Writing; Speaking/Listening; Research
• Because scores are based on pattern scoring, groups of items that are more difficult and discriminating contribute more to final scores.
• Therefore, there is no specific weight associated with either the PT or CAT sections.

Final Scoring: Mapping
• After the student’s overall ability $\theta$ is estimated, it is mapped onto the reporting scale through a linear transformation.
• Mathematics:
– $\text{Scaled Score} = 2514.9 + 79.3\,\theta$
• ELA:
– $\text{Scaled Score} = 2508.2 + 85.8\,\theta$
• The result is limited by the lowest and highest obtainable scaled scores for the grade level.

Properties of the Reporting Scale
• Scores are on a vertical scale.
– Expressed on a single continuum for a content area
– Allows users to describe student growth over time across grade levels
• Scale score range
– ELA/Literacy: 2114–2795
– Mathematics: 2189–2862
• For each grade level and content area, there is a separate scale score range.

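The mapping step above can be sketched in a few lines. The intercepts, slopes, and overall score ranges come from the slides; the function name, the rounding to a whole number, and the use of the overall range as a default limit are assumptions made for illustration.

```python
# (intercept, slope) of the linear transformation for each content area, from the slides.
TRANSFORM = {"math": (2514.9, 79.3), "ela": (2508.2, 85.8)}

# Overall scale score range for each content area, from the slides.
OVERALL_RANGE = {"math": (2189, 2862), "ela": (2114, 2795)}

def scale_score(theta, subject, lo=None, hi=None):
    """Map an ability estimate theta to the reporting scale.

    lo and hi are the lowest and highest obtainable scores for the grade;
    when omitted, the overall range for the content area is used.
    Rounding to the nearest whole number is an assumption.
    """
    intercept, slope = TRANSFORM[subject]
    default_lo, default_hi = OVERALL_RANGE[subject]
    lo = default_lo if lo is None else lo
    hi = default_hi if hi is None else hi
    raw = intercept + slope * theta
    return int(min(max(round(raw), lo), hi))

if __name__ == "__main__":
    print(scale_score(0.0, "math"))   # ability at the scale origin
    print(scale_score(10.0, "ela"))   # extreme estimate, capped at the top of the range
```

Passing grade-specific `lo` and `hi` values reproduces the rule that a reported score cannot fall outside the obtainable range for the student's grade.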
Smarter Balanced Scale Score Ranges by Grade Level

| Grade | ELA Min | ELA Max | Mathematics Min | Mathematics Max |
|---|---|---|---|---|
| 3 | 2114 | 2623 | 2189 | 2621 |
| 4 | 2131 | 2663 | 2204 | 2659 |
| 5 | 2201 | 2701 | 2219 | 2700 |
| 6 | 2210 | 2724 | 2235 | 2748 |
| 7 | 2258 | 2745 | 2250 | 2778 |
| 8 | 2288 | 2769 | 2265 | 2802 |
| 11 | 2299 | 2795 | 2280 | 2862 |

Achievement Levels
• Achievement level classifications based on overall scores
– Level 1: Standard Not Met
– Level 2: Standard Nearly Met
– Level 3: Standard Met
– Level 4: Standard Exceeded

Achievement Levels by Grade

Smarter Balanced Scale Score Ranges for ELA/Literacy

| Grade | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|
| 3 | 2114–2366 | 2367–2431 | 2432–2489 | 2490–2623 |
| 4 | 2131–2415 | 2416–2472 | 2473–2532 | 2533–2663 |
| 5 | 2201–2441 | 2442–2501 | 2502–2581 | 2582–2701 |
| 6 | 2210–2456 | 2457–2530 | 2531–2617 | 2618–2724 |
| 7 | 2258–2478 | 2479–2551 | 2552–2648 | 2649–2745 |
| 8 | 2288–2486 | 2487–2566 | 2567–2667 | 2668–2769 |
| 11 | 2299–2492 | 2493–2582 | 2583–2681 | 2682–2795 |

Achievement Levels by Grade

Smarter Balanced Scale Score Ranges for Mathematics

| Grade | Level 1 | Level 2 | Level 3 | Level 4 |
|---|---|---|---|---|
| 3 | 2189–2380 | 2381–2435 | 2436–2500 | 2501–2621 |
| 4 | 2204–2410 | 2411–2484 | 2485–2548 | 2549–2659 |
| 5 | 2219–2454 | 2455–2527 | 2528–2578 | 2579–2700 |
| 6 | 2235–2472 | 2473–2551 | 2552–2609 | 2610–2748 |
| 7 | 2250–2483 | 2484–2566 | 2567–2634 | 2635–2778 |
| 8 | 2265–2503 | 2504–2585 | 2586–2652 | 2653–2802 |
| 11 | 2280–2542 | 2543–2627 | 2628–2717 | 2718–2862 |

Measurement Precision: Error Bands
• For each scale score estimated for a student, there is measurement error associated with each score.
• An error band is a useful tool that describes the measurement error associated with a reported scale score.
• Error bands are used to construct an interval estimate corresponding to a student’s true ability/proficiency for a particular content area with a certain level of confidence.
• The error bands used to construct interval estimates were based on one standard error of measurement.
– If the same test is given to a student multiple times, the student will score within this band about 68 percent of the time.

Achievement Levels for Claims
• Achievement levels for claims are very similar to subscores. They provide supplemental information regarding a student’s strengths or weaknesses.
• No achievement level setting occurred for claims.
• Only three achievement levels for claims were developed since there are fewer items within each claim.
• Achievement levels for claims are based on how far a student’s performance on the claim is from the Level 3 proficiency cut.
• A student must complete all items within a claim to receive an estimate of his or her performance on a claim.

Achievement Levels for Claims (2)
• A student’s ability, along with the corresponding standard error, is estimated for each claim.
• The student’s ability estimate for the claim (C) is compared to the Level 3 proficiency cut (P).
• Differences between C and P greater than 1.5 standard errors of the claim would indicate a strength or weakness.

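The error band and the claim comparison above both reduce to simple arithmetic, sketched below. The function names and the numbers in the usage lines are hypothetical, and treating a difference of exactly 1.5 standard errors as "At/Near Standard" is an assumption drawn from the phrase "greater than 1.5 standard errors".

```python
def error_band(score, sem):
    """Interval one standard error of measurement on each side of a scale score."""
    return (score - sem, score + sem)

def claim_level(c, se, p):
    """Classify a claim estimate c (standard error se) against the Level 3 cut p.

    A claim is a strength or weakness only when c differs from p
    by more than 1.5 standard errors of the claim.
    """
    margin = 1.5 * se
    if c - margin > p:
        return "Above Standard"
    if c + margin < p:
        return "Below Standard"
    return "At/Near Standard"

if __name__ == "__main__":
    # Hypothetical score of 2500 with a standard error of measurement of 25.
    print(error_band(2500, 25))
    # Hypothetical claim estimates compared with a cut of 2552.
    for c in (2600, 2560, 2500):
        print(c, claim_level(c, 20, 2552))
```

Because the margin scales with the standard error, a claim measured with few items (and therefore a large standard error) needs a larger gap from the cut before it is reported as a strength or weakness.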
Achievement Levels for Claims (3)
[Figure: the claim estimate C, with a band of 1.5 SE on either side, compared with the Level 3 cut P. Below Standard: the band lies entirely below P. At/Near Standard: the band contains P.]

Achievement Levels for Claims (4)
[Figure: Above Standard: the band of 1.5 SE around C lies entirely above P.]

Understanding the Reports

Available Reports

| Secure | Location |
|---|---|
| Preliminary student test results | ORS |
| Preliminary and partial aggregate test results | ORS |
| Student Score Reports (ISRs) | TOMS |
| Final student data | TOMS |

| Public | Location |
|---|---|
| Smarter Balanced ELA and mathematics | CDE CAASPP Web page |
| CST/CMA/CAPA for Science and STS for RLA | CDE CAASPP Web page |

Secure Reporting

| Report | LEA | School* | Parent |
|---|---|---|---|
| Preliminary Student Data | ORS | ORS | |
| Preliminary Aggregate Data | ORS | ORS | |
| Final Student Score Reports (ISRs) pdf/paper | TOMS†/Paper†† | TOMS†/Paper | Paper |
| Final Student Data File | TOMS | | |

* Access to ORS will be granted to CAASPP Test Site Coordinators in August.
† PDFs of the Student Score Reports will be available in TOMS.
†† LEAs must forward or mail the copy of the CAASPP Student Score Report to each student’s parent/guardian within 20 working days of its delivery to the LEA.

Preliminary Test Results: Student and Aggregate
• Through the Online Reporting System (ORS)
• Available approximately three to four weeks after a student completes both parts (CAT and PT) of a content area
• Added daily
Use Caution:
The results are partial and may not be a good representation of the school or district’s final aggregate results.
The results are preliminary; the processing of appeals may result in score changes.

Student Score Reports (ISR): Overview
• One-page report
– Double-sided: All Smarter Balanced; CAPA for Science
– Single-sided: CST/CMA for Science (Grade 10); STS for RLA
• Student’s final CAASPP test results
• Reports progress toward the state’s academic content standards
• Indicates areas of focus to:
– Help students’ achievement
– Improve educational programs
• LEA distributes to parents/guardians

Student Score Reports: Shipments to LEAs
• Two copies of each student’s Student Score Report
– One for the parent/guardian
– One for the school site
• 2015 LEA CAASPP Reports Shipment Letter
• 2015 School CAASPP Reports Shipment Letter
Note: Per California Code of Regulations, Title 5, Section 863, LEAs must forward one copy to the parent/guardian within 20 business days. Schools may file the copy they receive, or they may give it to the student’s current teacher or counselor. If the LEA receives the reports after the last day of instruction, the LEA must mail the pupil results to the parent or guardian at their last known address. If the report is non-deliverable, the LEA must make the report available to the parent or guardian during the next school year.

Test Results Reported on the Student Score Reports
[Table: for students who took Smarter Balanced ELA and mathematics, CST, CMA, or CAPA for Science. Columns: Grade; Smarter Balanced ELA and mathematics; CST, CMA, or CAPA Science. Grade rows: 3, 4, 5, 6, 7, 8, 10, 11.]
[Table: for students who took STS RLA. Columns: Grade; STS RLA. Grade rows: 2 through 11.]

Elements of the Student Score Report
[Figure: annotated Student Score Report. Front Page: elements 1–4. Back Page: elements 5–8.]
• Front Page: elements 1, 2, 3, and 4
• Back Page: elements 5, 6, and 7
• Back Page, element 8, Science: Grades 5, 8, and 10 only
• Back Page, element 8, Early Assessment Program: Grade 11 only

Student Score Reports (cont.)
• A guide explaining the elements of student score reports will be available electronically on the caaspp.org reporting Web page.

Final Student Data File
• Downloadable file in CSV format
– Data layout to be released soon on caaspp.org
• Includes test results for all students tested in the LEA
• Available in TOMS within four weeks after the end of an LEA’s test administration window
• Additional training planned

Public Web Reporting Site
• Available on the CDE Web site through DataQuest
• Planned release in mid-August
• Access two testing programs through one Web site
– Smarter Balanced ELA and mathematics
– CST/CMA/CAPA Science and STS RLA
• Additional training planned

Reporting Timeline

Timeline for Preliminary Results, Student Score Reports, and Final Student Data File
[Figure labels: Appeals; Additional preliminary results received; Rescores]
• Week 0: Student completes a content area.
• Weeks 1–3: Student responses are scored and merged; preliminary results are checked.
• Week 4: LEA accesses ORS to view preliminary results.
• 4 Weeks After Test Administration Window Closes: LEA accesses and downloads the final student data file from TOMS.
• Beginning Early July: LEAs receive paper Student Score Reports with final test results; PDFs of Student Score Reports available in TOMS.

Timeline for Public Reporting on DataQuest
• Early August: LEAs preview embargoed public reporting site.
• Mid-August: CDE releases public reporting results through DataQuest based on results through June 30.
• Mid-September: CDE releases updated public reporting results based on results for 100% of LEAs.

Communications Toolkit (cont.)
TOM TORLAKSON, State Superintendent of Public Instruction
• Sample parent and guardian letter to accompany the Individual Student Report
• Reading Your Student Report, in multiple languages, to help parents and guardians read and interpret the Individual Student Report
• Documents that include released questions that exemplify items in the Smarter Balanced assessments to help parents/guardians understand the achievement levels
• Short video to help parents/guardians understand the Individual Student Report
http://www.cde.ca.gov/ta/tg/ca/communicationskit.asp

Questions?