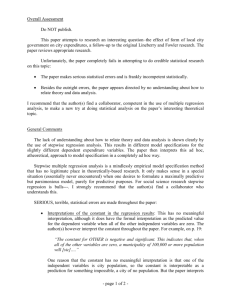

Session 9: Marketing Dashboards


Counting what will count
Does your dashboard predict?
Koen Pauwels, Amit Joshi

Marketing dashboards
• Marketing accountability & accelerating change
• Limits to human processing capacity still there: MSI: ‘separate signal from noise’, ‘dashboards’
• Top management interest: ‘substantial effort’ by 40% of US and UK companies (Clark et al. 2006)
• But current applications often fail to impress: Marketing Scientists can help raise the bar!

Academic research focus
Reibstein et al. (2005) discuss 5 development stages:
1) Identify key metrics (align with firm goals)
2) Populate the dashboard with data
3) Establish relation between dashboard items
4) Forecasting and ‘what if’ analysis
5) Connect to financial consequences
Most dashboards have yet to move beyond stage 2!
Need research to select best metrics, relate to performance

You’ve got 33 x 3 = 99 variables
• Market variables: price national brand, store brand, ∆
• Awareness: top-of-mind, aided, unaided, ad awareness
• Trial/usage: ever, last week, last 4 weeks, 3 months
• Liking/Satisfaction: given aware and given tried
• Preference: favorite brand, will it satisfy future needs?
• Purchase intent: given aware and given tried
• Attribute ratings: taste, quality, trust, value, fun, feel
• Usage occasion: home, on the go, afternoon, entertain
Need to reduce 99 to 6-10 metrics (US) or 10-20 (UK)

Metric deletion rules (Ambler 2003)
1) Does the metric rarely change?
2) Is the metric too volatile to be reliable?
   Univariate tests on time series properties
3) Is the metric a leading indicator of market outcome?
   Pairwise tests of metric with performance
4) Does the metric add sufficient explanatory power to existing metrics?
   Econometric models to explain performance

This research
• Univariate: st. dev., coef. of variation, evolution
• Pairwise: Granger causality test with performance
• Explanatory power: regression model comparison
  1) Stepwise regression (Hocking 1976, Meiri et al. 2005)
  2) Reduced Rank Regression (Reinsel and Velu 1998)
  3) Forecast error variance decomposition, based on Vector Autoregressive Model (Hanssens 1998)
• Assessment: forecasting accuracy in hold-out sample
• Managerial control: impact size and lead time

Stepwise regression
• Automatic selection based on statistical criteria
  Objective: select set of metrics with highest R²
• Forward: add variables with lowest p-value
  Backward: delete variables with highest p-value
• Unidirectional: considers one variable at a time
  Stepwise: checks all included against criterion
  Combinatorial: evaluates every combination

Reduced Rank Regression
• Uses correlation of key metrics and performance
  $Y_i = C X_i + \varepsilon_i$ with $Y_i$ ($m \times 1$) and $X_i$ ($n \times 1$)
  $C$ ($m \times n$) has rank $r \le \min(m, n)$
  Restriction: $m - r$ linear restrictions on $C$
  Maximize explained variance under restriction
• Originally shrinkage regression (Aldrin 2002), now for selecting the best combination of variables

Forecast variance decomposition
• Based on Vector Autoregressive Model
  A ‘dynamic R²’, FEVD calculates the percentage of variation in performance that can be attributed to changes in each of the endogenous variables (Hanssens 1998, Nijs et al. 2006)
• Measures the relative performance impact over time of shocks initiated by each endogenous variable
• We consider the FEVD at 26 weeks

Methods share 4/10 metrics

| | Stepwise | Reduced Rank | FEVD |
|---|---|---|---|
| Market | pricenb, -st | pricenb, -st | pricenb, -st |
| Awareness | awareunnb | awunnb, -st | awareunnb |
| Usage/trial | tried3m∆ | tried3m∆ | tried4wnb |
| Purch. Intent | piawarenb | | |
| Affect | satistriednb | satistriednb | liketriednb |
| Attribute Ratings | satisnb, feelst | fun∆, taste∆, trust∆ | satisnb, trust∆, qual∆ |
| Usage occasion | afternoonnb, entertainnb | entertainnb | afternoonnb, entertainnb |

Stepwise scores within sample
[Bar chart: R-squared and adjusted R-squared (scale 0-100) for stepwise regression, reduced rank regression, and forecast error variance decomposition]

But sucks out-of-sample
[Bar chart: mean absolute percentage error and Theil's inequality coefficient (scale 0-25) for stepwise regression, reduced rank regression, and forecast error variance decomposition]

Sales Impact Size and Timing

| 1% change in | Short-term | Long-term | Wear-in | Wear-out |
|---|---|---|---|---|
| PRICENB | -161,794 | -84,417 | 0 | 8 |
| PRICEST | 71,561 | 121,997 | 0 | 1 |
| AFTERNOON | 32,129 | 32,129 | 0 | 0 |
| TRUST∆ | 23,707 | 23,707 | 0 | 0 |
| LIKEgiventriednb | 0 | 40,420 | 1 | 0 |
| QUALITY∆ | 0 | 66,963 | 1 | 0 |
| TRIED4WNB | 0 | 72,481 | 2 | 4 |
| SatisfyingNB | 0 | 46,788 | 2 | 0 |
| EntertainfriendsNB | 0 | 60,071 | 2 | 3 |
| AWAREUNNB | 0 | 68,537 | 3 | 4 |

Value (price-quality) matters right now, Awareness and Trial soon!
[Scatter plot: long-term sales impact (0 to 90,000) against weeks till peak impact (0 to 4); points labeled Price, Quality, Tried last month, Unaided Awareness, Entertain friends, Like if tried, Satisfying, Afternoon Lift, Trust]

Summary dashboard intuition
1) To increase sales immediately (0-1 weeks)
   a) promote on price and on afternoon lift usage
   b) communication focus on quality, affect, trust
2) To increase sales soon (2-3 weeks)
   a) provide free samples (to up ‘tried last month’)
   b) focus on satisfying and entertainment use
   c) advertise for unaided awareness

Conclusion: P-model for dashboards
1. Which metrics are leading indicators?
   Granger causality tests
2. Explain most of performance dynamics
   Forecast error variance decomposition
3. Forecast multivariate baseline with Vector Autoregressive or Error Correction model
4. Display timing and size of sales impact

Your Questions?
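
The first two metric deletion rules (does the metric rarely change, is it too volatile) are described in the deck as univariate tests using the standard deviation and coefficient of variation. A minimal sketch of that screening step follows. The thresholds `min_cv` and `max_cv`, the metric names, and the weekly readings are all made up for illustration; the deck does not state which cut-offs were used.

```python
from statistics import mean, pstdev


def coefficient_of_variation(series):
    """Population standard deviation divided by the absolute mean."""
    m = mean(series)
    if m == 0:
        raise ValueError("coefficient of variation is undefined for a zero mean")
    return pstdev(series) / abs(m)


def screen_metric(series, min_cv=0.01, max_cv=0.50):
    """Apply deletion rules 1 and 2 using hypothetical thresholds."""
    cv = coefficient_of_variation(series)
    if cv < min_cv:
        return "drop: rarely changes"
    if cv > max_cv:
        return "drop: too volatile"
    return "keep"


# Made-up weekly readings for three hypothetical metrics.
metrics = {
    "flat_metric": [50.0, 50.1, 49.9, 50.0, 50.1, 50.0],
    "noisy_metric": [5.0, 40.0, 2.0, 55.0, 1.0, 30.0],
    "usable_metric": [20.0, 22.0, 25.0, 23.0, 27.0, 30.0],
}

decisions = {name: screen_metric(values) for name, values in metrics.items()}
for name, decision in decisions.items():
    print(f"{name}: {decision}")
```

Metrics that survive this screen would then go on to the pairwise (Granger causality) and explanatory-power stages, which need a regression library and are not sketched here.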
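
The out-of-sample comparison in the deck reports a percentage error and Theil's inequality coefficient on a hold-out sample. The sketch below shows how those two accuracy measures could be computed. It assumes the percentage error is the usual mean absolute percentage error (MAPE) and that Theil's coefficient is the bounded form that scales RMSE by the root mean squares of forecast and actual; the deck does not say which variant was used, and the sales figures are invented.

```python
from math import sqrt


def mape(actual, forecast):
    """Mean absolute percentage error, expressed in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - f) / a) for a, f in zip(actual, forecast))
    return 100.0 * total / len(actual)


def theil_inequality(actual, forecast):
    """Theil's inequality coefficient (assumed bounded form, 0 = perfect fit)."""
    n = len(actual)
    rmse = sqrt(sum((f - a) ** 2 for a, f in zip(actual, forecast)) / n)
    scale = sqrt(sum(f * f for f in forecast) / n) + sqrt(sum(a * a for a in actual) / n)
    return rmse / scale


# Invented hold-out sales and model forecasts, for illustration only.
holdout_sales = [100.0, 200.0, 400.0]
model_forecast = [110.0, 180.0, 400.0]

holdout_mape = mape(holdout_sales, model_forecast)
holdout_theil = theil_inequality(holdout_sales, model_forecast)
print(f"MAPE: {holdout_mape:.2f}%")
print(f"Theil: {holdout_theil:.4f}")
```

Lower is better on both measures, so a method that wins on in-sample R² can still lose this comparison, which is the point the deck makes about stepwise regression.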