- Computer Science and Engineering

Peer-to-Peer Systems as

Real-life Instances of Distributed Systems

Adriana Iamnitchi

University of South Florida anda@cse.usf.edu

http://www.cse.usf.edu/~anda

P2P Systems – 1

Why Peer-to-Peer Systems?

• Wide-spread user experience

• Large-scale distributed

application, unprecedented growth and popularity

– KaZaA – 389 millions downloads

(1M/week) one of the most popular applications ever!

• Heavily researched in the last 8-

9 years with results in:

– User behavior characterization

– Scalability

– Novel problems (or aspects): reputation, trust, incentives for fairness

• Commercial impact

– Do you know of any examples?

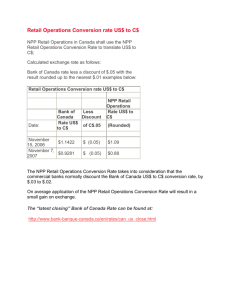

eDonkey

FastTrack (Kazaa)

Gnutella

Cvernet

3,108,909

2,114,120

2,899,788

691,750

Filetopia 3,405

Number of users for file-sharing applications

(estimate www.slyck.com, Sept ‘06)

P2P Systems – 2

Outline Today: Peer-to-peer Systems

• Background

• Some history

• Unstructured overlays

– Napster

– Gnutella (original and new)

– BitTorrent

– Exploiting user behavior in distributed file-sharing systems

• Structured overlays (“DHT”s)

– Basics

– Chord

– CAN

P2P Systems – 3

Node

What Is a P2P System?

Node

Node

Internet

Node Node

• A distributed system architecture:

– No centralized control (debatable: Napster?)

– Nodes are symmetric in function (debatable: New Gnutella protocol?)

• Large number of unreliable nodes

• Initially identified with music file sharing

P2P Systems – 4

P2P Definition(s)

A number of definitions coexist:

• Def 1 : “A class of applications that takes advantage of resources — storage, cycles, content, human presence — available at the edges of the Internet.”

– Edges often turned off, without permanent IP addresses

• Def 2 : “A class of decentralized, self-organizing distributed systems, in which all or most communication is symmetric.”

Lots of other definitions that fit in between

P2P Systems – 5

The Promise of P2P Computing

• High capacity through parallelism:

– Many disks

– Many network connections

– Many CPUs

• Reliability:

– Many replicas:

• of data

• of network paths

– Geographic distribution

• Automatic configuration

• Useful in public and proprietary settings

P2P Systems – 6

History

• Decentralized, P2P solutions: USENET

• As a grass-root movement: started in 1999 with Napster

– Objective: (music) file sharing

P2P Systems – 7

Popularity since 2004

Britney Spears 2.60

p2p 1.00

Normalized and compared to the popularity of Britney Spears as shown by Google Trends

P2P Systems – 8

Napster: History

• Program for sharing files over the Internet

• History:

– 5/99: Shawn Fanning (freshman, Northeasten U.) founds Napster Online music service

– 12/99: first lawsuit

– 3/00: 25% UWisc traffic Napster

– 2000: est. 60M users

– 2/01 : US Circuit Court of Appeals:

Napster knew users violating copyright laws

– 7/01: # simultaneous online users:

Napster 160K, Gnutella: 40K, Morpheus: 300K

P2P Systems – 9

Basic Primitives for File Sharing

Join: How do I begin participating?

Publish: How do I advertise my file(s)?

Search: How do I find a file?

Fetch: How do I retrieve a file?

P2P Systems – 10

Napster: How It Works napster.com

• Client-Server: Use central server to locate files

• Peer-to-Peer: Download files directly from peers

P2P Systems – 11

1. File list is uploaded

(Join and Publish)

Napster napster.com

users

P2P Systems – 12

Napster

2. User requests search at server (Search).

Request and results napster.com

user

P2P Systems – 13

Napster

3.

User pings hosts that apparently have data.

Looks for best transfer rate.

ping napster.com

ping user

P2P Systems – 14

4.

User retrieves file

(Fetch)

Napster napster.com

Download file user

P2P Systems – 15

Lessons Learned from Napster

• Strengths: Decentralization of Storage

– Every node “pays” its participation by providing access to its resources

• physical resources (disk, network), knowledge (annotations), ownership (files)

– Every participating node acts as both a client and a server (“servent”): P2P

– Decentralization of cost and administration = avoiding resource bottlenecks

• Weaknesses: Centralization of Data Access Structures (Index)

– Server is single point of failure

– Unique entity required for controlling the system = design bottleneck

– Copying copyrighted material made Napster target of legal attack increasing degree of resource sharing and decentralization

Centralized

System

Decentralized

System

P2P Systems – 16

Gnutella: File-Sharing with No Central Server

P2P Systems – 17

Gnutella: History

• Developed in a 14 days “quick hack” by Nullsoft (winamp)

– Originally intended for exchange of recipes

• Evolution of Gnutella

– Published under GNU General Public License on the Nullsoft web server

– Taken off after a couple of hours by AOL (owner of Nullsoft)

• Too late: this was enough to “infect” the Internet

– Gnutella protocol was reverse engineered from downloaded versions of the original

– Protocol published

– Third-party clients were published and Gnutella started to spread

– Many iterations to fix poor initial design

• High impact:

– Many versions implemented

– Many different designs

– Lots of research papers/ideas

P2P Systems – 18

Gnutella: Search in an Unstructured Overlay

I have file A.

I have file A.

Reply

Flooding

Query

Where is file A?

P2P Systems – 19

Gnutella: Overview

• Join: on startup, client contacts a few other nodes; these become its “neighbors”

– Initial list of contacts published as gnutellahosts.com:6346

– Outside the Gnutella protocol specification

– Default value for number of open connections (neighbors): C = 4

• Publish: no need

• Search:

– Flooding: ask neighbors, who ask their neighbors, and so on...

• Each forwarding of requests decreases a TTL. Default: TTL = 7

• When/if found, reply to sender

• Drop forwarding requests when TTL expires

• One request leads to

2 *

TTL i

0

C * ( C

1 ) i

26 , 240

– Back-propagation in case of success (Why?) messages

• Fetch: get the file directly from peer (HTTP)

P2P Systems – 20

Gnutella: Protocol Message Types

Type

Ping

Pong

Query

QueryHit

Push

Description

Announce availability and probe for other servents

Response to a ping

Search request

Returned by servents that have the requested file

File download requests for servents behind a firewall

Contained Information

None

IP address and port# of responding servent; number and total kb of files shared

Minimum network bandwidth of responding servent; search criteria

IP address, port# and network bandwidth of responding servent; number of results and result set

Servent identifier; index of requested file; IP address and port to send file to

P2P Systems – 21

What would you ask about a Gnutella network?

P2P Systems – 22

Gnutella: Tools for Network Exploration

• Eavesdrop traffic - insert modified node into the network and log traffic.

• Crawler - connect to active nodes and use the membership protocol to discover membership and topology.

P2P Systems – 23

Gnutella: Heterogeneity

All Peers Equal? (1)

1.5Mbps DSL 1.5Mbps DSL

56kbps Modem

1.5Mbps DSL

10Mbps LAN

1.5Mbps DSL

56kbps Modem

56kbps Modem

P2P Systems – 24

Gnutella Network Structure: Improvement

Gnutella Protocol 0.6

Two tier architectures of ultrapeers and leaves

Ultrapeers

Leaves

Data transfer (file download)

Control messages (search, join, etc)

P2P Systems – 25

Déjà vu?

Gnutella Protocol 0.6

Two tier architectures of ultrapeers and leaves

Ultrapeers

Leaves

Data transfer (file download)

Control messages (search, join, etc)

P2P Systems – 26

Gnutella: Free Riding

All Peers Equal? (2)

More than 25% of Gnutella clients share no files; 75% share 100 files or less

Conclusion: Gnutella has a high percentage of free riders

• If only a few individuals contribute to the public good, these few peers effectively act as centralized servers.

• Outcome:

– Significant efforts in building incentive-based systems

– BitTorrent?

Adar and Huberman (Aug ’00)

P2P Systems – 27

Flooding in Gnutella: Loops?

Seen request already

P2P Systems – 28

Improvements of Message Flooding

• Expanding Ring

– start search with small TTL (e.g. TTL = 1)

– if no success iteratively increase TTL (e.g. TTL = TTL +2)

• k-Random Walkers

– forward query to one randomly chosen neighbor only, with large TTL

– start k random walkers

– random walker periodically checks with requester whether to continue

• Experiences (from simulation)

– adaptive TTL is useful

– message duplication should be avoided

– flooding should be controlled at fine granularity

P2P Systems – 29

Gnutella Topology (Mis)match?

P2P Systems – 30

Gnutella: Network Size?

Explosive growth in 2001, slowly shrinking thereafter

• High user interest

– Users tolerate high latency, low quality results

• Better resources

– DSL and cable modem nodes grew from 24% to 41% over first 6 months.

P2P Systems – 31

Is Gnutella a Power-Law Network?

Power-law networks: the number of links per node follows a power-law distribution N = L -k

10000

1000

100

10

November 2000

Examples of power-law networks:

– The Internet at AS level

– In/out links to/from HTML pages

– Airports

– US power grid

– Social networks

1

1 10

Number of links (log scale)

100

Implications: High tolerance to random node failure but low reliability when facing of an ‘intelligent’ adversary

P2P Systems – 32

Network Resilience

Partial Topology Random 30% die Targeted 4% die from Saroiu et al., MMCN 2002

P2P Systems – 33

Is Gnutella a Power-Law Network? (Later Data)

Later, larger networks display a bimodal distribution

Implications :

– High tolerance to random node failures preserved

– Increased reliability when facing an attack.

10000

From Ripeanu, Iamnitchi, Foster, 2002

1000

100

10

1

1

May 2001

10

Number of links (log scale)

100

P2P Systems – 34

Discussion Unstructured Networks

• Performance

– Search latency: low (graph properties)

– Message Bandwidth: high

• improvements through random walkers, but essentially the whole network needs to be explored

– Storage cost: low (only local neighborhood)

– Update cost: low (only local updates)

– Resilience to failures good: multiple paths are explored and data is replicated

• Qualitative Criteria

– search predicates: very flexible, any predicate is possible

– global knowledge: none required

– peer autonomy: high

P2P Systems – 35

BitTorrent

P2P Systems – 36

BitTorrent Components

• Torrent File

– Metadata of file to be shared

– Address of tracker

– List of pieces and their checksums

• Tracker

– Lists peers interested in the distribution of the file

• Peers

– Clients interested in the distribution of the file

– Can be “seeds” or “leachers”

P2P Systems – 37

A BitTorrent Swarm

• A “seed” node has the file

• A “tracker” associated with the file

• A “.torrent” meta-file is built for the file: identifies the address of the tracker node

• The .torrent file is published on web

• File is split into fixed-size segments

(e.g., 256KB)

P2P Systems – 38

Choking Algorithm

• Each connected peer is in one of two states

– Choked: Download requests by a choked peer are ignored

– Unchoked: Download requests by an unchoked peer are honored

– Choking occurs at the peer level

• Each peer has a certain number of unchoke slots

– 4 regular unchoke slots (per BitTorrent standard)

– 1 optimistic unchoke slot (per BitTorrent standard)

• Choking Algorithm

– Peers unchoke connected peers with best service rate

• Service rate = rolling 20 second average of its upload bandwidth

– Optimistically unchoking peers prevents a static set of unchoked peers

– The choking algorithm runs every 10 seconds

– Peers optimistically unchoked every 30 seconds

• New peers are 3 times more likely to be optimistically unchoked

P2P Systems – 39

Piece Selection

• Random First Piece

– Piece download at random

– Algorithm used by new peers

• Rarest Piece First

– Ensures > 1 distributed copies of a piece

– Increases interest of connected peers

– Increases scalability

• Random Piece vs. Rarest Piece

– Rarest has probabilistically high download time

– New peers want to reduce download time but also increase their interest

P2P Systems – 40

BitTorrent: Overview

• Join: nothing

– Just find that there is a community ready to host your tracker

• Publish: Create tracker, upload .torrent metadata file

• Search:

– For file: nothing

• the community is supposed to provide search tools

– For segments: exchange segment IDs maps with other peers.

• Fetch: exchange segments with other peers (HTTP)

P2P Systems – 41

Gnutella vs. BitTorrent: Discussion

• Architecture

– Decentralization?

• System properties

– Reliability?

– Scalability?

– Fairness?

– Overheads?

– Quality of Service

• Search coverage for content?

• Ability to download content fast?

P2P Systems – 42

Distributed Hash Tables:

Design and Performance

P2P Systems – 43

What Is a DHT?

• A building block used to locate key-based objects over millions of hosts on the internet

• Inspired from traditional hash table:

– key = Hash(name)

– put(key, value)

– get(key) -> value

– Service: O(1) storage

• How to do this across millions of hosts on the Internet?

– Distributed Hash Tables

• What might be difficult?

– Decentralized: no central authority

– Scalable: low network traffic overhead

– Efficient: find items quickly (latency)

– Dynamic: nodes fail, new nodes join

– General-purpose: flexible naming

P2P Systems – 44

From Hash Tables to Distributed Hash Tables

Challenge: Scalably distributing the index space:

– Scalability issue with hash tables: Add new entry => move many items

– Solution: consistent hashing (Karger 97)

• Consistent hashing:

– Circular ID space with a distance metric

– Objects and nodes mapped onto the same space

– A key is stored at its successor: node with next higher ID K5

N105

K: object IDs

N: node IDs

Circular

ID space

N90

K20

N32

K80

P2P Systems – 45

The Lookup Problem

Put (Key=“title”

Value=file data…)

Publisher

N

1

N

2 N

3

N

4

Internet

?

N

5

N

6

Client

Get(key=“title”)

P2P Systems – 46

DHTs: Main Idea

N

1

N

2

Publisher

Key=H(audio data)

Value={artist, album title, track title}

N

4

N

9

N

6

N

7

N

3

Client

Lookup(H(audio data))

N

8

P2P Systems – 47

What Is a DHT?

• Distributed Hash Table: key = Hash(data) lookup(key) -> IP address send-RPC(IP address, PUT, key, value) send-RPC(IP address, GET, key) -> value

• API supports a wide range of applications

– DHT imposes no structure/meaning on keys

– And thus build complex data structures

P2P Systems – 48

Approaches

• Different strategies

– Chord: constructing a distributed hash table

– CAN: Routing in a d-dimensional space

– Many more…

• Commonalities

– Each peer maintains a small part of the index information (routing table)

– Searches performed by directed message forwarding

• Differences

– Performance and qualitative criteria

P2P Systems – 49

Example 1: Distributed Hash Tables (Chord)

• Hashing of search keys AND peer addresses on binary keys of length m

– Key identifier = SHA-1(key); Node identifier = SHA-1(IP address)

– SHA-1 distributes both uniformly

– e.g. m=8, key(“yellow-submarine.mp3")= 17 , key(192.178.0.1)= 3 predecessor m=8

32 keys stored at k p3 peer data k with hashed identifier with hashed identifier stored at node p2 p k

, such that p2 the smallest node ID larger than p2

Search possibilities?

1. every peer knows every other

O(n) routing table size

2. peers know successor

O(n) search cost

P2P Systems – 50 is k

Routing Tables

• Every peer knows m peers with exponentially increasing distance p p+1 p+2

Each peer p stores a routing table

First peer with hashed identifier p such that s i

=successor(p+2 i-1 ) for i=1,..,m

We write also s i

= finger(i, p) p+4 s

5 s

1, s s

2,

4 s

3 p3 p2 p+8 s i s

1 s

2 s

3 s

4 s

5 p p2 p2 p2 p3 p4 p4 p+16

Search

O(log n) routing table size

P2P Systems – 51

k2 p4 s

5

Search search(p, k) find in routing table largest (s i node ID in interval [p,k]

, p*) such that p* largest if such a p* exists then search(p*, k) else return (successor(p)) // found p p+1 p+2 p+4 s

1, s

2, s

3 p2 s

4 p3 p+8 k1

Search

O(log n) search cost p+16

P2P Systems – 52

Finger i

Points to Successor of n+2 i

112

¼

N120

1/8

1/16

1/32

1/64

1/128

N80

½

P2P Systems – 53

Lookups Take O( log(N)

) Hops

N5

N110

N99

N10

N20

K19

N32

Lookup(K19)

N80

N50

N60

P2P Systems – 54

Node Insertion (Join)

• New node q joining the network p4 p p+1 p+2 q p+4 p2 p+8 p3 p+16 routing table of p i s

1 s

2 s

3 s

4 s

5 p q q p2 p3 p4 routing table of q i s

3 s

4 s

1 s

2 s

5 p p2 p2 p3 p3 p4

P2P Systems – 55

Network size n=10^4

5 10^5 keys

Load Balancing in Chord

P2P Systems – 56

Network size n=2^12

100 2^12 keys

Path length ½ Log

2

(n)

Length of Search Paths

P2P Systems – 57

Chord Discussion

• Performance

– Search latency: O(log n) (with high probability, provable)

– Message Bandwidth: O(log n) (selective routing)

– Storage cost: O(log n) (routing table)

– Update cost: low (like search)

– Node join/leave cost: O(Log 2 n)

– Resilience to failures: replication to successor nodes

• Qualitative Criteria

– search predicates: equality of keys only

– global knowledge: key hashing, network origin

– peer autonomy: nodes have by virtue of their address a specific role in the network

P2P Systems – 58

Example 2: Topological Routing (CAN)

• Based on hashing of keys into a d-dimensional space (a torus)

– Each peer is responsible for keys of a subvolume of the space (a zone)

– Each peer stores the addresses of peers responsible for the neighboring zones for routing

– Search requests are greedily forwarded to the peers in the closest zones

• Assignment of peers to zones depends on a random selection made by the peer

P2P Systems – 59

Network Search and Join

Node 7 joins the network by choosing a coordinate in the volume of 1

P2P Systems – 60

CAN Refinements

• Multiple Realities

– We can have r different coordinate spaces

– Nodes hold a zone in each of them

– Creates r replicas of the (key, value) pairs

– Increases robustness

– Reduces path length as search can be continued in the reality where the target is closest

• Overloading zones

– Different peers are responsible for the same zone

– Splits are only performed if a maximum occupancy (e.g. 4) is reached

– Nodes know all other nodes in the same zone

– But only one of the neighbors

P2P Systems – 61

CAN Path Length

P2P Systems – 62

Increasing Dimensions and Realities

P2P Systems – 63

CAN Discussion

• Performance

– Search latency: O(d n 1/d ), depends on choice of d (with high probability, provable)

– Message Bandwidth: O(d n 1/d ), (selective routing)

– Storage cost: O(d) (routing table)

– Update cost: low (like search)

– Node join/leave cost: O(d n 1/d )

– Resilience to failures: realities and overloading

• Qualitative Criteria

– search predicates: spatial distance of multidimensional keys

– global knowledge: key hashing, network origin

– peer autonomy: nodes can decide on their position in the key space

P2P Systems – 64

Gnutella

Chord

CAN

Comparison of (some) P2P Solutions

Search Paradigm Overlay maintenance costs

Search Cost

Breadth-first on search graph

O(1)

2*

TTL i

0

C *( C

1) i

Implicit binary search trees

O(log n) O(log n) d-dimensional space

O(d) O(d n 1/d )

P2P Systems – 65

DHT Applications

Not only for sharing music anymore…

– Global file systems [OceanStore, CFS, PAST, Pastiche, UsenetDHT]

– Naming services [Chord-DNS, Twine, SFR]

– DB query processing [PIER, Wisc]

– Internet-scale data structures [PHT, Cone, SkipGraphs]

– Communication services [i3, MCAN, Bayeux]

– Event notification [Scribe, Herald]

– File sharing [OverNet]

P2P Systems – 66

Discussions

P2P Systems – 67

Research Trends: A Superficial History Based on

Articles in IPTPS

• In the early ‘00s (2002-2004):

– DHT-related applications, optimizations, reevaluations… (more than 50% of

IPTPS papers!)

– System characterization

– Anonymization

• 2005-…

– BitTorrent: improvements, alternatives, gaming it

– Security, incentives

• More recently:

– Live streaming

– P2P TV (IPTV)

– Games over P2P

P2P Systems – 68

What’s Missing?

• Very important lessons learned

– …but did we move beyond vertically-integrated applications?

• Can we distribute complex services on top of p2p overlays?

P2P Systems – 69

References

• Chord: A Scalable Peer-to-peer Lookup Service for Internet

Applications, Stoica et al., Sigcomm 2001

• A Scalable Content-Addressable Network, Ratnasamy et al., Sigcomm

2001

• Mapping the Gnutella Network: Properties of Large-Scale Peer-to-Peer

Systems and Implications for System Design. Matei Ripeanu, Adriana

Iamnitchi and Ian Foster. IEEE Internet Computing, vol. 6(1), Feb 2002

• Interest-Aware Information Dissemination in Small-World Communities,

Adriana Iamnitchi and Ian Foster, HPDC 2005, Raleigh, NC, July 2005

• Small-World File-Sharing Communities. Adriana Iamnitchi, Matei

Ripeanu, Ian Foster, Infocom 2004, Hong Kong, March 2004

• IPTPS paper archive: http://www.iptps.org/papers.html

• Many materials available on the web, including lectures by Matei Ripeanu,

Karl Aberer, Brad Karp, and others.

P2P Systems – 70

Exploiting Usage Behavior in

Small-World File-Sharing

Communities

P2P Systems – 71

Context and Motivation

• By the time we did this research, many p2p communities were large, active and stable

• Characterization of p2p systems showed particular user behavior

• Our question: instead of building system without user behavior in mind, could we (learn, observe, and) exploit it in system design?

• Follow-up questions:

– What user behavior should we focus on?

– How to exploit it?

– Is this pattern particular to one type of file-sharing community or more general?

P2P Systems – 72

“ Yellow Submarine ”

“ Wood Is a Pleasant

Thing to Think About ”

“ Yellow Submarine ”

“ Les Bonbons ”

“No 24 in B minor, BWV 869”

“ Les Bonbons ”

“ Wood Is a Pleasant

Thing to Think

About ”

New metric: The Data-Sharing Graph

G m

T (V, E) :

V is set of users active during interval T

An edge in E connects users that asked for at least m common files within T

P2P Systems – 73

The DØ Collaboration

2

1.5

1

0.5

0

4

3.5

3

2.5

6 months of traces (January – June 2002)

300+ users, 2 million requests for 200K files

Average path length: 7days, 50 files

Random D0

Small average path length

1

0.9

0.8

0.7

0.6

0.5

0.4

0.3

0.2

0.1

0

CCoef =

# Existing Edges

# Possible

Edges

Clustering coeficient: 7days, 50 files

Random D0

Small World!

Large clustering coefficient

P2P Systems – 74

Small-World Graphs

• Small path length, large clustering coefficient

– Typically compared against random graphs

• Think of:

– “It’s a small world!”

– “Six degrees of separation”

• Milgram’s experiments in the 60s

• Guare’s play “Six Degrees of Separation”

P2P Systems – 75

10.0

Other Small Worlds

Food web

Power grid

LANL coauthors

Web

Film actors

1.0

Internet

Word co-occurrences

0.1

1 10 100 1000 10000

Clustering coefficient ratio (log scale)

D. J. Watts and S. H. Strogatz, Collective dynamics of small-world networks . Nature, 393:440-442, 1998

R. Albert and A.-L. Barabási, Statistical mechanics of complex networks , R. Modern Physics 74, 47 (2002).

Web Data-Sharing Graphs

10.0

300s,

1file

Web data-sharing graph

Other small-world graphs

1800s,

10file

7200s,

50files

1.0

1800s,

100files

3600s,

50files

0.1

1 10 100 1000 10000

Clustering coefficient ratio (log scale)

Data-Sharing Relationships in the Web, Iamnitchi, Ripeanu, and Foster, WWW’03

P2P Systems – 77

10.0

DØ Data-Sharing Graphs

Web data-sharing graph

D0 data-sharing graph

Other small-world graphs

1.0

0.1

1

28 days,

1 file

7days,

1file

10 100 1000 10000

Clustering coefficient ratio (log scale)

P2P Systems – 78

KaZaA Data-Sharing Graphs

10.0

Web data-sharing graph

D0 data-sharing graph

Other small-world graphs

Kazaa data-sharing graph

2 hours

1 file

1.0

4h

2 files

28 days

1 file

12h

4 files

7day,

1file

1 day

2 files

0.1

1 10 100 1000 10000

Clustering coefficient ratio (log scale)

Small-World File-Sharing Communities, Iamnitchi, Ripeanu, and Foster, Infocom ‘04

P2P Systems – 79

Overview

• Small-world file sharing communities:

– The data-sharing graph

– Traces from 3 file-sharing communities:

• D0, Kazaa, Web

– It’s a small world!

• Exploiting small-world patterns:

– Overlay construction

– Cluster identification

– Information dissemination

P2P Systems – 80

Exploiting Small-World Patterns

• Exploit the small-world properties of the data-sharing graph:

– Large clustering coefficient

– (… and small average path length)

• Interest-aware information dissemination

– Objective: dynamically identify groups of users with proven common interests in data

– Direct relevant information to groups of interest

• Case study: File location

– Concrete problem

– Real traces

– Specific performance metrics

– Real, new requirements

• Other mechanisms:

– Reputation mechanisms

– Replica placement

– …

P2P Systems – 81

Graph construction

Clustering

Dissemination

Interest-Aware Information Dissemination in Small-World Communities,

Iamnitchi and Foster, HPDC’05

P2P Systems – 82

Step 1: Graph Construction

Objective:

Make nodes aware of their common interests without central control

N

<T

1

, F>

N

F

A

X, T

0

, F x

A, T

1

C, T

1

, F

, F

3

…

<T

2

, F>

N

A,T

1

,F

F

B

Log access when downloading file (not when requesting location!)

P2P Systems – 83

Step 2: Clustering

• (extra) Challenge: no global knowledge (graph)

• Idea: Label edges

– Each node labels its edges as ‘short’ or ‘long’ based only on local information

• Multiple ways to define ‘short’/‘long’:

– “Short” edge if:

• Dead end

• In a triad

– “Long” otherwise

Web

70

60

50

40

30

20

10

0

2 min 5 min 15 min 30 min

• Skewed cluster size distribution

• Similar results obtained with centralized algorithm

• Need solutions to limit size

P2P Systems – 84

P2P Systems – 85

Step 3: Information Dissemination (1)

Hit rate due to previous information dissemination within clusters: up to 70%

(compared to under 5% for random groups of same size)

100

90

80

70

60

50

40

30

20

10

0

Web

2 min

Except largest cluster

Total hit rate

5 min 15 min 30 min

D0 100

90

80

70

60

50

40

30

20

10

Except largest cluster

Total hit rate

0

Kazaa

100

3 days 7 days 10 days 14 days 21 days 28 days

Except largest cluster

Total hit rate

90

80

70

60

50

40

30

20

10

0

1 hour 4 hours 8 hours

P2P Systems – 86

Step 3: Information Dissemination (2)

3 days, 10 files 3 days, 100 files

CDF of files per collection found locally due to information dissemination.

% collection found locally due to information dissemination

21 days, 100 files 21 days, 500 files

% collection found locally due to information dissemination

P2P Systems – 87

The Message:

•The D0 experiment

•Web traces

•KaZaA network

Small Worlds!

…that can be exploited for designing algorithms

Dissemination

– Information dissemination

– Must be other mechanisms, as well!

P2P Systems – 88

Where Are We?

• We saw the major solutions in unstructured P2P systems:

– Napster

– Gnutella

– BitTorrent

• And a solution that starts from usage patterns to get inspiration for system design

– Exploiting small-world patterns in file-sharing

• There are many other ideas for unstructured p2p networks but

• There are also the structured p2p networks!

P2P Systems – 89