

Inter Process Synchronization and Communication

CT213 – Computing Systems Organization

Content

• Parallel computation

• Process interaction

– Processes unaware of each other

– Processes indirectly aware of each other

– Processes directly aware of each other

• Critical sections

• Mutual exclusion

– Software approaches (Dekker Algorithm, Peterson’s Algorithm)

– Hardware approaches (Interrupt disabling, ISA modification)

• Semaphores & mutual exclusion with semaphores

• Producer Consumer problems

• Semaphores implementation

• Messages & design issues

• Readers/Writers problem

Parallel Computation

• A parallel computation is made up of multiple, independent parts that are able to execute simultaneously, using one process or thread per part

– In some cases the different parts are defined by one program, but in general they are defined by multiple programs

– Cooperation is achieved through logically shared

memory that is used to share information and to

synchronize the operation of the constituent processes

• The operating system should provide at least a

base level mechanism to support sharing and

synchronization among a set of processes

Operating System Concerns

• Keep track of active processes – done using process

control block

• Allocate and de-allocate resources for each active process

(Processor time, Memory, Files, I/O devices)

• Protect data and resources against unwanted interference

from other processes

– Programming errors made in one process should not affect the stability of other processes in the system

• The result of a process must be independent of the speed of execution of other concurrent processes

– This includes process interaction and synchronization, and it is the subject of this presentation

Process Interaction

• Processes unaware of each other

– Independent processes that are not intended to work together, e.g. two independent applications that want to access the same printer or the same file; the operating system must regulate these accesses; these processes exhibit competition

• Processes indirectly aware of each other

– Processes that do not know each other’s process IDs, but share some object (such as an I/O buffer); such processes exhibit cooperation

• Processes directly aware of each other

– These are processes that are able to communicate to each other

by means of process IDs; they are usually designed to work

jointly on some activity; these processes exhibit cooperation

Competition Among Processes for

Resources

• Two or more processes need to access a resource

– Each process is not aware of the existence of the other

– Each process should leave the state of the resource it uses unaffected (I/O devices,

memory, processor, etc)

• Execution of one process may affect the execution of the others

– Two processes wish to access a single resource

– One process will be allocated the resource, the other one waits (and is therefore slowed down)

– Extreme case: the waiting process may never get access to the resource, and therefore never terminates successfully

• Control problems:

– Mutual Exclusion

• Critical sections

– Only one program at a time is allowed in a critical section

– e.g. only one process at a time is allowed to send commands to the printer

– Deadlock

• P1 and P2 with R1 and R2; each process needs to access both resources to perform part of its function; R1 gets allocated to P2 and R2 gets allocated to P1; both processes will wait indefinitely for the resource held by the other: deadlock

– Starvation

• P1, P2, P3 and resource R; P1 and P3 repeatedly take turns accessing resource R, so P2 may never get access to it, even though there is no deadlock: starvation

Cooperation Among Processes by

Sharing

• Processes that interact with other processes without being

aware of them

– e.g. multiple processes that have access to global variables, shared files or databases

– The control mechanism must ensure the integrity of the shared

data

• Control problems:

– Deadlock

– Starvation

– Mutual exclusion

– Data coherence

• Data items are accessed in two modes: read and write

• Writing must be mutually exclusive to shared resources

• Critical sections are used to provide data integrity

Cooperation Among Processes by

Communication

• When processes cooperate by communication, various

processes participate in a common effort that links all of

the processes

– Communication provides a way to sync or coordinate the

various activities

• Communication can be characterized by

sending/receiving messages of some sort

– Mutual exclusion is not a control requirement, since nothing is shared between processes in the act of passing messages

• Control problems:

– Possible to have deadlock

• Each process waiting for a message from the other process

– Possible to have starvation

• Two processes sending messages to each other while another process waits for a message

Example of Communication by Sharing

problems

• Process 1 KEYBOARD:

– Services the keyboard interrupts

– The read characters are placed in a keyboard buffer

• Process 2 DISPLAY

– Displays the content of buffer on the monitor

• Shared resources:

• Input buffer

• Characters counter of how many chars in buffer and not

displayed

KEYBOARD and DISPLAY processes

     Keyboard process           Display process
1.   ld AC, counter        1.   ld AC, counter
2.   inc AC                2.   dec AC
3.   store AC, counter     3.   store AC, counter
4.   …                     4.   …
5.   …                     5.   …

• If the KEYBOARD process is interrupted after instruction 1 (its registers, i.e. its context, are saved and later restored when it regains the CPU) and control passes to the DISPLAY process, DISPLAY changes the value of counter in memory; when KEYBOARD resumes, it continues with the stale value in AC and overwrites DISPLAY’s update, so the counter is wrong and the two processes function improperly from this time forward

• Causes for malfunction:

– The presence of two copies of same counter variable, one in memory and one in AC

with different values

– Parallel execution of the two processes

• Situations where two or more processes are reading or writing some shared data

and the final result depends on who runs precisely when, are called race

conditions
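The lost update described above can be replayed deterministically. The following is a minimal sketch in C, not code from the lecture: the two processes are simulated by executing their load/modify/store steps by hand in the harmful order, and the names `ac_keyboard`, `ac_display` and `replay_interleaving` are assumptions made for illustration.

```c
/* Shared variable: characters in the buffer that are not yet displayed. */
static int counter;

/* Replays the harmful interleaving and returns the final counter value.
 * Each simulated process has its own saved accumulator (its context). */
int replay_interleaving(int initial)
{
    int ac_keyboard, ac_display;

    counter = initial;

    ac_keyboard = counter;   /* KEYBOARD 1: ld AC, counter               */
    /* KEYBOARD is interrupted here; its AC is saved with its context.   */

    ac_display = counter;    /* DISPLAY  1: ld AC, counter               */
    ac_display--;            /* DISPLAY  2: dec AC                       */
    counter = ac_display;    /* DISPLAY  3: store AC, counter            */

    /* KEYBOARD resumes with the stale value in its restored AC.         */
    ac_keyboard++;           /* KEYBOARD 2: inc AC                       */
    counter = ac_keyboard;   /* KEYBOARD 3: store AC, counter            */

    return counter;
}
```

One increment plus one decrement should leave the counter unchanged, yet `replay_interleaving(5)` returns 6: the decrement made by DISPLAY is overwritten by the stale copy held by KEYBOARD.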

Critical Sections

• A critical section is a part of the code (belonging to a process) that, during its execution, has exclusive control over a system resource

• It has a well-defined beginning and end

• While a process executes its critical section, it must not be interrupted in favour of another process that uses the same system resource

• At a given moment, there is just one active critical

section corresponding to a given resource

• A critical section is executed in mutual exclusion mode

• Critical section examples:

– Value update for a global shared variable

– Modification of a shared database record

– Printing to a shared printer

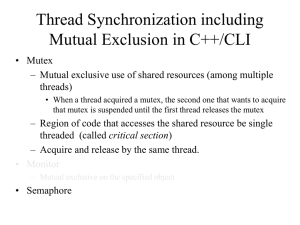

Mutual Exclusion

• If we could arrange matters so that no two processes were ever in their critical regions at the same time, we could avoid race conditions

• This requirement avoids race conditions, but it is not sufficient for having parallel processes cooperate correctly and efficiently using shared data. Four conditions must hold to achieve good results:

1. No two processes may be simultaneously inside their critical regions (mutual exclusion)

2. No assumption may be made about speeds or the number of CPUs (independence of speed and number of processors)

3. No process outside its critical section may block other processes (correct functionality is to be guaranteed if one of the processes terminates abnormally outside of the critical section)

4. No process should have to wait forever to enter its critical section (access to the critical section is guaranteed in finite time)

Mutual Exclusion

• While one process is busy updating shared

memory (or modifying a shared resource) in its

critical region, no other process will enter its

critical region and cause trouble

• There are a number of different approaches:

– Software approaches

• Dekker Algorithm

• Peterson’s Algorithm

– Hardware approaches

• Interrupt disabling

• ISA modification

Dekker’s Algorithm – First Attempt

    void proc1(){
        while (TRUE){
            while (turn == PROC2){;}   /* busy wait */
            /* critical section code */
            turn = PROC2;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            while (turn == PROC1){;}   /* busy wait */
            /* critical section code */
            turn = PROC1;
            something_else();
        }
    }

    int turn; /* global variable */

    main(){
        turn = PROC1;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• turn is a global memory location that both processes can access; each process examines the value of turn, and if it is equal to the process’s own number, the process may proceed to its critical section

• This procedure is known as busy waiting, since a waiting process does nothing productive, yet still occupies the processor, until it gets access to the critical section

• Mutual exclusion conditions:

1. Satisfied

2. Unsatisfied – the processes can only execute in strict alternation, so the pace of execution is set by the slower process

3. Unsatisfied – abnormal termination of one process blocks the other

4. Satisfied

Dekker’s Algorithm – Second Attempt

    void proc1(){
        while (TRUE){
            while (p2use == TRUE){;}
            p1use = TRUE;
            /* critical section code */
            p1use = FALSE;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            while (p1use == TRUE){;}
            p2use = TRUE;
            /* critical section code */
            p2use = FALSE;
            something_else();
        }
    }

    /* global variables */
    int p1use, p2use;

    main(){
        p1use = FALSE;
        p2use = FALSE;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• The problem with the first attempt is that it stores the name of the process that may enter its critical section, when in fact we need state information about both processes

• Each process should have its own key to the critical section, so that if one process fails, the other can still access the critical section

• Mutual exclusion conditions:

1. Unsatisfied – if proc1 is interrupted after testing p2use but right before setting p1use = TRUE, and the second process takes over the processor, then at some stage both processes will be inside the critical section

2. Satisfied

3. Satisfied if the processes do not fail in the critical section or while setting the flags

4. Satisfied

Dekker’s Algorithm – Third Attempt

    void proc1(){
        while (TRUE){
            p1use = TRUE;
            while (p2use == TRUE){;}
            /* critical section code */
            p1use = FALSE;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            p2use = TRUE;
            while (p1use == TRUE){;}
            /* critical section code */
            p2use = FALSE;
            something_else();
        }
    }

    int p1use, p2use; /* global variables */

    main(){
        p1use = FALSE;
        p2use = FALSE;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• Because one process could change its state after the other had checked it, both processes could enter the critical section, so the second attempt failed

• Perhaps we can fix this just by swapping the position of two statements …

• Mutual exclusion conditions:

1. Satisfied

2. Satisfied

3. Satisfied if the processes do not fail in the critical section or while setting the flags; unsatisfied otherwise (the other process is blocked)

4. Unsatisfied – if both processes set their flags to TRUE before either of them has executed the while statement, then each will think that the other has entered its critical section, causing deadlock

Dekker’s Algorithm – Fourth Attempt

    void proc1(){
        while (TRUE){
            p1use = TRUE;
            while (p2use == TRUE){
                p1use = FALSE;   /* back off */
                delay(…);
                p1use = TRUE;    /* try again */
            }
            /* critical section code */
            p1use = FALSE;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            p2use = TRUE;
            while (p1use == TRUE){
                p2use = FALSE;   /* back off */
                delay(…);
                p2use = TRUE;    /* try again */
            }
            /* critical section code */
            p2use = FALSE;
            something_else();
        }
    }

    int p1use, p2use; /* global variables */

    main(){
        p1use = FALSE;
        p2use = FALSE;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• The third attempt failed because deadlock occurred as a result of each process irreversibly setting its state to TRUE before actually performing the test; there was no way to back off from this position

• Try to fix this by having each process indicate its desire to enter the critical section, while being prepared to defer to the other process

• Mutual exclusion conditions:

1. Satisfied

2. Unsatisfied – if the processes execute at exactly the same speed, they can keep deferring to each other and neither will enter the critical section

3. Satisfied if the processes do not fail in the critical section or while setting the flags; unsatisfied otherwise (the other process is blocked)

4. Satisfied

Dekker’s Algorithm – Correct Version

    void proc1(){
        while (TRUE){
            p1use = TRUE;
            while (p2use == TRUE){
                if (turn == PROC2){
                    p1use = FALSE;              /* defer to proc2 */
                    while (turn == PROC2){;}
                    p1use = TRUE;
                }
            }
            /* critical section code */
            turn = PROC2;
            p1use = FALSE;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            p2use = TRUE;
            while (p1use == TRUE){
                if (turn == PROC1){
                    p2use = FALSE;              /* defer to proc1 */
                    while (turn == PROC1){;}
                    p2use = TRUE;
                }
            }
            /* critical section code */
            turn = PROC1;
            p2use = FALSE;
            something_else();
        }
    }

    int p1use, p2use, turn; /* global variables */

    main(){
        p1use = FALSE;
        p2use = FALSE;
        turn = PROC1;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• This algorithm respects the four conditions of mutual exclusion, but only for two concurrent processes

• Mutual exclusion conditions:

1. Satisfied

2. Satisfied

3. Satisfied

4. Satisfied

Peterson’s Algorithm

    void proc1(){
        while (TRUE){
            p1use = TRUE;
            turn = PROC2;
            while (p2use == TRUE && turn == PROC2){;}
            /* critical section */
            p1use = FALSE;
            something_else();
        }
    }

    void proc2(){
        while (TRUE){
            p2use = TRUE;
            turn = PROC1;
            while (p1use == TRUE && turn == PROC1){;}
            /* critical section */
            p2use = FALSE;
            something_else();
        }
    }

    int p1use, p2use;
    int turn;

    main(){
        p1use = FALSE;
        p2use = FALSE;
        init_proc(&proc1(), …);
        init_proc(&proc2(), …);
    }

• If proc2 is in its critical section, then p2use = TRUE and proc1 is blocked from entering its critical section

• Peterson’s Algorithm can be generalized to n threads

• Mutual exclusion conditions:

1. Satisfied

2. Satisfied

3. Satisfied

4. Satisfied
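Peterson’s entry and exit protocol can be sketched in standard C as follows. This is an illustration under stated assumptions rather than the lecture’s code: processes are numbered 0 and 1 instead of PROC1/PROC2, the array `wants` plays the role of p1use/p2use, the function names are hypothetical, and C11 sequentially consistent atomics are used because plain variables may be reordered by modern compilers and processors, which would break the algorithm.

```c
#include <stdatomic.h>
#include <stdbool.h>

static atomic_bool wants[2];   /* zero-initialised: nobody wants to enter */
static atomic_int  turn;       /* index of the process that gets priority */

/* Entry protocol, part 1: announce interest, then defer to the other. */
void peterson_announce(int self)
{
    atomic_store(&wants[self], true);
    atomic_store(&turn, 1 - self);
}

/* True while the other process is interested and has priority. */
bool peterson_must_wait(int self)
{
    int other = 1 - self;
    return atomic_load(&wants[other]) && atomic_load(&turn) == other;
}

/* Full entry protocol: announce, then busy wait while we must defer. */
void peterson_lock(int self)
{
    peterson_announce(self);
    while (peterson_must_wait(self)) { /* busy wait */ }
}

/* Exit protocol: withdraw interest so the other process may proceed. */
void peterson_unlock(int self)
{
    atomic_store(&wants[self], false);
}
```

Each of the two threads would wrap its critical section in `peterson_lock(id)` and `peterson_unlock(id)` with its own id. Splitting the entry protocol into two helpers is only a design choice that makes the waiting condition easy to inspect.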

Hardware Solutions - Disabling Interrupts

• Simplest solution is to have the process disable interrupts right

after entering the critical region and enable them just before

leaving it

– With interrupts disabled, no interrupts will occur;

– The processor is switched from process to process only as result of interrupt

occurrence, so the processor will not be switched to other process

• It is unwise to give a user process the power to turn off interrupts

– Suppose a process did so and never turned them on again …

– Suppose it is a multiprocessor system: disabling interrupts on one processor does not stop the other processors from accessing the shared memory

• It is a useful technique within the operating system itself, but it is

not appropriate as general mutual exclusion mechanism for user

processes

    while (TRUE){
        /* disable interrupts */
        /* critical section */
        /* enable interrupts */
        something_else();
    }

Special Machine Instructions

• In a multiprocessor configuration, several processors

share access to a common main memory; the processors

behave independently

• There is no interrupt mechanism between processors on

which mutual exclusion can be based

• At a hardware level, access to a memory location

excludes any other access to same memory location;

based on this, several machine level instructions to help

mutual exclusion have been added. Most common ones:

– Test & Set Instruction

– Exchange Instruction

• Because each of these operations is performed atomically, as a single instruction, it is not subject to interference from other instructions

Test & Set Instruction

    /* test & set instruction: executed atomically by the hardware;
       i denotes the memory location itself, not a copy of its value */
    boolean testset (int i) {
        if (i == 0){
            i = 1;
            return TRUE;
        }
        else{
            return FALSE;
        }
    }

    void proc(int i){
        while (TRUE){
            while (!testset(bolt)){;}   /* busy wait */
            /* critical section */
            bolt = 0;
            something_else();
        }
    }

    /* number of processes */
    const int n = 2;
    int bolt;

    main(){
        int i;
        i = n;
        bolt = 0;
        while (i > 0){
            init_proc(&proc(i), …);
            i--;
        }
    }

• The shared variable bolt is initialized to 0;

• The only process that may enter its critical section is the one that finds the bolt

variable equal to 0

• All other processes that attempt to enter the critical section go into a busy waiting mode

• When a process leaves the critical section, it resets bolt to 0; at this point only one of the waiting processes is granted access to the critical section
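Standard C exposes a test-and-set primitive through `atomic_flag` in `<stdatomic.h>`, so the bolt protocol above can be sketched as below. The function names are assumptions made for illustration. Note one difference from the slide’s testset: `atomic_flag_test_and_set` returns the previous value of the flag, so a return value of false means the caller acquired the bolt.

```c
#include <stdatomic.h>
#include <stdbool.h>

static atomic_flag bolt = ATOMIC_FLAG_INIT;   /* clear = critical section free */

/* One attempt: true if the caller found bolt clear and has now set it. */
bool bolt_try_enter(void)
{
    /* Atomically sets the flag and returns the value it had before. */
    return !atomic_flag_test_and_set(&bolt);
}

/* Entry protocol: spin until an attempt succeeds (busy waiting). */
void bolt_enter(void)
{
    while (!bolt_try_enter()) { /* busy wait */ }
}

/* Exit protocol: corresponds to "bolt = 0" on the slide. */
void bolt_leave(void)
{
    atomic_flag_clear(&bolt);
}
```

A process would call `bolt_enter()` before its critical section and `bolt_leave()` after it; any number of threads can share the same flag.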

Exchange Instruction

    /* exchange instruction: executed atomically by the hardware;
       reg and memory denote the register and the memory location
       themselves, not copies of their values */
    void exchange (int reg, int memory) {
        int temp;
        temp = memory;
        memory = reg;
        reg = temp;
    }

    void proc(int i){
        int keyi;
        while (TRUE){
            keyi = 1;
            while (keyi != 0){
                exchange(keyi, bolt);   /* busy wait until bolt was 0 */
            }
            /* critical section */
            exchange(keyi, bolt);       /* put the 0 back into bolt */
            something_else();
        }
    }

    /* number of processes */
    const int n = 2;
    int bolt;

    main(){
        int i;
        i = n;
        bolt = 0;
        while (i > 0){
            init_proc(&proc(i), …);
            i--;
        }
    }

• The instruction exchanges the contents of a register with that of a

memory location; during the execution of the instruction, access to

that memory location is blocked for any other instruction

• The shared variable bolt is initialized to 0; each process holds a

local variable key that is initialized to 1

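• The only process that enters the critical section is the one that finds the variable bolt equal to 0; the exchange leaves bolt set to 1, which excludes all other processes until the process exchanges again on leaving and resets bolt to 0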
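The same protocol can be written with the C11 `atomic_exchange` operation, which atomically stores a new value and returns the old one. This is a minimal sketch with hypothetical function names; `key` is the local variable described above, and the exit step simply stores 0 instead of swapping the key back.

```c
#include <stdatomic.h>
#include <stdbool.h>

static atomic_int bolt;   /* zero-initialised: 0 = free, 1 = taken */

/* One exchange: true if the caller swapped its 1 for a 0 (it now holds the bolt). */
bool xchg_try_enter(void)
{
    int key = 1;
    key = atomic_exchange(&bolt, key);   /* key receives the old value of bolt */
    return key == 0;
}

/* Entry protocol: repeat the exchange until it yields 0 (busy waiting). */
void xchg_enter(void)
{
    while (!xchg_try_enter()) { /* busy wait */ }
}

/* Exit protocol: hand the 0 back so that another process can obtain it. */
void xchg_leave(void)
{
    atomic_exchange(&bolt, 0);
}
```

A failed attempt swaps a 1 for a 1, so it leaves bolt unchanged and is harmless to repeat.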

Properties of Hardware Approach

• Advantages:

– Simple and easy to verify

– It is applicable to any number of processes on either a single processor or

multiple processors sharing main memory

– It can be used to support multiple critical sections, each critical section

defined by its own global variable

• Problems:

– Busy waiting is employed

– Starvation is possible – selection of a waiting process is arbitrary, thus some

process could indefinitely be denied access

– Deadlock is possible – consider following scenario:

• P1 executes the special instruction (test&set or exchange) and enters its critical section

• P1 is interrupted to give the processor to P2 (which has higher priority)

• P2 wants to use the same resource as P1, so it tries to enter its critical section; it tests the critical section variable and busy-waits

• P1 will never be dispatched again because it has lower priority than another ready process, P2

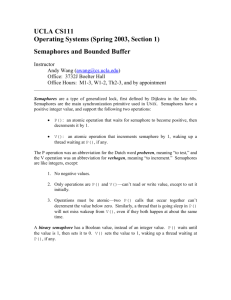

Semaphores

• Because of the drawback of both the software and

hardware solutions, we need to look into other

solutions

• Dijkstra defined semaphores

– Two or more processes can cooperate by means of

simple signals, such that a process can be forced to

stop at a specific place until it has received a specific

signal

– For signaling, special variables, called semaphores are

used

Semaphores

• Special variable called a semaphore is used for

signaling

– to transmit a signal, execute signal(s)

• Dijkstra used V for signal (increment - verhogen in Dutch)

– to receive a signal, execute wait(s)

• Dijkstra used P for wait (test - proberen in Dutch)

• If a process is waiting for a signal, it is suspended

until that signal is sent

• Wait and Signal operations cannot be interrupted

• A queue is used to hold processes waiting on the

semaphore

Semaphores – Simplified view

• We can view the semaphore as a variable that has

an integer value; three operations are defined

upon this value:

– A semaphore may be initialized to a non-negative

value

– The wait operation decrements the semaphore value. If

the value becomes negative, then the process

executing wait is blocked

– The signal operation increments the semaphore value.

If the value is not positive, then a process blocked by a

wait operation is unblocked

Semaphores – Formal view

• A queue is used to hold processes waiting on a semaphore

– In what order should the processes be removed from the queue?

• The fairest policy is FIFO; a semaphore that implements this is called a strong semaphore

• A semaphore that doesn’t specify the order in which processes are removed from the queue is known as a weak semaphore

    struct semaphore {
        int count;
        queueType queue;
    };

    void wait(semaphore s)
    {
        s.count--;
        if (s.count < 0)
        {
            place this process in s.queue;
            block this process;
        }
    }

    void signal(semaphore s)
    {
        s.count++;
        if (s.count <= 0)
        {
            remove a process P from s.queue;
            place process P on ready list;
        }
    }

Binary Semaphores

• A more restrictive version of semaphores

• A binary semaphore may take on the values 0 or 1

• In principle it should be easier to implement binary semaphores, and they have the same expressive power as the general semaphore

• Both semaphores and binary semaphores have a queue to hold the waiting processes

    struct binary_semaphore {
        enum {zero, one} value;
        queueType queue;
    };

    void waitB(binary_semaphore s){
        if (s.value == one)
            s.value = zero;
        else{
            place this process in s.queue;
            block this process;
        }
    }

    void signalB(binary_semaphore s){
        if (s.queue.is_empty())
            s.value = one;
        else{
            remove a process P from s.queue;
            place process P on ready list;
        }
    }

Example of Semaphore Mechanism

• Processes A, B and C depend on the results from

process D

• Initially, process D has produced an item of its

resources and it is available (the value of the

semaphore is s=1)

• (1) A is running; B, D and C are ready. When A issues a wait(s) it immediately passes the semaphore (s becomes 0) and is able to continue its execution, so it rejoins the ready queue

• (2) B runs, executes a wait(s) primitive and is suspended (allowing D to run)

• (3) D completes a new result and issues a signal(s)

• (4) The signal(s) from (3) moves B to the ready queue; D rejoins the ready queue and C is allowed to run

• (5) C is suspended when it issues a wait(s); similarly, A and B are suspended on the semaphore

• (6) D takes the processor again, produces another result and calls signal(s)

• (7) C is removed from the semaphore queue and placed on the ready list

• Later cycles of D will release A and B from suspension
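The walk-through above can be checked with a small single-threaded simulation of a strong (FIFO) semaphore. This is a sketch under assumptions, not an operating system implementation: processes are represented by characters such as 'A', nothing is really blocked or scheduled, the names `sim_semaphore`, `sim_wait` and `sim_signal` are hypothetical, and at most `MAX_WAITING` processes are assumed to wait at once.

```c
#include <stdbool.h>

#define MAX_WAITING 8   /* assumed upper bound on blocked processes */

struct sim_semaphore {
    int  count;                 /* semaphore value; negative = number waiting */
    char queue[MAX_WAITING];    /* circular FIFO of blocked processes         */
    int  head, len;
};

/* wait(s): returns true if the caller continues, false if it is now blocked. */
bool sim_wait(struct sim_semaphore *s, char proc)
{
    s->count--;
    if (s->count < 0) {
        s->queue[(s->head + s->len) % MAX_WAITING] = proc;  /* enqueue */
        s->len++;
        return false;
    }
    return true;
}

/* signal(s): returns the process moved to the ready list, or 0 if none waited. */
char sim_signal(struct sim_semaphore *s)
{
    s->count++;
    if (s->count <= 0) {
        char released = s->queue[s->head];                  /* dequeue oldest */
        s->head = (s->head + 1) % MAX_WAITING;
        s->len--;
        return released;
    }
    return 0;
}
```

Starting from `count = 1`, the calls wait(A), wait(B), signal, wait(C), wait(A), wait(B), signal reproduce steps (1) to (7): A passes, B blocks and is released by the first signal, and the second signal releases C because it has waited longest. Two further signals release A and then B.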

Mutual Exclusion with Semaphores

    /* program mutual exclusion */
    const int n = 3; /* number of processes */
    binary_semaphore lock = 1;

    void proc(int i){
        while (true){
            waitB(lock);
            /* critical section */
            signalB(lock);
            /* other processing */
        }
    }

    void main(){
        int i;
        i = n;
        while (i > 0){
            init_proc(&proc(i), …);
            i--;
        }
    }

Producer/Consumer Problem

• Problem formulation:

– One or more producers generate some type of data (e.g. records, characters, etc.) and place these in a buffer

– One or more consumers take items out of the buffer, one at a time; only one agent (either producer or consumer) can access the buffer at a time

– The system is to be constrained to prevent the overlap of buffer operations

• Examine a number of solutions to this problem

Infinite linear array buffer

producer:

    while (true) {
        /* produce item v */
        Buff[in] = v;
        in++;
    }

consumer:

    while (true) {
        while (in <= out) {;} /* do nothing */
        w = Buff[out];
        out++;
        /* consume item w */
    }

[Figure: infinite linear array Buff[0], Buff[1], Buff[2], …, with the pointers out and in. Note: shaded area indicates occupied locations]

• The consumer has to make sure that it will not read data from an empty buffer (it makes sure in > out)

• Try to implement this using binary semaphores

One producer, One consumer, Infinite

buffer

    void producer(){
        while (TRUE){
            produce_object();
            append();
            signal(product);
            something_else();
        }
    }

    void consumer(){
        while (TRUE){
            wait(product);
            take();
            consume_object();
            something_else();
        }
    }

    main(){
        create_semaphore(product);
        init_proc(&producer(), …);
        init_proc(&consumer(), …);
    }

• product is a semaphore that counts the number of objects placed in the buffer and not consumed yet

• The value of product is (in – out) (see the previous buffer)

• This solution works only if there is just one consumer, one producer and the intermediary buffer is infinite. With multiple producers or consumers, append() and take() need to be serialized (as they update the buffer pointers)

Multiple producers, Multiple consumers

and infinite buffer

    void producerX(){
        while (TRUE){
            produce_object();
            wait(buff_access_prod);
            append();
            signal(buff_access_prod);
            signal(product);
            something_else();
        }
    }

    void consumerY(){
        while (TRUE){
            wait(product);
            wait(buff_access_cons);
            take();
            signal(buff_access_cons);
            consume_object();
            something_else();
        }
    }

    main(){
        create_semaphore(product);
        create_semaphore(buff_access_prod);
        create_semaphore(buff_access_cons);
        init_proc(&producer1(), …);
        init_proc(&producer2(), …);
        /*…*/
        init_proc(&producerN(), …);
        init_proc(&consumer1(), …);
        init_proc(&consumer2(), …);
        /*…*/
        init_proc(&consumerM(), …);
    }

• buff_access_prod is a semaphore that synchronizes the access of the producers to the buffer

• buff_access_cons is a semaphore that synchronizes the access of the consumers to the buffer

• product is a semaphore that counts the produced objects not yet consumed

Finite (circular) buffer

producer:

    while (true) {
        /* produce item v */
        while ((in + 1) % n == out){;} /* do nothing while buffer full */
        Buff[in] = v;
        in = (in + 1) % n;
    }

consumer:

    while (true) {
        while (in == out){;} /* do nothing while no data */
        w = Buff[out];
        out = (out + 1) % n;
        /* consume item w */
    }

[Figure: finite circular buffer Buff[0] … Buff[n-1], shown in two states with the pointers in and out. Note: shaded area indicates occupied locations]

• Block:

(i) Producer – insert in full buffer

(ii) Consumer – remove from empty buffer

• Unblock:

(i) A consumer removes an item (a waiting producer can continue)

(ii) A producer inserts an item (a waiting consumer can continue)
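The index arithmetic of the circular buffer can be exercised without any concurrency. The sketch below uses non-blocking helpers that return false exactly where the slide’s producer or consumer would have to wait; the helper names and the buffer size `N = 4` are assumptions made for illustration.

```c
#include <stdbool.h>

#define N 4                  /* buffer size, illustrative value */

static int Buff[N];
static int in, out;          /* both start at 0: buffer empty */

bool buf_is_empty(void) { return in == out; }
bool buf_is_full(void)  { return (in + 1) % N == out; }

/* Insert v; returns false (instead of spinning) when the buffer is full. */
bool buf_put(int v)
{
    if (buf_is_full())
        return false;
    Buff[in] = v;
    in = (in + 1) % N;       /* advance with wrap-around */
    return true;
}

/* Remove the oldest item into *w; returns false when the buffer is empty. */
bool buf_get(int *w)
{
    if (buf_is_empty())
        return false;
    *w = Buff[out];
    out = (out + 1) % N;     /* advance with wrap-around */
    return true;
}
```

Because `in == out` is reserved to mean "empty", the full test `(in + 1) % N == out` leaves one slot unused: a buffer of N slots holds at most N - 1 items.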

Multiple producers, Multiple consumers,

Finite buffer

    void producerX(){
        while (TRUE){
            produce_object();
            wait(buff_space);
            wait(buff_access_prod);
            append();
            signal(buff_access_prod);
            signal(product);
            something_else();
        }
    }

    void consumerY(){
        while (TRUE){
            wait(product);
            wait(buff_access_cons);
            take();
            signal(buff_access_cons);
            signal(buff_space);
            consume_object();
            something_else();
        }
    }

    main(){
        create_semaphore(product);
        create_semaphore(buff_access_prod);
        create_semaphore(buff_access_cons);
        create_semaphore(buff_space);
        /* initialize the buff_space semaphore to the size of the buffer */
        init_proc(&producer1(), …);
        init_proc(&producer2(), …);
        /*…*/
        init_proc(&producerN(), …);
        init_proc(&consumer1(), …);
        init_proc(&consumer2(), …);
        /*…*/
        init_proc(&consumerM(), …);
    }

• buff_space is a semaphore that counts the free space in the buffer

• product is a semaphore that counts the items produced and not consumed yet

• buff_access_prod is a semaphore that synchronizes the access of the producers to the buffer

• buff_access_cons is a semaphore that synchronizes the access of the consumers to the buffer
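The scheme above maps directly onto POSIX threads and semaphores, where `sem_wait` and `sem_post` play the roles of wait and signal. The following is a sketch under assumptions, not the lecture’s implementation: the sizes, the function `run_bounded_buffer` and the choice of initial values (product = 0, buff_space = buffer size, both access semaphores = 1) are illustrative, and error checking is omitted for brevity.

```c
#include <pthread.h>
#include <semaphore.h>

#define SLOTS      4      /* buffer size (illustrative)                 */
#define PRODUCERS  2
#define CONSUMERS  2
#define PER_THREAD 1000   /* items handled by each producer or consumer */

static int   buff[SLOTS];
static int   in, out;
static long  consumed_sum;   /* only updated while holding buff_access_cons */
static sem_t product, buff_space, buff_access_prod, buff_access_cons;

static void *producer(void *arg)
{
    (void)arg;
    for (int i = 1; i <= PER_THREAD; i++) {
        sem_wait(&buff_space);          /* wait(buff_space)         */
        sem_wait(&buff_access_prod);    /* wait(buff_access_prod)   */
        buff[in] = i;                   /* append()                 */
        in = (in + 1) % SLOTS;
        sem_post(&buff_access_prod);    /* signal(buff_access_prod) */
        sem_post(&product);             /* signal(product)          */
    }
    return NULL;
}

static void *consumer(void *arg)
{
    (void)arg;
    for (int i = 0; i < PER_THREAD; i++) {
        sem_wait(&product);             /* wait(product)            */
        sem_wait(&buff_access_cons);    /* wait(buff_access_cons)   */
        consumed_sum += buff[out];      /* take()                   */
        out = (out + 1) % SLOTS;
        sem_post(&buff_access_cons);    /* signal(buff_access_cons) */
        sem_post(&buff_space);          /* signal(buff_space)       */
    }
    return NULL;
}

/* Runs all threads to completion and returns the sum of the consumed items. */
long run_bounded_buffer(void)
{
    pthread_t p[PRODUCERS], c[CONSUMERS];

    in = out = 0;
    consumed_sum = 0;
    sem_init(&product, 0, 0);
    sem_init(&buff_space, 0, SLOTS);
    sem_init(&buff_access_prod, 0, 1);
    sem_init(&buff_access_cons, 0, 1);

    for (int i = 0; i < PRODUCERS; i++) pthread_create(&p[i], NULL, producer, NULL);
    for (int i = 0; i < CONSUMERS; i++) pthread_create(&c[i], NULL, consumer, NULL);
    for (int i = 0; i < PRODUCERS; i++) pthread_join(p[i], NULL);
    for (int i = 0; i < CONSUMERS; i++) pthread_join(c[i], NULL);
    return consumed_sum;
}
```

Each producer appends the values 1 to 1000, so if no item is lost or consumed twice the function returns 2 × 500500 = 1001000. On older systems the program may need to be linked with `-pthread`.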

Semaphores implementation

• As mentioned earlier, it is imperative that the wait and signal operations be implemented as atomic primitives

• The essence of the problem is mutual exclusion

– Only one process at a time may manipulate a semaphore with either a wait or signal operation

• Any of the software schemes would do (Peterson’s and Dekker’s algorithms)

• They carry a large amount of processing overhead

• The alternative is to use hardware-supported schemes for mutual exclusion

– Semaphores implemented with the test&set instruction

– Semaphores implemented with disable/enable interrupts

Semaphores implementation with

test&set

    wait(s){
        while (!testset(s.flag)){;} /* do nothing */
        s.count--;
        if (s.count < 0){
            /* place this process in s.queue;
               block this process (must also set s.flag to 0) */
        }
        s.flag = 0;
    }

    signal(s){
        while (!testset(s.flag)){;} /* do nothing */
        s.count++;
        if (s.count <= 0){
            /* remove a process P from s.queue;
               place process P on ready list */
        }
        s.flag = 0;
    }

• The semaphore s is a structure, as explained earlier, but it now includes a new integer component, s.flag

• This involves a form of busy waiting, but the primitives wait and signal are short, so the amount of waiting time involved should be minor

Semaphores implemented using interrupts

    wait(s){
        disable_interrupts();
        s.count--;
        if (s.count < 0){
            /* place this process in s.queue;
               block this process (and enable interrupts) */
        }
        enable_interrupts();
    }

    signal(s){
        disable_interrupts();
        s.count++;
        if (s.count <= 0){
            /* remove a process P from s.queue;
               place process P on ready list */
        }
        enable_interrupts();
    }

• On a single-processor system it is possible to inhibit interrupts for the duration of a wait or signal operation

• The relatively short duration of these operations means that this approach is reasonable

Inter Process Communication

• Inter process data exchange, execution state reporting and results collection are all part of inter process communication; it can be done using shared memory zones, in which case synchronization is required

• Semaphores are primitive (yet powerful) tools to enforce mutual exclusion and to coordinate processes; still, it may be difficult to produce a correct program using semaphores

– The difficulty is caused by the fact that wait and signal operations may be scattered throughout a program, so it is not easy to see their overall effect

Messages

• When a process interacts with another, two main

fundamental requirements must be satisfied:

communication and synchronization

• Message passing provides both of those functions

• Message-passing systems come in many forms; this

section will provide a general view of typical features

found in such systems

• The message-passing function is normally provided in

the form of primitives:

– Send (destination, message)

– Receive (source, message)

Messages design issues

• Synchronization

– Blocking vs. Non-blocking

• Addressing

– Direct

– Indirect

• Message transmission

– Through value

– Through reference

• Format

– Content

– Length

• Fixed

• Variable

• Queuing discipline

– FIFO

– Priority

Synchronization

• When send is executed in a process, either:

– The sending process is blocked until the receiver gets the message, or

– The sending process continues its execution in a non-blocking fashion

• Receive gets executed in a process

– If a message has been previously sent, the message is received

and execution continues

– If there is no waiting message then

• Process can be blocked until a message arrives

• The process continues to execute, abandoning the attempt to receive

• So both the sender and receiver can be blocking or not

Synchronization …

• There are three typical combinations of systems

– Blocking send, blocking receive

• Both the receiver and sender are blocked until the message

is delivered (provides tight sync between processes)

– Non-blocking send, blocking receive

• The sender can continue the execution after sending a

message, the receiver is blocked until message arrives (it is

probably the most useful combination)

– Non-blocking send, non-blocking receive

• Neither party waits

• Typically only one or two combinations are

implemented

Addressing

• Direct addressing

– The send primitive includes the identifier of the receiving process

– Receive can be handled in two ways

• The receiving process explicitly designates the sending process (effective for cooperating processes)

• The receiving process does not specify the sending process (known as implicit addressing); in this case, the source parameter of the receive primitive holds a value returned when the receive operation has completed

• Indirect addressing

– The messages are not sent directly from sender to receiver, but

rather they are sent to a shared data structure consisting of

queues that temporarily can hold messages; those are referred to

as mailboxes.

– Two communicating processes:

• One process sends a message to a mailbox

• The receiving process picks the message up from the mailbox
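A mailbox can be pictured as a small FIFO queue of messages shared by the communicating processes. The sketch below is a single-threaded illustration with hypothetical names (`struct mailbox`, `mbox_send`, `mbox_receive`) and assumed sizes; it shows non-blocking send and receive with fixed-length messages copied by value, and it omits the locking that a real shared mailbox would need.

```c
#include <stdbool.h>
#include <string.h>

#define MBOX_CAPACITY 4    /* messages the mailbox can hold (assumed) */
#define MSG_LEN       32   /* fixed message length (assumed)          */

struct mailbox {
    char slots[MBOX_CAPACITY][MSG_LEN];
    int  head, count;      /* FIFO queue of pending messages */
};

/* Non-blocking send: copies msg into the mailbox; false if it is full. */
bool mbox_send(struct mailbox *mb, const char *msg)
{
    if (mb->count == MBOX_CAPACITY)
        return false;
    int tail = (mb->head + mb->count) % MBOX_CAPACITY;
    strncpy(mb->slots[tail], msg, MSG_LEN - 1);
    mb->slots[tail][MSG_LEN - 1] = '\0';   /* always terminate the copy */
    mb->count++;
    return true;
}

/* Non-blocking receive: copies the oldest message out; false if none waits. */
bool mbox_receive(struct mailbox *mb, char out[MSG_LEN])
{
    if (mb->count == 0)
        return false;
    memcpy(out, mb->slots[mb->head], MSG_LEN);
    mb->head = (mb->head + 1) % MBOX_CAPACITY;
    mb->count--;
    return true;
}
```

The sender never names the receiver: it only needs the mailbox, and any process holding the same mailbox can pick the message up. A blocking receive would wait instead of returning false.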

Indirect process communication

• Indirect addressing decouples the sender from the receiver, allowing for greater flexibility

• Relationship between sender and

receiver:

– One to one

• Private communications link to be set

up between two processes

– Many to one

• Useful for client server interaction;

one process provides services to other

processes; in this case, the mailbox is

known as a port

– One to many

• Message or information is

broadcast to a number of

processes

– Many to many

Message transmission

• Through value

– Requires temporary buffers in the operating system to store the sent

messages until the receiver receives them

– Adds load on the OS

• Through reference

– Doesn’t require temporary buffers

– Faster than the value passing method

– Requires protection of the shared memory

– It is difficult to implement when the sender and receiver are located on

different machines

• Mixed passing

– The sending is done through reference

– If needed (i.e. the receiver tries to modify the received message), the

message gets transmitted again, this time through value

Message format

• Fixed length

– Easy to implement

– Minimizes the processing and

storage overhead

– If large amount of data is to be

sent, that data is placed into a file

and the file is referenced by the

message

• Variable length

– Message is divided in two parts:

header and body

– Requires dynamic memory

allocation, so fragmentation could

occur
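The header/body split of a variable-length message can be sketched as a C structure with a fixed-size header followed by a body whose size is recorded in the header. The field names and the helper `message_create` are assumptions chosen for illustration, not the layout of any particular operating system.

```c
#include <stdlib.h>
#include <string.h>

/* Illustrative layout only: field names are assumptions. */
struct message {
    /* header: fixed size */
    int    type;          /* message type */
    int    source;        /* identifier of the sending process */
    int    destination;   /* identifier of the receiving process */
    size_t length;        /* number of bytes in the body */
    /* body: variable size */
    unsigned char body[]; /* C99 flexible array member */
};

/* Hypothetical helper: allocate a message and copy `length` bytes of
   data into its body. Returns NULL if the allocation fails. */
struct message *message_create(int type, int source, int destination,
                               const void *data, size_t length)
{
    struct message *m = malloc(sizeof *m + length);
    if (m == NULL)
        return NULL;
    m->type = type;
    m->source = source;
    m->destination = destination;
    m->length = length;
    if (length > 0)
        memcpy(m->body, data, length);
    return m;
}
```

Each message needs its own `malloc()` of a different size, which is where the dynamic allocation and possible fragmentation mentioned above come from.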

Queuing discipline

• Simplest queuing discipline is first in first out

• Sometimes this is not enough, since some messages

may have higher priority than others

– Allow the sender to specify the message priority, either

explicitly or based on some sort of message types

– Allow the receiver to inspect the message queue and

select which message to receive next
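A sender-specified priority can be sketched with a small array-based queue that keeps messages sorted by priority and falls back to first in first out among messages of equal priority. The types and function names (`struct msg`, `mq_send`, `mq_receive`) are hypothetical, and a larger number is assumed to mean a more urgent message.

```c
#include <stddef.h>

#define QUEUE_CAPACITY 16

struct msg {
    int priority;   /* assumption: larger value = more urgent */
    int payload;
};

struct msg_queue {
    struct msg slot[QUEUE_CAPACITY];
    size_t count;
};

/* Insert keeping the queue sorted by descending priority; messages of
   equal priority stay in arrival order. Returns 0, or -1 if full. */
int mq_send(struct msg_queue *q, struct msg m)
{
    size_t i;
    if (q->count == QUEUE_CAPACITY)
        return -1;
    i = q->count;
    while (i > 0 && q->slot[i - 1].priority < m.priority) {
        q->slot[i] = q->slot[i - 1];   /* shift less urgent messages back */
        --i;
    }
    q->slot[i] = m;
    q->count++;
    return 0;
}

/* Remove the message at the head of the queue.
   Returns 0, or -1 if the queue is empty. */
int mq_receive(struct msg_queue *q, struct msg *out)
{
    size_t i;
    if (q->count == 0)
        return -1;
    *out = q->slot[0];
    for (i = 1; i < q->count; ++i)
        q->slot[i - 1] = q->slot[i];
    q->count--;
    return 0;
}
```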

Mutual exclusion using messages

/* program mutualexclusion */

void Proc(int i)

{

message msg;

while (true){

receive (mutex, msg);

/* critical section */;

send (mutex, msg);

/* remainder */;

}

}


void main(){

create_mailbox (mutex);

/*initialize the mailbox with a null message*/

send (mutex, null);

init_proc(&proc(1), …);

init_proc(&proc(2), …);

init_proc(&proc(3), …);

/*…*/

init_proc(&proc(N), …);

}

• We assume a blocking receive primitive and a non-blocking send primitive

• mutex is a shared mailbox, which can be used by all processes to send and receive

• The mailbox is initialized to contain a single message with null content

• A process wishing to enter its critical section first attempts to receive a message; it will be

blocked if no message is in the mailbox; once it has acquired the message, it performs its

critical section and then places a message back into the mailbox

• The message functions as a token that is passed from process to process

Readers/Writers problems

• There is a data area shared among a number of processes (e.g. a file,

a block of main memory, etc.)

• There are a number of processes that only read the data area

(readers) or write it (writers)

• Conditions that must be satisfied:

– Any number of readers may simultaneously read the data

– Only one writer at a time may write the data

– If a writer is writing the data, no reader may read it

• Note that this problem is not the same as the producer/consumer

problem: the readers only read the data and the writers only write

it, so the readers never modify the data structures and the writers never

read them (for example to find out where to write).

• Two solutions

– Readers with priority

– Writers with priority

Readers have priority

void readerX(){

while (TRUE){

wait(readcount_sem);

readcount++;

if (readcount==1){

wait(write_sem); /*block the

writers while read in progress*/

}

signal(readcount_sem);

READ_UNIT();

wait(readcount_sem);

readcount--;

if(readcount==0){

signal(write_sem); //unblock writers

}

signal(readcount_sem);

}

}

void writerY(){

while (TRUE){

wait(write_sem);

WRITE_UNIT();

signal(write_sem);

}

}

int readcount = 0;

main(){

create_semaphore(readcount_sem);

create_semaphore(write_sem);

/*initialize the semaphores to 1*/

signal(readcount_sem);

signal(write_sem);

init_proc(&reader1(), …);

init_proc(&reader2(), …);

/*…*/

init_proc(&readerN(), …);

init_proc(&writer1(), …);

init_proc(&writer2(), …);

/*…*/

init_proc(&writerM(), …);

}

• readcount_sem – semaphore that synchronizes the access to the variable that

keeps the number of parallel readers (readcount)

• write_sem – semaphore that controls the access of writers

• As long as a writer is accessing the shared area, no other writer or reader

may access it
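The readers-priority scheme can be exercised with POSIX threads and POSIX semaphores. In this sketch threads stand in for the processes of the slide, which is an assumption made to keep the example short; `run_readers_writers`, `writing`, and `violations` are hypothetical names added only to check that no reader or second writer is ever active while a writer is writing.

```c
#include <pthread.h>
#include <semaphore.h>
#include <stdatomic.h>

#define MAX_THREADS 32

static sem_t readcount_sem, write_sem;
static int readcount;                  /* protected by readcount_sem */
static int shared_value;               /* the shared data area */
static int iterations;
static atomic_int writing, violations; /* checking aids, not in the slide */

static void *reader(void *arg)
{
    int i;
    (void)arg;
    for (i = 0; i < iterations; ++i) {
        sem_wait(&readcount_sem);
        if (++readcount == 1)
            sem_wait(&write_sem);      /* first reader blocks the writers */
        sem_post(&readcount_sem);

        if (atomic_load(&writing))     /* READ_UNIT: no writer may be active */
            atomic_fetch_add(&violations, 1);

        sem_wait(&readcount_sem);
        if (--readcount == 0)
            sem_post(&write_sem);      /* last reader unblocks the writers */
        sem_post(&readcount_sem);
    }
    return NULL;
}

static void *writer(void *arg)
{
    int i;
    (void)arg;
    for (i = 0; i < iterations; ++i) {
        sem_wait(&write_sem);
        if (atomic_exchange(&writing, 1))  /* another writer already active? */
            atomic_fetch_add(&violations, 1);
        shared_value++;                    /* WRITE_UNIT */
        atomic_store(&writing, 0);
        sem_post(&write_sem);
    }
    return NULL;
}

/* Hypothetical driver: returns the final shared value, or -1 if a
   violation was detected or the threads could not be started. */
int run_readers_writers(int nreaders, int nwriters, int iters)
{
    pthread_t tid[MAX_THREADS];
    int n = 0, i, ok = 1;

    if (nreaders + nwriters > MAX_THREADS)
        return -1;
    readcount = 0;
    shared_value = 0;
    iterations = iters;
    atomic_store(&writing, 0);
    atomic_store(&violations, 0);
    if (sem_init(&readcount_sem, 0, 1) != 0 || sem_init(&write_sem, 0, 1) != 0)
        return -1;
    for (i = 0; i < nreaders && ok; ++i)
        ok = pthread_create(&tid[n], NULL, reader, NULL) == 0 && ++n;
    for (i = 0; i < nwriters && ok; ++i)
        ok = pthread_create(&tid[n], NULL, writer, NULL) == 0 && ++n;
    for (i = 0; i < n; ++i)
        pthread_join(tid[i], NULL);
    sem_destroy(&readcount_sem);
    sem_destroy(&write_sem);
    return (ok && atomic_load(&violations) == 0) ? shared_value : -1;
}
```

With two writers and 1000 iterations each, `run_readers_writers(4, 2, 1000)` should return 2000. On older toolchains the program may need to be linked with `-pthread`.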

References

• “Operating Systems”, William Stallings,

ISBN 0-13-032986-X

• “Operating Systems – A Modern Perspective”,

Gary Nutt, ISBN 0-8053-1295-1

Additional slides

Linux Fork()

• fork()

– returns 0 to child

– returns child_pid to parent

• Parent is responsible to look after children

– Failure to do so can create zombies

– pid = wait( &status ) to explicitly wait for the child

to terminate

– signal(SIGCHLD, SIG_IGN) to ignore child termination signals

– May also set SIGCHLD to call a specified

subroutine when a child dies
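Reaping a child so that it does not remain a zombie can be sketched as follows. The helper name `run_child_and_reap` is hypothetical, and `waitpid()` is used here instead of `wait()` so that the parent waits for one specific child.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: fork a child that exits with exit_code, then wait
   for it in the parent. Returns the child's exit code, or -1 on error. */
int run_child_and_reap(int exit_code)
{
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;              /* fork failed */
    if (pid == 0)
        _exit(exit_code);       /* child: terminate immediately */

    /* parent: block until this child terminates and collect its status,
       which removes the child's entry from the process table */
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```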

Fork() Example

/* Example of use of fork system call */

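#include <stdio.h>

#include <stdlib.h> /* exit() */

#include <sys/types.h> /* pid_t */

#include <unistd.h> /* fork(), getpid() */

int main(void)

{

pid_t pid;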

int var = 100;

pid = fork();

if (pid < 0) { /* error occurred */

fprintf(stderr, "Fork failed!\n");

exit(-1);

}

else if (pid==0) { /* child process */

var = 200;

printf("I am the child, pid=%d\n", getpid());

printf("My child variable value is %d\n",var);

}

else { /* parent process */

printf("I am the parent, pid=%d\n", getpid() );

printf("My parent variable value is %d\n",var);

exit(0);

}

}

System V Semaphores

• Generalization of wait and signal primitives

– Several operations can be done simultaneously, and the

increments and decrements can be greater than 1

– The kernel does all the requested operations atomically

• Elements:

– Current value of the semaphore

– Process ID of last process that operates on the semaphore

– Number of processes waiting for the semaphore value to be

greater than its current value

– Number of processes waiting for the semaphore value to be

zero

• Associated with the semaphores are queues of processes

suspended on that semaphore

System V Semaphores

• Key - 4 byte value for the “name”

• Create/access semaphores (you can create them in sets)

– id = semget( KEY, Count, IPC_CREAT | PERM )

– id = semget( KEY, 0, 0 )

• Perm = file permission bits (0666)

• to create only (fails if exists) use

IPC_CREAT|IPC_EXCL|PERM

• Count = # of semaphores to create

• Controlling semaphores

– i = semctl( id, num, cmd, … )

• id – identifier of the semaphore set

• num – number of the semaphore to be processed

• cmd – type of command (GETVAL, SETVAL, GETPID

…)

• … - up to the type of command

• e.g. deleting semaphores

– i = semctl( id, 0, IPC_RMID, 0 )

Semaphore Operations (System V)

• Operations on semaphores

– semop(id, struct sembuf *sops, nsops)

• id – identifier of the semaphore set

• sops – points to the user defined array of sembuf structures that contain the

semaphore operations

• nsops – The number of sembuf structures in the array

– Semaphore structure

struct sembuf {

unsigned short sem_num; /* number of the semaphore within the set */

short sem_op; /* change amount */

short sem_flg; /* wait / don't wait */

} op;

– sem_flg can be 0 or IPC_NOWAIT

• Wait():

– op.sem_op = -1 (decrement)

– res = semop( id, &op, 1 )

• Signal():

– op.sem_op = 1 (increment)

– res = semop( id, &op, 1 )
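The wait and signal recipes above can be wrapped into small helpers. The sketch below assumes Linux, where the program must define `union semun` itself; the `sysv_sem_*` function names are hypothetical, and `IPC_PRIVATE` is used instead of a fixed key so the example does not collide with other semaphore sets.

```c
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/sem.h>

/* On Linux the caller has to define this union (see semctl(2)). */
union semun {
    int val;
    struct semid_ds *buf;
    unsigned short *array;
};

/* Create a set holding one semaphore and give it an initial value.
   Returns the set identifier, or -1 on error. */
int sysv_sem_create(int initial)
{
    union semun arg;
    int id = semget(IPC_PRIVATE, 1, IPC_CREAT | 0600);
    if (id == -1)
        return -1;
    arg.val = initial;
    if (semctl(id, 0, SETVAL, arg) == -1) {
        semctl(id, 0, IPC_RMID);
        return -1;
    }
    return id;
}

/* Apply one operation to semaphore 0 of the set. */
static int sysv_sem_change(int id, short delta)
{
    struct sembuf op;
    op.sem_num = 0;       /* first semaphore in the set */
    op.sem_op  = delta;   /* -1 = wait, +1 = signal */
    op.sem_flg = 0;       /* block if the operation cannot proceed */
    return semop(id, &op, 1);
}

int sysv_sem_wait(int id)   { return sysv_sem_change(id, -1); }
int sysv_sem_signal(int id) { return sysv_sem_change(id, 1); }
int sysv_sem_value(int id)  { return semctl(id, 0, GETVAL); }
int sysv_sem_remove(int id) { return semctl(id, 0, IPC_RMID); }
```

System V semaphores outlive the process that created them, so the set should be removed with `sysv_sem_remove()` (or the `ipcrm` command) once it is no longer needed.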

Linux Semaphore (Posix)

• int sem_init(sem_t *sem, int pshared, unsigned int value)

• int sem_wait(sem_t *sem)

• int sem_post(sem_t *sem)

• int sem_getvalue(sem_t *sem, int *val)

• int sem_destroy(sem_t *sem)

Shared Memory

• Fastest way of inter process communication

• It is a common block of virtual memory shared by

multiple processes

• Reading and writing the shared memory is done with the

same machine instructions used for normal memory

• Permissions (read only or read/write) are

determined per process basis

• Mutual exclusion constraints are not provided by

the shared memory, but they have to be

programmed in the processes that are using it

Shared Memory

• Create/Access Shared Memory

– id = shmget( KEY, Size, IPC_CREAT | PERM )

– id = shmget( KEY, 0, 0 )

• Deleting Shared Memory

– i = shmctl( id, IPC_RMID, 0 )

– Or use ipcrm

• Accessing Shared Memory

– memaddr = shmat( id, 0, 0 )

– memaddr = shmat( id, addr, 0 )

• Addr should be multiple of SHMLBA

– memaddr = shmat( id, 0, SHM_RDONLY )

• System will decide address to place the memory at

– shmdt( memaddr )

• Detach from shared memory

Unix Pipes

• Circular buffer allowing processes to communicate to

each other following producer-consumer model

– It is a first in first out queue, written by one process and read by

another

• When a pipe is created, it is given a fixed size in bytes

– When a process attempts to write into the pipe, the write request

is immediately executed if there is enough room; otherwise, the

process is blocked

– Similarly, a reading process is blocked if the pipe is empty; a POSIX

read() otherwise returns the bytes currently available, which may be fewer than requested

Unix Pipes

• Creates a one-way connection between processes

• Created using pipe() call

– Returns a pair of file descriptors

• pend[0] = read end

• pend[1] = write end

– Use dup() call to convert to stdin/stdout

• Example:

int pend[2]

pipe( pend )

fork()

parent (writer): close(pend[0]); write(pend[1],…); close(pend[1])

child (reader): close(pend[1]); read(pend[0],…); close(pend[0])
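Connecting a pipe end to standard output can be sketched as follows. The slide mentions `dup()`; this example uses the closely related `dup2()`, which lets the target descriptor be named explicitly. The helper `capture_child_stdout` and the text written by the child are hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: run a child whose standard output is redirected
   into a pipe and copy what it wrote into buf (at most n bytes).
   Returns the number of bytes read, or -1 on error. */
ssize_t capture_child_stdout(char *buf, size_t n)
{
    static const char text[] = "hello from child\n";
    int pend[2];
    ssize_t got;
    pid_t pid;

    if (pipe(pend) == -1)
        return -1;
    pid = fork();
    if (pid == -1) {
        close(pend[0]);
        close(pend[1]);
        return -1;
    }
    if (pid == 0) {                        /* child: the writer */
        close(pend[0]);                    /* read end is not needed */
        if (dup2(pend[1], STDOUT_FILENO) == -1)
            _exit(1);
        close(pend[1]);                    /* stdout now refers to the pipe */
        _exit(write(STDOUT_FILENO, text, sizeof text - 1)
              == (ssize_t)(sizeof text - 1) ? 0 : 1);
    }
    close(pend[1]);                        /* parent: the reader */
    got = read(pend[0], buf, n);
    close(pend[0]);
    waitpid(pid, NULL, 0);                 /* reap the child */
    return got;
}
```

After `dup2()`, anything the child writes to standard output, including the output of a program started with one of the `exec` calls, travels through the pipe to the parent.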

Unix Messages

• Send information (type + data) between processes

• Message Structure

– long type

– char text[]

• Functions:

– Create/access

• id = msgget( KEY, IPC_CREAT|IPC_EXCL…)

• id = msgget( KEY, 0 )

– Control

• msgctl( id, IPC_RMID, NULL )

– Send/receive

• msgsnd( id, buf, text_size, flags )

• msgrcv( id, buf, max_size, type, flags )

– Useful Flags

• IPC_NOWAIT

• MSG_NOERROR (truncate long messages to max_size)

Semaphore example

#include <errno.h>
#include <pthread.h>   /* Posix Threads */
#include <semaphore.h> /* Posix Semaphores */
#include <stdarg.h>
#include <stdio.h>
#include <stdlib.h>    /* exit() */
#include <string.h>    /* strerror() */
#include <unistd.h>    /* sleep() */

sem_t s;               /* semaphore shared by main and MyThread */

/* takes free format error message much like printf,
   exits unconditionally */
void errprint(const char *fmt, ...){
    va_list args;
    va_start(args, fmt);
    vfprintf(stderr, fmt, args);
    va_end(args);
    exit(1);
}

void * MyThread(void * arg){
    int i;
    (void) arg;        /* unused */
    printf("Entering MyThread\n");
    for (i = 0; i < 10; ++i){
        sem_wait(&s);
        printf("MyThread gets access to critical section\n");
        fflush(stdout);
        /* some critical section code here */
        sleep(2);
        sem_post(&s);
    }
    pthread_exit( (void *) 0);
    return (void *) NULL;   /* not reached */
}

int main(){
    int i;             /* iteration variable */
    int status;        /* return status of calls */
    pthread_t tid;     /* Thread id */

    /* Semaphore initialization */
    sem_init(&s,       /* the semaphore */
             0,        /* 0 = shared only between the threads of this process */
             1);       /* Initial semaphore value */

    /* Create and run the threads; pthread calls return an error number */
    status = pthread_create(&tid, NULL, MyThread, (void *) NULL);
    if (status != 0){
        errprint("MyThread creation failed with %d (%s)\n",
                 status, strerror(status));
    }

    /* Main continues execution, main is its own thread */
    for (i = 0; i < 15; ++i){
        sem_wait(&s);
        printf("Main gets access to critical section\n");
        /* some critical section code */
        sleep(1);
        sem_post(&s);
    }

    /* Wait for Thread Termination */
    status = pthread_join(tid, NULL);
    if (status != 0){
        errprint("Thread join of MyThread failed with %d (%s)\n",
                 status, strerror(status));
    }
    return 0;
}

Shared memory server

/*--------------------------------------+

| UNIX header files

|

+--------------------------------------*/

#include <sys/types.h>

#include <unistd.h>

#include <fcntl.h>

#include <sys/ipc.h>

#include <sys/stat.h>

#include <sys/shm.h>

/*--------------------------------------+

| ISO/ANSI header files

|

+--------------------------------------*/

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

#include <time.h>

#include <errno.h>

/*--------------------------------------+

| Constants

|

+--------------------------------------*/

/* value to fill memory segment */

#define MEM_CHK_CHAR '*'

/* shared memory key

*/

#define SHM_KEY (key_t)1097

/* size of memory segment (bytes)

*/

#define SHM_SIZE (size_t)256

/* give everyone read/write permission */

/* to shared memory

*/

#define SHM_PERM (S_IRUSR|S_IWUSR|S_IRGRP|S_IWGRP|S_IROTH|S_IWOTH)

/*----------------------------------------+

| Name: main |

| Returns: exit(EXIT_SUCCESS) or |

| exit(EXIT_FAILURE) |

+-----------------------------------------*/

int main(){

/* shared memory segment id

*/

int shMemSegID;

/* shared memory flags

*/

int shmFlags;

/* ptr to shared memory segment

*/

char * shMemSeg;

/*---------------------------------------*/

/* Create shared memory segment

*/

/* Give everyone read/write permissions. */

/*---------------------------------------*/

shmFlags = IPC_CREAT | SHM_PERM;

if ( (shMemSegID = shmget(SHM_KEY, SHM_SIZE, shmFlags)) < 0 ){

perror("SERVER: shmget");

exit(EXIT_FAILURE);

}

/*-------------------------------------------*/

/* Attach the segment to the process's data */

/* space at an address

*/

/* selected by the system.

*/

/*-------------------------------------------*/

shmFlags = 0;

if ( (shMemSeg = shmat(shMemSegID, NULL, shmFlags)) == (void *) -1 ){

perror("SERVER: shmat");

exit(EXIT_FAILURE);

}

/*-------------------------------------------*/

/* Fill the memory segment with MEM_CHK_CHAR */

/* for other processes to read

*/

/*-------------------------------------------*/

memset(shMemSeg, MEM_CHK_CHAR, SHM_SIZE);

/*-----------------------------------------------*/

/* Go to sleep until some other process changes */

/* first character

*/

/* in the shared memory segment.

*/

/*-----------------------------------------------*/

while (*shMemSeg == MEM_CHK_CHAR){

sleep(1);

}

/*------------------------------------------------*/

/* Call shmdt() to detach shared memory segment. */

/*------------------------------------------------*/

if ( shmdt(shMemSeg) < 0 ){

perror("SERVER: shmdt");

exit(EXIT_FAILURE);

}

/*--------------------------------------------------*/

/* Call shmctl to remove shared memory segment. */

/*--------------------------------------------------*/

if (shmctl(shMemSegID, IPC_RMID, NULL) < 0){

perror("SERVER: shmctl");

exit(EXIT_FAILURE);

}

exit(EXIT_SUCCESS);

} /* end of main() */

Shared memory client

/*-------------------------------------+

| UNIX header files

|

+-------------------------------------*/

#include <sys/types.h>

#include <sys/stat.h>

#include <sys/ipc.h>

#include <sys/shm.h>

/*-------------------------------------+

| ISO/ANSI header files

|

+-------------------------------------*/

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

#include <errno.h>

/*-------------------------------------+

| Constants

|

+-------------------------------------*/

/* memory segment character value */

#define MEM_CHK_CHAR '*'

/* shared memory key

*/

#define SHM_KEY (key_t)1097

#define SHM_SIZE (size_t)256

/* size of memory segment (bytes) */

/* give everyone read/write

*/

/* permission to shared memory

*/

#define SHM_PERM (S_IRUSR|S_IWUSR|S_IRGRP|S_IWGRP|S_IROTH|S_IWOTH)

/*------------------------------------+

| Name: main |

| Returns: exit(EXIT_SUCCESS) or |

| exit(EXIT_FAILURE) |

+------------------------------------*/

int main()

{

/* loop counter

*/

int i;

/* shared memory segment id

*/

int shMemSegID;

/* shared memory flags

*/

int shmFlags;

/* ptr to shared memory segment

*/

char * shMemSeg;

/* generic char pointer

*/

char * cptr;

/*-------------------------------------*/

/* Get the shared memory segment for */

/* SHM_KEY, which was set by

*/

/* the shared memory server.

*/

/*-------------------------------------*/

shmFlags = SHM_PERM;

if ( (shMemSegID = shmget(SHM_KEY, SHM_SIZE, shmFlags)) < 0 ){

perror("CLIENT: shmget");

exit(EXIT_FAILURE);

}

/*-----------------------------------------*/

/* Attach the segment to the process's */

/* data space at an address

*/

/* selected by the system.

*/

/*-----------------------------------------*/

shmFlags = 0;

if ( (shMemSeg = shmat(shMemSegID, NULL, shmFlags)) == (void *) -1 ){

perror("CLIENT: shmat");

exit(EXIT_FAILURE);

}

/*-------------------------------------------*/

/* Read the memory segment and verify that */

/* it contains the values

*/

/* MEM_CHK_CHAR and print them to the screen */

/*-------------------------------------------*/

for (i=0, cptr = shMemSeg; i < SHM_SIZE; i++, cptr++){

if ( *cptr != MEM_CHK_CHAR ){

fprintf(stderr, "CLIENT: Memory Segment corrupted!\n");

exit(EXIT_FAILURE);

}

putchar( *cptr );

/* print 40 columns across

*/

if ( ((i+1) % 40) == 0 ){

putchar('\n');

}

}

putchar('\n');

/*--------------------------------------------*/

/* Clear shared memory segment. */

/*--------------------------------------------*/

memset(shMemSeg, '\0', SHM_SIZE);

/*------------------------------------------*/

/* Call shmdt() to detach shared

*/

/* memory segment.

*/

/*------------------------------------------*/

if ( shmdt(shMemSeg) < 0 ){

perror("CLIENT: shmdt");

exit(EXIT_FAILURE);

}

exit(EXIT_SUCCESS);

} /* end of main() */


Pipe example

#include <errno.h>

#include <stdio.h>

#include <stdlib.h> /* exit() */

#include <unistd.h>

void error(char *mesg){

fprintf(stderr, "Error <%s> errno = %d", mesg, errno);

perror(mesg);

exit(1);

}

int main(){

char buffer[80];

int pfid[2]; /* the pipe file descriptors */

int status;

status = pipe(pfid);

if (status == -1){

error("Bad pipe call");

}

status = fork();

/* create 2 processes */

if (status == -1){

error("Bad fork call");

}

else if (status == 0){

/*child process*/

status = read(pfid[0], buffer, sizeof(buffer));

if (status != sizeof(buffer)){

error("Bad read from pipe");

}

printf("Child pid = %d received the message <%s>\n", getpid(), buffer);

status = close(pfid[0]);

/* close the reader end */

}

else {

/*parent process*/

sprintf(buffer, "Pid of the Parent is <%d>", getpid());

status = write(pfid[1], buffer, sizeof(buffer));

if (status != sizeof(buffer)){

error("Bad write to pipe");

}

status = close(pfid[1]);

/* close the writer end */

}

}

Unix messages example

/* testmsg.c - uses the messages in Unix */

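#include <errno.h>

#include <stdio.h>

#include <stdlib.h> /* exit() */

#include <string.h> /* strcmp() */

#include <unistd.h> /* getpid() */

#include <sys/stat.h>

#include <sys/types.h>

#include <sys/ipc.h>

#include <sys/msg.h>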

void error(char *mesg)

{

fprintf(stderr, "Error <%s> errno = %d", mesg, errno);

perror(mesg);

exit(1);

}

void show_usage(char *mesg, char *prog_name){

fprintf(stderr, "Error <%s>\n", mesg);

fprintf(stderr, "Usage is\n\t%s [\"-r\" | \"-w\"]\n\t"

" r for reader, w for writer\n", prog_name);

}

/* read in up to n bytes from stdin into buf */

void read_buffer( char *buf, int n)

{

int c, done = 0, i;

for (i = 0; !done && i < n

&& !feof(stdin) && !ferror(stdin); ++i){

c = getchar();

if (c != EOF){

buf[i] = (char) c;

done = buf[i] == '\n'; /* newline? */

}

}

if (ferror(stdin)){

perror("Bad read from stdin");

}

else {

buf[i > 0 ? i - 1 : 0] = '\0';

/* use ASCII NULL terminator */

}

}

int main(int argc, char *argv[])

{

key_t k;

int msgqid;

int status;

struct {

long mtype; /* what message type ? */

struct {

long pid; /* process id of sender */

char str[80]; /* some string to send */

} data;

} msg_rec;

/* get the key of the message queue */

k = ftok(argv[0], 0);

printf ("Key Value is %d\n", k);

if (argc != 2){

show_usage("Wrong Number of Parameters", argv[0]);

exit(1);

}

else if (!strcmp(argv[1],"-r")){

msgqid = msgget(k, S_IRUSR);

/* Open read only, not creating so ignore perms */

if (msgqid < 0){

printf("Error creating the message\n");

fprintf(stderr, "Bad msgget %ld for read\n", (long) k);

error("Bad msgget create and read perms");

}

status = msgrcv( msgqid, /* which msg queue */

(void *) &msg_rec, /* buffer to receive into */

sizeof(msg_rec.data), /* max size of the data part */

0, /* Message Type 0 = wild card */

0); /* Flags (Blocking) */

/* if msg type is > 0 then looks for exact match,

if msg type < 0 then will take the first message

satisfying -(msg.type) >= incoming_msg.type */

if (status < 0){

perror("Bad msgrcv");

}

printf("Received Message data.pid = %ld, str=<%s>\n",

msg_rec.data.pid, msg_rec.data.str);

}

else if (!strcmp(argv[1],"-w")){

/* Create a message queue with owner level

read/write permissions */

msgqid = msgget(k, IPC_CREAT|S_IWUSR|S_IRUSR);

/*test to see if the message queue has been succesfully created*/

if (msgqid < 0){

fprintf(stderr, "Bad msgget %ld create for read and write\n", (long) k);

error("Bad msgget create, read and write");

}

/*initialize the message type*/

msg_rec.mtype = 99;

/* encode the pid in the msg */

msg_rec.data.pid = getpid();

printf("Enter your message here>");

fflush(stdout);

/* user inputs the string to send */

read_buffer(msg_rec.data.str, sizeof(msg_rec.data.str));

status = msgsnd( msgqid, /* which msg queue */

(void *) &msg_rec, /* data to send */

sizeof(msg_rec.data), /* how much data to send */

0); /* Flags (Blocking Send) */

if (status < 0){

error("Bad msgsnd");

}

/* remove the msg queue */

status = msgctl( msgqid, IPC_RMID, 0);

if (status < 0){

error("Bad remove of message queue");

}

}

}