Bayesian Learning, Cont’d

Administrivia

•

Various homework bugs:

•

Due: Oct 12 (Tues), not Oct 9 (Sat)

•

Problem 3 should read:

•

(duh)

•

(some) info on naive Bayes in Sec. 4.3 of text

Administrivia

•

Another bug in last time’s lecture:

•

Multivariate Gaussian should look like:

5 minutes of math...

•

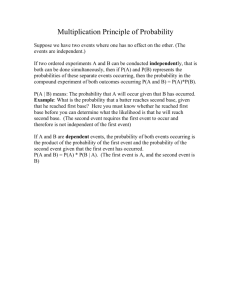

Joint probabilities

•

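Given d different random vars, $X_1, \dots, X_d$

•

The “joint” probability of them taking on the simultaneous values $x_1, \dots, x_d$ is given by

•

$\Pr[X_1 = x_1, X_2 = x_2, \dots, X_d = x_d]$

•

Or, for shorthand, $\Pr[x_1, x_2, \dots, x_d]$

•

Closely related to the “joint PDF”, $f(x_1, x_2, \dots, x_d)$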

5 minutes of math...

•

Independence:

•

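Two random variables $X_1$ and $X_2$ are statistically independent iff: $f(x_1, x_2) = f(x_1)\, f(x_2)$

•

Or, equivalently (usually for discrete RVs): $\Pr[x_1, x_2] = \Pr[x_1]\, \Pr[x_2]$

•

For multivariate RVs: $f(x_1, \dots, x_d) = \prod_{i=1}^{d} f(x_i)$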
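
As a rough illustration of the discrete form of the independence test, the sketch below checks whether a small joint probability table factors into the product of its marginals. The two tables and the helper names `marginals` and `is_independent` are made up for this example.

```python
from itertools import product

def marginals(joint):
    """Row and column marginals of a joint table given as a list of rows."""
    p_row = [sum(row) for row in joint]
    p_col = [sum(col) for col in zip(*joint)]
    return p_row, p_col

def is_independent(joint, tol=1e-9):
    """True iff Pr[a, b] == Pr[a] * Pr[b] for every cell (up to tol)."""
    p_row, p_col = marginals(joint)
    return all(
        abs(joint[i][j] - p_row[i] * p_col[j]) <= tol
        for i, j in product(range(len(p_row)), range(len(p_col)))
    )

# Hypothetical joint tables over two binary variables.
independent_table = [[0.12, 0.28],
                     [0.18, 0.42]]   # outer product of (0.4, 0.6) and (0.3, 0.7)
dependent_table = [[0.40, 0.10],
                   [0.10, 0.40]]     # mass concentrated on the diagonal

print(is_independent(independent_table))
print(is_independent(dependent_table))
```

The same check carries over to continuous PDFs in spirit: the joint must equal the product of the marginals everywhere, not just at a few points.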

Exercise

•

Suppose you’re given the PDF:

•

Where z is a normalizing constant.

•

What must z be to make this a legitimate PDF?

•

Are the two variables independent? Why or why not?

•

What about the PDF:

Parameterizing PDFs

•

Given training data, [X, Y], w/ discrete labels Y

•

Break data out into sets, one per class label

•

Want to come up with models, one per class

•

Suppose the individual f()s are Gaussian; need the params μ and σ

•

How do you get the params?

•

Now, what if the f()s are something really funky you’ve never seen before in your life, with parameters Θ?

Maximum likelihood

•

Principle of maximum likelihood:

•

Pick the parameters that make the data as probable (or, in general “likely”) as possible

•

Regard the probability function as a func of two variables: data and parameters:

•

Function L is the “likelihood function”

•

Want to pick the Θ that maximizes L

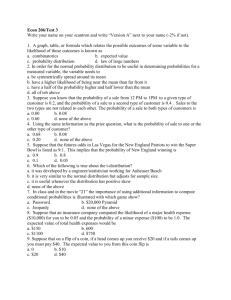

Example

•

Consider the exponential PDF:

•

Can think of this as either a function of x or τ

Exponential as fn of x

Exponential as a fn of τ
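
A small sketch of the two views, assuming the mean-parameterized form f(x; τ) = (1/τ) exp(−x/τ) for x ≥ 0. That parameterization is an assumption made for this example. The function is the same in both views; what changes is which argument is held fixed.

```python
import math

def exp_pdf(x, tau):
    """Exponential density, assuming the form (1/tau) * exp(-x/tau), x >= 0."""
    if tau <= 0:
        raise ValueError("tau must be positive")
    if x < 0:
        return 0.0
    return math.exp(-x / tau) / tau

# View 1: hold tau fixed, vary x -> an ordinary PDF over x.
tau = 2.0
as_fn_of_x = [(x, exp_pdf(x, tau)) for x in (0.0, 1.0, 2.0, 4.0)]

# View 2: hold the observation x fixed, vary tau -> a likelihood over tau.
x_obs = 2.0
taus = [0.5 + 0.1 * k for k in range(60)]        # grid over 0.5 .. 6.4
as_fn_of_tau = [(t, exp_pdf(x_obs, t)) for t in taus]

best_tau = max(as_fn_of_tau, key=lambda pair: pair[1])[0]
print(as_fn_of_x)
print(best_tau)   # for a single observation, the peak sits near tau = x_obs
```

Under this assumed form the curve over x decays monotonically from 1/τ, while the curve over τ rises to a single peak and then falls off; only the first integrates to 1.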

Max likelihood params

•

So, for a fixed set of data, X, want the parameter Θ that maximizes L

•

Hold X constant, optimize Θ

•

How?

•

More important: f() is usually a function of a single data point (possibly vector), but L is a func. of a set of data

•

How do you extend f() to a set of data?

IID Samples

•

In supervised learning, we usually assume that data points are sampled independently and from the same distribution

•

IID assumption: data are independent and identically distributed

•

⇒ joint PDF can be written as product of individual (marginal) PDFs: $f(X_1, \dots, X_N; \Theta) = \prod_{i=1}^{N} f(X_i; \Theta)$
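
To make the product form concrete, here is a minimal sketch that builds the joint density of an IID sample from a per-point density. The exponential model (in the assumed form (1/τ) exp(−x/τ)) and the data values are illustrative only; any single-point f would do.

```python
import math

def exp_pdf(x, tau):
    """Per-point density, assumed form (1/tau) * exp(-x/tau) for x >= 0."""
    return math.exp(-x / tau) / tau if x >= 0 else 0.0

def joint_pdf(data, tau):
    """Joint density of an IID sample: product of the per-point densities."""
    return math.prod(exp_pdf(x, tau) for x in data)

def log_likelihood(data, tau):
    """Log of the joint density: the product turns into a sum of logs."""
    return sum(math.log(exp_pdf(x, tau)) for x in data)

data = [0.5, 1.2, 3.1, 0.7]   # made-up sample
tau = 1.5
print(joint_pdf(data, tau))
print(math.exp(log_likelihood(data, tau)))   # same value, up to rounding
```

Working with the sum of logs is usually preferable in practice, since a product of many small densities can underflow for large samples.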

The max likelihood recipe

•

Start with IID data

•

Assume model for individual data point, f(X; Θ)

•

Construct joint likelihood function (PDF): $L(\Theta) = \prod_{i=1}^{N} f(X_i; \Theta)$

•

Find the params Θ that maximize L

•

(If you’re lucky): Differentiate L w.r.t. Θ, set = 0, and solve

•

Repeat for each class
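
The recipe can be sketched end to end for the exponential model, again assuming the form f(x; τ) = (1/τ) exp(−x/τ). Under that assumption the log-likelihood is −N log τ − (Σ x_i)/τ; differentiating w.r.t. τ and setting the result to zero gives τ = (Σ x_i)/N, the sample mean. The code checks this closed form against a brute-force grid search. The class labels and data are invented for illustration.

```python
import math

def log_likelihood(data, tau):
    """Log-likelihood of IID data under the assumed exponential model."""
    return -len(data) * math.log(tau) - sum(data) / tau

def mle_tau(data):
    """Closed-form maximizer under this model: the sample mean."""
    return sum(data) / len(data)

def grid_search_tau(data, lo=0.05, hi=10.0, steps=2000):
    """Brute-force check: best tau on an evenly spaced grid."""
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return max(grid, key=lambda t: log_likelihood(data, t))

# Made-up training data, already broken out by class label.
data_by_class = {
    "a": [0.4, 1.1, 0.9, 2.0, 0.6],
    "b": [3.2, 5.1, 2.7, 4.4],
}

# "Repeat for each class": one fitted parameter per class.
fitted = {label: mle_tau(xs) for label, xs in data_by_class.items()}
for label, xs in data_by_class.items():
    print(label, fitted[label], grid_search_tau(xs))
```

When no closed form exists, a numerical search like the grid above (or a proper optimizer) takes the place of the "differentiate and solve" step.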

Exercise

•

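Find the maximum likelihood estimator of μ for the univariate Gaussian: $f(x; \mu, \sigma) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$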

•

Find the maximum likelihood estimator of β for the degenerate gamma distribution:

•

Hint: consider the log of the likelihood fns in both cases

Putting the parts together

[ X , Y ]

5 minutes of math...

•

Marginal probabilities

•

If you have a joint PDF:

•

... and want to know about the probability of just one RV (regardless of what happens to the others)

•

Marginal PDF of one variable or the other:
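
A marginal is obtained by integrating the joint over the variable you do not care about; for discrete variables the integral becomes a sum. A minimal sketch with a made-up joint table over coarse height (H) and weight (W) bins, where the bin labels and probabilities are hypothetical:

```python
# Hypothetical joint table Pr[H = h, W = w] over coarse bins.
joint = {
    ("short", "light"): 0.30, ("short", "heavy"): 0.10,
    ("tall",  "light"): 0.15, ("tall",  "heavy"): 0.45,
}

def marginal(joint, axis):
    """Sum the joint over the other variable; axis 0 keeps H, axis 1 keeps W."""
    out = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], 0.0) + p
    return out

p_h = marginal(joint, 0)
p_w = marginal(joint, 1)
print(p_h)
print(p_w)
```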

5 minutes of math...

•

Conditional probabilities

•

Suppose you have a joint PDF, f(H, W)

•

Now you get to see one of the values, e.g., H = “183cm”

•

What’s your probability estimate of W, given this new knowledge?
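
A sketch of the same question for a discrete table: conditioning on an observed H keeps only the matching entries of the joint and renormalizes by the marginal Pr[H = h]. The bins and numbers are invented for illustration; the continuous case replaces the table with f(H, W) and the sum with an integral.

```python
# Hypothetical joint table Pr[H = h, W = w] over coarse bins.
joint = {
    ("short", "light"): 0.30, ("short", "heavy"): 0.10,
    ("tall",  "light"): 0.15, ("tall",  "heavy"): 0.45,
}

def conditional_w_given_h(joint, h):
    """Pr[W = w | H = h] = Pr[H = h, W = w] / Pr[H = h]."""
    p_h = sum(p for (hh, _), p in joint.items() if hh == h)
    if p_h == 0:
        raise ValueError("cannot condition on a zero-probability value")
    return {w: p / p_h for (hh, w), p in joint.items() if hh == h}

posterior = conditional_w_given_h(joint, "tall")
print(posterior)
```

Before the observation, the estimate for W is just its marginal; after seeing H, the mass shifts toward the weights that co-occur with that height.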

Everything’s random...

•

Basic Bayesian viewpoint:

•

Treat (almost) everything as a random variable

•

Data/independent var: X vector

•

Class/dependent var: Y

•

Parameters: Θ

•

E.g., mean, variance, correlations, multinomial params, etc.

•

Use Bayes’ Rule to assess probabilities of classes
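
As a rough sketch of how these pieces combine, the code below fits one univariate Gaussian per class by maximum likelihood, estimates class priors from label frequencies, and applies Bayes' rule, Pr[y | x] ∝ f(x | y) Pr[y], to score a new point. The data, the two class names, and the choice of a single Gaussian feature are all assumptions made for illustration.

```python
import math

def fit_gaussian(xs):
    """ML estimates of mean and standard deviation (divides by N, not N - 1)."""
    mu = sum(xs) / len(xs)
    var = sum((x - mu) ** 2 for x in xs) / len(xs)
    return mu, math.sqrt(var)

def gaussian_pdf(x, mu, sigma):
    """Univariate Gaussian density at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)

def class_posteriors(x, params, priors):
    """Bayes' rule: Pr[y | x] = f(x | y) Pr[y] / sum over all classes."""
    scores = {y: gaussian_pdf(x, *params[y]) * priors[y] for y in params}
    total = sum(scores.values())
    return {y: s / total for y, s in scores.items()}

# Made-up one-dimensional training data, split by class label.
data_by_class = {
    "neg": [1.0, 1.5, 2.0, 2.5, 3.0],
    "pos": [4.0, 5.0, 6.0],
}
n_total = sum(len(xs) for xs in data_by_class.values())
params = {y: fit_gaussian(xs) for y, xs in data_by_class.items()}
priors = {y: len(xs) / n_total for y, xs in data_by_class.items()}

print(class_posteriors(1.8, params, priors))
print(class_posteriors(5.2, params, priors))
```

A fuller Bayesian treatment would also put a distribution over the parameters Θ themselves; this sketch plugs in point estimates for simplicity.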