
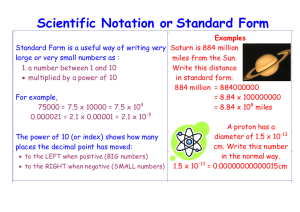


EFFICIENT COMPUTATION OF DIVERSE QUERY RESULTS
Presented by: Karina Koifman
Course: DB Seminar

Example: Yahoo! Autos
Maybe a better retrieval?

Introduction
The article addresses the problem of efficiently computing diverse query results in online shopping applications.

The Goal
The goal of diverse query answering is to return a representative set of top-k answers drawn from all the tuples that satisfy the user's selection condition.

The Problem
• A user issues a query for a product.
• Only the most relevant answers are shown.
• Many duplicates.

Agenda
• Existing solutions
• Definition of diversity
• Impossibility results for diversity
• Query processing technique

Existing Solutions
Existing solutions are either inefficient or do not work in all situations.
• Obtaining all the query results and then picking a diverse subset from them does not scale to large data sets.
• Web search engines first retrieve c × k results and then pick a diverse subset from them. This is more efficient than the previous method, but when there are many duplicate listings of the same product for sale, it is still inefficient and does not guarantee diversity.
• Issuing multiple queries to obtain diverse results:
  The good: diversity.
  The bad: it hurts performance, and some of the queries return empty results (*e.g., there are no Honda Accord convertibles).

Diversity Ordering
A diversity ordering of a relation R with attributes A, denoted by $\prec_R$, is a total ordering of the attributes in A.
Example: Make ≺ Model ≺ Color ≺ Year ≺ Description ≺ Id

The DB example

| Id | Make | Model | Color | Year | Description |
|---|---|---|---|---|---|
| 1 | Honda | Civic | Green | 2007 | Low miles |
| 2 | Honda | Civic | Blue | 2007 | Low miles |
| 3 | Honda | Civic | Red | 2007 | Low miles |
| 4 | Honda | Civic | Black | 2007 | Low miles |
| 5 | Honda | Civic | Black | 2006 | Low miles |
| 6 | Honda | Accord | Blue | 2007 | Best Price |
| 7 | Honda | Accord | Red | 2006 | Good miles |
| 8 | Honda | Odyssey | Green | 2007 | Rare |
| 9 | Honda | Odyssey | Green | 2006 | Good miles |
| 10 | Honda | CRV | Red | 2007 | Fun Car |
| 11 | Honda | CRV | Orange | 2006 | Good miles |
| 12 | Toyota | Prius | Tan | 2007 | Low miles |
| 13 | Toyota | Corolla | Black | 2007 | Low miles |
| 14 | Toyota | Tercel | Blue | 2007 | Low miles |
| 15 | Toyota | Camry | Blue | 2007 | Low miles |

Similarity – SIM(x, y)
• x = 1 Honda Civic Green 2007 Low miles, y = 2 Honda Civic Blue 2007 Low miles: SIM(x, y) = 1
• x = 1 Honda Civic Green 2007 Low miles, y = 12 Toyota Prius Tan 2007 Low miles: SIM(x, y) = 0
Goal: find a result set S that minimizes $\sum_{x, y \in S} SIM(x, y)$.

Example – Similarity
Result set A:
• 1 Honda Civic Green 2007 Low miles
• 6 Honda Accord Blue 2007 Best Price
• 8 Honda Odyssey Green 2007 Rare
Result set B:
• 1 Honda Civic Green 2007 Low miles
• 2 Honda Civic Blue 2007 Low miles
• 12 Toyota Prius Tan 2007 Low miles

Prefix
• 1 Honda Civic Green 2007 Low miles
• 2 Honda Civic Blue 2007 Low miles
• 8 Honda Odyssey Green 2007 Rare and 9 Honda Odyssey Green 2006 Good miles (both extend the prefix Honda.Odyssey.Green)

A few more definitions
Given relation R and query Q, let $\mathcal{K}_{R,Q}$ be the set of all subsets of $RES(R,Q)$ of size k, and let $maxval = \max_{T \in \mathcal{K}_{R,Q}} Score(T)$, where $Score(T)$ is the sum of the scores of the tuples in T.

Impossibility Results
Intuition: the IR score of an item depends only on the item itself (and possibly on statistics from the entire corpus), but diversity depends on the other items in the query result set.
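To make the similarity objective concrete, here is a minimal sketch that scores result sets A and B from the "Example – Similarity" slide. It assumes (our reading of the definition, not code from the article) that SIM under a prefix is 1 when both tuples extend that prefix and agree on the next attribute of the diversity ordering, and 0 otherwise; with the empty prefix this compares Make, which reproduces the two SIM values on the slide. The sum counts each unordered pair of distinct tuples once, which has the same minimizer as the slide's sum. The names `CARS`, `sim` and `total_sim` are hypothetical.

```python
from itertools import combinations

# A few tuples from the example table, keyed by Id.
# Attribute order follows the diversity ordering: Make, Model, Color, Year, Description.
CARS = {
    1: ("Honda", "Civic", "Green", 2007, "Low miles"),
    2: ("Honda", "Civic", "Blue", 2007, "Low miles"),
    6: ("Honda", "Accord", "Blue", 2007, "Best Price"),
    8: ("Honda", "Odyssey", "Green", 2007, "Rare"),
    12: ("Toyota", "Prius", "Tan", 2007, "Low miles"),
}


def sim(x, y, prefix=()):
    """Assumed SIM under a prefix: 1 if both tuples extend `prefix` and agree
    on the next attribute of the ordering, otherwise 0."""
    n = len(prefix)
    if x[:n] != prefix or y[:n] != prefix:
        return 0
    return 1 if x[n] == y[n] else 0


def total_sim(ids, prefix=()):
    """Sum SIM over each unordered pair of distinct tuples in the result set."""
    return sum(sim(CARS[a], CARS[b], prefix) for a, b in combinations(ids, 2))


print(sim(CARS[1], CARS[2]), sim(CARS[1], CARS[12]))  # 1 0
print(total_sim([1, 6, 8]), total_sim([1, 2, 12]))  # 3 1
print(total_sim([1, 6, 8], ("Honda",)), total_sim([1, 2, 12], ("Honda",)))  # 0 1
```

Set B scores better at the Make level (1 versus 3), while set A has no repeated Model under the Honda prefix (0 versus 1).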
Inverted Lists
Query: Honda cars
• Honda: d1 d4 d8 d10 d17
• Car: d4 d10 d11 d17 d20
Merged inverted list: d4 d10 d17

Impossibility Results
Each item in an inverted list has a score, which can be either a global score (e.g., PageRank) or a value/keyword-dependent score (e.g., TF-IDF). The items in each list are usually ordered by score so that top-k queries can be answered efficiently. Assuming a monotonic scoring function f(), which is a standard assumption in traditional IR systems, the article proves that any such approach is either not diverse or inefficient/infeasible.

The car indexing example

One-pass Algorithm
Let's say Q looks for descriptions containing 'Low', with k = 3.
Initialization:
• Pick the first k results.
While diversity can be improved:
• Go to the next option and check whether it is better; if so, prune.
Example path: Honda.Civic.Green.2007.'Low miles'

We start with two Civics; since we need only one more result, we pick the next Civic.
Then we look for another result at the Model level (Accord). There is none: the Accords do not contain 'Low' in their descriptions, and neither does any other model at that level.
Then we look for another result at the Make level and prune. This is maximally diverse, so we stop here.
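The walk-through above ends with a maximally diverse answer for Q = 'Low', k = 3. As a reference point, here is a hypothetical sketch that is NOT the OnePass algorithm: it materializes all matching tuples (the naive approach the slides say does not scale), builds the tree induced by Make ≺ Model ≺ Color ≺ Year ≺ Description ≺ Id, and at every node splits the k slots among the children as evenly as their match counts allow. It assumes that, for unscored results, this even split is what minimizing SIM at every prefix amounts to; ties (two Hondas and one Toyota versus one Honda and two Toyotas) are broken by the order in which values first appear. The names `build_tree`, `count_leaves` and `pick_diverse` are hypothetical.

```python
# Example table: (Id, Make, Model, Color, Year, Description)
CARS = [
    (1, "Honda", "Civic", "Green", 2007, "Low miles"),
    (2, "Honda", "Civic", "Blue", 2007, "Low miles"),
    (3, "Honda", "Civic", "Red", 2007, "Low miles"),
    (4, "Honda", "Civic", "Black", 2007, "Low miles"),
    (5, "Honda", "Civic", "Black", 2006, "Low miles"),
    (6, "Honda", "Accord", "Blue", 2007, "Best Price"),
    (7, "Honda", "Accord", "Red", 2006, "Good miles"),
    (8, "Honda", "Odyssey", "Green", 2007, "Rare"),
    (9, "Honda", "Odyssey", "Green", 2006, "Good miles"),
    (10, "Honda", "CRV", "Red", 2007, "Fun Car"),
    (11, "Honda", "CRV", "Orange", 2006, "Good miles"),
    (12, "Toyota", "Prius", "Tan", 2007, "Low miles"),
    (13, "Toyota", "Corolla", "Black", 2007, "Low miles"),
    (14, "Toyota", "Tercel", "Blue", 2007, "Low miles"),
    (15, "Toyota", "Camry", "Blue", 2007, "Low miles"),
]


def build_tree(rows):
    """Nest rows by Make, Model, Color, Year, Description (dicts keep insertion
    order); the innermost dict maps each tuple Id to None (a leaf)."""
    root = {}
    for rid, *attrs in rows:
        node = root
        for value in attrs:
            node = node.setdefault(value, {})
        node[rid] = None
    return root


def count_leaves(node):
    """Number of matching tuples below a node (a leaf counts as one)."""
    return 1 if node is None else sum(count_leaves(c) for c in node.values())


def pick_diverse(node, k):
    """Hand out k slots round-robin over the children, never giving a child
    more slots than it has matching tuples, then recurse into each child."""
    children = list(node.items())
    caps = [count_leaves(child) for _, child in children]
    alloc = [0] * len(children)
    remaining = min(k, sum(caps))
    while remaining:
        for i, cap in enumerate(caps):
            if remaining and alloc[i] < cap:
                alloc[i] += 1
                remaining -= 1
    picked = []
    for (key, child), n in zip(children, alloc):
        if n and child is None:
            picked.append(key)  # leaf: key is the tuple Id
        elif n:
            picked.extend(pick_diverse(child, n))
    return picked


low = [row for row in CARS if "Low" in row[5]]  # Q: description contains 'Low'
print(pick_diverse(build_tree(low), 3))  # [1, 2, 12]
```

For k = 3 it returns Ids 1, 2 and 12 (a green Civic, a blue Civic and the Prius): two Civics and one Toyota, the same shape as the walk-through's final answer.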
If we had a Ford, we would continue (e.g., Ford.Focus.Black.07.'Low miles').

Scored One-pass Algorithm
Give each car a score; the query then takes this score as a parameter. minScore is the smallest score in the current result set. The next ID is chosen as the smallest ID such that score(id) ≥ root.minScore, and the algorithm proceeds as before.

Probing Algorithm
Main idea: go over all the cars as if they were laid out on an axis.
[Figure: probing steps for K=3, K=2, K=1]
Advantage of bidirectional exploration: "Honda" has only one child that matches the query (Civic), so we find it quickly without exploring every option. Each time we add a node to the diverse solution, we do not have to prune it later, unlike in the OnePass algorithm.

WAND algorithm
WAND is an efficient method for obtaining top-k lists of scored results without explicitly merging the full inverted lists.
AND(X1, X2, ..., Xk) ≡ WAND(X1, 1, X2, 1, ..., Xk, 1, k)
OR(X1, X2, ..., Xk) ≡ WAND(X1, 1, X2, 1, ..., Xk, 1, 1)
To obtain the k best results, the operator uses upper bounds (UB) on each list's maximum contribution and a temporary threshold θ:
WAND(X1, UB1, X2, UB2, ..., Xk, UBk, θ)

Scored Probing Algorithm
We use the WAND algorithm to obtain the top-k list. The next step is to mark all nodes that could be added as MIDDLE. We also maintain a heap that tracks the node with the minimum child. At each step we move nodes from tentative to useful.

Experiments
• MultQ: rewriting the query as multiple queries and merging their results.
• Naïve: retrieving all the results of a query.
• Basic: just the first k answers, without diversity.
• OnePass, Probe: the proposed algorithms.
U = unscored, S = scored.

Conclusions
• Formalized diversity in structured search and proposed inverted-list algorithms.
• The experiments showed that the algorithms are scalable and efficient.
• In particular, diversity can be implemented with little additional overhead compared to traditional approaches.

Extension of the algorithm
Assign higher weights to Hondas and Toyotas than to Teslas, so that the diverse results contain more Hondas and Toyotas.

Questions?
Thank You!
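As a supplement to the WAND slide: in Broder et al.'s formulation, WAND(X1, w1, ..., Xk, wk, θ) is true for a document exactly when the weights of the terms present in it sum to at least θ, which is why unit weights with θ = k give AND and θ = 1 gives OR. The minimal sketch below covers only this Boolean predicate, applied to the "Honda cars" inverted lists; the pivot-based evaluation that uses the upper bounds UBi to skip through the lists is not shown. The names `wand`, `wand_and`, `wand_or` and `evaluate` are hypothetical.

```python
from itertools import product


def wand(present, weights, theta):
    """Weighted AND: True iff the weights of the present terms sum to >= theta."""
    return sum(w for p, w in zip(present, weights) if p) >= theta


def wand_and(present):
    # AND(X1, ..., Xk) == WAND(X1, 1, ..., Xk, 1, k)
    return wand(present, [1] * len(present), len(present))


def wand_or(present):
    # OR(X1, ..., Xk) == WAND(X1, 1, ..., Xk, 1, 1)
    return wand(present, [1] * len(present), 1)


# Inverted lists from the "Honda cars" slide.
LISTS = {
    "Honda": ["d1", "d4", "d8", "d10", "d17"],
    "Car": ["d4", "d10", "d11", "d17", "d20"],
}


def evaluate(lists, op):
    """Apply a Boolean operator to every document that appears in any list."""
    docs = sorted({d for ds in lists.values() for d in ds}, key=lambda d: int(d[1:]))
    sets = [set(ds) for ds in lists.values()]
    return [d for d in docs if op([d in s for s in sets])]


# Sanity check: the WAND encodings agree with plain Boolean AND/OR for k = 3.
for bits in product([False, True], repeat=3):
    assert wand_and(list(bits)) == all(bits)
    assert wand_or(list(bits)) == any(bits)

print(evaluate(LISTS, wand_and))  # ['d4', 'd10', 'd17']
print(evaluate(LISTS, wand_or))
```

The AND case reproduces the merged inverted list d4 d10 d17 from the slide.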