A Broad Overview of Key Statistical Concepts

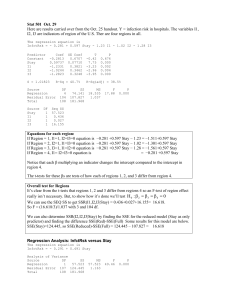

Analysis of variance approach to regression analysis

… an alternative approach to testing for a linear association

[Regression plot: y1 versus x, with an observation (y-i), the mean line (y-bar), and the fitted line (y-hat) marked]

y1 = 54.4758 - 0.764016 x; S = 7.81137, R-Sq = 6.5 %, R-Sq(adj) = 3.2 %

$$\sum_{i=1}^{n} (y_i - \bar{y})^2 = 1827.6 \qquad \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = 1708.5 \qquad \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 = 119.1$$

[Regression plot: y2 versus x, with an observation (y-i), the mean line (y-bar), and the fitted line (y-hat) marked]

y2 = 75.5458 - 5.76402 x; S = 7.81137, R-Sq = 79.9 %, R-Sq(adj) = 79.2 %

$$\sum_{i=1}^{n} (y_i - \bar{y})^2 = 8487.8 \qquad \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = 1708.5 \qquad \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 = 6779.3$$

Basic idea … well, kind of …

• Break down the variation in Y (“total sum of squares”) into two components:
– a component that is due to the change in X (“regression sum of squares”)
– a component that is just due to random error (“error sum of squares”)
• If the regression sum of squares is much greater than the error sum of squares, conclude that there is a linear association.

Winning times (in seconds) in Men’s 200 meter Olympic sprints, 1900-1996. Are men getting faster?

| Row | Year | Men200m |
|-----|------|---------|
| 1 | 1900 | 22.20 |
| 2 | 1904 | 21.60 |
| 3 | 1908 | 22.60 |
| 4 | 1912 | 21.70 |
| 5 | 1920 | 22.00 |
| 6 | 1924 | 21.60 |
| 7 | 1928 | 21.80 |
| 8 | 1932 | 21.20 |
| 9 | 1936 | 20.70 |
| 10 | 1948 | 21.10 |
| 11 | 1952 | 20.70 |
| 12 | 1956 | 20.60 |
| 13 | 1960 | 20.50 |
| 14 | 1964 | 20.30 |
| 15 | 1968 | 19.83 |
| 16 | 1972 | 20.00 |
| 17 | 1976 | 20.23 |
| 18 | 1980 | 20.19 |
| 19 | 1984 | 19.80 |
| 20 | 1988 | 19.75 |
| 21 | 1992 | 20.01 |
| 22 | 1996 | 19.32 |

[Regression plot: Men200m versus Year]

Men200m = 76.1534 - 0.0283833 Year; S = 0.298134, R-Sq = 89.9 %, R-Sq(adj) = 89.4 %

Analysis of Variance Table

| Source | DF | SS | MS | F | P |
|--------|----|----|----|---|---|
| Regression | 1 | 15.796 | 15.796 | 177.7 | 0.000 |
| Residual Error | 20 | 1.778 | 0.089 | | |
| Total | 21 | 17.574 | | | |

The regression sum of squares, 15.796, accounts for most of the total sum of squares, 17.574. There appears to be a significant linear association between year and winning times … let’s formalize it.

Each observation’s deviation from the mean splits into a part explained by the fitted line and a residual part:

$$Y_i - \bar{Y} = (\hat{Y}_i - \bar{Y}) + (Y_i - \hat{Y}_i)$$

The cool thing is that the decomposition holds for the sum of the squared deviations, too. That is:
$$\sum_{i=1}^{n} (Y_i - \bar{Y})^2 = \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2 + \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2$$

• Total sum of squares (SSTO): $\sum_{i=1}^{n} (Y_i - \bar{Y})^2$
• Regression sum of squares (SSR): $\sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2$
• Error sum of squares (SSE): $\sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2$

$$SSTO = SSR + SSE$$

Breakdown of degrees of freedom

$$n - 1 = 1 + (n - 2)$$

• Degrees of freedom associated with SSTO: $n - 1$
• Degrees of freedom associated with SSR: $1$
• Degrees of freedom associated with SSE: $n - 2$

Definition of Mean Squares

The regression mean square (MSR) is defined as:

$$MSR = \frac{SSR}{1} = SSR$$

where, as you already know, the error mean square (MSE) is defined as:

$$MSE = \frac{SSE}{n - 2}$$

The Analysis of Variance (ANOVA) Table

| Source | DF | SS | MS | F |
|--------|----|----|----|---|
| Regression | 1 | SSR | MSR = SSR/1 | MSR/MSE |
| Residual Error | n - 2 | SSE | MSE = SSE/(n - 2) | |
| Total | n - 1 | SSTO | | |

Expected Mean Squares

$$E(MSR) = \sigma^2 + \beta_1^2 \sum_{i=1}^{n} (X_i - \bar{X})^2$$

$$E(MSE) = \sigma^2$$

• If there is no linear association (β1 = 0), both mean squares estimate σ², so we’d expect the ratio MSR/MSE to be close to 1.
• If there is a linear association (β1 ≠ 0), we’d expect the ratio MSR/MSE to be greater than 1.
• So, use the ratio MSR/MSE to draw a conclusion about whether or not β1 = 0.

The F-test

Hypotheses:

$$H_0: \beta_1 = 0 \qquad H_A: \beta_1 \neq 0$$

Test statistic:

$$F^* = \frac{MSR}{MSE}$$

P-value = What is the probability that we’d get an F* statistic as large as we did, if the null hypothesis is true? (One-tailed test!)

Determine the P-value by comparing F* to an F distribution with 1 numerator degree of freedom and n-2 denominator degrees of freedom.

Reject the null hypothesis if the P-value is “small”, that is, smaller than the level of significance.
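As a rough sketch of how the quantities defined above fit together, the following Python recomputes the sums of squares, mean squares, and F* for the Men's 200 m data. It is not part of the original slides, which rely on Minitab; the function name `anova_simple_regression` and the dictionary keys are illustrative choices, and only the standard library is assumed.

```python
# Men's 200 m winning times (seconds), copied from the data table in this section.
years = [1900, 1904, 1908, 1912, 1920, 1924, 1928, 1932, 1936, 1948, 1952,
         1956, 1960, 1964, 1968, 1972, 1976, 1980, 1984, 1988, 1992, 1996]
times = [22.20, 21.60, 22.60, 21.70, 22.00, 21.60, 21.80, 21.20, 20.70, 21.10,
         20.70, 20.60, 20.50, 20.30, 19.83, 20.00, 20.23, 20.19, 19.80, 19.75,
         20.01, 19.32]


def anova_simple_regression(x, y):
    """Least-squares fit of y on x plus the ANOVA quantities (hypothetical helper)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n

    # Least-squares slope and intercept.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    fitted = [b0 + b1 * xi for xi in x]

    # The three sums of squares, computed directly from their definitions.
    ssto = sum((yi - y_bar) ** 2 for yi in y)
    ssr = sum((fi - y_bar) ** 2 for fi in fitted)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))

    # Mean squares and the F* statistic.
    msr = ssr / 1
    mse = sse / (n - 2)
    return {"b0": b0, "b1": b1, "SSTO": ssto, "SSR": ssr, "SSE": sse,
            "MSR": msr, "MSE": mse, "F": msr / mse, "DFE": n - 2}


table = anova_simple_regression(years, times)
for name, value in table.items():
    print(f"{name:5s} {value:12.4f}")
```

The sketch stops at F*, because the P-value requires the F distribution, which the standard library does not provide. If SciPy happens to be available, `scipy.stats.f.sf(table["F"], 1, table["DFE"])` would give the upper-tail probability.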
Analysis of Variance Table

| Source | DF | SS | MS | F | P |
|--------|----|----|----|---|---|
| Regression | 1 | 15.8 | 15.8 | 177.7 | 0.000 |
| Residual Error | 20 | 1.8 | 0.09 | | |
| Total | 21 | 17.6 | | | |

• DFTO = n-1 = 22-1 = 21
• DFE = n-2 = 22-2 = 20
• MSR = SSR/1 = 15.8
• MSE = SSE/(n-2) = 1.8/20 = 0.09
• F* = MSR/MSE = 15.796/0.0889 = 177.7 (using MSE before rounding: 1.778/20 = 0.0889)
• P = Probability that an F(1,20) random variable is greater than 177.7 = 0.000…

Equivalence of F-test to t-test

| Predictor | Coef | SE Coef | T | P |
|-----------|------|---------|---|---|
| Constant | 76.153 | 4.152 | 18.34 | 0.000 |
| Year | -0.0284 | 0.00213 | -13.33 | 0.000 |

| Source | DF | SS | MS | F | P |
|--------|----|----|----|---|---|
| Regression | 1 | 15.796 | 15.796 | 177.7 | 0.000 |
| Residual Error | 20 | 1.778 | 0.089 | | |
| Total | 21 | 17.574 | | | |

$$(-13.33)^2 = 177.7 \qquad \left(t^*_{(n-2)}\right)^2 = F^*_{(1,\,n-2)}$$

• For a given significance level, the F-test of β1 = 0 versus β1 ≠ 0 is algebraically equivalent to the two-tailed t-test.
• The two tests give the same P-value.
• If one test leads to rejecting H0, then so will the other. And, if one test leads to not rejecting H0, then so will the other.

F-test versus t-test?

• The F-test is only appropriate for testing that the slope differs from 0 (β1 ≠ 0). Use the t-test if you want to test that the slope is positive (β1 > 0) or negative (β1 < 0).
• The F-test will be more useful to us later when we want to test that more than one slope parameter is 0.

Getting ANOVA table in Minitab

• Default output for either command
– Stat >> Regression >> Regression …
– Stat >> Regression >> Fitted line plot …

Example: Oxygen consumption related to treadmill duration?
[Scatter plot: Oxygen consumption (ml/kg/min) versus Treadmill duration (seconds)]

[Regression plot: Oxygen consumption (ml/kg/min) versus Treadmill duration (seconds)]

vo2 = -1.10367 + 0.0643694 duration; S = 4.12789, R-Sq = 79.6 %, R-Sq(adj) = 79.1 %

The regression equation is vo2 = - 1.10 + 0.0644 duration

| Predictor | Coef | SE Coef | T | P |
|-----------|------|---------|---|---|
| Constant | -1.104 | 3.315 | -0.33 | 0.741 |
| duration | 0.064369 | 0.005030 | 12.80 | 0.000 |

S = 4.128, R-Sq = 79.6%, R-Sq(adj) = 79.1%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|--------|----|----|----|---|---|
| Regression | 1 | 2790.6 | 2790.6 | 163.77 | 0.000 |
| Residual Error | 42 | 715.7 | 17.0 | | |
| Total | 43 | 3506.2 | | | |
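The oxygen consumption output can be cross-checked against the definitions from earlier in the section. The sketch below is an illustrative consistency check, not something from the original slides: it takes SSR, SSE, the total degrees of freedom, and the slope's T value from the Minitab output, then recomputes MSE, F*, S, and R-Sq. The helper name `check_anova` is hypothetical. Because the inputs are rounded printed values, agreement is only approximate.

```python
import math


def check_anova(ssr, sse, df_total, t_slope):
    """Recompute summary quantities from printed ANOVA values (hypothetical helper)."""
    n = df_total + 1              # DFTO = n - 1
    mse = sse / (n - 2)           # error mean square
    f_star = (ssr / 1) / mse      # F* = MSR / MSE
    return {
        "n": n,
        "MSE": mse,
        "F": f_star,
        "t_squared": t_slope ** 2,        # should be close to F*
        "S": math.sqrt(mse),              # Minitab's S is the square root of MSE
        "R_sq": ssr / (ssr + sse),        # share of variation due to regression
    }


# Values copied from the Minitab output for vo2 versus duration.
result = check_anova(ssr=2790.6, sse=715.7, df_total=43, t_slope=12.80)
for name, value in result.items():
    print(f"{name:10s} {value:10.4f}")
```

The squared T value for the slope (12.80² = 163.84) and the recomputed F* differ only in the second decimal place, which is the rounding in the printed T value rather than a failure of the F = t² equivalence.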