6.3 Synaptic convergence to centroids: AVQ Algorithms


Neural Networks and Fuzzy Systems

Chapter 6

Architecture and Equilibria


Student: Li Qi (李琦)

Advisor: Gao Xinbo (高新波)

6.1 Neural Networks as Stochastic Gradient Systems

We classify neural network models by their synaptic connection topologies and by how learning modifies their connection topologies.

Synaptic connection topologies:

1. Feedforward: no closed synaptic loops.

2. Feedback: closed synaptic loops or feedback pathways.

How learning modifies the connection topologies:

1. Supervised learning: uses class-membership information of the training samples.

2. Unsupervised learning: uses unlabelled training samples.

2003.11.19


Neural Network Taxonomy (decoding: feedforward or feedback; encoding: supervised or unsupervised)

Supervised, feedforward: gradient descent (LMS, backpropagation, reinforcement learning)

Supervised, feedback: recurrent backpropagation

Unsupervised, feedforward: vector quantization (self-organizing maps, competitive learning, counter-propagation)

Unsupervised, feedback: RABAM (Brownian annealing, Boltzmann learning); ABAM; ART-2; BAM-Cohen-Grossberg model (Hopfield circuit, brain-state-in-a-box, masking field); adaptive resonance (ART-1, ART-2)


6.2 Global Equilibria: convergence and stability

Three dynamical systems in a neural network:

synaptic dynamical system $\dot{M}$

neuronal dynamical system $\dot{x}$

joint neuronal-synaptic dynamical system $(\dot{x}, \dot{M})$

Historically, neural engineers have studied the first or the second dynamical system in isolation. They usually study learning in feedforward neural networks and neuronal stability in nonadaptive feedback neural networks. RABAM and ART networks depend on joint equilibration of the synaptic and neuronal dynamical systems.


Equilibrium is steady state (for fixed-point attractors).

Convergence is synaptic equilibrium: $\dot{M} = 0$

Stability is neuronal equilibrium: $\dot{x} = 0$

More generally, neural signals reach steady state even though the activations still change. We denote steady state in the neuronal field $F_X$: $\dot{F}_X = 0$

Global stability: $\dot{x} = 0$, $\dot{M} = 0$

Stability-convergence dilemma:

Neurons fluctuate faster than synapses fluctuate.

Convergence undermines stability.


6.3 Synaptic convergence to centroids: AVQ Algorithms

Competitive learning adaptively quantizes the input pattern space $R^n$. The probability density function $p(x)$ characterizes the continuous distribution of patterns in $R^n$.

We shall prove that competitive AVQ synaptic vectors $m_j$ converge exponentially quickly to pattern-class centroids and that, more generally, at equilibrium they vibrate about the centroids in a Brownian motion.


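Competitive AVQ Stochastic Differential Equations:

The decision classes partition the pattern space:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \cdots \cup D_k, \qquad D_i \cap D_j = \emptyset \ \text{ if } i \ne j$$

The random indicator function:

$$I_{D_j}(x) = \begin{cases} 1 & \text{if } x \in D_j \\ 0 & \text{if } x \notin D_j \end{cases}$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms do not require this pattern-class information.

Centroid of $D_j$:

$$\bar{x}_j = \frac{\int_{D_j} x\, p(x)\, dx}{\int_{D_j} p(x)\, dx}$$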
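As a rough numerical illustration of the centroid formula, the sketch below estimates a class centroid from samples. The setup is an assumption and does not come from the slides: patterns are drawn uniformly from [0, 1), with two decision classes D_1 = [0, 0.5) and D_2 = [0.5, 1). The helper name `estimate_centroid` is hypothetical.

```python
import random


def estimate_centroid(samples, in_class):
    """Sample estimate of a class centroid: the mean of the samples
    that fall inside the decision class (indicator I_D(x) = 1)."""
    members = [x for x in samples if in_class(x)]
    if not members:
        raise ValueError("no samples fell inside the decision class")
    return sum(members) / len(members)


# Assumed setup (hypothetical): p(x) uniform on [0, 1), two decision classes.
rng = random.Random(0)
samples = [rng.random() for _ in range(20000)]

centroid_1 = estimate_centroid(samples, lambda x: x < 0.5)   # exact centroid: 0.25
centroid_2 = estimate_centroid(samples, lambda x: x >= 0.5)  # exact centroid: 0.75
print(centroid_1, centroid_2)
```

The ratio of the two integrals in the centroid formula becomes a ratio of two sample sums here: the sum of the samples inside the class divided by the count of samples inside the class.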


The stochastic unsupervised competitive learning law:

$$\dot{m}_j = S_j(y_j)[x - m_j] + n_j$$

We want to show that at equilibrium $m_j = \bar{x}_j$ or $E(m_j) = \bar{x}_j$.

As discussed in Chapter 4: $S_j \approx I_{D_j}(x)$

The linear stochastic competitive learning law:

$$\dot{m}_j = I_{D_j}(x)[x - m_j] + n_j$$

The linear supervised competitive learning law:

$$\dot{m}_j = r_j(x)\, I_{D_j}(x)[x - m_j] + n_j$$

$$r_j(x) = I_{D_j}(x) - \sum_{i \ne j} I_{D_i}(x)$$


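The linear differential competitive learning law:

$$\dot{m}_j = \dot{S}_j [x - m_j] + n_j$$

In practice:

$$\dot{m}_j = \operatorname{sgn}[\dot{y}_j]\,[x - m_j] + n_j$$

$$\operatorname{sgn}[z] = \begin{cases} 1 & \text{if } z > 0 \\ 0 & \text{if } z = 0 \\ -1 & \text{if } z < 0 \end{cases}$$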


Competitive AVQ Algorithm

1. Initialize the synaptic vectors: $m_i(0) = x(i)$, $i = 1, \ldots, m$.

2. For a random sample $x(t)$, find the closest ("winning") synaptic vector $m_j(t)$:

$$\|m_j(t) - x(t)\| = \min_i \|m_i(t) - x(t)\|$$

where $\|x\|^2 = x_1^2 + \cdots + x_n^2$ gives the squared Euclidean norm of $x$.

3. Update the winning synaptic vector $m_j(t)$ by the UCL, SCL, or DCL learning algorithm.


Unsupervised Competitive Learning (UCL)

$$m_j(t+1) = m_j(t) + c_t [x(t) - m_j(t)]$$

$$m_i(t+1) = m_i(t) \quad \text{if } i \ne j$$

$\{c_t\}$ defines a slowly decreasing sequence of learning coefficients. For instance, $c_t = 0.1\,[1 - t/10{,}000]$ for 10,000 samples $x(t)$.

Supervised Competitive Learning (SCL)

$$m_j(t+1) = m_j(t) + c_t\, r_j(x(t))\,[x(t) - m_j(t)] = \begin{cases} m_j(t) + c_t [x(t) - m_j(t)] & \text{if } x \in D_j \\ m_j(t) - c_t [x(t) - m_j(t)] & \text{if } x \notin D_j \end{cases}$$
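The three algorithm steps with the UCL update can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the slides' own implementation: the function names `squared_distance`, `avq_ucl`, and `draw` are hypothetical, and the test data (two small, well-separated square clusters in the plane, sampled alternately) is made up for illustration. The learning coefficient follows the example $c_t = c_0 [1 - t/T]$ with $c_0 = 0.1$.

```python
import random


def squared_distance(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def avq_ucl(samples, num_vectors, c0=0.1):
    """Competitive AVQ with the UCL update (steps 1 to 3)."""
    total = len(samples)
    # Step 1: initialize m_i(0) = x(i) with the first few samples.
    m = [list(samples[i]) for i in range(num_vectors)]
    for t, x in enumerate(samples):
        c_t = c0 * (1.0 - t / total)  # slowly decreasing learning coefficient
        # Step 2: the winner j is the synaptic vector closest to x(t).
        j = min(range(num_vectors), key=lambda i: squared_distance(m[i], x))
        # Step 3 (UCL): move only the winner toward x(t); losers stay unchanged.
        m[j] = [mj + c_t * (xk - mj) for mj, xk in zip(m[j], x)]
    return m


# Assumed data (hypothetical): two uniform clusters, [0, 0.2)^2 and [0.8, 1)^2,
# presented alternately so that the two initial vectors start in different clusters.
rng = random.Random(1)


def draw(t):
    low = 0.0 if t % 2 == 0 else 0.8
    return (low + 0.2 * rng.random(), low + 0.2 * rng.random())


samples = [draw(t) for t in range(10000)]
vectors = avq_ucl(samples, num_vectors=2)
print(vectors)  # should lie near the cluster centroids (0.1, 0.1) and (0.9, 0.9)
```

Swapping the sign of the update when the sample lies outside the winner's class would give the SCL variant; that requires class labels, which this unsupervised sketch does not use.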


Differential Competitive Learning (DCL)

$$m_j(t+1) = m_j(t) + c_t\, \Delta S_j(y_j(t))\,[x(t) - m_j(t)]$$

$$m_i(t+1) = m_i(t) \quad \text{if } i \ne j$$

$\Delta S_j(y_j(t))$ denotes the time change of the $j$th neuron's competitive signal $S_j(y_j)$:

$$\Delta S_j(y_j(t)) = S_j(y_j(t+1)) - S_j(y_j(t))$$

In practice we often use only the sign of the signal difference, or $\operatorname{sgn}[\Delta y_j]$, the sign of the activation difference.
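A single DCL update can be sketched as follows. Several choices here are assumptions made only for illustration: the competitive signals are winner-take-all (1 for the closest vector, 0 otherwise), the signal difference is taken between the previous and the current sample presentation, and the names `sgn` and `dcl_step` are hypothetical. With binary signals the factor is 0 or 1 for the winner, so the winner learns only when it newly wins; graded signals would also produce the factor -1.

```python
def sgn(z):
    """Three-valued sign: 1 if z > 0, 0 if z == 0, -1 if z < 0."""
    return (z > 0) - (z < 0)


def dcl_step(m, x, s_prev, c_t):
    """One DCL update for the sample x.

    m      : list of synaptic vectors (lists of floats), updated in place
    s_prev : competitive signals from the previous presentation (0 or 1 each)
    Returns the competitive signals for the current presentation.
    """
    # Assumption: winner-take-all signals, S_j = 1 for the closest vector.
    j = min(range(len(m)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(m[i], x)))
    s_new = [1 if i == j else 0 for i in range(len(m))]
    delta = sgn(s_new[j] - s_prev[j])  # sign of the winner's signal change
    # Only the winner changes, scaled by the sign of its signal difference.
    m[j] = [mj + c_t * delta * (xk - mj) for mj, xk in zip(m[j], x)]
    return s_new


m = [[0.0, 0.0], [1.0, 1.0]]
s = [0, 0]
s = dcl_step(m, (0.2, 0.0), s, 0.5)  # vector 0 newly wins and moves toward x
s = dcl_step(m, (0.3, 0.0), s, 0.5)  # vector 0 wins again: no signal change, no update
s = dcl_step(m, (0.9, 1.0), s, 0.5)  # vector 1 newly wins and moves toward x
print(m, s)
```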


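Learning coefficient (affects the iteration step size), where $T$ is the total number of iterations:

$$c_t = c_0 \left[ 1 - \frac{k}{T} \right]$$

Update:

$$m_j(t+1) = m_j(t) + c_t [x(t) - m_j(t)]$$

Termination condition for the iteration: the change $m_j(t) - m_j(t+1)$ between successive iterations.

Questions for discussion:

The number of samples determines the computation time and the accuracy.

Can the total number of iterations be set manually?

Can the computation time and the accuracy be set manually?

Is a termination condition unnecessary?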


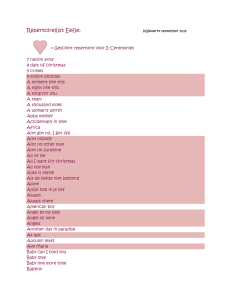

Figure: AVQ algorithm based on UCL. Five panels, labelled T = 10, T = 20, T = 30, T = 40, and T = 100; both axes run from 0 to 1.


Stochastic Equilibrium and Convergence

Competitive synaptic vectors $m_j$ converge to decision-class centroids. The centroids may correspond to local maxima of the sampled but unknown probability density function $p(x)$.


AVQ centroid theorem:

If a competitive AVQ system converges, it converges to the centroid of the sampled decision class:

$$\operatorname{Prob}(m_j = \bar{x}_j) = 1 \quad \text{at equilibrium}$$

Proof. Suppose the $j$th neuron in $F_Y$ wins the competition. Suppose the $j$th synaptic vector $m_j$ codes for decision class $D_j$. Then $I_{D_j}(x) = 1$ iff $S_j = 1$, by $S_j(y_j) = I_{D_j}(x)$. At equilibrium:

$$\dot{m}_j = 0$$


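Take the expectation of the linear stochastic competitive learning law $\dot{m}_j = I_{D_j}(x)[x - m_j] + n_j$:

$$0 = E[\dot{m}_j] = \int_{R^n} I_{D_j}(x)\,(x - m_j)\, p(x)\, dx + E[n_j]$$

$$= \int_{D_j} (x - m_j)\, p(x)\, dx$$

$$= \int_{D_j} x\, p(x)\, dx - m_j \int_{D_j} p(x)\, dx$$

So

$$m_j = \frac{\int_{D_j} x\, p(x)\, dx}{\int_{D_j} p(x)\, dx} = \bar{x}_j$$

In general the AVQ centroid theorem concludes that at equilibrium:

$$E[m_j] = \bar{x}_j$$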


Remarks:

• The AVQ centroid theorem applies to the stochastic SCL and DCL laws.

• The spatial and temporal integrals are approximately equal.

• The AVQ centroid theorem assumes that stochastic convergence occurs.


6.4 AVQ Convergence Theorem

AVQ Convergence Theorem:

Competitive synaptic vectors converge exponentially quickly to pattern-class centroids.

Proof. Consider the random quadratic form $L$:

$$L = \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{m} (x_i - m_{ij})^2$$

Note: $L \ge 0$.

The pattern vectors $x$ do not change in time.


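$$\dot{L} = \sum_i \frac{\partial L}{\partial x_i}\, \dot{x}_i + \sum_i \sum_j \frac{\partial L}{\partial m_{ij}}\, \dot{m}_{ij}$$

$$= \sum_i \sum_j \frac{\partial L}{\partial m_{ij}}\, \dot{m}_{ij}$$

$$= -\sum_i \sum_j (x_i - m_{ij})\, \dot{m}_{ij}$$

Substituting the learning law $\dot{m}_j = I_{D_j}(x)[x - m_j] + n_j$:

$$= -\sum_i \sum_j I_{D_j}(x)\,(x_i - m_{ij})^2 - \sum_i \sum_j (x_i - m_{ij})\, n_{ij}$$

$L$ equals a random variable at every time $t$. $E[L]$ equals a deterministic number at every $t$. So we use the average $E[L]$ as the Lyapunov function for the stochastic competitive dynamical system.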


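Assume sufficient smoothness to interchange the time derivative and the probabilistic integral, that is, to bring the time derivative "inside" the integral. The noise term drops out in expectation because the zero-mean noise $n_{ij}$ is taken to be independent of the learning differences $x_i - m_{ij}$:

$$\dot{E}[L] = E[\dot{L}] = -\sum_j \int_{D_j} \sum_i (x_i - m_{ij})^2\, p(x)\, dx \le 0$$

So the competitive AVQ system is asymptotically stable and in general converges exponentially quickly to a local equilibrium.

Suppose $E[\dot{L}] = 0$. Then every synaptic vector has reached equilibrium and is constant (with probability one) if $\dot{m}_j = 0$ holds.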


Since $p(x)$ is a nonnegative weight function, the weighted integral of the learning differences $x_i - m_{ij}$ must equal zero:

$$\int_{D_j} (x - m_j)\, p(x)\, dx = 0$$

So, with probability one, equilibrium synaptic vectors equal centroids. More generally, average equilibrium synaptic vectors are centroids: $E[m_j] = \bar{x}_j$.


Remarks:

The vector integral in $\int_{D_j} (x - m_j)\, p(x)\, dx = 0$ equals (up to sign) the gradient of $E[L]$ with respect to $m_j$.

So the AVQ convergence theorem implies that the class centroids, and asymptotically the competitive synaptic vectors, minimize the mean-squared error of vector quantization.
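The claim that the centroid minimizes the mean-squared quantization error is easy to check numerically. The sketch below uses made-up Gaussian data for a single decision class and compares the error at the sample centroid with the error at a few displaced vectors; the name `mean_squared_error` and the chosen shifts are illustrative assumptions.

```python
import random


def mean_squared_error(points, m):
    """Average squared Euclidean distance from the points to the vector m."""
    return sum(sum((a - b) ** 2 for a, b in zip(x, m)) for x in points) / len(points)


# Assumed data (hypothetical): 2-D samples from one decision class.
rng = random.Random(2)
points = [(rng.gauss(1.0, 0.3), rng.gauss(-2.0, 0.5)) for _ in range(2000)]
centroid = tuple(sum(x[k] for x in points) / len(points) for k in range(2))

# Sample version of the vector integral of (x - m_j) p(x): zero at the centroid.
gradient = [sum(x[k] - centroid[k] for x in points) / len(points) for k in range(2)]

best = mean_squared_error(points, centroid)
# Any displaced quantization vector should give a strictly larger error.
shifts = [(0.1, 0.0), (0.0, -0.1), (0.05, 0.05), (-0.2, 0.3)]
errors = [mean_squared_error(points, (centroid[0] + dx, centroid[1] + dy))
          for dx, dy in shifts]
print(best, errors, gradient)
```

For a fixed class, the error at a displaced vector exceeds the error at the centroid by exactly the squared length of the displacement, which is why every entry of `errors` is larger than `best`.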


6.5 Global stability of feedback neural networks

Global stability is jointly neuronal-synaptic steady state.

Global stability theorems are powerful but limited.

Their power:

•their dimension independence

•nonlinear generality

•their exponentially fast convergence to fixed points.

Their limitation:

• they do not tell us where the equilibria occur in the state space.


Stability-Convergence Dilemma

The stability-convergence dilemma arises from the asymmetry in neuronal and synaptic fluctuation rates.

Neurons change faster than synapses change.

Neurons fluctuate at the millisecond level.

Synapses fluctuate at the second or even minute level.

The fast-changing neurons must balance the slow-changing

synapses.


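1. Asymmetry: Neurons in $F_X$ and $F_Y$ fluctuate faster than the synapses in $M$.

2. Stability: $\dot{F}_X = 0$ and $\dot{F}_Y = 0$ (pattern formation).

3. Learning: $\dot{F}_X = 0$ and $\dot{F}_Y = 0 \ \Rightarrow\ \dot{M} \ne 0$.

4. Undoing: $\dot{M} \ne 0 \ \Rightarrow\ \dot{F}_X \ne 0$ and $\dot{F}_Y \ne 0$.

The ABAM theorem offers a general solution to the stability-convergence dilemma.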


6.6 The ABAM Theorem

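Hebbian ABAM model:

$$\dot{x}_i = -a_i(x_i)\Big[ b_i(x_i) - \sum_{j=1}^{p} S_j(y_j)\, m_{ij} \Big]$$

$$\dot{y}_j = -a_j(y_j)\Big[ b_j(y_j) - \sum_{i=1}^{n} S_i(x_i)\, m_{ij} \Big]$$

$$\dot{m}_{ij} = -m_{ij} + S_i(x_i)\, S_j(y_j)$$

Competitive ABAM model (CABAM):

$$\dot{m}_{ij} = S_j(y_j)\,[S_i(x_i) - m_{ij}]$$

Differential Hebbian ABAM model:

$$\dot{m}_{ij} = -m_{ij} + S_i S_j + \dot{S}_i \dot{S}_j$$

Differential competitive ABAM model:

$$\dot{m}_{ij} = \dot{S}_j\,[S_i - m_{ij}]$$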


The ABAM Theorem: The Hebbian ABAM and competitive ABAM models are globally stable.

We define the dynamical systems as above.

If the positivity assumptions $a_i > 0$, $a_j > 0$, $S_i' > 0$, $S_j' > 0$ hold, then the models are asymptotically stable, and the squared activation and synaptic velocities decrease exponentially quickly to their equilibrium values:

$$\dot{x}_i^2 \downarrow 0, \quad \dot{y}_j^2 \downarrow 0, \quad \dot{m}_{ij}^2 \downarrow 0$$


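Proof. The proof uses the bounded Lyapunov function $L$:

$$L = -\sum_i \sum_j S_i S_j m_{ij} + \sum_i \int_0^{x_i} S_i'(\theta_i)\, b_i(\theta_i)\, d\theta_i + \sum_j \int_0^{y_j} S_j'(\theta_j)\, b_j(\theta_j)\, d\theta_j + \frac{1}{2} \sum_i \sum_j m_{ij}^2$$

The chain rule of differentiation,

$$\frac{d}{dt} F(x_i(t)) = \frac{dF}{dx_i} \frac{dx_i}{dt},$$

gives:

$$\dot{L} = -\sum_i S_i' \dot{x}_i \sum_j S_j m_{ij} - \sum_j S_j' \dot{y}_j \sum_i S_i m_{ij} - \sum_i \sum_j \dot{m}_{ij} S_i S_j + \sum_i S_i' b_i \dot{x}_i + \sum_j S_j' b_j \dot{y}_j + \sum_i \sum_j m_{ij} \dot{m}_{ij}$$

$$= \sum_i S_i' \dot{x}_i \Big( b_i - \sum_j S_j m_{ij} \Big) + \sum_j S_j' \dot{y}_j \Big( b_j - \sum_i S_i m_{ij} \Big) - \sum_i \sum_j \dot{m}_{ij}\, (S_i S_j - m_{ij})$$

$$= -\sum_i S_i' a_i \Big( b_i - \sum_j S_j m_{ij} \Big)^2 - \sum_j S_j' a_j \Big( b_j - \sum_i S_i m_{ij} \Big)^2 - \sum_i \sum_j (-m_{ij} + S_i S_j)^2$$

Because $a_i \ge 0$, $a_j \ge 0$, $S_i' \ge 0$, and $S_j' \ge 0$, we have $\dot{L} \le 0$ along system trajectories.

This proves global stability for signal Hebbian ABAMs.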


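For the competitive learning law

$$\dot{m}_{ij} = S_j\,[S_i - m_{ij}]$$

the same derivation gives:

$$\dot{L} = -\sum_i S_i' a_i \Big( b_i - \sum_j S_j m_{ij} \Big)^2 - \sum_j S_j' a_j \Big( b_j - \sum_i S_i m_{ij} \Big)^2 - \sum_i \sum_j S_j\, (S_i - m_{ij})(S_i S_j - m_{ij})$$

We assume that $S_j$ behaves approximately as a zero-one threshold. Then:

$$\dot{m}_{ij}\, (S_i S_j - m_{ij}) = S_j\, (S_i - m_{ij})(S_i S_j - m_{ij}) = \begin{cases} 0 & \text{if } S_j = 0 \\ (S_i - m_{ij})^2 & \text{if } S_j = 1 \end{cases}$$

So $\dot{L} \le 0$ along trajectories.

This proves global stability for the competitive ABAM system.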


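Also for signal Hebbian learning:

$$\dot{L} = \sum_i S_i' \dot{x}_i \Big( b_i - \sum_j S_j m_{ij} \Big) + \sum_j S_j' \dot{y}_j \Big( b_j - \sum_i S_i m_{ij} \Big) - \sum_i \sum_j \dot{m}_{ij}\, (S_i S_j - m_{ij})$$

$$= -\sum_i \frac{S_i'}{a_i}\, \dot{x}_i^2 - \sum_j \frac{S_j'}{a_j}\, \dot{y}_j^2 - \sum_i \sum_j \dot{m}_{ij}^2 < 0$$

along trajectories for any nonzero change in any neuronal activation or any synapse.

This proves asymptotic global stability.

$$\dot{L} = 0 \quad \text{iff} \quad \dot{x}_i^2 = \dot{y}_j^2 = \dot{m}_{ij}^2 = 0 \quad \text{iff} \quad \dot{x}_i = \dot{y}_j = \dot{m}_{ij} = 0$$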

(Higher-Order ABAMs, Adaptive Resonance ABAMs, Differential Hebbian ABAMs)
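The Lyapunov argument can be checked numerically on a small example. The sketch below integrates a signal Hebbian ABAM with forward Euler steps and records $L$ along the trajectory. Everything specific is an assumption chosen for illustration: unit amplification functions ($a = 1$), linear $b(v) = v$, logistic signal functions, a 3-by-2 network, random initial conditions, and the helper names `S`, `G`, `lyapunov`, and `velocities`. For the logistic signal the integral term has the closed form used in `G`.

```python
import math
import random


def S(v):
    """Logistic signal function (assumed); strictly increasing, so S' > 0."""
    return 1.0 / (1.0 + math.exp(-v))


def G(v):
    """Integral of S'(u) * b(u) du from 0 to v, for b(u) = u and logistic S."""
    return v * S(v) - (math.log(1.0 + math.exp(v)) - math.log(2.0))


def lyapunov(x, y, M):
    """ABAM Lyapunov function L for a = 1, b(v) = v."""
    sx, sy = [S(v) for v in x], [S(v) for v in y]
    coupling = sum(sx[i] * sy[j] * M[i][j]
                   for i in range(len(x)) for j in range(len(y)))
    decay = 0.5 * sum(mij ** 2 for row in M for mij in row)
    return -coupling + sum(G(v) for v in x) + sum(G(v) for v in y) + decay


def velocities(x, y, M):
    """Right-hand sides of the signal Hebbian ABAM with a = 1 and b(v) = v."""
    n, p = len(x), len(y)
    sx, sy = [S(v) for v in x], [S(v) for v in y]
    dx = [-(x[i] - sum(sy[j] * M[i][j] for j in range(p))) for i in range(n)]
    dy = [-(y[j] - sum(sx[i] * M[i][j] for i in range(n))) for j in range(p)]
    dM = [[-M[i][j] + sx[i] * sy[j] for j in range(p)] for i in range(n)]
    return dx, dy, dM


rng = random.Random(3)
n, p, dt = 3, 2, 0.02
x = [rng.uniform(-2, 2) for _ in range(n)]
y = [rng.uniform(-2, 2) for _ in range(p)]
M = [[rng.uniform(-1, 1) for _ in range(p)] for _ in range(n)]

history = [lyapunov(x, y, M)]
for _ in range(10000):
    dx, dy, dM = velocities(x, y, M)
    x = [xi + dt * v for xi, v in zip(x, dx)]
    y = [yj + dt * v for yj, v in zip(y, dy)]
    M = [[M[i][j] + dt * dM[i][j] for j in range(p)] for i in range(n)]
    history.append(lyapunov(x, y, M))

dx, dy, dM = velocities(x, y, M)
max_speed = max(abs(v) for v in dx + dy + [d for row in dM for d in row])
print(history[0], history[-1], max_speed)
```

With a small step size the recorded values of $L$ should not increase, and the largest remaining velocity should be close to zero at the end of the run, in line with the theorem. The Euler discretization is only an approximation of the continuous-time system, so this is a plausibility check rather than a proof.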


6.7 Structural stability of unsupervised learning and RABAM

•Structural stability is insensitivity to small perturbations.

•Structural stability allows us to perturb globally stable

feedback systems without changing their qualitative

equilibrium behavior.

•Structural stability differs from the global stability, or

convergence to fixed points.

•Structural stability ignores many small perturbations. Such

perturbations preserve qualitative properties.


Random Adaptive Bidirectional Associative Memories (RABAM)

Brownian diffusions perturb RABAM models.

Suppose $B_i$, $B_j$, and $B_{ij}$ denote Brownian-motion (independent Gaussian increment) processes that perturb state changes in the $i$th neuron in $F_X$, the $j$th neuron in $F_Y$, and the synapse $m_{ij}$, respectively.

The signal Hebbian diffusion RABAM model:

$$dx_i = -a_i(x_i)\Big[ b_i(x_i) - \sum_j S_j(y_j)\, m_{ij} \Big]\, dt + dB_i$$

$$dy_j = -a_j(y_j)\Big[ b_j(y_j) - \sum_i S_i(x_i)\, m_{ij} \Big]\, dt + dB_j$$

$$dm_{ij} = -m_{ij}\, dt + S_i(x_i)\, S_j(y_j)\, dt + dB_{ij}$$


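With the stochastic competitive law:

$$dm_{ij} = S_j(y_j)\,[S_i(x_i) - m_{ij}]\, dt + dB_{ij}$$

(Differential Hebbian, differential competitive diffusion laws)

The signal-Hebbian noise RABAM model:

$$\dot{x}_i = -a_i(x_i)\Big[ b_i(x_i) - \sum_j S_j(y_j)\, m_{ij} \Big] + n_i$$

$$\dot{y}_j = -a_j(y_j)\Big[ b_j(y_j) - \sum_i S_i(x_i)\, m_{ij} \Big] + n_j$$

$$\dot{m}_{ij} = -m_{ij} + S_i(x_i)\, S_j(y_j) + n_{ij}$$

$$E[n_i] = E[n_j] = E[n_{ij}] = 0$$

$$V[n_i] = \sigma_i^2, \quad V[n_j] = \sigma_j^2, \quad V[n_{ij}] = \sigma_{ij}^2$$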


The RABAM theorem ensures stochastic stability.

In effect, RABAM equilibria are ABAM equilibria that randomly

vibrate. The noise variances control the range of vibration.

Average RABAM behavior equals ABAM behavior.

RABAM Theorem.

The RABAM model above is globally stable. If the signal functions are strictly increasing and the amplification functions $a_i$ and $a_j$ are strictly positive, the RABAM model is asymptotically stable.
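The statement that average RABAM behavior equals ABAM behavior can be illustrated with a small simulation. The sketch below is an assumption-laden example, not the slides' model in full generality: it uses unit amplification functions, $b(v) = v$, logistic signals, a 3-by-2 network, the same noise level `sigma` for every neuron and synapse, and an Euler-Maruyama discretization of the diffusion form. The names `S`, `step`, and `run` are hypothetical. Running with `sigma = 0` gives the noise-free ABAM from the same initial state.

```python
import math
import random


def S(v):
    """Logistic signal function (assumed)."""
    return 1.0 / (1.0 + math.exp(-v))


def step(x, y, M, dt, sigma, rng):
    """One Euler-Maruyama step of the signal Hebbian RABAM (a = 1, b(v) = v)."""
    n, p = len(x), len(y)
    sx, sy = [S(v) for v in x], [S(v) for v in y]

    def kick():
        # Zero-mean Gaussian increment with variance sigma^2 * dt.
        return sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)

    new_x = [x[i] + dt * (-x[i] + sum(sy[j] * M[i][j] for j in range(p))) + kick()
             for i in range(n)]
    new_y = [y[j] + dt * (-y[j] + sum(sx[i] * M[i][j] for i in range(n))) + kick()
             for j in range(p)]
    new_M = [[M[i][j] + dt * (-M[i][j] + sx[i] * sy[j]) + kick() for j in range(p)]
             for i in range(n)]
    return new_x, new_y, new_M


def run(sigma, steps=10000, dt=0.02, seed=4):
    """Simulate and return the time average of every state variable
    over the second half of the run."""
    rng = random.Random(seed)
    x = [rng.uniform(-2, 2) for _ in range(3)]
    y = [rng.uniform(-2, 2) for _ in range(2)]
    M = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(3)]
    tail = []
    for k in range(steps):
        x, y, M = step(x, y, M, dt, sigma, rng)
        if k >= steps // 2:
            tail.append(x + y + [m for row in M for m in row])
    return [sum(col) / len(tail) for col in zip(*tail)]


abam = run(sigma=0.0)    # noise-free ABAM from the same initial state
rabam = run(sigma=0.01)  # RABAM with small zero-mean noise
gap = max(abs(a - b) for a, b in zip(abam, rabam))
print(gap)
```

The noisy state keeps vibrating, but its time average should stay close to the noise-free equilibrium, and the size of the vibration grows with `sigma`.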


Proof. The ABAM Lyapunov function $L$:

$$L = -\sum_i \sum_j S_i S_j m_{ij} + \sum_i \int_0^{x_i} S_i'(\theta_i)\, b_i(\theta_i)\, d\theta_i + \sum_j \int_0^{y_j} S_j'(\theta_j)\, b_j(\theta_j)\, d\theta_j + \frac{1}{2} \sum_i \sum_j m_{ij}^2$$

defines a random process. At each time $t$, $L(t)$ is a random variable.

The expected ABAM Lyapunov function $E(L)$ is a Lyapunov function for the RABAM system:

$$L_{RABAM} = E(L) = \int \cdots \int L\; p(x, y, M)\, dx\, dy\, dM$$


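$$\dot{E}[L] = E[\dot{L}]$$

$$= E\Big[ \sum_i S_i' \dot{x}_i \Big( b_i - \sum_j S_j m_{ij} \Big) + \sum_j S_j' \dot{y}_j \Big( b_j - \sum_i S_i m_{ij} \Big) - \sum_i \sum_j \dot{m}_{ij}\, (S_i S_j - m_{ij}) \Big]$$

$$= E\Big[ -\sum_i S_i' a_i \Big( b_i - \sum_j S_j m_{ij} \Big)^2 - \sum_j S_j' a_j \Big( b_j - \sum_i S_i m_{ij} \Big)^2 - \sum_i \sum_j (-m_{ij} + S_i S_j)^2 \Big]$$

$$\quad + \sum_i E\Big[ n_i S_i' \Big( b_i - \sum_j S_j m_{ij} \Big) \Big] + \sum_j E\Big[ n_j S_j' \Big( b_j - \sum_i S_i m_{ij} \Big) \Big] - \sum_i \sum_j E\big[ n_{ij}\, (-m_{ij} + S_i S_j) \big]$$

$$= E[\dot{L}_{ABAM}] + \sum_i E[n_i]\, E\Big[ S_i' \Big( b_i - \sum_j S_j m_{ij} \Big) \Big] + \sum_j E[n_j]\, E\Big[ S_j' \Big( b_j - \sum_i S_i m_{ij} \Big) \Big] - \sum_i \sum_j E[n_{ij}]\, E[-m_{ij} + S_i S_j]$$

$$= E[\dot{L}_{ABAM}]$$

So $E[\dot{L}] \le 0$ or $E[\dot{L}] < 0$ along trajectories according as $\dot{L}_{ABAM} \le 0$ or $\dot{L}_{ABAM} < 0$.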


Noise-Saturation Dilemma:

How can neurons have an effectively infinite dynamical range when they operate between upper and lower bounds, and yet not treat small input signals as noise? If the $x_i$ are sensitive to large inputs, then why do small inputs not get lost in internal system noise? If the $x_i$ are sensitive to small inputs, then why do they not all saturate at their maximum values in response to large inputs?


RABAM Noise Suppression Theorem:

As the above RABAM dynamical systems converge exponentially quickly, the mean-squared velocities of neuronal activations and synapses decrease to their lower bounds exponentially quickly:

$$E[\dot{x}_i^2] \downarrow \sigma_i^2, \quad E[\dot{y}_j^2] \downarrow \sigma_j^2, \quad E[\dot{m}_{ij}^2] \downarrow \sigma_{ij}^2$$

Guarantee: no noise processes can destabilize a RABAM if the noise processes

have finite instantaneous variances (and zero mean).

(Unbiasedness Corollary, RABAM Annealing Theorem)


Thank you!
