ChongLingChee_FYP2010

SIM UNIVERSITY

SCHOOL OF SCIENCE AND TECHNOLOGY

AUTOMATED DETECTION OF DIABETIC RETINOPATHY USING DIGITAL FUNDUS IMAGES

STUDENT: E0604276 (PI NO.)

SUPERVISOR: DR RAJENDRA ACHARYA UDYAVARA

PROJECT CODE: JAN2010/BME/0016

A project report submitted to SIM University

in partial fulfilment of the requirements for the degree of

Bachelor of Biomedical Engineering

November 2010

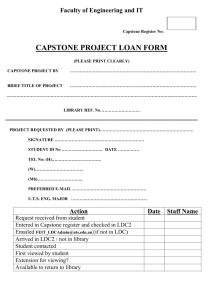

BME499 ENG499 MTD499 ICT499 MTH499 CAPSTONE PROJECT REPORT

TABLE OF CONTENTS

ABSTRACT
ACKNOWLEDGEMENTS
LIST OF FIGURES
LIST OF TABLES

CHAPTER ONE: AIMS AND INTRODUCTION
1.1 Background
1.2 Objectives
1.3 Scope

CHAPTER TWO: LITERATURE
2.1 Anatomy structure of the human eye
2.1.1 The Cornea
2.1.2 The Aqueous Humor
2.1.3 The Iris
2.1.4 The Pupil
2.1.5 The Lens
2.1.6 The Vitreous Humor
2.1.7 The Sclera
2.1.8 The Optic Disc
2.1.9 The Retina
2.1.10 Macula
2.1.11 Fovea
2.2 Diabetic Retinopathy (DR) and stages
2.3 Diabetic Retinopathy (DR) features
2.3.1 Blood Vessels
2.3.2 Microaneurysms
2.3.3 Exudates
2.4 Diabetic Retinopathy (DR) examination methods
2.4.1 Ophthalmoscopy (Indirect and Direct)
2.4.2 Fluorescein Angiography
2.4.3 Fundus Photography
2.5 Diabetic Retinopathy (DR) treatment
2.5.1 Scatter Laser treatment
2.5.2 Vitrectomy
2.5.3 Focal Laser treatment
2.5.4 Laser Photocoagulation

CHAPTER THREE: METHODS AND MATERIALS
3.1 System block diagram
3.2 Image processing techniques
3.2.1 Image preprocessing
3.2.2 Structuring Element
3.2.2.1 Disk shaped Structuring Element (SE)
3.2.2.2 Ball shaped Structuring Element (SE)
3.2.2.3 Octagon shaped Structuring Element (SE)
3.2.3 Morphological image processing
3.2.3.1 Morphological operations
3.2.3.2 Dilation and Erosion
3.2.3.3 Dilation
3.2.3.4 Erosion
3.2.3.5 Opening and Closing
3.2.4 Thresholding
3.2.5 Edge detection
3.2.6 Median filtering
3.3 Feature extraction
3.3.1 Blood vessels detection
3.3.2 Microaneurysms detection
3.3.3 Exudates detection
3.3.4 Texture analysis
3.4 Significance test
3.4.1 Student’s t-test
3.5 Classification
3.5.1 Fuzzy
3.5.2 Gaussian Mixture Model (GMM)

CHAPTER FOUR: RESULTS
4.1 Graphical User Interface (GUI)

CHAPTER FIVE: CONCLUSION AND RECOMMENDATION

CHAPTER SIX: REFLECTIONS

REFERENCES

APPENDIX A: BOX PLOT FOR FEATURES (AREA)
APPENDIX B: BLOOD VESSELS MATLAB CODE
APPENDIX C: MICROANEURYSMS MATLAB CODE
APPENDIX D: EXUDATES MATLAB CODE
APPENDIX E: TEXTURES MATLAB CODE
APPENDIX F: MEETING LOGS
APPENDIX G: GANTT CHART

ABSTRACT

Diabetic retinopathy (DR) is a cause of blindness resulting from diabetes. The main aim of this project is to develop a system that automates the detection of DR from fundus images. The fundus images are first processed using morphological processing techniques and texture analysis to extract features such as the areas of blood vessels, exudates and microaneurysms, and textures. A significance test is then applied to the features to determine which of them are statistically significant (p ≤ 0.05). The selected features are input to the fuzzy and GMM classifiers for automatic classification. The best classifier, chosen on the basis of a percentage of correct data of 85.2% and an average classification rate of 85.2%, is then used for the final graphical user interface (GUI).

ACKNOWLEDGEMENTS

I would like to thank my family for their support and encouragement.

I would like to thank NUH of Singapore for providing me with the fundus images for this project.

I would like to thank Unisim for the school facilities.

I would like to thank Fabian Pang for his patience and guidance on fuzzy and GMM

classification.

I would like to thank Jacqueline Tham, Vicky Goh, Mabel Loh, Brenda Ang and

Audrey Tan for their moral support and encouragement.

Last but most importantly, I would like to thank my project supervisor, Dr Rajendra

Acharya Udyavara for his kindness, patience, guidance, advice and enlightenment.

LIST OF FIGURES

Figure 2.1: Anatomy structure of the eye
Figure 2.1.10: Location of macula, fovea and optic disc
Figure 2.3.1: Retinal blood vessels
Figure 2.3.2: Microaneurysms in DR
Figure 2.3.3: Exudates in DR
Figure 3.1: System block diagram for the detection and classification of diabetic retinopathy
Figure 3.2.1a: Original image (left) and its histogram (right)
Figure 3.2.1b: Image after CLAHE (left) and its histogram (right)
Figure 3.2.2.1: Disk shaped structuring element
Figure 3.2.2.2: Ball shaped structuring element (nonflat ellipsoid)
Figure 3.2.2.3: Octagon shaped structuring element
Figure 3.2.3.3a: Original image
Figure 3.2.3.3b: Image after dilation with disk shaped SE
Figure 3.2.3.4a: Original image
Figure 3.2.3.4b: Image after erosion with disk shaped SE
Figure 3.2.3.5a: Opening operation with disk shaped image
Figure 3.2.3.5b: Closing operation with disk shaped SE image
Figure 3.2.4a: Original image
Figure 3.2.4b: Image with too high threshold value
Figure 3.2.4c: Image with too low threshold value
Figure 3.2.5a: Original image
Figure 3.2.5b: Sobel
Figure 3.2.5c: Prewitt
Figure 3.2.5d: Roberts
Figure 3.2.5e: Laplacian of Gaussian (LoG)
Figure 3.2.5f: Canny
Figure 3.2.6a: Illustration of a 3 x 3 median filter
Figure 3.2.6b: Original image (left) and image after median filtering (right)
Figure 3.3.1a: System block diagram for detecting blood vessels
Figure 3.3.1b: Normal retinal fundus image
Figure 3.3.1c: Green component
Figure 3.3.1d: Inverted green component
Figure 3.3.1e: Image after CLAHE
Figure 3.3.1f: Image after opening operation
Figure 3.3.1g: Image after subtraction
Figure 3.3.1h: Image after thresholding
Figure 3.3.1i: Image after median filtering
Figure 3.3.1j: Final image
Figure 3.3.1k: Final image (inverted)
Figure 3.3.2a: System block diagram for detecting microaneurysms
Figure 3.3.2b: Abnormal retinal fundus image
Figure 3.3.2c: Red component
Figure 3.3.2d: Inverted red component
Figure 3.3.2e: Image after Canny edge detection
Figure 3.3.2f: Image with boundary
Figure 3.3.2g: Image after boundary subtraction
Figure 3.3.2h: Image after filling up the holes or gaps
Figure 3.3.2i: Image after subtraction
Figure 3.3.2j: Blood vessels detection
Figure 3.3.2k: Blood vessels after edge detection
Figure 3.3.2l: Image after subtraction
Figure 3.3.2m: Image after filling holes or gaps
Figure 3.3.2n: Final image
Figure 3.3.3a: System block diagram for detecting exudates
Figure 3.3.3b: Abnormal retinal fundus image
Figure 3.3.3c: Green component
Figure 3.3.3d: Image after closing operation
Figure 3.3.3e: Image after column wise neighbourhood operation
Figure 3.3.3f: Image after thresholding
Figure 3.3.3g: Image after morphological closing
Figure 3.3.3h: Image after Canny edge detection
Figure 3.3.3i: Image after ROI
Figure 3.3.3j: Image after removing optic disc
Figure 3.3.3k: Image after removing border
Figure 3.3.3l: Final image
Figure 3.5: Block diagram of training and testing data
Figure 3.5.2: Block diagram of GMM method
Figure 4: Graphical plot for average percentage classification results from two classifiers
Figure 4.1: GUI

LIST OF TABLES

Table 2.2: Summary of the features of diabetic retinopathy
Table 3.2.3.2: Rules for dilation and erosion
Table 3.2.5: Methods and description of various edge detection algorithms
Table 3.4.1: Student’s t-test results
Table 3.5.1a: testing1, testing2 and testing3 data output using fuzzy classifier
Table 3.5.1b: testing1 data output calculation using fuzzy classifier
Table 3.5.1c: testing2 data output calculation using fuzzy classifier
Table 3.5.1d: testing3 data output calculation using fuzzy classifier
Table 3.5.2a: testing1, testing2 and testing3 data output using GMM classifier
Table 3.5.2b: testing1 data output calculation using GMM classifier
Table 3.5.2c: testing2 data output calculation using GMM classifier
Table 3.5.2d: testing3 data output calculation using GMM classifier
Table 4a: Fuzzy classification results
Table 4b: GMM classification results

CHAPTER ONE

AIMS AND INTRODUCTION

1.1 BACKGROUND

Diabetes mellitus, commonly known as diabetes, is a chronic systemic disease of disordered metabolism of carbohydrate, protein and fat[21]. It is most notably characterised by a high blood sugar (glucose) level, which results from the body either not being able to produce enough insulin (type 1, insulin-dependent diabetes mellitus or IDDM[48]) or being resistant to insulin (type 2, non-insulin-dependent diabetes mellitus or NIDDM[48]). Diabetes is a persistent disease burden[32], especially in developed countries. According to the Ministry of Health (MOH) in Singapore, 8.2% of the total population suffered from diabetes in 2004[32].

Diabetic retinopathy (DR) is one of the complications resulting from a prolonged diabetic condition, usually after ten to fifteen years of having diabetes. In DR, the high glucose level (hyperglycemia) damages the tiny blood vessels inside the retina. These tiny blood vessels leak blood and fluid onto the retina, forming features such as microaneurysms, haemorrhages, hard exudates, cotton wool spots or venous loops[47]. In Singapore, DR affects about 60% of patients who have had diabetes for 15 years or more, and a percentage of these are at risk of developing blindness[44]. Despite these intimidating statistics, research indicates that these new cases could be reduced by at least 90% with proper and vigilant treatment and monitoring of the eyes[50].

Laser photocoagulation is an example of a surgical method that can reduce the risk of blindness in people who have proliferative retinopathy[9]. However, it is of vital importance for diabetic patients to have regular eye checkups. Current examination methods used to detect and grade retinopathy include ophthalmoscopy (indirect and direct)[23], photography (fundus images) and fluorescein angiography. These methods of detecting and assessing diabetic retinopathy are manual and expensive, and they require trained ophthalmologists.

Therefore, it is important to have an automatic method for detecting diabetic retinopathy at an early stage, so that its progression can be retarded and blindness prevented, thus encouraging improvement in diabetic control. Such a method can also significantly reduce the total annual economic cost of diabetes.

1.2 OBJECTIVES

The objective of this project is to implement an automated detection of diabetic retinopathy (DR) using digital fundus images. MATLAB is used to extract and detect features such as blood vessels, microaneurysms, exudates and textures, which determine two general classifications: normal or abnormal (DR) eye. Early detection of diabetic retinopathy enables medication or laser therapy to be performed to prevent or delay visual loss.

1.3 SCOPE

The scope of this project involves using various MATLAB imaging techniques (e.g. converting the image to binary format, erosion, dilation, boundary detection, etc.) to obtain the desired final image and the areas of the features (e.g. blood vessels, microaneurysms, exudates and textures). These values are then used in a significance test (e.g. Student’s t-test) to determine which of the results obtained are statistically significant. Next, the results selected by the Student’s t-test are input to the classifiers (e.g. Fuzzy and Gaussian Mixture Model or GMM) to obtain the average classification rate, sensitivity and specificity, and to classify the images into normal and abnormal classes.
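The sensitivity, specificity and classification rate named in this scope can be illustrated with a short sketch. It is written in Python rather than the project's MATLAB, and it assumes the usual confusion-matrix definitions with "abnormal" as the positive class. The function name and the sample labels are hypothetical, and the report's own "average classification rate" may be computed differently.

```python
def classification_metrics(actual, predicted, positive="abnormal"):
    """Return (sensitivity, specificity, accuracy) for two-class labels."""
    tp = fn = tn = fp = 0
    for truth, guess in zip(actual, predicted):
        if truth == positive:
            if guess == positive:
                tp += 1  # abnormal eye correctly flagged
            else:
                fn += 1  # abnormal eye missed
        else:
            if guess == positive:
                fp += 1  # normal eye wrongly flagged
            else:
                tn += 1  # normal eye correctly passed
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# Hypothetical labels for four test images.
actual = ["abnormal", "abnormal", "normal", "normal"]
predicted = ["abnormal", "normal", "normal", "normal"]
sensitivity, specificity, accuracy = classification_metrics(actual, predicted)
```

Sensitivity here is the fraction of abnormal eyes that are detected, and specificity is the fraction of normal eyes that are correctly passed as normal.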

Lastly, the data collected are used to develop a graphical user interface (GUI) that displays normal or abnormal (DR) eye images based on the best classifier.

CHAPTER TWO

LITERATURE

This chapter discusses the structure of the eye, the definition and stages of diabetic retinopathy (DR), DR features, and examination and treatment methods.

2.1 ANATOMY STRUCTURE OF THE HUMAN EYE

The eye is a hollow, spherical organ about 2.5 cm in diameter. It has a wall composed of three layers, and its interior spaces are filled with fluids that support the walls and maintain the shape of the eye[45]. Figure 2.1 shows the cross-sectional structure of the eye. The eyes are so important that four-fifths of all the information the brain receives comes from them. Section 2.1 explains some of the important parts of the eye.

Figure 2.1: Anatomy structure of the eye[3]

2.1.1 THE CORNEA

The cornea is a transparent medium, with a water content of 78%[38], situated at the front of the eye. It covers the iris, pupil and anterior chamber and helps to focus incoming light[20]. The cornea is elliptical in shape, with a vertical diameter of 11 mm and a horizontal diameter of 12 mm[38]. It is supplied with oxygen and nutrients through tear fluid and not through blood vessels[28]; therefore, there are no blood vessels in it. The function of the cornea is to refract and transmit light[38].

2.1.2 THE AQUEOUS HUMOR

The aqueous humor is the aqueous fluid in the front part of the eye, between the lens and the cornea. Its main function is to supply the cornea and the lens with nutrients and oxygen[28].

2.1.3 THE IRIS

The iris is a thin, pigmented, circular structure in the eye which regulates the amount of light that enters the eye[28]. Its function is to control the size of the pupil, adjusting it to the intensity of the lighting conditions[38]. When the pupil expands, more light can enter. This reflex, known as the accommodation reflex[28], expands the pupil to allow more light to enter when focusing on distant objects or in darkness.

2.1.4 THE PUPIL

The pupil is a hole in the center of the iris. The size of the pupil determines the amount

of light that enters the eye. The pupil size is controlled by the dilator and sphincter muscles

of the iris[42]. It appears black because most of the light entering the pupil is absorbed by

the tissues inside the eye[36].

2.1.5 THE LENS

The lens is a transparent, biconvex structure in the eye that, along with the cornea, helps to refract light so that it is focused on the retina[27]. By changing its shape, the lens is able to change the focal distance of the eye so that it can focus on objects at different distances, thus allowing a sharp image to form on the retina.

2.1.6 THE VITREOUS HUMOR

The vitreous humor is the clear fluid which fills the eyeball (between the lens and the retina). It is the largest domain of the human eye. The fluid is more than 95% water.

2.1.7 THE SCLERA

The sclera is the white opaque tissue that acts as the eye's protective outer coat. Six tiny muscles connect to it around the eye and control the eye's movements. The optic nerve is attached to the sclera at the very back of the eye[42].

2.1.8 THE OPTIC DISC

The optic disc, also known as the optic nerve head or the blind spot, is where the optic nerve attaches to the eye[28]. There are no light sensitive rods or cones to respond to a light stimulus at this point. This causes a break in the visual field called "the blind spot" or the "physiological blind spot"[35]. Figure 2.1.10 shows the location of the optic disc.

2.1.9 THE RETINA

The retina is a thin layer of neural cells[38] that lines the inner back of the eye. It is light sensitive and absorbs light. The image signals are received and sent to the brain. The retina contains two kinds of light receptors: rods and cones. The rods absorb light in black and white and are responsible for night vision. The cones are colour sensitive, absorb stronger light and are responsible for colour vision.

2.1.10 MACULA

The macula is the area around the fovea[28]. It is an oval-shaped highly pigmented

yellow spot near the center of the retina[31] as shown in Figure 2.1.10. It is a small and

highly sensitive part of the retina responsible for detailed central vision.

Figure 2.1.10: Location of macula, fovea and optic disc

2.1.11 FOVEA

The fovea is the most central part of the macula. The visual cells located in the fovea are the most tightly packed, resulting in optimal sharpness of vision. Unlike the rest of the retina, it has no blood vessels to interfere with the passage of light striking the foveal cone mosaic[15]. Figure 2.1.10 shows the location of the fovea.

2.2 DIABETIC RETINOPATHY (DR) AND STAGES

Diabetes is a chronic state caused by an abnormal increase in the glucose level in the blood, which damages the blood vessels. The tiny blood vessels that nourish the retina are damaged by the increased glucose level[47]. Diabetic retinopathy (DR) is one of the complications that affect the retinal capillaries. It causes thickening of the arterial walls and blockage of blood flow to the eye.

DR can be broadly classified as non-proliferative diabetic retinopathy (NPDR) and

proliferative diabetic retinopathy (PDR)[47] as shown in Figure 2.2. There are four DR

stages:

1. Stage 1 – Background diabetic retinopathy (also termed mild or moderate non-proliferative retinopathy). At least one microaneurysm will be present, with or without retinal haemorrhages, hard exudates, cotton wool spots or venous loops[6,7].

2. Stage 2 – Moderate non-proliferative retinopathy. Numerous microaneurysms and retinal haemorrhages will be present. Cotton wool spots and a limited amount of venous beading can also be seen[47]. Some blood vessels are starting to become blocked.

3. Stage 3 – Severe non-proliferative retinopathy. Many features such as haemorrhages and microaneurysms are present in the retina. Other features are also present, although there is still little growth of new blood vessels. Many more blood vessels are now blocked, and these areas of the retina start to send signals to the body to grow new blood vessels for nourishment[38].

4. Stage 4 – Proliferative retinopathy. PDR is the advanced stage, in which the signals sent by the retina for nourishment trigger the growth of new blood vessels[22]. The main blood vessels become stiff and blockage of blood flow occurs. Small pockets of blood begin to form around the boundary of the main blood vessels. The new blood vessels are fragile and have thin walls, and when the walls burst, blood spatters form. Exudates (proteins and other lipids) and blood from the leakage form around the retina and, in some cases, on the fovea, resulting in sudden severe vision loss and blindness.

Figure 2.2: Stages of DR fundus images[51]

The features of each stage are summarised in Table 2.2.

| Classification | Alternative terminology | Features |
| --- | --- | --- |
| Background diabetic retinopathy | Mild/moderate non-proliferative diabetic retinopathy | Haemorrhages; oedema; microaneurysms; exudates; cotton wool spots; dilated veins |
| Pre-proliferative diabetic retinopathy | Severe/very severe non-proliferative retinopathy | Deep retinal haemorrhages in four quadrants; venous abnormalities; intraretinal microvascular abnormalities (IRMA); multiple cotton wool spots |
| Proliferative diabetic retinopathy | Proliferative diabetic retinopathy (PDR) | New vessels on optic disc |
| Advanced diabetic eye disease | Complications of proliferative diabetic retinopathy | Vitreous haemorrhage; new vessels elsewhere; retinal detachment; neovascular glaucoma |

Table 2.2: Summary of the features of diabetic retinopathy[18]

2.3 DIABETIC RETINOPATHY (DR) FEATURES

There are many features present in a DR eye. However, since the main objective of this project is an automated system for early DR detection based on some of the extracted features, only blood vessels, microaneurysms, exudates and textures (in the feature extraction section) will be discussed.

2.3.1 BLOOD VESSELS

In a normal retina, the main function of the blood vessels is to supply the eye with blood, which carries oxygen and nutrients (Figure 2.3.1). In DR, the growth of new, fragile blood vessels is stimulated by the blockage and thickening of the main blood vessels. When the main blood vessels are blocked, new vessels are triggered to grow in an attempt to send oxygen and nourishment to the eye. However, these new blood vessels are very fragile and abnormal. They are prone to rupture and leak fluids (proteins and lipids) and blood into the eye. This may not hinder the patient’s sight if the leakage does not occur on the fovea or macula. However, if the blood spatters fall on the fovea or macula, sudden loss of vision in that eye occurs because the spatters block all light entering the eye.

Figure 2.3.1: Retinal blood vessels

2.3.2 MICROANEURYSMS

Microaneurysms are small saclike outpouchings of the small vessels and capillaries[25], as shown in Figure 2.3.2. They are an early feature of DR and appear as tiny red dots in fundus photographs due to the ballooning of capillaries. They represent a small weakness in the retinal capillary wall that leaks blood and serum[18].

Figure 2.3.2: Microaneurysms in DR

2.3.3 EXUDATES

Exudates, often described as hard exudates, are deposits of extravasated plasma proteins, especially lipoproteins, as shown in Figure 2.3.3. They leak into the retinal tissue with serum and are left behind as the oedema fluid is absorbed. Eventually, exudates are cleared from the retina by macrophages[18]. They appear as yellow-white dots within the retina. The yellow deposits may be seen as either individual spots or clusters[25], usually near the optic disc.

Sometimes exudates form on the macula or fovea, resulting in sudden loss of vision in that eye regardless of the stage of diabetic retinopathy.

Figure 2.3.3: Exudates in DR

2.4 DIABETIC RETINOPATHY (DR) EXAMINATION METHODS

There are several DR examination methods; the main ones are ophthalmoscopy (indirect and direct), fluorescein angiography and fundus photography.

2.4.1 OPHTHALMOSCOPY (INDIRECT AND DIRECT)

Direct ophthalmoscopy is an examination performed by a specialist in a dark room. A beam of light is shone through the pupil using an ophthalmoscope. This allows the specialist to view the back of the eyeball.

Indirect ophthalmoscopy is performed with a head-mounted or spectacle-mounted source of illumination positioned in the middle of the forehead[26]. A bright light is shone into the eye using the instrument on the forehead. A condensing lens is placed in front of the eye to intercept the fundus reflex. A real and inverted image of the fundus forms between the examiner and the patient[26].

2.4.2 FLUORESCEIN ANGIOGRAPHY

Fluorescein angiography is a test which allows the blood vessels in the back of the

eye to be photographed as a fluorescent dye is injected into the bloodstream via the hand or

arm[49]. The pupils will be dilated with eye drops and the yellow dye (Fluorescein Sodium)

is injected into a vein in the arm[49]. It is used to examine the blood circulation of the retina

using the dye tracing method.

2.4.3 FUNDUS PHOTOGRAPHY

Fundus photography is the use of a fundus camera to photograph the regions of the vitreous, retina, choroid and optic nerve[16]. Fundus photographs are only considered medically necessary where the results may influence the management of the patient. In general, fundus photography is performed to evaluate abnormalities in the fundus, follow the progress of a disease, plan the treatment for a disease, and assess the therapeutic effect of recent surgery[16]. In this report, the images used for image processing were taken with a fundus camera.

2.5 DIABETIC RETINOPATHY (DR) TREATMENT

Treatment of diabetic retinopathy varies depending on the extent of the disease[10].

During the early stages of DR, no treatment is needed unless macular oedema is present.

However, for advanced DR such as proliferative diabetic retinopathy, surgery is necessary.

2.5.1 SCATTER LASER TREATMENT

Advanced stage diabetic retinopathy is treated by performing scatter laser treatment. During scatter laser treatment, an ophthalmologist uses a laser to "scatter" many small burns across the retina. This causes leaking and abnormal blood vessels to shrink[10]. This surgical method is used to reduce vision loss. However, scatter laser treatment is not suitable if there is a significant amount of haemorrhage.

2.5.2 VITRECTOMY

A vitrectomy is performed under either local or general anesthesia. An

ophthalmologist makes a tiny incision in the eye and carefully removes the vitreous gel that

is clouded with blood. After the vitreous gel is removed from the eye, a clear salt solution is

injected to replace the contents[10].

2.5.3 FOCAL LASER TREATMENT

Leakage of fluid from blood vessels can sometimes lead to macular oedema, or

swelling of the retina. Focal laser treatment is performed to treat macular oedema. Several

hundred small burns are placed around the macula in order to reduce the amount of fluid

build-up in the macula[10].

2.5.4 LASER PHOTOCOAGULATION

Laser photocoagulation uses a powerful beam of light which, combined with ophthalmic equipment and lenses, can be focused on the retina[41]. Small bursts of laser are used to seal leaky blood vessels, destroy abnormal blood vessels, seal retinal tears, and destroy abnormal tissue in the back of the eye[41]. This procedure is used to treat diabetic retinopathy patients in the proliferative stage. The main advantages of this surgical method are the short surgical duration and that the patient can usually resume activities immediately.

CHAPTER THREE

METHODS AND MATERIALS

A total of 60 fundus images from various demographics are used in this project. These fundus images were taken from the ophthalmology department of the National University Hospital (NUH) of Singapore. The images have a resolution of 720 x 576 pixels.

3.1 SYSTEM BLOCK DIAGRAM

Figure 3.1 shows the system block diagram for the identification of diabetic retinopathy. The input image is processed using image processing techniques in MATLAB, and features such as the areas of blood vessels, microaneurysms and exudates, and textures are extracted. The extracted features are then passed to the Student’s t-test, which serves as the significance test (probability of true significance). The features that the Student’s t-test shows to have a high probability of true significance are input to the classifiers (e.g. Fuzzy and Gaussian Mixture Model or GMM), which generate the average classification rate, sensitivity, specificity, etc. At the final stage, the results generated by the classifiers are used to determine the diabetic retinopathy (DR) class: normal or abnormal.
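The significance-test step can be sketched as a two-sample Student's t statistic. This is an illustrative Python sketch and not the project's MATLAB code: it assumes an unpaired test with pooled variance, returns only the t statistic and the degrees of freedom (the p-value would then be read from a t-distribution table or a statistics library), and the feature values are hypothetical.

```python
from math import sqrt


def students_t(sample_a, sample_b):
    """Two-sample Student's t statistic with pooled variance.

    Returns (t, degrees_of_freedom).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a = sum(sample_a) / n_a
    mean_b = sum(sample_b) / n_b
    # Sums of squared deviations from each group mean.
    ss_a = sum((v - mean_a) ** 2 for v in sample_a)
    ss_b = sum((v - mean_b) ** 2 for v in sample_b)
    dof = n_a + n_b - 2
    pooled_variance = (ss_a + ss_b) / dof
    t = (mean_a - mean_b) / sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    return t, dof


# Hypothetical feature values (e.g. exudate areas) for the two classes.
normal_areas = [1.0, 2.0, 3.0]
abnormal_areas = [4.0, 5.0, 6.0]
t_value, dof = students_t(normal_areas, abnormal_areas)
```

A large absolute t value for a given number of degrees of freedom corresponds to a small p-value, which is what marks a feature as useful for separating the normal and abnormal classes.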

Figure 3.1: System block diagram for the detection and classification of diabetic retinopathy (input image → image processing techniques → feature extraction: areas of blood vessels, microaneurysms, exudates and textures → significance test: Student’s t-test → classification: Fuzzy and GMM classifiers → normal or abnormal)

3.2 IMAGE PROCESSING TECHNIQUES

Image processing techniques are used for image enhancement, morphological image processing and texture analysis. They are also used to reduce image noise, adjust contrast and invert the images.

3.2.1 IMAGE PREPROCESSING

Before image processing is carried out, the fundus images need to be preprocessed to remove the non-uniform background. Non-uniform brightness and variation in the fundus images are the main reasons for this non-uniformity. It is corrected by applying contrast-limited adaptive histogram equalization (CLAHE) to the image before applying the image processing operations[22].

A histogram is a graph that indicates the number of times each gray level occurs in an image. For example, in bright images the gray levels are clustered at the upper end of the graph, while in darker images they are clustered at the lower end. When the gray levels are evenly spread across the histogram, the image is well contrasted. CLAHE operates on small regions in the image, called tiles. Each tile's contrast is enhanced so that the histogram of the output region approximately matches a specified histogram[2]. Figure 3.2.1a shows the fundus image before CLAHE; its histogram shows more bright-level regions than dark-level regions. Figure 3.2.1b shows the fundus image after CLAHE; its histogram shows an evenly distributed brightness.

Figure 3.2.1a: Original image (left) and its histogram (right)

Figure 3.2.1b: Image after CLAHE (left) and its histogram (right)
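The histogram and the per-tile contrast enhancement described in this section can be sketched as follows. This is an illustrative Python sketch, not the project's MATLAB code: it covers only the clip-limited equalization of a single tile, omits the blending between neighbouring tiles that full CLAHE performs, and targets a flat (uniform) histogram. The function names, the 8-level gray scale, the sample tile and the clip limit are assumptions made for illustration.

```python
def histogram(tile, levels):
    """Count how many times each gray level occurs in a tile (list of rows)."""
    counts = [0] * levels
    for row in tile:
        for value in row:
            counts[value] += 1
    return counts


def clipped_equalization_map(counts, clip_limit):
    """Build a gray-level lookup table from a clip-limited histogram."""
    levels = len(counts)
    # Clip each bin so that no single gray level dominates the mapping.
    clipped = [min(c, clip_limit) for c in counts]
    # Spread the clipped-off excess evenly over all bins.
    share = (sum(counts) - sum(clipped)) / levels
    adjusted = [c + share for c in clipped]
    total = sum(adjusted)
    # The scaled cumulative sum maps old gray levels to new ones.
    mapping, running = [], 0.0
    for c in adjusted:
        running += c
        mapping.append(round((levels - 1) * running / total))
    return mapping


# Hypothetical 2 x 4 tile with 8 gray levels (0 = black, 7 = white).
tile = [[0, 0, 0, 1],
        [1, 2, 2, 7]]
counts = histogram(tile, levels=8)
lookup = clipped_equalization_map(counts, clip_limit=2)
enhanced = [[lookup[v] for v in row] for row in tile]
```

In this toy tile most pixels sit at the dark end of the scale; the lookup table spreads them over a wider range of gray levels, while the clip limit restricts how strongly the most frequent level can stretch the mapping.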

3.2.2 STRUCTURING ELEMENT

A structuring element (SE) is a small binary pattern used in morphology to probe the image. It is a matrix consisting of only 0's and 1's that can have any arbitrary shape and size. The pixels with values of 1 define the neighbourhood[34]. There are two types of SE. A two-dimensional, or flat, SE is usually specified by an origin, a radius and an approximation value N. A three-dimensional, or nonflat, SE is usually specified by a radius (in the x-y plane), a height and an approximation value N. There are different SE shapes, but in this project disk shaped, ball shaped and octagon shaped SEs are used.

3.2.2.1 DISK SHAPED STRUCTURING ELEMENT (SE)

The call SE = strel('disk', R, N) creates a flat, disk shaped structuring element, where R specifies the radius. R must be a nonnegative integer and N must be 0, 4, 6 or 8[8]. Figure 3.2.2.1 shows a disk shaped SE with radius 3 and its centre of origin.

Figure 3.2.2.1: Disk shaped structuring element
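To make the idea of a flat, disk shaped SE concrete, the following Python sketch builds a 0/1 matrix in which a pixel is set when its centre lies within R of the origin. It is not the MATLAB strel function: the helper name is hypothetical, and the plain Euclidean test is assumed here to correspond to the N = 0 (no approximation) case, so masks produced with other N values may differ.

```python
def disk_se(radius):
    """Return a (2R+1) x (2R+1) list-of-lists mask of a Euclidean disk."""
    size = 2 * radius + 1
    mask = []
    for y in range(size):
        row = []
        for x in range(size):
            # Offsets of this pixel from the origin at the centre of the mask.
            dx, dy = x - radius, y - radius
            row.append(1 if dx * dx + dy * dy <= radius * radius else 0)
        mask.append(row)
    return mask


# Radius 3, as in Figure 3.2.2.1; the origin is the centre element.
se = disk_se(3)
for row in se:
    print(" ".join(str(v) for v in row))
```

The 1's in the printed matrix are the neighbourhood that a morphological operation examines around each pixel.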

3.2.2.2 BALL SHAPED STRUCTURING ELEMENT (SE)

The call SE = strel('ball', R, H, N) creates a nonflat, ball shaped structuring element (actually an ellipsoid) whose radius in the X-Y plane is R and whose height is H. R must be a nonnegative integer, H must be a real scalar, and N must be an even nonnegative integer[8]. Figure 3.2.2.2 shows a ball shaped SE with the x-y axes as the radius and the z axis as the height.


Figure 3.2.2.2: Ball shaped structuring element (nonflat ellipsoid)

3.2.2.3

OCTAGON SHAPED STRUCTURING ELEMENT (SE)

Octagon shaped SE, SE = strel('octagon', R) creates a flat, octagonal structuring

element, where R specifies the distance from the structuring element origin to the sides of

the octagon, as measured along the horizontal and vertical axes. R must be a nonnegative

multiple of 3[8]. Figure 3.2.2.3 shows an octagon shaped SE with radius 3 and its centre of

origin.

Figure 3.2.2.3: Octagon shaped structuring element

3.2.3 MORPHOLOGICAL IMAGE PROCESSING

Morphological image processing is a branch of image processing that is particularly

useful for analyzing shapes in images[3]. Mathematical morphology is the foundation of

morphological image processing, which consists of a set of operators that transform images

according to size, shape, connectivity, etc.


3.2.3.1

MORPHOLOGICAL OPERATIONS

Morphological operations are used to understand the structure or form of an

image. This usually means identifying objects or boundaries within an image. Morphological

operations play a key role in applications such as machine vision and automatic object

detection[33].

Morphological operations apply a structuring element to an input image, creating an

output image of the same size. In a morphological operation, the value of each pixel in the

output image is based on a comparison of the corresponding pixel in the input image with its

neighbours. By choosing the size and shape of the neighborhood, a morphological operation

can be created that is sensitive to specific shapes in the input image[34]. There are many types

of morphological operations such as dilation, erosion, opening and closing.

3.2.3.2

DILATION AND EROSION

Dilation and erosion are basic morphological processing operations. They are

defined in terms of more elementary set operations, but are employed as the basic elements

of many algorithms. Both dilation and erosion are produced by the interaction of structuring

element with a set of pixels of interest in the image[19].

Dilation adds pixels to the boundaries of objects in an image, while erosion removes

pixels on object boundaries. The number of pixels added or removed from the objects in an

image depends on the size and shape of the structuring element used to process the image.

In the morphological dilation and erosion operations, the state of any given pixel in the

output image is determined by applying a rule to the corresponding pixel and its neighbours

in the input image. The rule used to process the pixels defines the operation as a dilation or

an erosion[34]. Table 3.2.3.2 shows the operations and the rules.

Operation

Dilation

Rule

The value of the output pixel is the maximum value of all the pixels in the

input pixel's neighborhood. In a binary image, if any of the pixels is set to

the value 1, the output pixel is set to 1.

Erosion

The value of the output pixel is the minimum value of all the pixels in the

input pixel's neighborhood. In a binary image, if any of the pixels is set to

0, the output pixel is set to 0.

Table 3.2.3.2: Rules for dilation and erosion[34]
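The two rules in the table can be sketched in a few lines of Python. This is an illustrative sketch only, not the code in the appendices: the helper names are hypothetical, the SE is assumed to be symmetric with a 1 at its centre, and pixels outside the image are simply ignored.

```python
def _neighbourhood(image, row, col, se):
    """Collect the image values lying under the 1-entries of `se` centred at (row, col)."""
    centre_r, centre_c = len(se) // 2, len(se[0]) // 2
    values = []
    for i, se_row in enumerate(se):
        for j, flag in enumerate(se_row):
            r, c = row + i - centre_r, col + j - centre_c
            if flag and 0 <= r < len(image) and 0 <= c < len(image[0]):
                values.append(image[r][c])
    return values


def dilate(image, se):
    """Dilation rule: output pixel = maximum over the neighbourhood."""
    return [[max(_neighbourhood(image, r, c, se)) for c in range(len(image[0]))]
            for r in range(len(image))]


def erode(image, se):
    """Erosion rule: output pixel = minimum over the neighbourhood."""
    return [[min(_neighbourhood(image, r, c, se)) for c in range(len(image[0]))]
            for r in range(len(image))]


cross = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]
image = [[0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 1, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]

dilated = dilate(image, cross)   # the single pixel grows into a cross
restored = erode(dilated, cross) # erosion shrinks it back to one pixel
```

Because the rules are stated as maximum and minimum, the same two functions work for grayscale values as well as for binary images.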


3.2.3.3

DILATION

Suppose $A$ and $B$ are sets of pixels. Then the dilation of $A$ by $B$, denoted $A \oplus B$, is defined as $A \oplus B = \bigcup_{x \in B} A_x$. This means that for every point $x \in B$, $A$ is translated by those coordinates. An equivalent definition is $A \oplus B = \{(x, y) + (u, v) : (x, y) \in A, (u, v) \in B\}$. From this definition, dilation is seen to be commutative, that is, $A \oplus B = B \oplus A$[3]. Figure 3.2.3.3a shows an original fundus image before dilation and Figure 3.2.3.3b shows the same image after dilation with a disk shaped SE of radius 8. The optic disc becomes more prominent and exudates can also be seen near the macula.

Figure 3.2.3.3a: Original image

Figure 3.2.3.3b: Image after dilation with disk shaped SE

3.2.3.4

EROSION

Given sets $A$ and $B$, the erosion of $A$ by $B$, written $A \ominus B$, is defined as $A \ominus B = \{w : B_w \subseteq A\}$[3]. Figure 3.2.3.4a shows an original fundus image before erosion and Figure 3.2.3.4b shows the same image after erosion with a disk shaped SE of radius 8. Blood vessels become more prominent.

Figure 3.2.3.4a: Original image

Figure 3.2.3.4b: Image after erosion with disk shaped SE

3.2.3.5

OPENING AND CLOSING

Dilation and erosion are often used in combination to implement image processing operations[34]. Erosion followed by dilation is called an opening operation. Opening an image smooths the contour of an object, breaks narrow isthmuses (“bridges”) and eliminates thin protrusions[12]. Dilation followed by erosion is called a closing operation. Closing an image smooths sections of contours, fuses narrow breaks and long thin gulfs, eliminates small holes and fills gaps in contours[12].

The opening of an image is defined as $A \circ B = (A \ominus B) \oplus B$[3]. Since opening consists of erosion followed by dilation, it can also be defined as $A \circ B = \bigcup \{B_w : B_w \subseteq A\}$[3].

The closing of an image is defined as $A \bullet B = (A \oplus B) \ominus B$[3]. Figure 3.2.3.5a and Figure 3.2.3.5b show the difference between the opening and closing operations on fundus images.
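The two definitions can be tried out on small binary images with the following Python sketch. It is only an illustration under stated assumptions, not the project's MATLAB implementation: the function names and test images are made up, the SE is a symmetric 3 x 3 square, and pixels outside the image are ignored.

```python
def _morph(image, se, pick):
    """Apply `pick` (min or max) over the SE neighbourhood of every pixel."""
    rows, cols = len(image), len(image[0])
    cr, cc = len(se) // 2, len(se[0]) // 2
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            vals = [image[r + i - cr][c + j - cc]
                    for i in range(len(se)) for j in range(len(se[0]))
                    if se[i][j]
                    and 0 <= r + i - cr < rows and 0 <= c + j - cc < cols]
            out_row.append(pick(vals))
        out.append(out_row)
    return out


def erode(image, se):
    return _morph(image, se, min)


def dilate(image, se):
    return _morph(image, se, max)


def opening(image, se):
    """Erosion followed by dilation."""
    return dilate(erode(image, se), se)


def closing(image, se):
    """Dilation followed by erosion."""
    return erode(dilate(image, se), se)


square = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

# A 3 x 3 block plus one isolated pixel: opening removes the isolated pixel.
speck_image = [[0, 0, 0, 0, 0, 0, 0],
               [0, 1, 1, 1, 0, 0, 0],
               [0, 1, 1, 1, 0, 0, 0],
               [0, 1, 1, 1, 0, 0, 0],
               [0, 0, 0, 0, 0, 1, 0],
               [0, 0, 0, 0, 0, 0, 0]]
opened = opening(speck_image, square)

# A 3 x 3 ring with a one-pixel hole: closing fills the hole.
ring_image = [[0] * 7 for _ in range(7)]
for r in range(2, 5):
    for c in range(2, 5):
        ring_image[r][c] = 0 if (r, c) == (3, 3) else 1
closed = closing(ring_image, square)
```

In this toy case the opening keeps the block and drops the speck, while the closing turns the ring into a solid block.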

Figure 3.2.3.5a: Opening operation with disk shaped SE image

Figure 3.2.3.5b: Closing operation with disk shaped SE image

3.2.4 THRESHOLDING

Thresholding turns a colour or grayscale image into a 1-bit binary image. This is done

by allocating every pixel in the image either black or white, depending on their value. The

pivotal value that is used to decide whether any given pixel is to be black or white is

the threshold[17].
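The mechanics can be shown with a minimal Python sketch. It assumes a grayscale image already scaled to the range 0 to 1 and uses the convention that pixels above the threshold become white (1); the function name and the sample values are hypothetical.

```python
def threshold(gray, level):
    """Return a binary image: 1 where the pixel value exceeds `level`, else 0."""
    return [[1 if value > level else 0 for value in row] for row in gray]


gray = [[0.05, 0.20],
        [0.50, 0.90]]

low = threshold(gray, 0.1)   # most pixels end up white
high = threshold(gray, 0.7)  # only the brightest pixel stays white
```

Changing the single `level` value changes how many pixels end up on each side, which is why the choice of threshold matters.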

Thresholding is useful for removing unnecessary detail from an image in order to concentrate on essentials[3]. In the case of the fundus image, removing all gray level information reduces the blood vessels to binary pixels. This is necessary to distinguish the blood vessel foreground from the background information. Thresholding can also be used to bring out hidden detail, which is very useful in image regions that are obscured by similar gray levels.

Choosing an appropriate threshold value is therefore important, because a low value may decrease the size of some of the objects or reduce their number, and a high value may include extra background information. Figure 3.2.4a shows the original fundus image with CLAHE before thresholding. Figure 3.2.4b shows the same image with too high a threshold value, resulting in too much background information. Figure 3.2.4c shows the same image with too low a threshold value, resulting in missing foreground information.

Figure 3.2.4a: Original image

Figure 3.2.4b: Image with too high threshold value

Figure 3.2.4c: Image with too low threshold value

3.2.5 EDGE DETECTION

In an image, an edge is a curve that follows a path of rapid change in image intensity.

Edges are often associated with the boundaries of objects in a scene[4]. Edge detection refers

to the process of identifying and locating sharp discontinuities in an image[39]. It is possible to use edges to measure the size of objects in an image, isolate particular objects from their background, and to recognize or classify objects[3].

Six commonly used edge detection algorithms are Sobel, Prewitt, Roberts, Laplacian of Gaussian (LoG), zero-cross and Canny. Table 3.2.5 shows the six edge detection methods and their descriptions.

| Methods | Descriptions |
|---|---|
| Sobel | The Sobel method finds edges using the Sobel approximation to the derivative. It returns edges at those points where the gradient of I is maximum. |
| Prewitt | The Prewitt method finds edges using the Prewitt approximation to the derivative. It returns edges at those points where the gradient of I is maximum. |
| Roberts | The Roberts method finds edges using the Roberts approximation to the derivative. It returns edges at those points where the gradient of I is maximum. |
| Laplacian of Gaussian (LoG) | The Laplacian of Gaussian method finds edges by looking for zero crossings after filtering I with a Laplacian of Gaussian filter. |
| zero-cross | The zero-cross method finds edges by looking for zero crossings after filtering I with a filter that the user specifies. |
| Canny | The Canny method finds edges by looking for local maxima of the gradient of I. The gradient is calculated using the derivative of a Gaussian filter. The method uses two thresholds, to detect strong and weak edges, and includes the weak edges in the output only if they are connected to strong edges. This method is therefore less likely than the others to be fooled by noise, and more likely to detect true weak edges. |

Table 3.2.5: Methods and description of various edge detection algorithms[14]

After comparing all six edge detection algorithms, the Canny method performs better than the others because it uses two thresholds to detect strong and weak edges. For this reason, the Canny algorithm is chosen for edge detection in this project. Figures 3.2.5a to 3.2.5f show the original image and the results of Sobel, Prewitt, Roberts, Laplacian of Gaussian (LoG) and Canny edge detection respectively. It is apparent that with the Canny edge detection method, the weak fine blood vessels can be detected.

Figure 3.2.5a: Original image

Figure 3.2.5b: Sobel

Figure 3.2.5c: Prewitt

Figure 3.2.5d: Roberts

Figure 3.2.5e: Laplacian of Gaussian (LoG)


Figure 3.2.5f: Canny

3.2.6 MEDIAN FILTERING

Median filtering is a nonlinear operation often used in image processing to reduce "salt and pepper" noise. A median filter is more effective than convolution when the goal is to simultaneously reduce noise and preserve edges[1]. The median of a set is the middle value when the values are sorted. For an even number of values, the median is the mean of the two middle values[3]. Figure 3.2.6a illustrates a 3 x 3 median filter: the nine values in the neighbourhood are sorted and the middle value is taken as the median.

| 55 | 70 | 57 |
|---|---|---|
| 68 | 260 | 63 |
| 66 | 65 | 62 |

Sorted values: 55 57 62 63 65 66 68 70 260

Median: 65

Figure 3.2.6a: Illustration of a 3 x 3 median filter
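The illustration can be reproduced with a short Python sketch. The 3 x 3 values are the ones in Figure 3.2.6a. The function `median_filter_3x3` is a hypothetical helper, and its handling of border pixels (using only the neighbours that exist) is an assumption rather than the behaviour of the MATLAB routine used in the project.

```python
from statistics import median

# The 3 x 3 neighbourhood from Figure 3.2.6a; 260 is the noisy value.
patch = [[55, 70, 57],
         [68, 260, 63],
         [66, 65, 62]]

sorted_values = sorted(value for row in patch for value in row)
centre_value = median(sorted_values)


def median_filter_3x3(image):
    """Replace each pixel by the median of its 3 x 3 neighbourhood.

    Border pixels use only the neighbours that lie inside the image.
    """
    rows, cols = len(image), len(image[0])
    filtered = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [image[i][j]
                      for i in range(max(0, r - 1), min(rows, r + 2))
                      for j in range(max(0, c - 1), min(cols, c + 2))]
            out_row.append(median(window))
        filtered.append(out_row)
    return filtered


filtered_patch = median_filter_3x3(patch)
```

The outlier 260 at the centre is replaced by 65, a value typical of its surroundings.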

This method of obtaining the median value means that very large or very small values (noisy values) are replaced by a value closer to their surroundings. Figure 3.2.6b shows the image before and after applying median filtering. The “salt and pepper” noise in the original image has been clearly reduced after applying the median filtering.

Figure 3.2.6b: Original image (left) and image after median filtering (right)

3.3

FEATURE EXTRACTION

The features extracted are blood vessels, microaneurysms, exudates and textures. The steps are explained below.

3.3.1 BLOOD VESSELS DETECTION

Figure 3.3.1a shows the system block diagram of blood vessels detection. The

detailed steps are explained below.

[Blocks shown in the diagram: Original image; Green component of original image; Inverting intensity of green component; Edge detection (Canny); Border detection; Morphological opening using disk SE of radius 8; Blood vessels detection; Perform CLAHE (adaptive histogram equalization); Perform median filtering; Image with boundary is obtained (after subtracting image with border); Morphological opening using ball SE of radius and height 8; Thresholding; Fill holes and remove boundary; Final image and area extracted]

Figure 3.3.1a: System block diagram for detecting blood vessels


All coloured images consist of RGB (red, green, blue) primary colour channels. The colour of each pixel is determined by its amounts of red, green and blue. If each colour component has a range of 0-255, the three components give 256^3 = 16,777,216 (more than 16 million) colours. Each pixel consists of 24 bits, so the image is a 24-bit colour image. The fundus images used in this project are 24-bit, 720 x 576 pixels. Normal images, as shown in Figure 3.3.1b, basically consist of blood vessels, optic disc and macula without any other abnormal features. Blood vessel detection is important in the identification of diabetic retinopathy (DR) through image processing techniques.

[Figure labels: retinal blood vessels, fovea, macula, optic disc]

Figure 3.3.1b: Normal retinal fundus image

Firstly, as part of the image preprocessing step, the green component of the image is

extracted as shown in Figure 3.3.1c and the green component’s intensity is inverted as

shown in Figure 3.3.1d.

Figure 3.3.1c: Green component

Figure 3.3.1d: Inverted green component
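The two preprocessing steps can be sketched in Python as follows. This is an illustration rather than the code in Appendix B: the image is represented as nested lists of (R, G, B) tuples with 8-bit values, and the function names and pixel values are hypothetical.

```python
def green_channel(rgb_image):
    """Keep only the green value of every (R, G, B) pixel."""
    return [[pixel[1] for pixel in row] for row in rgb_image]


def invert(gray_image, max_value=255):
    """Invert intensities so that dark structures become bright."""
    return [[max_value - value for value in row] for row in gray_image]


# A tiny 2 x 2 stand-in for a 24-bit fundus image.
rgb = [[(200, 120, 40), (180, 30, 20)],
       [(210, 130, 50), (190, 110, 35)]]

green = green_channel(rgb)
inverted = invert(green)

# 8 bits per channel give 256 levels, hence 256**3 possible colours.
colour_count = 256 ** 3
```

After inversion, the darkest green value (30, standing in for a vessel pixel) becomes the brightest (225).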

After inverting the green component’s intensity, edge detection is performed using the Canny method. The border is then detected and a morphological opening operation (erosion then dilation) is performed with a disk shaped structuring element (SE) of radius 8. Next, the difference between the eroded image and the original image is taken to obtain the border or boundary.

Afterwards, adaptive histogram equalization is performed to improve the contrast of

the image and to correct uneven illumination as shown in Figure 3.3.1e. A morphological

opening operation (erosion then dilation) is performed using the ball shaped structuring

element (SE) to smooth the background and to highlight the blood vessels as shown in

Figure 3.3.1f.

Figure 3.3.1e: Image after CLAHE

Figure 3.3.1f: Image after opening operation

The opened image is then subtracted from the adaptive histogram equalized (CLAHE) image. As shown in Figure 3.3.1g, the resulting image has higher intensity in the foreground (blood vessels) than in the background.

Figure 3.3.1g: Image after subtraction

The subtracted image is converted from grayscale to binary by thresholding with a value of 0.1, as shown in Figure 3.3.1h. Median filtering is performed to remove "salt and pepper" noise, as shown in Figure 3.3.1i. The image with boundary is then obtained by taking the difference between the border image from the disk shaped SE step and the median filtered image.

Figure 3.3.1h: Image after thresholding

Figure 3.3.1i: Image after median filtering

The border is then eliminated after filling the holes that do not touch the edge to obtain

the final image as shown in Figure 3.3.1j. The pixel values of the image are inverted to get

only the blood vessels with black background as shown in Figure 3.3.1k. The detailed

MATLAB code is attached in Appendix B.

Figure 3.3.1j: Final image

Figure 3.3.1k: Final image (inverted)


3.3.2 MICROANEURYSMS DETECTION

Figure 3.3.2a shows the system block diagram of microaneurysms detection. The

detailed steps are explained below.

[Blocks shown in the diagram: Original image; Red component of original image; Inverting intensity of red component; Edge detection (Canny); Border detection; Morphological opening using disk SE of radius 8; Remove boundary; Fill holes; Subtract image without boundary with blood vessels after edge detection; Subtract image with holes from image with filled holes; Blood vessels detection; Edge detection (Canny); Fill holes; Subtract image with filled holes from the image with microaneurysms and unwanted artifacts; Final image and area extracted]

Figure 3.3.2a: System block diagram for detecting microaneurysms

Microaneurysms appear as tiny red dots on the retinal fundus image, as shown in Figure 3.3.2b. Therefore, the red component of the RGB image is used to identify the microaneurysms, as shown in Figure 3.3.2c. Next, the intensity is inverted as shown in Figure 3.3.2d. As in blood vessel detection, the Canny method is used for edge detection, as shown in Figure 3.3.2e.

Microaneurysms

Figure 3.3.2b: Abnormal retinal fundus image

Figure 3.3.2c: Red component

Figure 3.3.2d: Inverted red component

The boundary is detected by filling up the holes, and a morphological opening operation (erosion then dilation) is performed with a disk shaped structuring element (SE) of radius 8, as shown in Figure 3.3.2f. The edge detected image is then subtracted from the image with boundary to obtain the image without boundary, as shown in Figure 3.3.2g.

Figure 3.3.2e: Image after Canny edge detection

Figure 3.3.2f: Image with boundary

Figure 3.3.2g: Image after boundary subtraction

After this, the holes or gaps are filled, leaving microaneurysms and other unwanted artifacts present as shown in Figure 3.3.2h. The image before filling is then subtracted from the image with filled holes or gaps. The resulting image thus contains the microaneurysms and other unwanted artifacts without the edges, as shown in Figure 3.3.2i.

Figure 3.3.2h: Image after filling up the

holes or gaps

Figure 3.3.2i: Image after subtraction

The blood vessels are detected using the method described in section 3.3.1. Figure 3.3.2j shows the blood vessels detected image. Canny edge detection is then applied to the blood vessels image to detect the edges, as shown in Figure 3.3.2k. This image is then subtracted from the image after boundary subtraction (Figure 3.3.2g). The resulting image is shown in Figure 3.3.2l.

Figure 3.3.2j: Blood vessels detection

Figure 3.3.2k: Blood vessels after

edge detection

Figure 3.3.2l: Image after subtraction

Finally, the holes or gaps are filled as shown in Figure 3.3.2m, and the difference between this image and the image with microaneurysms and unwanted artifacts gives the final image with only microaneurysms, as shown in Figure 3.3.2n. The detailed MATLAB code is attached in Appendix C.

Figure 3.3.2m: Image after filling

holes or gaps

Figure 3.3.2n: Final image


3.3.3 EXUDATES DETECTION

Figure 3.3.3a shows the system block diagram of exudates detection. The detailed

steps are explained below.

[Blocks shown in the diagram: Original image; Green component of original image; Morphological closing using octagon shaped SE of radius 9; Column wise neighbourhood operation; Thresholding; Morphological closing using disk SE of radius 10; Edge detection (Canny); ROI of radius 82; Remove border; Morphological erosion operation using disk shaped SE of radius 3; Final image and area extracted; Remove optic disc]

Figure 3.3.3a: System block diagram for detecting exudates

Exudates appear as yellowish dots in the fundus images as shown in Figure 3.3.3b. They are easier to spot than microaneurysms. To detect exudates, the green component of the RGB image is first extracted, as in blood vessel detection, as shown in Figure 3.3.3c, and an octagon shaped structuring element (SE) of size 9 is created. A morphological closing is performed with this SE as shown in Figure 3.3.3d. The exudates clearly become more prominent than the background, although the optic disc is also present because their grey levels are similar.

Exudates

Figure 3.3.3b: Abnormal retinal fundus image


Figure 3.3.3c: Green component

Figure 3.3.3d: Image after closing operation

A column-wise neighbourhood operation is then performed, which first rearranges the image neighbourhoods into columns. The 'sliding' parameter indicates that overlapping neighbourhoods are used[3]. This operation removes most of the unwanted artifacts, leaving only the border, exudates and the optic disc as shown in Figure 3.3.3e.

Figure 3.3.3e: Image after column wise neighbourhood operation

Next, thresholding is applied to the image with a threshold value of 0.7, as shown in Figure 3.3.3f. Morphological closing with a disk shaped structuring element (SE) of size 10 is used to fill up the holes or gaps of the exudates, as shown in Figure 3.3.3g.

Figure 3.3.3f: Image after thresholding

Figure 3.3.3g: Image after morphological

closing

The optic disc contains the highest pixel values in the image. Therefore, to remove the optic disc, edge detection using the Canny method (Figure 3.3.3h) is used together with a region of interest (ROI). First, a radius of 82 is defined, as most optic discs are about 80 x 80 pixels in size, as shown in Figure 3.3.3i. Next, the optic disc is removed together with the border as shown in Figure 3.3.3j and Figure 3.3.3k.

Figure 3.3.3h: Image after Canny edge detection

Figure 3.3.3i: Image after ROI

Figure 3.3.3j: Image after removing optic disc

Figure 3.3.3k: Image after removing border

Finally, a morphological erosion operation with a disk shaped structuring element (SE) of size 3 is performed to obtain the final image with only exudates, as shown in Figure 3.3.3l. The detailed MATLAB code is attached in Appendix D.

Figure 3.3.3l: Final image

3.3.4 TEXTURE ANALYSIS

Texture describes the physical structure characteristic of a material such as

smoothness and coarseness. It is a spatial concept indicating what, apart from color and the

level of gray, characterizes the visual homogeneity of a given zone of an image[24]. Texture

analysis of an image is the study of mutual relationship among intensity values of

neighbouring pixels repeated over an area larger than the size of the relationship [22]. The

main types of texture analysis are structural, statistical and spectral.

Mean, standard deviation, third moment and entropy are statistical texture measures. Mean, standard deviation and third moment are concerned with properties of individual pixels. Mean is defined as[6]:

$$\mu_1 = \sum_{i=0}^{N-1} \sum_{j=0}^{N-1} i\,P_{i,j}$$

and standard deviation is defined as[6]:

$$\sigma_1 = \sqrt{\sum_{i=0}^{N-1} \sum_{j=0}^{N-1} P_{i,j}\,(i - \mu_1)^2}$$

Third moment is a measure of the skewness of the histogram and is defined as[37]:

$$\mu_3(z) = \sum_{i=0}^{L-1} (z_i - m)^3\,p(z_i)$$

Entropy is a statistical texture measure of the randomness in an image. An image that is perfectly flat has an entropy of zero and can consequently be compressed to a relatively small size. On the other hand, high entropy images, such as an image of heavily cratered areas on the moon, have a great deal of contrast from one pixel to the next and consequently cannot be compressed as much as low entropy images[7]. Entropy is defined as:

$$-\sum P \log_2 P$$

The texture features used in this project are mean, standard deviation, third moment and entropy. The detailed MATLAB code is attached in Appendix E.
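As a hedged illustration of how these four measures can be computed, the Python sketch below works from the normalized gray level histogram p(z_i) of an image. This is an assumption made for simplicity: it is not the code in Appendix E, the function name `texture_stats` is hypothetical, and no scaling is applied to the third moment, so the magnitudes need not match those reported later in the report.

```python
import math


def texture_stats(gray_image, levels=256):
    """Compute mean, standard deviation, third moment and entropy
    from the normalized histogram of an integer-valued grayscale image."""
    pixels = [value for row in gray_image for value in row]
    total = len(pixels)

    # p[z] is the fraction of pixels with gray level z.
    p = [0.0] * levels
    for value in pixels:
        p[value] += 1.0 / total

    mean = sum(z * p[z] for z in range(levels))
    variance = sum((z - mean) ** 2 * p[z] for z in range(levels))
    third_moment = sum((z - mean) ** 3 * p[z] for z in range(levels))
    entropy = -sum(pz * math.log2(pz) for pz in p if pz > 0)

    return {
        "mean": mean,
        "std": math.sqrt(variance),
        "third_moment": third_moment,
        "entropy": entropy,
    }


flat = texture_stats([[7, 7], [7, 7]], levels=8)        # perfectly flat image
two_level = texture_stats([[0, 0], [2, 2]], levels=4)   # two equally likely levels
```

The flat image gives zero standard deviation and zero entropy, while the two-level image gives an entropy of one bit, in line with the description of entropy as a measure of randomness.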

3.4

SIGNIFICANCE TEST

A significance test assesses statistically whether a difference observed between sets of data is likely to be a true occurrence or could have occurred by chance. The outcome is expressed as a p-value. The lower the p-value, the more statistically significant a set of data is. For example, given that set A has a p-value of 0.1 and set B has a p-value of 0.05, set B is said to be more statistically significant than set A, as there is only a 5% chance that its result could occur by chance or coincidence, compared with 10% for set A. The typical level of significance is 5%, that is, p-value ≤ 0.05. The significance test is done prior to classification.

3.4.1 STUDENT’S T-TEST

Student’s t-test deals with the problems associated with inference based on “small” samples[46]. When independent samples are available from each population, the procedure is often known as the independent samples t-test and the test statistic is:

$$t = \frac{\bar{x}_1 - \bar{x}_2}{s\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

where $\bar{x}_1$ and $\bar{x}_2$ are the means of samples of size $n_1$ and $n_2$ taken from each population[5], and $s$ is the pooled standard deviation of the two samples.
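The test statistic can be computed directly from two samples, as in the Python sketch below. It assumes the pooled standard deviation form of the independent samples t-test; the function name and the sample values are made up for illustration and are not project data.

```python
import math


def pooled_t_statistic(sample1, sample2):
    """Independent samples t statistic using the pooled standard deviation s."""
    n1, n2 = len(sample1), len(sample2)
    mean1 = sum(sample1) / n1
    mean2 = sum(sample2) / n2

    # Sums of squared deviations from each sample mean.
    ss1 = sum((x - mean1) ** 2 for x in sample1)
    ss2 = sum((x - mean2) ** 2 for x in sample2)

    pooled_sd = math.sqrt((ss1 + ss2) / (n1 + n2 - 2))
    return (mean1 - mean2) / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))


# Hypothetical feature values for two small groups.
t_value = pooled_t_statistic([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

The p-value is then obtained by comparing the statistic with the t distribution with n1 + n2 - 2 degrees of freedom, a step normally left to a statistics package.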

The areas of the blood vessels, microaneurysms and exudates, together with the mean, standard deviation, third moment and entropy, are passed to Student’s t-test to generate the significance test results. Appendix A shows the box plots for the various features (area) with high, median and low values. Table 3.4.1 shows the p-values of each set of features (area). The highlighted (yellow) rows indicate that the data is statistically significant. Therefore, only the statistically significant sets of data are used in the classification (i.e. blood vessels, microaneurysms, mean and third moment).

After selecting the features, the data is normalized prior to classification. Normalization is done by dividing each value of a particular feature by the highest value of that feature. This ensures that each value is greater than 0 and at most 1, which improves the classification because the data is less widely spread.
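The normalization step amounts to one division per value, as the Python sketch below shows. The function name and the numbers are hypothetical, and the sketch assumes that the highest value of the feature is positive.

```python
def normalise_by_max(values):
    """Divide every value of a feature by the highest value of that feature."""
    peak = max(values)
    return [value / peak for value in values]


# Hypothetical areas for one feature across three images.
normalised = normalise_by_max([2.0, 4.0, 8.0])
```

The largest value maps to 1 and all other values are scaled in proportion.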

| Features | Normal (Mean ± Standard Deviation) | Abnormal (Mean ± Standard Deviation) | P-Value |
|---|---|---|---|
| Blood Vessels | 31170 ± 7989 | 35950 ± 10430 | 0.051 |
| Exudates | 1909 ± 1224 | 1477 ± 957 | 0.13 |
| Microaneurysms | 330 ± 238 | 884 ± 564 | <0.0001 |
| Textures: Mean | 74.1 ± 17.0 | 83.3 ± 21.4 | 0.072 |
| Textures: Standard Deviation | 37.0 ± 6.42 | 39.5 ± 8.36 | 0.20 |
| Textures: Third Moment | 0.139 ± 0.609 | -0.400 ± 0.389 | 0.0001 |
| Textures: Entropy | 4.04 ± 0.320 | 4.13 ± 0.305 | 0.30 |

Table 3.4.1: Student’s t-test results

3.5

CLASSIFICATION

For this project, Fuzzy and Gaussian Mixture Model (GMM) classifiers are used for automatic classification of diabetic retinopathy (DR). There are 42 training samples and 18 testing samples. Figure 3.5 shows the block diagram of the training and testing data processing prior to input to the classifier. Normalized data is first split into 70% and 30%. Step I consists of 70% of normal and abnormal data and 30% of normal and abnormal data. They are then grouped into step III. Train1 and test1 are then further split into sets A, B, C and D (step IV). They are then mixed and split into train2, test2 and train3, test3 (step V). Lastly, the training and testing data are exported to MATLAB as variables to load into the classifier.

[Block diagram, steps I to V: normalized data is split 70% / 30% into normal and abnormal groups; train1 = A (30%), B (30%), D (10%) with test1 = C (30%); train2 = C (30%), B (30%), D (10%) with test2 = A (30%); train3 = C (30%), A (30%), D (10%) with test3 = B (30%); each split is shown for both normal and abnormal data]

Figure 3.5: Block diagram of training and testing data


3.5.1 FUZZY

A fuzzy classifier is any classifier which uses fuzzy sets either during its training or

during its operation[29].

Fuzzy pattern recognition is sometimes identified with fuzzy

clustering or with fuzzy if-then systems used as classifiers[29].

In a fuzzy classification system, a case or an object can be classified by applying a set of fuzzy rules based on the linguistic values of its attributes. Every rule has a weight, which is a number between 0 and 1, and this is applied to the number given by the antecedent. Rule evaluation involves two distinct parts. The first part involves evaluating the antecedent, fuzzifying the input and applying any necessary fuzzy operators[40] such as union: $\mu_{A \cup B}(x) = \max[\mu_A(x), \mu_B(x)]$, intersection: $\mu_{A \cap B}(x) = \min[\mu_A(x), \mu_B(x)]$ and complement: $\mu_{\bar{A}}(x) = 1 - \mu_A(x)$, where $\mu$ is the membership function[40]. The second part requires application of that result to the consequent, known as inference. A fuzzy inference system is a rule-based system that uses fuzzy logic, rather than Boolean logic, to reason about data[40]. Fuzzy Logic (FL) is a multivalued logic that allows intermediate values to be defined between conventional evaluations like true/false, yes/no, high/low, etc[30]. These fuzzy rules define the connection between input and output fuzzy variables[40].
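The three standard fuzzy operators are simple to express in code. The Python sketch below applies them to membership degrees between 0 and 1; the function names and the example degrees are hypothetical and are not taken from the project's fuzzy rule base.

```python
def fuzzy_union(mu_a, mu_b):
    """Membership in A OR B: the larger of the two degrees."""
    return max(mu_a, mu_b)


def fuzzy_intersection(mu_a, mu_b):
    """Membership in A AND B: the smaller of the two degrees."""
    return min(mu_a, mu_b)


def fuzzy_complement(mu_a):
    """Membership in NOT A."""
    return 1 - mu_a


# Hypothetical degrees of membership for one input value.
mu_high_area = 0.25
mu_high_skew = 0.75

either = fuzzy_union(mu_high_area, mu_high_skew)
both = fuzzy_intersection(mu_high_area, mu_high_skew)
not_high_area = fuzzy_complement(mu_high_area)
```

With Boolean inputs of exactly 0 or 1 these operators reduce to the ordinary OR, AND and NOT.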

Table 3.5.1a shows the output of the 3 sets of testing data from the fuzzy classifier. For nos 1-9 (normal data), the correct output is [0, 1] (true positives), so an output of [1, 0] (false positives) is incorrect and is marked as an error. Likewise, for nos 10-18 (abnormal data), the correct output is [1, 0] (true negatives), so an output of [0, 1] (false negatives) is incorrect and is marked as an error. Label 1 denotes normal data and label 2 denotes abnormal data. The correct labeling should be 1 for nos 1-9 and 2 for nos 10-18.

Tables 3.5.1b-d show the fuzzy testing data used to calculate positive predictive value, negative predictive value, sensitivity and specificity. TP denotes true positives, TN denotes true negatives, FP denotes false positives and FN denotes false negatives. The following formulas are used[43]:

$$\text{Specificity} = \frac{\text{number of true negatives}}{\text{number of true negatives} + \text{number of false positives}} \times 100\%$$

$$\text{Sensitivity} = \frac{\text{number of true positives}}{\text{number of true positives} + \text{number of false negatives}} \times 100\%$$

A specificity of 100% means that the test recognizes all actual negatives[43] and a sensitivity of 100% means that the test recognizes all actual positives[43]. Positive predictive value denotes the proportion of positive test results which are correctly diagnosed, and negative predictive value denotes the proportion of negative test results which are correctly diagnosed.
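The four measures follow directly from the TP, FP, FN and TN counts, as in the Python sketch below. The function name is hypothetical; the counts in the example are those reported for testing1 in Table 3.5.1b.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Return the four measures as percentages."""
    return {
        "ppv": 100 * tp / (tp + fp),          # positive predictive value
        "npv": 100 * tn / (tn + fn),          # negative predictive value
        "sensitivity": 100 * tp / (tp + fn),
        "specificity": 100 * tn / (tn + fp),
    }


# Counts reported for testing1 with the fuzzy classifier.
testing1 = diagnostic_metrics(tp=5, fp=4, fn=1, tn=8)
rounded = {name: round(value, 1) for name, value in testing1.items()}
```

Rounded to one decimal place, these counts give 55.6%, 88.9%, 83.3% and 66.7% respectively.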

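| No | Fuzzy comparing testing1 | Label | Error | Fuzzy comparing testing2 | Label | Error | Fuzzy comparing testing3 | Label | Error |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 1 | 1 | | 0 1 | 1 | | 0 1 | 1 | |
| 2 | 0 1 | 1 | | 0 1 | 1 | | 0 1 | 1 | |
| 3 | 1 0 | 2 | Error | 0 1 | 1 | | 0 1 | 1 | |
| 4 | 0 1 | 1 | | 0 1 | 1 | | 0 1 | 1 | |
| 5 | 0 1 | 1 | | 1 0 | 2 | Error | 0 1 | 1 | |
| 6 | 1 0 | 2 | Error | 0 1 | 1 | | 1 0 | 2 | Error |
| 7 | 0 1 | 1 | | 0 1 | 1 | | 0 1 | 1 | |
| 8 | 1 0 | 2 | Error | 0 1 | 1 | | 0 1 | 1 | |
| 9 | 1 0 | 2 | Error | 0 1 | 1 | | 0 1 | 1 | |
| 10 | 1 0 | 2 | | 1 0 | 2 | | 1 0 | 2 | |
| 11 | 1 0 | 2 | | 1 0 | 2 | | 1 0 | 2 | |
| 12 | 1 0 | 2 | | 1 0 | 2 | | 1 0 | 2 | |
| 13 | 1 0 | 2 | | 1 0 | 2 | | 1 0 | 2 | |
| 14 | 0 1 | 1 | Error | 1 0 | 2 | | 1 0 | 2 | |
| 15 | 1 0 | 2 | | 0 1 | 1 | Error | 1 0 | 2 | |
| 16 | 1 0 | 2 | | 1 0 | 2 | | 0 1 | 1 | Error |
| 17 | 1 0 | 2 | | 1 0 | 2 | | 1 0 | 2 | |
| 18 | 1 0 | 2 | | 1 0 | 2 | | 0 1 | 1 | Error |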

Table 3.5.1a: testing1, testing2 and testing3 data output using fuzzy classifier


Fuzzy comparing testing1

| | POSITIVE | NEGATIVE | |
|---|---|---|---|
| POSITIVE | TP = 5 | FP = 4 | Positive predictive value = TP / (TP + FP) = 5 / (5 + 4) = 5 / 9; 5 / 9 * 100 = 55.6% |
| NEGATIVE | FN = 1 | TN = 8 | Negative predictive value = TN / (FN + TN) = 8 / (1 + 8) = 8 / 9; 8 / 9 * 100 = 88.9% |
| | Sensitivity = TP / (TP + FN) = 5 / (5 + 1) = 5 / 6; 5 / 6 * 100 = 83.3% | Specificity = TN / (FP + TN) = 8 / (4 + 8) = 8 / 12; 8 / 12 * 100 = 66.7% | |

Table 3.5.1b: testing1 data output calculation using fuzzy classifier

Fuzzy comparing testing2

| | POSITIVE | NEGATIVE | |
|---|---|---|---|
| POSITIVE | TP = 8 | FP = 1 | Positive predictive value = TP / (TP + FP) = 8 / (8 + 1) = 8 / 9; 8 / 9 * 100 = 88.9% |
| NEGATIVE | FN = 1 | TN = 8 | Negative predictive value = TN / (FN + TN) = 8 / (1 + 8) = 8 / 9; 8 / 9 * 100 = 88.9% |
| | Sensitivity = TP / (TP + FN) = 8 / (8 + 1) = 8 / 9; 8 / 9 * 100 = 88.9% | Specificity = TN / (FP + TN) = 8 / (1 + 8) = 8 / 9; 8 / 9 * 100 = 88.9% | |

Table 3.5.1c: testing2 data output calculation using fuzzy classifier


| Fuzzy comparing testing3 | POSITIVE | NEGATIVE | |
|---|---|---|---|
| POSITIVE | TP = 8 | FP = 1 | Positive predictive value = TP / (TP + FP) = 8 / (8 + 1) = 8 / 9; 8 / 9 * 100 = 88.9% |
| NEGATIVE | FN = 2 | TN = 7 | Negative predictive value = TN / (FN + TN) = 7 / (2 + 7) = 7 / 9; 7 / 9 * 100 = 77.8% |
| | Sensitivity = TP / (TP + FN) = 8 / (8 + 2) = 8 / 10; 8 / 10 * 100 = 80% | Specificity = TN / (FP + TN) = 7 / (1 + 7) = 7 / 8; 7 / 8 * 100 = 87.5% | |

Table 3.5.1d: testing3 data output calculation using fuzzy classifier

3.5.2 GAUSSIAN MIXTURE MODEL (GMM)

A Gaussian Mixture Model (GMM) is a parametric probability density function represented as a weighted sum of Gaussian component densities. GMMs are commonly used as a parametric model of the probability distribution of continuous measurements or features in a biometric system[11]. A GMM is a weighted sum of $M$ component Gaussian densities as given by the equation:

$$p(x \mid \lambda) = \sum_{i=1}^{M} w_i \, g(x \mid \mu_i, \Sigma_i)$$

where $x$ is a $D$-dimensional continuous-valued data vector, $w_i$, $i = 1, \dots, M$, are the mixture weights, and $g(x \mid \mu_i, \Sigma_i)$, $i = 1, \dots, M$, are the component Gaussian densities. Each component density is a $D$-variate Gaussian function of the form:

$$g(x \mid \mu_i, \Sigma_i) = \frac{1}{(2\pi)^{D/2} \, |\Sigma_i|^{1/2}} \exp\left\{ -\frac{1}{2} (x - \mu_i)' \, \Sigma_i^{-1} (x - \mu_i) \right\}$$

with mean vector $\mu_i$ and covariance matrix $\Sigma_i$. The mixture weights satisfy the constraint that $\sum_{i=1}^{M} w_i = 1$[11].
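The mixture density above can be evaluated directly with NumPy. The following is a minimal sketch under stated assumptions, not the implementation used in this project: the function names `gaussian_density` and `gmm_density` and all parameter values in the example are hypothetical and chosen only for illustration.

```python
import numpy as np


def gaussian_density(x, mean, cov):
    """D-variate Gaussian density g(x | mu, Sigma)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    mean = np.atleast_1d(np.asarray(mean, dtype=float))
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    d = x.size
    diff = x - mean
    # Normalising constant (2*pi)^(D/2) * |Sigma|^(1/2).
    norm = (2.0 * np.pi) ** (d / 2.0) * np.sqrt(np.linalg.det(cov))
    # Quadratic form (x - mu)' Sigma^{-1} (x - mu), without forming the inverse.
    quad = diff @ np.linalg.solve(cov, diff)
    return float(np.exp(-0.5 * quad) / norm)


def gmm_density(x, weights, means, covs):
    """Weighted sum of M component Gaussian densities, p(x | lambda)."""
    weights = np.asarray(weights, dtype=float)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("mixture weights must sum to 1")
    return float(sum(w * gaussian_density(x, m, c)
                     for w, m, c in zip(weights, means, covs)))


# Hypothetical two-component mixture in D = 2 dimensions.
weights = [0.6, 0.4]
means = [[0.0, 0.0], [3.0, 3.0]]
covs = [np.eye(2), 2.0 * np.eye(2)]
p = gmm_density([1.0, 1.0], weights, means, covs)
print(p)
```

In a two-class setting, one such mixture could be fitted per class and a test vector assigned to the class whose mixture gives the larger density; whether the project's classifier follows exactly this scheme is not stated here.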

Figure 3.5.2 shows the GMM classification method. Table 3.5.2a shows the output of the three sets of testing data from the GMM classifier. The column "No of incorrect normal data" denotes false positives; there are 2 incorrect normal data in testing1. The column "No of incorrect abnormal data" denotes false negatives; there are 3 incorrect abnormal data in each of testing1 and testing3, 6 in total. The classification rate denotes the percentage of data classified correctly. The higher the classification rate, the higher the accuracy.

Tables 3.5.2b-d show the GMM testing data used to calculate the positive predictive value, negative predictive value, sensitivity and specificity. TP denotes true positives, TN denotes true negatives, FP denotes false positives and FN denotes false negatives. Specificity and sensitivity are calculated using the formulas[43]:

$$\text{Specificity} = \frac{\text{number of true negatives}}{\text{number of true negatives} + \text{number of false positives}} \times 100\%$$

$$\text{Sensitivity} = \frac{\text{number of true positives}}{\text{number of true positives} + \text{number of false negatives}} \times 100\%$$

A specificity of 100% means that the test recognizes all actual negatives[43], and a sensitivity of 100% means that the test recognizes all actual positives[43]. Positive predictive value denotes the proportion of positive test results that are correctly diagnosed, and negative predictive value denotes the proportion of negative test results that are correctly diagnosed.

[Block diagram labels: Normalized data, Train, GMM, Test, Classifier, Output]

Figure 3.5.2: Block diagram of GMM method


| | No of correct normal data | No of incorrect normal data | No of correct abnormal data | No of incorrect abnormal data | Classification rate |
|---|---|---|---|---|---|
| GMM comparing testing1 | 7 | 2 | 6 | 3 | 72.2% |
| GMM comparing testing2 | 9 | 0 | 9 | 0 | 100% |
| GMM comparing testing3 | 9 | 0 | 6 | 3 | 83.3% |
| Average classification rate | | | | | (testing1 + testing2 + testing3) / 3 = 85.2% |

Table 3.5.2a: testing1, testing2 and testing3 data output using GMM classifier
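The classification rates in Table 3.5.2a follow from the four counts in each row: the number of correctly classified samples divided by the total number of samples. A short sketch of that arithmetic is given below; the function name `classification_rate` is a hypothetical choice, and the counts are copied from the table.

```python
# (correct normal, incorrect normal, correct abnormal, incorrect abnormal)
# for each test set, copied from Table 3.5.2a.
results = {
    "testing1": (7, 2, 6, 3),
    "testing2": (9, 0, 9, 0),
    "testing3": (9, 0, 6, 3),
}


def classification_rate(correct_normal, incorrect_normal,
                        correct_abnormal, incorrect_abnormal):
    """Percentage of samples that were classified correctly."""
    total = (correct_normal + incorrect_normal
             + correct_abnormal + incorrect_abnormal)
    return 100.0 * (correct_normal + correct_abnormal) / total


rates = {name: classification_rate(*counts) for name, counts in results.items()}
average = sum(rates.values()) / len(rates)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
print(f"average: {average:.1f}%")
```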

| GMM comparing testing1 | POSITIVE | NEGATIVE | |
|---|---|---|---|
| POSITIVE | TP = 7 | FP = 2 | Positive predictive value = TP / (TP + FP) = 7 / (7 + 2) = 7 / 9; 7 / 9 * 100 = 77.8% |
| NEGATIVE | FN = 3 | TN = 6 | Negative predictive value = TN / (FN + TN) = 6 / (3 + 6) = 6 / 9; 6 / 9 * 100 = 66.7% |
| | Sensitivity = TP / (TP + FN) = 7 / (7 + 3) = 7 / 10; 7 / 10 * 100 = 70% | Specificity = TN / (FP + TN) = 6 / (2 + 6) = 6 / 8; 6 / 8 * 100 = 75% | |

Table 3.5.2b: testing1 data output calculation using GMM classifier


| GMM comparing testing2 | POSITIVE | NEGATIVE | |
|---|---|---|---|
| POSITIVE | TP = 9 | FP = 0 | Positive predictive value = TP / (TP + FP) = 9 / (9 + 0) = 9 / 9 = 1; 9 / 9 * 100 = 100% |
| NEGATIVE | FN = 0 | TN = 9 | Negative predictive value = TN / (FN + TN) = 9 / (0 + 9) = 9 / 9 = 1; 9 / 9 * 100 = 100% |
| | Sensitivity = TP / (TP + FN) = 9 / (9 + 0) = 9 / 9 = 1; 9 / 9 * 100 = 100% | Specificity = TN / (FP + TN) = 9 / (0 + 9) = 9 / 9 = 1; 9 / 9 * 100 = 100% | |