TX/OK AETC

advertisement

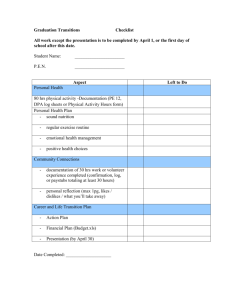

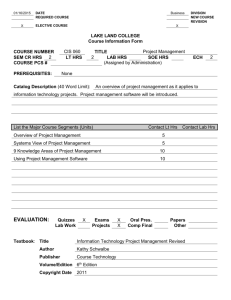

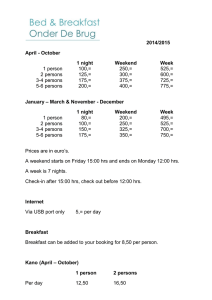

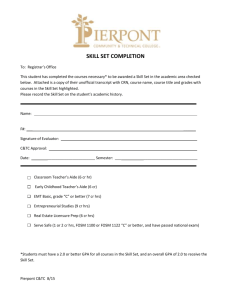

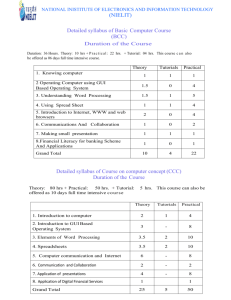

Framework for Excellence: Assessing Provider Behavior Change Resulting from AETC and Related Training Activities
Facilitator: Janet Myers, Director, AETC National Evaluation Center
July 27, 2004

Framework for Excellence: Measuring Results
Measuring results helps in:
– Refining Site Analysis
– Marketing
– Curriculum Design
– Needs Assessment
– Course Delivery and Development
– Further Measurement and Evaluation

Presenters
– Cheryl Hamill, RN, MS, ACRN & Nancy Showers, DSW (Delta Region AETC): HIVQual Results 2002-2003, Sample RW Title III Community Health Center in Mississippi
– Mari Millery, PhD (NY/NJ AETC): Lessons from Assessing Knowledge & Practice Outcomes of Level III Trainings
– Jennifer Gray, RN, PhD (TX/OK AETC, Women & HIV Symposium) & Richard Vezina, MPH (Pacific AETC, Asilomar Faculty Development Conference)
– Debbie Isenberg, MPH, CHES & Margaret Clawson, MPH (Southeast AETC): Intensive On-Site Training Evaluation: A Mixed Methods Approach
– Brad Boekeloo, PhD, ScM (NMAETC, Delta AETC): Analysis of HIV Patient-Provider Communication

Measurement and Evaluation
Why evaluate?
– To determine if the training was successful in meeting its aims (for participants and faculty)
– To decide how to change training content
– To improve the quality of training
Why measure provider behavior change?
– To determine if training has the desired effect on participants and, ultimately, on quality of care

Kirkpatrick's Model (from Kirkpatrick, Donald L. Evaluating Training Programs, 2nd edition, 1998)
Training level: key evaluation question
– Level 1, Reaction: How do participants react to the training?
– Level 2, Learning: To what extent do participants change attitudes, improve knowledge, and/or increase skill as a result of the training?
– Level 3, Behavior: To what extent do changes in behavior occur because of participation in the training?
– Level 4, Results: What are the final results (e.g., patient perception of care or outcomes of care) that occur because of participation in the training?
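The four levels can also serve as a checklist when planning an evaluation. The Python sketch below is an illustration only and is not a tool used by any presenter.

- It encodes the levels as an enum.
- It tags three of the evaluations described later in this call with the levels their presenters say they address. The instrument labels and the `uncovered_levels` helper are hypothetical.
- It then lists any level that no instrument reaches.

```python
from enum import IntEnum


class Level(IntEnum):
    """Kirkpatrick's four levels of training evaluation."""
    REACTION = 1
    LEARNING = 2
    BEHAVIOR = 3
    RESULTS = 4


# Key evaluation question for each level (abridged from Kirkpatrick's model above).
KEY_QUESTION = {
    Level.REACTION: "How do participants react to the training?",
    Level.LEARNING: "Do participants change attitudes, knowledge, or skill?",
    Level.BEHAVIOR: "Does behavior change because of the training?",
    Level.RESULTS: "What final results (e.g., outcomes of care) follow?",
}

# Hypothetical inventory: each evaluation is tagged with the levels its
# presenters report addressing in this call.
INSTRUMENTS = {
    "Women & HIV Symposium, 1-month email follow-up (JG)": {Level.BEHAVIOR},
    "Asilomar conference, Forms A and B (RV)": {Level.LEARNING, Level.BEHAVIOR},
    "Southeast AETC IOST mixed-methods study": {
        Level.REACTION,
        Level.LEARNING,
        Level.BEHAVIOR,
    },
}


def uncovered_levels(instruments: dict[str, set[Level]]) -> list[Level]:
    """Return the levels (in order 1-4) that no instrument in the inventory addresses."""
    covered: set[Level] = set()
    for levels in instruments.values():
        covered |= levels
    return [level for level in Level if level not in covered]


for level in uncovered_levels(INSTRUMENTS):
    print(f"Level {level.value} ({level.name.title()}) not measured: {KEY_QUESTION[level]}")
```

For this inventory, the sketch reports Level 4 (Results) as the only level not covered.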
The HIVQUAL Project
Nancy Showers, DSW, Delta Region AETC
– Capacity-building and organizational support for QI
– Individualized on-site consultation services:
  – Strengthen HIV-specific QI structure
  – Foster leadership support for quality
  – Guide performance measurement
  – Facilitate implementation of QI projects
  – Train HIV staff in QI methods
– Performance measurement data with comparative reports
– Partnership with HRSA to support quality management in Ryan White CARE Act community-based programs

HIVQUAL Participants, 2003
               Title III   Title IV   Total
Active             87          12        99
Independent        27           3        30
Total             114          15       129

HIVQUAL Results, 2002-2003
[Bar charts. Y-axis: percentage of patients (0-100). X-axis: year (2002, 2003). A legend lists the periods 1/1-4/30, 5/1-8/31, and 9/1-12/31. Data labels shown on each chart:]
– Annual Pap test: 65, 43
– Annual syphilis screen: 86.7, 45.5
– Hepatitis C status known: 90, 50
– Adherence discussed: 93.3, 100, 85.7, 38.5, 40, 20
– Viral load every 4 months: 56.7, 18.2
– MAC prophylaxis (CD4<50): 100, 67.7
– Annual dental exam: 60, 30.4
– Annual mental health assessment: 86.7, 18.2

Delta AIDS Education and Training Center (DRAETC)
Mississippi LPS: Training Summary Report
Reporting period: July 1, 2002 - June 30, 2003, for Targeted RW Title-Funded Community Health Centers
Cheryl Hamill, MS, RN, ACRN
Instructor of Medicine; Resource Center Director
http://hivcenter.library.umc.edu
HIV/AIDS Program, University of MS Medical Center
2500 North State Street, Jackson, MS 39216-4505

MS LPS Training Programs: Totals by Level & Discipline for Targeted RW Title III-Funded Clinic, July 2002-03
– Level I: 1 M.D. (15 hrs.)
– Level II: 8 M.D. (63 hrs.); 2 Pharm. (4 hrs.); 1 N.P. (1.25 hrs.); 7 Nurses (83 hrs.); 4 S.W. (53 hrs.); 3 C.M. (39 hrs.)
– Level III: 3 M.D. (48 hrs.)
– Level IV/ICC: 5 M.D. (10 hrs.)
– Level IV/GCC: 7 M.D. (34 hrs.)
– Nurses and dental at Levels III/IV: 5 Nurses (46 hrs.); 1 Dental (8 hrs.)
– Total: 24 M.D. (200 hrs.); 2 Pharm. (4 hrs.); 1 N.P. (1.25 hrs.); 13 Nurses (129 hrs.); 1 Dental (8 hrs.); 4 S.W. (53 hrs.); 3 C.M. (39 hrs.); 48 trainees (434 hrs.)

Lessons from Assessing Knowledge and Practice Outcomes of Level III Trainings
Mari Millery, PhD
– Decided to focus more outcome evaluation efforts on Level III because it is the most intensive and a high-priority modality, and participants can be asked to devote time to extra paperwork
– Pre-test, post-test, and 3-month follow-up surveys
– Measures:
  – Self-rating of comfort in performing clinical tasks
  – Case-based knowledge questions

Sample survey items:
1. Please rate your current level of comfort in performing the following: (Circle only one answer for each question.)
   Scale: 1 = Very low, 2 = Low, 3 = Medium, 4 = High, 5 = Very high
   – Choosing an appropriate HAART regimen: 1 2 3 4 5
   – Evaluating ongoing adherence in HIV patients: 1 2 3 4 5
   – Deciding to change HIV medications: 1 2 3 4 5
2. Mrs. Z is a 34-year-old female with HIV CDC A2 disease, CD4 300 cells/cmm and viral load 50,000 copies/ml, who presents for treatment. Which of the following is the most appropriate initial regimen?
   a) Zidovudine (AZT)/stavudine (D4T)/indinavir
   b) Didanosine (DDI)/zalcitabine (DDC)/nevirapine
   c) Zidovudine (AZT)/lamivudine (3TC)/efavirenz
   d) Stavudine (D4T)/lamivudine (3TC)/nelfinavir/ritonavir

Pilot Project Results (Oct 2002-June 2003)
[Bar chart: respondent averages across all topics/questions for Wave 1 (n = 26), Wave 2 (n = 21), and Wave 3 (n = 7). Y-axis: rating/number correct (0-7). Series: average comfort self-rating and average number of correct answers. Data labels shown: 6.3, 5.8, 4.2, 3.5, 3.5, 2.5]

Lessons Learned
– Can be done, but getting follow-up surveys back is a challenge
– Preliminary results are encouraging: self-reported practice comfort and case-based knowledge questions appear to work as measures
– Survey length must be kept to a minimum
– Dropped the knowledge questions from the post-test because it came too soon after baseline; the post-test now focuses on feedback on the training
– The nature of Level III varies (intensity/length, profession trained, topics covered, etc.)
  – Developed special versions for nurses and HepC
– 40 surveys collected with revised instruments this year; still working on getting all follow-up surveys back

Measuring Training Outcomes Through Qualitative Interviewing
TX/OK AETC Women & HIV Symposium (JG) and Asilomar Faculty Development Conference (RV)
Jennifer Gray, RN, PhD (JG), TX/OK AETC
Richard Vezina, MPH (RV), Pacific AETC

TX/OK AETC Women & HIV Symposium (JG)
– First region-wide symposium
– Multidisciplinary planning committee
– Rationale: lack of knowledge about gender-specific care
– Rationale: increased number of HIV infections among women in the region
– Symposium goal: improved care of HIV+ women

Asilomar Faculty Development Conference (RV)
– Annual region-wide training conference
– 125 participants, all PAETC faculty and program staff
– Conference goals:
  – Improved skills and knowledge among faculty/trainers
  – Improved training outcomes throughout the region as a result of staff development

Evaluation Plans
JG:
– Email to all registrants one month after the symposium
– Simple open-ended questions, suitable for all disciplines
– Identify how content was used with patients and shared with peers
RV ("post-post" design):
– Form A: self-assessment at the end of the conference, identifying the skills and content learned and the areas in which to integrate them
– Form B: 6-month follow-up, using individualized telephone interviews that review Form A and focus on how skills/content were applied and on barriers

Why these evaluation methods?
– Able to assess at multiple levels of the Kirkpatrick model:
  – Level 2 (Learning: improved knowledge) (RV)
  – Level 3 (Behavior: change in practices) (JG, RV)
– Seeking specific content regarding the conference (RV)
– Limited resources and time (JG)
– No existing tool found that met our needs (JG)

Findings
Major themes (RV):
– Identified a high need for continued skills training
– Transferred new skills/information to coworkers and employees
– Barrier to continued integration: time constraints
Major themes (JG):
– Impact on patients: 13 had taught patients information learned at the symposium; 3 had used the information for referrals
– 3 system changes, e.g., assessment forms, clinical strategies
– Shared information with others: 8 informally, 1 in a structured way, 4 created materials
– Most common topics: medication/adherence, HIV in general

Strengths & Challenges of Methods
What went well:
– Announced at end of symposium/conference (JG, RV)
– Brief instrument encouraged a higher response (JG)
– Longer instrument yielded rich responses (RV)
What's next:
– Provide incentives (JG, RV)
– Change instrument: a shorter, easier instrument for a higher response rate (RV); a longer instrument for greater depth (JG)
– More effective confirmation of contact information (JG, RV)

Intensive On-Site Training Evaluation: A Mixed Methods Approach
Debbie Isenberg, MPH, CHES
Margaret Clawson, MPH
Southeast AETC

Study Overview
Main research questions:
– Process and Impact (Reaction and Learning)
  • What was the quality of the training?
  • How well were learning objectives met?
  • What are the trainees' intentions to change their clinical practice?
– Outcome (Learning and Behavior)
  • How has the provider's experience in the clinical training program affected his/her ability (if at all) to provide quality HIV care to PLWH?

Study Protocol
– Phase One: post-training CQI form completed by participants
– Phase Two: recruitment packets mailed 3 months after the last IOST; research staff contact potential participants 1 week later for an interview
– Phase Three: reminder letter for the 2nd interview sent 9 months after the initial interview (12 months in total after the IOST); research staff contact participants 1 week later for the interview

Content: Phases Two and Three
– Written demographic assessment (PIF+)
– Semi-structured phone interview (tape-recorded)
  – Quantitative: participant is asked to rate the effect of training in each specific training area
  – Qualitative: participant is asked to give concrete examples of how training has affected their skills in the clinical area
  – If no effect is reported, participants are asked for more explanation

Strengths and Challenges
Strengths:
– Quantify and qualify
– Flexible study design
– Addresses Reaction, Learning, and Behavior stages
– Provides ongoing training and trainer feedback
Challenges:
– Timely follow-up
– Getting forms back
– Participants' recall
– Staff turnover

Lessons Learned
– Think about what motivates the training audience to participate in the study when deciding on a study design
– Develop the protocol to lower the respondent's form and time burden
– Don't be afraid to change the protocol midway through the study if it is not working
– Consider the resources you have to collect and analyze the data when choosing a study design

Analysis of HIV Patient-Provider Communication
Bradley O. Boekeloo, Ph.D., Sc.M., University of Maryland
Grant #6 H4A HA 00066-02-01 from the National Minority AIDS Education and Training Center, Health Resources and Services Administration

Methods
– Providers randomized (n=8): brief cultural competency training vs. none
– Audiotapes of HIV visits (n=24): 3 patient visits tape-recorded per physician; tapes transcribed
– Patient exit questionnaire (n=24): the interviewer read the questions, and the patient answered on an answer form

RESULTS: Randomized Trial, Audiotape Observations (mean ± S.D.)
Audiotape variable            Control (n=4)   Intervention (n=4)
Patient word count            991 ± 490       1050 ± 629
Length of visit (minutes)     20 ± 8.3        20 ± 7.2

RESULTS: Randomized Trial, Exit Interview Observations (mean ± S.D.; 1 = Very uncomfortable, 4 = Very comfortable)
Exit interview variable                Control (n=4)   Intervention (n=4)
Comfort talking to Dr. about sex       3.3 ± .7        3.6 ± .7
Comfort talking about substance use    3.5 ± .5        3.3 ± 1.0
Comfort talking about medication       3.6 ± .9        3.7 ± .9

Hypotheses Based on Exploratory Data, and Next Steps
– Brief intervention was not enough to produce change
– Patients may be more comfortable discussing medical therapy than personal risk behaviors
– Try to determine whether different types of communication on the audiotapes account for differences in patients' comfort communicating with the physician
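One common way to check whether group differences like those in the exit-interview table exceed chance is Welch's two-sample t statistic. It can be computed from the reported means, standard deviations, and group sizes. The sketch below is illustrative only and is not the analysis the presenter reports.

- The `welch_t` helper is hypothetical.
- It treats the n shown in each column as independent observations.
- With 3 patients recorded per physician, a real analysis would also need to account for patients being clustered within physicians.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return Welch's t statistic (group b minus group a) and the
    Welch-Satterthwaite degrees of freedom, from summary statistics only."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group a's mean
    var_b = sd_b ** 2 / n_b  # squared standard error of group b's mean
    t = (mean_b - mean_a) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1))
    return t, df


# Comfort talking to Dr. about sex: control 3.3 ± .7 vs. intervention 3.6 ± .7, n = 4 per group
t, df = welch_t(3.3, 0.7, 4, 3.6, 0.7, 4)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Here t is about 0.61 on about 6 degrees of freedom, far below the roughly 2.45 needed for two-sided significance at the 0.05 level. That is in line with the hypothesis that the brief intervention alone was not enough. With only four physicians per arm, the trial also has little power to detect modest effects.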
Presenter Contact Information
– AETC National Evaluation Center: Janet Myers, PhD, MPH, Director; 415-597-8168; jmyers@psg.ucsf.edu
– Delta Region AETC: Cheryl Hamill, RN, MS, ACRN; 601-984-5552; chamill@medicine.umsmed.edu
– Delta Region AETC: Nancy Showers, DSW; 732-603-9681; njshowers@aol.com
– NY/NJ AETC: Mari Millery, PhD; 212-305-0409; mm994@columbia.edu
– TX/OK AETC: Jennifer Gray, RN, PhD; 817-272-2776; jgray@uta.edu
– Pacific AETC: Richard Vezina, MPH; 415-597-9186; rvezina@psg.ucsf.edu
– Southeast AETC: Debbie Isenberg, MPH, CHES; 404-727-2931; disenbe@emory.edu
– Southeast AETC: Margaret Clawson, MPH; 404-712-8448; mclawso@emory.edu
– NMAETC, Delta AETC: Brad Boekeloo, PhD, ScM; 301-405-8546; bb153@umail.umd.edu
ASSESS materials available at www.socio.com

Conference Call Evaluation
Call 8: July 27, 2004
http://www.ihi.org/feedback/survey.asp?surveycode=AETCCall072704
Survey Code: AETCCall072704
For assistance, contact Lorna Macdonald at lmacdonald@ihi.org