Ch04


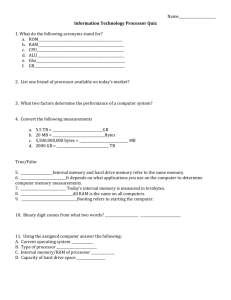

Chapter 4: The Processor

§4.1 Introduction

Introduction
- CPU performance factors
  - Instruction count: determined by ISA and compiler
  - CPI and cycle time: determined by CPU hardware
- Two MIPS implementations
  - A simplified version
  - A more realistic pipelined version
- Simple subset, shows most aspects
  - Memory reference: lw, sw
  - Arithmetic/logical: add, sub, and, or, slt
  - Control transfer: beq, j

Instruction Execution
- PC → instruction memory, fetch instruction
- Register numbers → register file, read registers
- Depending on instruction class
  - Use ALU to calculate
    - Arithmetic result
    - Memory address for load/store
    - Branch target address
  - Access data memory for load/store
  - PC ← target address or PC + 4

CPU Overview (figure)

Multiplexers
- Can't just join wires together
  - Use multiplexers
- Figure labels: program counter, instruction memory, register file, data memory

Control (figure)

§4.2 Logic Design Conventions

Logic Design Basics
- Information encoded in binary
  - Low voltage = 0, high voltage = 1
  - One wire per bit
  - Multi-bit data encoded on multi-wire buses
- Combinational element
  - Operate on data
  - Output is a function of input
- State (sequential) elements
  - Store information

Combinational Elements
- AND gate: Y = A & B
- Adder: Y = A + B
- Multiplexer: Y = S ? I1 : I0
- Arithmetic/Logic Unit: Y = F(A, B)

Sequential Elements
- Register: stores data in a circuit
  - Uses a clock signal to determine when to update the stored value
  - Edge-triggered: update when Clk changes from 0 to 1
- Register with write control
  - Only updates on clock edge when write control input is 1
  - Used when stored value is required later

Clocking Methodology
- Combinational logic transforms data during clock cycles
  - Between clock edges
  - Input from state elements, output to state element
  - Longest delay determines clock period

§4.3 Building a Datapath

Building a Datapath
- Datapath
  - Elements that process data and addresses in the CPU
  - Registers, ALUs, muxes, memories, …
- Build a MIPS datapath incrementally
  - Refining the overview design

Instruction Fetch
- The PC is a 32-bit register
- Increment by 4 for the next instruction (PC + 4)

R-Format Instructions
- Read two register operands
- Perform arithmetic/logical operation
- Write register result
- The 5-bit register-number inputs select among 2^5 = 32 registers

Load/Store Instructions
- Read register operands
- Calculate address using 16-bit offset
  - Use ALU, but sign-extend offset
- Load: read memory and update register
- Store: write register value to memory

Branch Instructions
- Read register operands
- Compare operands
  - Use ALU, subtract and check Zero output
- Calculate target address
  - Sign-extend displacement
  - Shift left 2 places (word displacement)
  - Add to PC + 4
    - Already calculated by instruction fetch

Branch Instructions (figure)
- Shift left 2: just re-routes wires
- Sign extend: sign-bit wire replicated

Composing the Elements
- First-cut datapath does an instruction in one clock cycle
  - Each datapath element can only do one function at a time
  - Hence, we need separate instruction and data memories
- Use multiplexers where alternate data sources are used for different instructions

R-Type/Load/Store Datapath (figure)

    add  $t1, $t1, $s6
    addi $s3, $s3, 1
    lw   $t0, 32($s3)
    sw   $t0, 48($s3)

Full Datapath (figure, same four example instructions)

§4.4 A Simple Implementation Scheme

ALU Control
- ALU used for
  - Load/Store: F = add
  - Branch: F = subtract
  - R-type: F depends on funct field

| ALU control | Function |
|---|---|
| 0000 | AND |
| 0001 | OR |
| 0010 | add |
| 0110 | subtract |
| 0111 | set-on-less-than |
| 1100 | NOR |

ALU Control
- Assume 2-bit ALUOp derived from opcode
  - Combinational logic derives ALU control
- The funct field only matters for R-type instructions

| opcode | ALUOp | Operation | funct | ALU function | ALU control |
|---|---|---|---|---|---|
| lw | 00 | load word | XXXXXX | add | 0010 |
| sw | 00 | store word | XXXXXX | add | 0010 |
| beq | 01 | branch equal | XXXXXX | subtract | 0110 |
| R-type | 10 | add | 100000 | add | 0010 |
| R-type | 10 | subtract | 100010 | subtract | 0110 |
| R-type | 10 | AND | 100100 | AND | 0000 |
| R-type | 10 | OR | 100101 | OR | 0001 |
| R-type | 10 | set-on-less-than | 101010 | set-on-less-than | 0111 |

The Main Control Unit
- Control signals derived from instruction

| Format | 31:26 | 25:21 | 20:16 | 15:11 | 10:6 | 5:0 |
|---|---|---|---|---|---|---|
| R-type | 0 | rs | rt | rd | shamt | funct |
| Load/Store | 35 or 43 | rs | rt | address (15:0) | | |
| Branch | 4 | rs | rt | address (15:0) | | |

- Bits 31:26: opcode
- rs: always read
- rt: read, except for load
- rd (R-type) or rt (load): written
- address: sign-extend and add

Datapath With Control (figure)
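The ALU control table can be read as a small combinational function of ALUOp and funct. The following is a minimal sketch under that reading; the function and constant names (`alu_control`, `FUNCT_TO_ALU`) are illustrative and not from the slides, while the bit patterns are the ones listed in the table.

```python
# Hypothetical names; bit patterns are taken from the ALU control table.
ALU_AND, ALU_OR, ALU_ADD, ALU_SUB, ALU_SLT = 0b0000, 0b0001, 0b0010, 0b0110, 0b0111

# funct field (R-type only) -> 4-bit ALU control
FUNCT_TO_ALU = {
    0b100000: ALU_ADD,   # add
    0b100010: ALU_SUB,   # subtract
    0b100100: ALU_AND,   # AND
    0b100101: ALU_OR,    # OR
    0b101010: ALU_SLT,   # set-on-less-than
}


def alu_control(alu_op: int, funct: int = 0) -> int:
    """Return the 4-bit ALU control value for a 2-bit ALUOp and 6-bit funct."""
    if alu_op == 0b00:            # lw / sw: compute base + offset
        return ALU_ADD
    if alu_op == 0b01:            # beq: subtract, then test the Zero output
        return ALU_SUB
    if alu_op == 0b10:            # R-type: funct selects the operation
        return FUNCT_TO_ALU[funct]
    raise ValueError(f"unsupported ALUOp {alu_op:02b}")


if __name__ == "__main__":
    for name, op, funct in [("lw", 0b00, 0), ("beq", 0b01, 0), ("slt", 0b10, 0b101010)]:
        print(f"{name}: {alu_control(op, funct):04b}")
```

For lw, sw, and beq the funct argument is ignored, which mirrors the XXXXXX (don't care) entries in the table.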
R-Type Instruction (figure)
- Example: add $t1, $t2, $t3
- Execution steps
  1. Fetch the instruction and increment the PC by 4.
  2. Read $t2 and $t3 from the register file; at the same time, the control unit decodes the instruction and computes the control signal values.
  3. The ALU operates on $t2 and $t3 according to the funct field.
  4. The ALU result is written to the destination register.

Load Instruction (figure)
- Example: lw $t0, 32($s3), encoded as 35 | 19 | 8 | 32 (opcode 31:26, rs 25:21, rt 20:16, address 15:0)
- Execution steps
  1. Fetch the instruction and increment the PC by 4.
  2. Read $s3 from the register file.
  3. The ALU adds $s3 and the sign-extended offset 32.
  4. The ALU result is the address presented to data memory.
  5. The value returned by data memory is written to the register file ($t0).

Branch-on-Equal Instruction (figure)
- Example: beq $s1, $s2, 100, encoded as 4 | 17 | 18 | 25 (opcode 31:26, rs 25:21, rt 20:16, address 15:0)
- Execution steps
  1. Fetch the instruction and increment the PC by 4.
  2. Read $s1 and $s2 from the register file.
  3. The ALU subtracts the two operands; in parallel, a separate adder adds PC + 4 to the sign-extended offset shifted left by 2, giving the branch target address.
  4. The ALU Zero output selects which adder result is written back to the PC.

Implementing Jumps
- Jump format: 2 (bits 31:26) | address (bits 25:0)
- Jump uses word address
- Update PC with concatenation of
  - Top 4 bits of old PC
  - 26-bit jump address
  - 00 (binary)
- Need an extra control signal decoded from opcode

Datapath With Jumps Added (figure)

Performance Issues
- Longest delay determines clock period
  - Critical path: load instruction
  - Instruction memory → register file → ALU → data memory → register file
- Not feasible to vary period for different instructions
- Violates design principle
  - Making the common case fast
- We will improve performance by pipelining

§4.5 An Overview of Pipelining

Pipelining Analogy
- Pipelined laundry: overlapping execution
  - Parallelism improves performance
- Four loads:
  - Speedup = 8/3.5 = 2.3
- Non-stop:
  - Speedup = 2n/(0.5n + 1.5) ≈ 4 = number of stages

MIPS Pipeline
- Definition of pipelining: an instruction is divided into several steps that are executed concurrently by different functional units, improving overall program performance
- Five stages, one step per stage
  1. IF: Instruction fetch from memory
  2. ID: Instruction decode & register read
  3. EX: Execute operation or calculate address
  4. MEM: Access memory operand
  5. WB: Write result back to register

Pipeline Performance
- Assume time for stages is
  - 100ps for register read or write
  - 200ps for other stages
- Compare pipelined datapath with single-cycle datapath

| Instr | Instr fetch | Register read | ALU op | Memory access | Register write | Total time |
|---|---|---|---|---|---|---|
| lw | 200ps | 100ps | 200ps | 200ps | 100ps | 800ps |
| sw | 200ps | 100ps | 200ps | 200ps | | 700ps |
| R-format | 200ps | 100ps | 200ps | | 100ps | 600ps |
| beq | 200ps | 100ps | 200ps | | | 500ps |

Pipeline Performance (figure)
- Single-cycle (Tc = 800ps)
- Pipelined (Tc = 200ps)

Pipeline Speedup
- If all stages are balanced
  - i.e., all take the same time
  - Time between instructions (pipelined) = Time between instructions (nonpipelined) / Number of stages
- If not balanced, speedup is less
- Speedup due to increased throughput
  - Latency (time for each instruction) does not decrease

Pipelining and ISA Design
- MIPS ISA designed for pipelining
  - All instructions are 32 bits
    - Easier to fetch and decode in one cycle
    - c.f. x86: 1- to 17-byte instructions
  - Few and regular instruction formats
    - Can decode and read registers in one step
  - Load/store addressing
    - Can calculate address in 3rd stage, access memory in 4th stage
  - Alignment of memory operands
    - Memory access takes only one cycle

Hazards
- Situations that prevent starting the next instruction in the next cycle
- Structure hazards
  - A required resource is busy
- Data hazard
  - Need to wait for previous instruction to complete its data read/write
- Control hazard
  - Deciding on control action depends on previous instruction

Structure Hazards
- Conflict for use of a resource
- In MIPS pipeline with a single memory
  - Load/store requires data access
  - Instruction fetch would have to stall for that cycle
    - Would cause a pipeline "bubble"
- Hence, pipelined datapaths require separate instruction/data memories
  - Or separate instruction/data caches

Data Hazards
- An instruction depends on completion of data access by a previous instruction

      add $s0, $t0, $t1
      sub $t2, $s0, $t3

Forwarding (aka Bypassing)
- Use result when it is computed
  - Don't wait for it to be stored in a register
  - Requires extra connections in the datapath

Load-Use Data Hazard
- Can't always avoid stalls by forwarding
  - If value not computed when needed
  - Can't forward backward in time!
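The load-use rule can be made concrete with a small stall counter. This is a sketch under stated assumptions, not the textbook's hazard unit: it assumes a 5-stage pipeline with full forwarding, charges exactly one bubble whenever an instruction reads a register loaded by the immediately preceding lw, and ignores all other hazards. The `Instr` type and function names are hypothetical, and the two instruction sequences are illustrative.

```python
from typing import NamedTuple, Optional, Sequence, Tuple


class Instr(NamedTuple):
    op: str                    # mnemonic, e.g. "lw", "add", "sw"
    dest: Optional[str]        # register written, or None (e.g. sw)
    srcs: Tuple[str, ...]      # registers read


def load_use_stalls(program: Sequence[Instr]) -> int:
    """Count one bubble for each instruction that reads the result of the lw just before it."""
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        if prev.op == "lw" and prev.dest in cur.srcs:
            stalls += 1
    return stalls


def total_cycles(program: Sequence[Instr], stages: int = 5) -> int:
    """Cycles to drain the pipeline: fill time + one cycle per instruction + bubbles."""
    return (stages - 1) + len(program) + load_use_stalls(program)


original = [
    Instr("lw", "$t1", ("$t0",)),
    Instr("lw", "$t2", ("$t0",)),
    Instr("add", "$t3", ("$t1", "$t2")),   # uses $t2 right after its load
    Instr("sw", None, ("$t3", "$t0")),
    Instr("lw", "$t4", ("$t0",)),
    Instr("add", "$t5", ("$t1", "$t4")),   # uses $t4 right after its load
    Instr("sw", None, ("$t5", "$t0")),
]
# Hoist the third load so no add directly follows the load it depends on.
reordered = [original[i] for i in (0, 1, 4, 2, 3, 5, 6)]

if __name__ == "__main__":
    print(total_cycles(original), total_cycles(reordered))
```

Under these assumptions the original order takes 13 cycles and the reordered one 11, because moving the third load earlier removes both bubbles without changing the result.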
Code Scheduling to Avoid Stalls
- Reorder code to avoid use of load result in the next instruction
- C code for A = B + E; C = B + F;

Original order (13 cycles):

    lw  $t1, 0($t0)
    lw  $t2, 4($t0)
    (stall)
    add $t3, $t1, $t2
    sw  $t3, 12($t0)
    lw  $t4, 8($t0)
    (stall)
    add $t5, $t1, $t4
    sw  $t5, 16($t0)

Reordered (11 cycles):

    lw  $t1, 0($t0)
    lw  $t2, 4($t0)
    lw  $t4, 8($t0)
    add $t3, $t1, $t2
    sw  $t3, 12($t0)
    add $t5, $t1, $t4
    sw  $t5, 16($t0)

Control Hazards
- Branch determines flow of control
  - Fetching next instruction depends on branch outcome
  - Pipeline can't always fetch correct instruction
    - Still working on ID stage of branch
- In MIPS pipeline
  - Need to compare registers and compute target early in the pipeline
  - Add hardware to do it in ID stage

Stall on Branch
- Wait until branch outcome determined before fetching next instruction

Branch Prediction
- Longer pipelines can't readily determine branch outcome early
  - Stall penalty becomes unacceptable
- Predict outcome of branch
  - Only stall if prediction is wrong
- In MIPS pipeline
  - Can predict branches not taken
  - Fetch instruction after branch, with no delay

MIPS with Predict Not Taken (figure)
- Prediction correct
- Prediction incorrect

More-Realistic Branch Prediction
- Static branch prediction
  - Based on typical branch behavior
  - Example: loop and if-statement branches
    - Predict backward branches taken
    - Predict forward branches not taken
- Dynamic branch prediction
  - Hardware measures actual branch behavior
    - e.g., record recent history of each branch
  - Assume future behavior will continue the trend
    - When wrong, stall while re-fetching, and update history

Pipeline Summary (The BIG Picture)
- Pipelining improves performance by increasing instruction throughput
  - Executes multiple instructions in parallel
  - Each instruction has the same latency
- Subject to hazards
  - Structure, data, control
- Instruction set design affects complexity of pipeline implementation

§4.6 Pipelined Datapath and Control

MIPS Pipelined Datapath (figure)
- Right-to-left flow (in the MEM and WB stages) leads to hazards

Pipeline Registers
- Need registers between stages
  - To hold information produced in previous cycle

Pipeline Operation
- Cycle-by-cycle flow of instructions through the pipelined datapath
  - "Single-clock-cycle" pipeline diagram
    - Shows pipeline usage in a single cycle
    - Highlight resources used
  - c.f. "multi-clock-cycle" diagram
    - Graph of operation over time
- We'll look at "single-clock-cycle" diagrams for load & store

Single-clock-cycle diagrams (figures)
- IF for Load, Store, …
- ID for Load, Store, …
- EX for Load
- MEM for Load
- WB for Load (wrong register number)
- Corrected Datapath for Load
- EX for Store
- MEM for Store
- WB for Store

Multi-Cycle Pipeline Diagram (figure)
- Form showing resource usage

Multi-Cycle Pipeline Diagram (figure)
- Traditional form

Single-Cycle Pipeline Diagram (figure)
- State of pipeline in a given cycle

Pipelined Control (Simplified) (figure)

Pipelined Control (figure)
- Control signals derived from instruction
  - As in single-cycle implementation

§4.7 Data Hazards: Forwarding vs. Stalling

Data Hazards in ALU Instructions
- Consider this sequence:

      sub $2, $1, $3
      and $12, $2, $5
      or  $13, $6, $2
      add $14, $2, $2
      sw  $15, 100($2)

- We can resolve hazards with forwarding
  - How do we detect when to forward?

Dependencies & Forwarding (figure)

Detecting the Need to Forward
- Pass register numbers along pipeline
  - e.g., ID/EX.RegisterRs = register number for Rs sitting in ID/EX pipeline register
- ALU operand register numbers in EX stage are given by
  - ID/EX.RegisterRs, ID/EX.RegisterRt
- Data hazards when
  - 1a. EX/MEM.RegisterRd = ID/EX.RegisterRs (fwd from EX/MEM pipeline reg)
  - 1b. EX/MEM.RegisterRd = ID/EX.RegisterRt (fwd from EX/MEM pipeline reg)
  - 2a. MEM/WB.RegisterRd = ID/EX.RegisterRs (fwd from MEM/WB pipeline reg)
  - 2b. MEM/WB.RegisterRd = ID/EX.RegisterRt (fwd from MEM/WB pipeline reg)

Detecting the Need to Forward (continued)
- But only if forwarding instruction will write to a register!
  - EX/MEM.RegWrite, MEM/WB.RegWrite
- And only if Rd for that instruction is not $zero
  - EX/MEM.RegisterRd ≠ 0, MEM/WB.RegisterRd ≠ 0

Forwarding Paths (figure)

Forwarding Conditions
- EX hazard

      if (EX/MEM.RegWrite and (EX/MEM.RegisterRd ≠ 0)
          and (EX/MEM.RegisterRd = ID/EX.RegisterRs))
        ForwardA = 10
      if (EX/MEM.RegWrite and (EX/MEM.RegisterRd ≠ 0)
          and (EX/MEM.RegisterRd = ID/EX.RegisterRt))
        ForwardB = 10

- MEM hazard

      if (MEM/WB.RegWrite and (MEM/WB.RegisterRd ≠ 0)
          and (MEM/WB.RegisterRd = ID/EX.RegisterRs))
        ForwardA = 01
      if (MEM/WB.RegWrite and (MEM/WB.RegisterRd ≠ 0)
          and (MEM/WB.RegisterRd = ID/EX.RegisterRt))
        ForwardB = 01

Double Data Hazard
- Consider the sequence:

      add $1, $1, $2
      add $1, $1, $3
      add $1, $1, $4

- Both hazards occur
  - Want to use the most recent
- Revise MEM hazard condition
  - Only fwd if EX hazard condition isn't true

Revised Forwarding Condition
- MEM hazard

      if (MEM/WB.RegWrite and (MEM/WB.RegisterRd ≠ 0)
          and not (EX/MEM.RegWrite and (EX/MEM.RegisterRd ≠ 0)
                   and (EX/MEM.RegisterRd = ID/EX.RegisterRs))
          and (MEM/WB.RegisterRd = ID/EX.RegisterRs))
        ForwardA = 01
      if (MEM/WB.RegWrite and (MEM/WB.RegisterRd ≠ 0)
          and not (EX/MEM.RegWrite and (EX/MEM.RegisterRd ≠ 0)
                   and (EX/MEM.RegisterRd = ID/EX.RegisterRt))
          and (MEM/WB.RegisterRd = ID/EX.RegisterRt))
        ForwardB = 01

Datapath with Forwarding (figure)

Load-Use Data Hazard (figure)
- Need to stall for one cycle

Load-Use Hazard Detection
- Check when using instruction is decoded in ID stage
- ALU operand register numbers in ID stage are given by
  - IF/ID.RegisterRs, IF/ID.RegisterRt
- Load-use hazard when

      ID/EX.MemRead and
        ((ID/EX.RegisterRt = IF/ID.RegisterRs) or
         (ID/EX.RegisterRt = IF/ID.RegisterRt))

- If detected, stall and insert bubble

How to Stall the Pipeline
- Force control values in ID/EX register to 0
  - EX, MEM and WB do nop (no-operation)
- Prevent update of PC and IF/ID register
  - Using instruction is decoded again
  - Following instruction is fetched again
  - 1-cycle stall allows MEM to read data for lw
    - Can subsequently forward to EX stage

Stall/Bubble in the Pipeline (figure)
- Stall inserted here

Stall/Bubble in the Pipeline (figure)
- Or, more accurately…

Datapath with Hazard Detection (figure)

Stalls and Performance (The BIG Picture)
- Stalls reduce performance
  - But are required to get correct results
- Compiler can arrange code to avoid hazards and stalls
  - Requires knowledge of the pipeline structure

§4.8 Control Hazards

Branch Hazards (figure)
- If branch outcome determined in MEM
  - Flush the instructions fetched after the branch (set control values to 0)

Reducing Branch Delay
- Move hardware to determine outcome to ID stage
  - Target address adder
  - Register comparator
- Example: branch taken

      36: sub $10, $4, $8
      40: beq $1, $3, 7
      44: and $12, $2, $5
      48: or  $13, $2, $6
      52: add $14, $4, $2
      56: slt $15, $6, $7
          ...
      72: lw  $4, 50($7)

Example: Branch Taken (two figures)

Data Hazards for Branches
- If a comparison register is a destination of 2nd or 3rd preceding ALU instruction

      add $1, $2, $3        IF ID EX MEM WB
      add $4, $5, $6           IF ID EX MEM WB
      …                           IF ID EX MEM WB
      beq $1, $4, target             IF ID EX MEM WB

- Can resolve using forwarding

Data Hazards for Branches
- If a comparison register is a destination of preceding ALU instruction or 2nd preceding load instruction
  - Need 1 stall cycle

      lw  $1, addr          IF ID EX MEM WB
      add $4, $5, $6           IF ID EX MEM WB
      beq stalled                 IF ID
      beq $1, $4, target             ID EX MEM WB

Data Hazards for Branches
- If a comparison register is a destination of immediately preceding load instruction
  - Need 2 stall cycles

      lw  $1, addr          IF ID EX MEM WB
      beq stalled              IF ID
      beq stalled                 ID
      beq $1, $0, target             ID EX MEM WB

Dynamic Branch Prediction
- In deeper and superscalar pipelines, branch penalty is more significant
- Use dynamic prediction
  - Branch prediction buffer (aka branch history table)
  - Indexed by recent branch instruction addresses
  - Stores outcome (taken/not taken)
  - To execute a branch
    - Check table, expect the same outcome
    - Start fetching from fall-through or target
    - If wrong, flush pipeline and flip prediction

1-Bit Predictor: Shortcoming
- Inner loop branches mispredicted twice!

      outer: …
             …
      inner: …
             …
             beq …, …, inner
             …
             beq …, …, outer

  - Mispredict as taken on last iteration of inner loop
  - Then mispredict as not taken on first iteration of inner loop next time around

2-Bit Predictor (figure)
- Only change prediction on two successive mispredictions

Calculating the Branch Target
- Even with predictor, still need to calculate the target address
  - 1-cycle penalty for a taken branch
- Branch target buffer
  - Cache of target addresses
  - Indexed by PC when instruction fetched
    - If hit and instruction is branch predicted taken, can fetch target immediately

§4.9 Exceptions

Exceptions and Interrupts
- "Unexpected" events requiring change in flow of control
  - Different ISAs use the terms differently
- Exception
  - Arises within the CPU
    - e.g., undefined opcode, overflow, syscall, …
- Interrupt
  - From an external I/O controller
- Dealing with them without sacrificing performance is hard

Handling Exceptions
- In MIPS, exceptions managed by a System Control Coprocessor (CP0)
- Save PC of offending (or interrupted) instruction
  - In MIPS: Exception Program Counter (EPC)
- Save indication of the problem
  - In MIPS: Cause register
  - We'll assume 1-bit
    - 0 for undefined opcode, 1 for overflow
- Jump to handler at 8000 0180

An Alternate Mechanism
- Vectored interrupts
  - Handler address determined by the cause
- Example:
  - Undefined opcode: C000 0000
  - Overflow: C000 0020
  - …: C000 0040
- Instructions either
  - Deal with the interrupt, or
  - Jump to real handler

Handler Actions
- Read cause, and transfer to relevant handler
- Determine action required
- If restartable
  - Take corrective action
  - Use EPC to return to program
- Otherwise
  - Terminate program
  - Report error using EPC, cause, …

Exceptions in a Pipeline
- Another form of control hazard
- Consider overflow on add in EX stage

      add $1, $2, $1

  - Prevent $1 from being clobbered
  - Complete previous instructions
  - Flush add and subsequent instructions
  - Set Cause and EPC register values
  - Transfer control to handler
- Similar to mispredicted branch
  - Use much of the same hardware

Pipeline with Exceptions (figure)

Exception Properties
- Restartable exceptions
  - Pipeline can flush the instruction
  - Handler executes, then returns to the instruction
    - Refetched and executed from scratch
- PC saved in EPC register
  - Identifies causing instruction
  - Actually PC + 4 is saved
    - Handler must adjust

Exception Example
- Exception on add in

      40  sub $11, $2, $4
      44  and $12, $2, $5
      48  or  $13, $2, $6
      4C  add $1, $2, $1
      50  slt $15, $6, $7
      54  lw  $16, 50($7)
      …

- Handler

      80000180  sw $25, 1000($0)
      80000184  sw $26, 1004($0)
      …

Exception Example (two figures)

Multiple Exceptions
- Pipelining overlaps multiple instructions
  - Could have multiple exceptions at once
- Simple approach: deal with exception from earliest instruction
  - Flush subsequent instructions
  - "Precise" exceptions
- In complex pipelines
  - Multiple instructions issued per cycle
  - Out-of-order completion
  - Maintaining precise exceptions is difficult!
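The two exception-entry mechanisms described in this section, a single handler address and vectored dispatch, can be sketched as a state update on the PC, EPC, and Cause registers. This is a simplified model under stated assumptions: `CPUState` and `raise_exception` are hypothetical names, the vector spacing of 0x20 is inferred from the example addresses C000 0000, C000 0020, C000 0040, and the sketch stores the offending PC itself in EPC, whereas the slides note that real hardware saves PC + 4 and leaves the adjustment to the handler.

```python
from dataclasses import dataclass

HANDLER_ADDR = 0x8000_0180     # single entry point from the slides
VECTOR_BASE = 0xC000_0000      # vectored entry points from the slides
VECTOR_STRIDE = 0x20           # assumption: spacing inferred from the example addresses

UNDEFINED_OPCODE = 0           # 1-bit Cause encoding assumed on the slides
OVERFLOW = 1


@dataclass
class CPUState:
    pc: int
    epc: int = 0
    cause: int = 0


def raise_exception(cpu: CPUState, cause: int, vectored: bool = False) -> None:
    """Save the offending PC and the cause, then redirect the PC to a handler."""
    cpu.epc = cpu.pc           # simplification: real MIPS hardware saves PC + 4
    cpu.cause = cause
    if vectored:
        cpu.pc = VECTOR_BASE + cause * VECTOR_STRIDE
    else:
        cpu.pc = HANDLER_ADDR


if __name__ == "__main__":
    cpu = CPUState(pc=0x4C)                    # the overflowing add in the example
    raise_exception(cpu, OVERFLOW)
    print(hex(cpu.epc), cpu.cause, hex(cpu.pc))
```

With the single entry point, the handler must read Cause to decide what to do; with vectoring, the hardware has already made that choice by selecting the address.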
Imprecise Exceptions
- Just stop pipeline and save state
  - Including exception cause(s)
- Let the handler work out
  - Which instruction(s) had exceptions
  - Which to complete or flush
    - May require "manual" completion
- Simplifies hardware, but more complex handler software
- Not feasible for complex multiple-issue out-of-order pipelines

§4.10 Parallelism and Advanced Instruction Level Parallelism

Instruction-Level Parallelism (ILP)
- Pipelining: executing multiple instructions in parallel
- To increase ILP
  - Deeper pipeline
    - Less work per stage ⇒ shorter clock cycle
  - Multiple issue
    - Replicate pipeline stages ⇒ multiple pipelines
    - Start multiple instructions per clock cycle
    - CPI < 1, so use Instructions Per Cycle (IPC)
    - E.g., 4GHz 4-way multiple-issue: 16 BIPS, peak CPI = 0.25, peak IPC = 4
    - But dependencies reduce this in practice

Multiple Issue
- Static multiple issue
  - Compiler groups instructions to be issued together
  - Packages them into "issue slots"
  - Compiler detects and avoids hazards
- Dynamic multiple issue
  - CPU examines instruction stream and chooses instructions to issue each cycle
  - Compiler can help by reordering instructions
  - CPU resolves hazards using advanced techniques at runtime

Speculation
- "Guess" what to do with an instruction
  - Start operation as soon as possible
  - Check whether guess was right
    - If so, complete the operation
    - If not, roll back and do the right thing
- Common to static and dynamic multiple issue
- Examples
  - Speculate on branch outcome
    - Roll back if path taken is different
  - Speculate on load
    - Roll back if location is updated

Compiler/Hardware Speculation
- Compiler can reorder instructions
  - e.g., move load before branch
  - Can include "fix-up" instructions to recover from incorrect guess
- Hardware can look ahead for instructions to execute
  - Buffer results until it determines they are actually needed
  - Flush buffers on incorrect speculation
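The peak figures quoted for the 4GHz, 4-way multiple-issue example in the ILP slide follow from simple arithmetic. The sketch below only restates that arithmetic; `peak_throughput` is an illustrative name, and the result is an upper bound that assumes every issue slot is filled every cycle.

```python
from typing import Tuple


def peak_throughput(clock_hz: float, issue_width: int) -> Tuple[int, float, float]:
    """Return (peak IPC, peak CPI, peak billions of instructions per second)."""
    peak_ipc = issue_width                        # one instruction per issue slot per cycle
    peak_cpi = 1 / issue_width                    # CPI is the reciprocal of IPC
    peak_bips = clock_hz * issue_width / 1e9      # instructions per second, in billions
    return peak_ipc, peak_cpi, peak_bips


if __name__ == "__main__":
    ipc, cpi, bips = peak_throughput(4e9, 4)
    print(ipc, cpi, bips)   # 4 0.25 16.0
```

Real programs fall short of these numbers because dependencies and hazards leave issue slots empty.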
Speculation and Exceptions
- What if exception occurs on a speculatively executed instruction?
  - e.g., speculative load before null-pointer check
- Static speculation
  - Can add ISA support for deferring exceptions
- Dynamic speculation
  - Can buffer exceptions until instruction completion (which may not occur)

Static Multiple Issue
- Compiler groups instructions into "issue packets"
  - Group of instructions that can be issued on a single cycle
  - Determined by pipeline resources required
- Think of an issue packet as a very long instruction
  - Specifies multiple concurrent operations
  - ⇒ Very Long Instruction Word (VLIW)

Scheduling Static Multiple Issue
- Compiler must remove some/all hazards
  - Reorder instructions into issue packets
  - No dependencies within a packet
  - Possibly some dependencies between packets
    - Varies between ISAs; compiler must know!
  - Pad with nop if necessary

MIPS with Static Dual Issue
- Two-issue packets
  - One ALU/branch instruction
  - One load/store instruction
  - 64-bit aligned
    - ALU/branch, then load/store
    - Pad an unused instruction with nop

| Address | Instruction type | Cycle 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| n | ALU/branch | IF | ID | EX | MEM | WB | | |
| n + 4 | Load/store | IF | ID | EX | MEM | WB | | |
| n + 8 | ALU/branch | | IF | ID | EX | MEM | WB | |
| n + 12 | Load/store | | IF | ID | EX | MEM | WB | |
| n + 16 | ALU/branch | | | IF | ID | EX | MEM | WB |
| n + 20 | Load/store | | | IF | ID | EX | MEM | WB |

MIPS with Static Dual Issue (figure)

Hazards in the Dual-Issue MIPS
- More instructions executing in parallel
- EX data hazard
  - Forwarding avoided stalls with single-issue
  - Now can't use ALU result in load/store in same packet

        add $t0, $s0, $s1
        lw  $s2, 0($t0)

    - Split into two packets, effectively a stall
- Load-use hazard
  - Still one cycle use latency, but now two instructions
- More aggressive scheduling required

Scheduling Example
- Schedule this for dual-issue MIPS

      Loop: lw   $t0, 0($s1)       # $t0 = array element
            addu $t0, $t0, $s2     # add scalar in $s2
            sw   $t0, 0($s1)       # store result
            addi $s1, $s1, -4      # decrement pointer
            bne  $s1, $zero, Loop  # branch $s1 != 0

| | ALU/branch | Load/store | cycle |
|---|---|---|---|
| Loop: | nop | lw $t0, 0($s1) | 1 |
| | addi $s1, $s1, -4 | nop | 2 |
| | addu $t0, $t0, $s2 | nop | 3 |
| | bne $s1, $zero, Loop | sw $t0, 4($s1) | 4 |

- IPC = 5/4 = 1.25 (c.f. peak IPC = 2)

Loop Unrolling
- Replicate loop body to expose more parallelism
  - Reduces loop-control overhead
- Use different registers per replication
  - Called "register renaming"
  - Avoid loop-carried "anti-dependencies"
    - Store followed by a load of the same register
    - Aka "name dependence"
      - Reuse of a register name

Loop Unrolling Example

| | ALU/branch | Load/store | cycle |
|---|---|---|---|
| Loop: | addi $s1, $s1, -16 | lw $t0, 0($s1) | 1 |
| | nop | lw $t1, 12($s1) | 2 |
| | addu $t0, $t0, $s2 | lw $t2, 8($s1) | 3 |
| | addu $t1, $t1, $s2 | lw $t3, 4($s1) | 4 |
| | addu $t2, $t2, $s2 | sw $t0, 16($s1) | 5 |
| | addu $t3, $t3, $s2 | sw $t1, 12($s1) | 6 |
| | nop | sw $t2, 8($s1) | 7 |
| | bne $s1, $zero, Loop | sw $t3, 4($s1) | 8 |

- IPC = 14/8 = 1.75
  - Closer to 2, but at cost of registers and code size

Dynamic Multiple Issue
- "Superscalar" processors
- CPU decides whether to issue 0, 1, 2, … each cycle
  - Avoiding structural and data hazards
- Avoids the need for compiler scheduling
  - Though it may still help
  - Code semantics ensured by the CPU

Dynamic Pipeline Scheduling
- Allow the CPU to execute instructions out of order to avoid stalls
  - But commit result to registers in order
- Example

      lw   $t0, 20($s2)
      addu $t1, $t0, $t2
      sub  $s4, $s4, $t3
      slti $t5, $s4, 20

  - Can start sub while addu is waiting for lw

Dynamically Scheduled CPU (figure)
- In-order issue preserves dependencies
- Reservation stations hold pending operands
- Results also sent to any waiting reservation stations
- Reorder buffer for register writes
  - Can supply operands for issued instructions

Register Renaming
- Reservation stations and reorder buffer effectively provide register renaming
- On instruction issue to reservation station
  - If operand is available in register file or reorder buffer
    - Copied to reservation station
    - No longer required in the register; can be overwritten
  - If operand is not yet available
    - It will be provided to the reservation station by a function unit
    - Register update may not be required

Speculation
- Predict branch and continue issuing
  - Don't commit until branch outcome determined
- Load speculation
  - Avoid load and cache miss delay
    - Predict the effective address
    - Predict loaded value
    - Load before completing outstanding stores
    - Bypass stored values to load unit
  - Don't commit load until speculation cleared

Why Do Dynamic Scheduling?
- Why not just let the compiler schedule code?
- Not all stalls are predictable
  - e.g., cache misses
- Can't always schedule around branches
  - Branch outcome is dynamically determined
- Different implementations of an ISA have different latencies and hazards

Does Multiple Issue Work? (The BIG Picture)
- Yes, but not as much as we'd like
- Programs have real dependencies that limit ILP
- Some dependencies are hard to eliminate
  - e.g., pointer aliasing
- Some parallelism is hard to expose
  - Limited window size during instruction issue
- Memory delays and limited bandwidth
  - Hard to keep pipelines full
- Speculation can help if done well

Power Efficiency
- Complexity of dynamic scheduling and speculation requires power
- Multiple simpler cores may be better

| Microprocessor | Year | Clock Rate | Pipeline Stages | Issue Width | Out-of-order/Speculation | Cores | Power |
|---|---|---|---|---|---|---|---|
| i486 | 1989 | 25MHz | 5 | 1 | No | 1 | 5W |
| Pentium | 1993 | 66MHz | 5 | 2 | No | 1 | 10W |
| Pentium Pro | 1997 | 200MHz | 10 | 3 | Yes | 1 | 29W |
| P4 Willamette | 2001 | 2000MHz | 22 | 3 | Yes | 1 | 75W |
| P4 Prescott | 2004 | 3600MHz | 31 | 3 | Yes | 1 | 103W |
| Core | 2006 | 2930MHz | 14 | 4 | Yes | 2 | 75W |
| UltraSparc III | 2003 | 1950MHz | 14 | 4 | No | 1 | 90W |
| UltraSparc T1 | 2005 | 1200MHz | 6 | 1 | No | 8 | 70W |

§4.11 Real Stuff: The AMD Opteron X4 (Barcelona) Pipeline

The Opteron X4 Microarchitecture (figure)
- 72 physical registers

The Opteron X4 Pipeline Flow
- For integer operations
  - FP is 5 stages longer
  - Up to 106 RISC-ops in progress
- Bottlenecks
  - Complex instructions with long dependencies
  - Branch mispredictions
  - Memory access delays

§4.13 Fallacies and Pitfalls

Fallacies
- Pipelining is easy (!)
  - The basic idea is easy
  - The devil is in the details
    - e.g., detecting data hazards
- Pipelining is independent of technology
  - So why haven't we always done pipelining?
  - More transistors make more advanced techniques feasible
  - Pipeline-related ISA design needs to take account of technology trends
    - e.g., predicated instructions

Pitfalls
- Poor ISA design can make pipelining harder
  - e.g., complex instruction sets (VAX, IA-32)
    - Significant overhead to make pipelining work
    - IA-32 micro-op approach
  - e.g., complex addressing modes
    - Register update side effects, memory indirection
  - e.g., delayed branches
    - Advanced pipelines have long delay slots

§4.14 Concluding Remarks

Concluding Remarks
- ISA influences design of datapath and control
- Datapath and control influence design of ISA
- Pipelining improves instruction throughput using parallelism
  - More instructions completed per second
  - Latency for each instruction not reduced
- Hazards: structural, data, control
- Multiple issue and dynamic scheduling (ILP)
  - Dependencies limit achievable parallelism
  - Complexity leads to the power wall