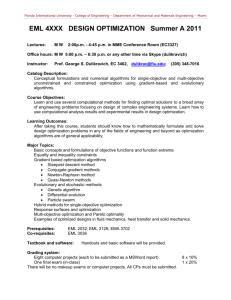

Agents: Definition, Classification and Structure

advertisement

Multi-Objective Non-linear

Optimization via

Parameterization and Inverse

Function Approximation

University of Regina

Industrial Systems Engineering

M.A.Sc. Thesis Defense

May 23, 2003

Mariano Arriaga Marín

1

Thesis Contributions

Novel technique for attaining the global

solution of nonlinear optimization

problems.

Novel technique for multi-objective

nonlinear optimization (MONLO).

Artificial Neural Networks (ANN)

Implementation

Methods tested in:

– Highly nonlinear optimization problems,

– MONLO problems, and

– Practical scheduling problem.

2

Current Global Optimization

Techniques

Common Techniques

Multistart

Clustering

Method

Genetic Algorithms

Simulated Annealing

Tabu Search

3

Multiple Objective Optimization

Current MONLO procedure:

– Divide the problem in two parts

1. Multi-Objective Single Objective

Problem

2. Solve with a Nonlinear Optimization

Technique

4

Multi-Objective Single Objective

Common Techniques

–

–

–

–

–

Weighting Method

E-Constraint

Interactive Surrogate Worth Trade-Off Solution

Lexicographic Ordering

Goal Programming

Problems

– Include extra parameters which might be

difficult to determine their value.

– Determining their value gets more difficult as

the number of objective functions increases

5

Proposed Optimization Algorithm

Min

F(x) = {f1(x),…,fm(x)}

– where x n

fi(x) ; i = 1,…,m

Optimization of:

– Non-Linear functions

– Multi-objective

– Avoid local minima and inflection

points

6

General Idea

Set an initial value for x and calculate f(x)

Decrease the value of the function via a

parameter

Calculate corresponding f -1(x)

– Note: The algorithm does not necessarily follow the

function

x0

7

General Idea

When the algorithm reaches a local minima

– it looks for a lower value

– if this value exists, the algorithm “jumps” to it and

continues the process

This process continues until the algorithm

reaches the global minimum.

x0

xf

8

Inverse Function Approximation

Inverse Function Approximation

Continuous functions

Full Theoretical Justification1

.

x(r ) J ( x(r ) J ( x(r )) J ( x(r ))

T

T

1 1

.

y J ( x(r )) v v

Mayorga R.V. and Carrera J., (2002), “A Radial Basis Function Network Approach for the Computation of Inverse Time

Variant Functions”, IASTED Int. Conf. on Artificial Intelligence & Soft Computing, Banff, Canada. (To appear in the

9

International Journal of Neural Systems).

1

Global Optimization Example

Consider the function:

f ( x) 3 (1 x1 ) e

2

( x12 ( x2 1) 2 )

(2x1 10x 10x )e

3

1

5

2

( x12 x22 )

1 ( ( x1 1) 2 x22 )

e

10

3

10

Initial Model – Part 1

Initial point in “front”

side of curve (1)

Gets out of two local

minima (2 & 3)

Converges to the

global minimum (4)

v=0 and Z-1=0

11

Initial Model – Part 2

Initial point in “back”

side of curve

Gets stuck in an

inflection point

Does not get to the

global minimum

v=0 and Z-1=0

12

Model with vector v and Z-1

Initial point in

“back” side of curve

Goes around the

curve (null space

vector)

Converges to the

global minimum

13

Artificial Neural Networks Model

Initial point in “back”

side of curve

Calculate J(x) and v

with ANNs

Follows almost the

same trajectory as

previous model

Converges to the

global minimum

14

The Griewank Function - Example

Consider the function:

1

x1 1002 x2 1002

f ( x1 , x2 )

4000

x 100

cosx1 100 cos 2

1

2

15

Griewank Function Optimization

Initial Model Z-1=0 & v=0

Model with Z-1 & v

Model Using

ANN

16

Multi-Objective Nonlinear Example

2

I-Beam Design Problem2

– Determine best tradeoff dimensions

Minimize conflicting

objectives

– Cross-sectional Area

– Static Deflection

Osyczka, A., (1984), Multicriterion Optimization in Engineering with FORTRAN programs. Ellis Horwood Limited.

17

What if both objectives are solved

separately?

↓

Cross-Sectional Area

↑ Static Deflection

↓ Static Deflection

↑ Cross-Sectional Area

18

I-Beam Results

―

Feasible Solutions

― Strong Pareto

Solutions

― Weak Pareto

Solutions

19

I-Beam Results

Result

– Proposed approach achieves very similar results to

state-of-the-art Genetic Algorithms (GA)

– Gives a diverse set of strong Pareto solutions

– The result of the ANN implementations varies by 0.88%

Computational Time3

– If compared to a standard floating point GA4, the

computational time decreases in 83%

– From 15.2 sec to 2.56 sec

3 Experiments performed in a Sun Ultra 4 Digital Computer. GA: 100 individuals and 50 generations.

4 Passino, K., (1998), Genetic Algorithms Code, September 21st,

http://eewww.eng.ohio-state.edu/~passino/ICbook/ic_code.html (accessed February 2003).

20

Multi-Objective Optimization:

Just-In-Time Scheduling Problem

Consider 5 products manufactured in 2 production

lines

Minimize:

– Cost

– Line Unbalance

– Plant Unbalance

Variables:

– Production Rate

– Level Loading

– Production Time

Production Constraints

21

Scheduling Problem – Optimization

Results

Minimize:

– Cost

22

Multi-Objective Optimization

Example

Minimize:

– Cost

– Line unbalance

Production

Rate

variance / line

23

Multi-Objective Optimization

Example

Minimize:

– Cost

– Line unbalance

Production

Rate

variance / line

– Plant unbalance

Distribute

production

in both production

lines

24

Conclusion

Novel global optimization method

It avoids local minima and inflection

points

The algorithm leads to convexities

via a null space vector v

It can also be used for constraint

nonlinear optimization

25

Conclusion (cont.)

Novel MONLO deterministic method

Starts from a single point instead of a

population

Computational Time

–

For the I-Beam example, the computational

time is 83% less than Genetic Algorithms

–

The implementation of ANN reduces the

number of calculations to compute the

Inverse Function

–

For the scheduling example, the ANN

implementation reduces computational time

by 70%

26

Publications

3rd ANIROB/IEEE-RAS International

Symposium of Robotics and Automation,

Toluca, Mexico. Sept 1-4, 2002

Three journal papers already submitted:

– Journal of Engineering Applications of Artificial

Intelligence

– International Journal of Neural Systems

– Journal of Intelligent Manufacturing

One paper to be published as a Chapter in

a book on Intelligent Systems.

Editor: Dr. Alexander M. Meystel

27