Assessing Assessment on the Program Level

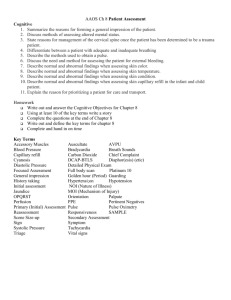

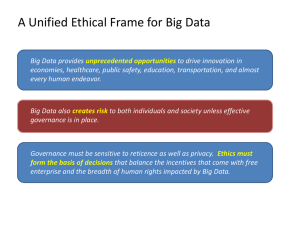

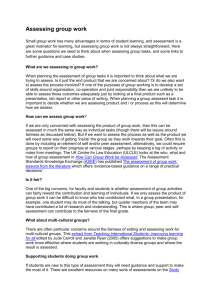

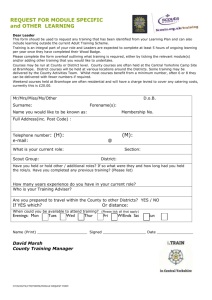

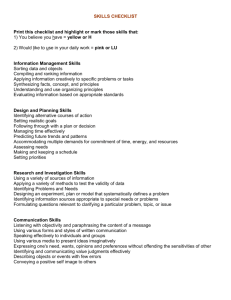

Assessment 201 - Beyond the Basics
Assessing Program Level Assessment Plans
University of North Carolina Wilmington
Susan Hatfield, Winona State University
Shatfield@winona.edu

Outline
• Language of Assessment
• Assessing Assessment Plans
• Mistakes to Avoid

Language of Assessment
• A. General skill or knowledge category: GOAL
• B. Specific accomplishments to be achieved: OUTCOME
• C. Activities and assignments to help students learn: LEARNING EVENTS
• D. The key elements related to the accomplishment of the outcome: COMPONENTS
• E. The objects of analysis: OBJECTS
• F. Data indicating degree of achievement: CHARACTERISTICS
• G. Combination of data indicating relative degree of achievement of the learning outcome: INDICATORS

Goals
• Organizing principle
• Category or topic area
• Subjects

Learning Outcomes
[Diagram: Student Learning Outcomes. One Goal branches into five Outcomes.]

Learning Events
• Assignments (in class and out of class)
• Feedback on practice
• Self evaluation
• Peer evaluation
• Role play
• Pre tests
• Simulation

Learning Objects
[Diagram: Goal, five Outcomes, learning events leading to an Object.]

Components
[Diagram: Goal, five Outcomes, an Object broken into three components (evaluative elements).]

Performance Characteristics
[Diagram: Goal, five Outcomes, an Object with three components, each judged by Characteristics (evaluative criteria).]

Indicators
[Diagram: Goal, five Outcomes, an Object with four components feeding an indicator: the degree to which the outcome is achieved.]

Assessing Program Level Assessment Plans

Overall Questions
• Why are we doing assessment?
• Who is responsible for assessment in your program?

Assessing Program Level Assessment Plans
• Student Learning Outcomes
• Assessment Methods
• Implementation Strategy
• Data Interpretation

Student Learning Outcomes

Assessing Student Learning Outcomes
• Has the program identified program level student learning outcomes?

Assessing Student Learning Outcomes
• Reasonable number?
• Tied to university mission / goals?
• Appropriate format?
• Do faculty and students understand what the outcomes mean? (components)
• Is there a common language for describing student performance? (characteristics)

Student Learning Outcomes
• Supported by core courses in the curriculum?
• Are the student level learning outcomes developed throughout the curriculum?

Assessing Student Learning Outcomes: Reasonable number?
[Diagram: goals (Professional Skills, Problem Solving, Communication, Research Methods) and outcomes (speak, write, cooperate, relate).]

Assessing Student Learning Outcomes: Tied to university mission / goals?
UNC-W Mission
• Intellectual curiosity
• Imagination
• Critical thinking
• Thoughtful expression
• Diversity
• International perspectives
• Service

Assessing Student Learning Outcomes: Appropriate format?
Student Learning Outcomes
Students should be able to <<action verb>> <<something>>
• Cite, Count, Define, Draw, Identify, List, Name, Point, Quote, Read, Recite, Record, Repeat, Select, State, Tabulate, Tell, Trace, Underline
• Associate, Classify, Compare, Compute, Contrast, Differentiate, Discuss, Distinguish, Estimate, Explain, Express, Extrapolate, Interpolate, Locate, Predict, Report, Restate, Review, Tell, Translate
• Apply, Calculate, Classify, Demonstrate, Determine, Dramatize, Employ, Examine, Illustrate, Interpret, Locate, Operate, Order, Practice, Report, Restructure, Schedule, Sketch, Solve, Translate, Use, Write
• Analyze, Appraise, Calculate, Categorize, Classify, Compare, Debate, Diagram, Differentiate, Distinguish, Examine, Experiment, Identify, Inspect, Inventory, Question, Separate, Summarize, Test
• Arrange, Assemble, Collect, Compose, Construct, Create, Design, Formulate, Integrate, Manage, Organize, Plan, Prepare, Prescribe, Produce, Propose, Specify, Synthesize, Write
• Appraise, Assess, Choose, Compare, Criticize, Determine, Estimate, Evaluate, Grade, Judge, Measure, Rank, Rate, Recommend, Revise, Score, Select, Standardize, Test, Validate

Student Learning Outcomes
• Learner centered
• Specific
• Action oriented
• Cognitively appropriate for the program level

Student Learning Outcomes
• Students should be able to critically comprehend, interpret, and evaluate written, visual, and aural material.
• Students will recognize, analyze, and interpret human experience in terms of personal, intellectual, and social contexts.
• Students will demonstrate analytical, creative, and evaluative thinking in the analysis of theoretical or practical issues.

Student Learning Outcomes: Cognitively appropriate for the program level

Lower division course outcomes
• KNOWLEDGE: Cite, Count, Define, Draw, Identify, List, Name, Point, Quote, Read, Recite, Record, Repeat, Select, State, Tabulate, Tell, Trace, Underline
• COMPREHENSION: Associate, Classify, Compare, Compute, Contrast, Differentiate, Discuss, Distinguish, Estimate, Explain, Express, Extrapolate, Interpolate, Locate, Predict, Report, Restate, Review, Tell, Translate
• APPLICATION: Apply, Calculate, Classify, Demonstrate, Determine, Dramatize, Employ, Examine, Illustrate, Interpret, Locate, Operate, Order, Practice, Report, Restructure, Schedule, Sketch, Solve, Translate, Use, Write

Upper division course / program outcomes
• ANALYSIS: Analyze, Appraise, Calculate, Categorize, Classify, Compare, Debate, Diagram, Differentiate, Distinguish, Examine, Experiment, Identify, Inspect, Inventory, Question, Separate, Summarize, Test
• SYNTHESIS: Arrange, Assemble, Collect, Compose, Construct, Create, Design, Formulate, Integrate, Manage, Organize, Plan, Prepare, Prescribe, Produce, Propose, Specify, Synthesize, Write
• EVALUATION: Appraise, Assess, Choose, Compare, Criticize, Determine, Estimate, Evaluate, Grade, Judge, Measure, Rank, Rate, Recommend, Revise, Score, Select, Standardize, Test, Validate

Assessing Student Learning Outcomes: Do faculty and students understand what the outcomes mean? (components)

Components
• Define student learning outcomes
• Provide a common language for describing student learning
• Must be outcome specific
• Must be shared across faculty
• Number of components will vary by outcome

[Diagram: Communication with five outcomes (Write, Relate, Speak, Listen, Participate), each broken into its own components.]

Components
[Diagram: Communication with outcomes Write, Relate, Speak, Listen, Participate; a Lab report as the object, with components mechanics, style, organization.]

The Reality of Assessing Student Learning Outcomes
Why you need common components
[Diagram: five courses each assess Speaking on different elements: eye contact, style, appearance, gestures, rate, evidence, volume, poise, organization, sources, transitions, examples, verbal variety, organization, attention getter.]

Can our students deliver an effective Public Speech?
• eye contact, style, appearance, gestures, rate, evidence, volume, poise, conclusion, sources, transitions, examples, verbal variety, organization, attention getter

A little quiz

Example #1
Gather factual information and apply it to a given problem in a manner that is relevant, clear, comprehensive, and conscious of possible bias in the information selected
BETTER: Students will be able to apply factual information to a problem
COMPONENTS:
• Relevance
• Clarity
• Comprehensiveness
• Aware of bias

Example #2
Imagine and seek out a variety of possible goals, assumptions, interpretations, or perspectives which can give alternative meanings or solutions to given situations or problems
BETTER: Students will be able to provide alternative solutions to situations or problems
COMPONENTS:
• Variety of assumptions, perspectives, interpretations
• Analysis of comparative advantage

Example #3
Formulate and test hypotheses by performing laboratory, simulation, or field experiments in at least two of the natural science disciplines (one of these experimental components should develop, in greater depth, students’ laboratory experience in the collection of data, its statistical and graphical analysis, and an appreciation of its sources of error and uncertainty)
BETTER: Students will be able to test hypotheses.
COMPONENTS:
• Data collection
• Statistical analysis
• Graphical analysis
• Identification of sources of error

Assessing Student Learning Outcomes: Is there a common language for describing student performance? (characteristics)

Performance Characteristics
• Scale or description for assessing each of the components
• Two- to five-point scales for each component
• Anchored with descriptions and supported by examples

Performance Rubric
Goal: Communication
Outcome: Speak in public situations
Components: Verbal Delivery, Nonverbal Delivery, Structure, Evidence
Performance characteristics: Does not meet expectations, Meets expectations, Exceeds expectations

Performance Characteristics
Level or degree:
• Accurate, Correct
• Depth, Detail
• Coherence, Flow
• Complete, Thorough
• Integration
• Creative, Inventive
• Evidence based, supported
• Engaging, enhancing

Performance Characteristics
Description Anchors
• Missing - Included
• Inappropriate - Appropriate
• Incomplete - Complete
• Incorrect - Partially Correct - Correct
• Vague - Emergent - Clear
• Marginal - Acceptable - Exemplary
• Distracting - Neutral - Enhancing
• Usual - Unexpected - Imaginative
• Ordinary - Interesting - Challenging
• Simple - More fully developed - Complex
• Reports - Interprets - Analyzes
• Basic - Expected - Advanced
• Few - Some - Several - Many
• Isolated - Related - Connected - Integrated
• Less than satisfactory - Satisfactory - More than satisfactory - Outstanding
• Never - Infrequently - Usually - Always

Communication: Speak in public situations
• Verbal Delivery: Several - Some - Few fluency problems
• Nonverbal Delivery: Distracting - Enhancing
• Structure: Disconnected - Connected - Integrated
• Evidence: Doesn’t support - Sometimes - Always supports

Rubric Resource
www.winona.edu/air/rubrics.htm

Assessing Student Learning Outcomes
• Outcomes supported by core courses in the curriculum?
• Are the student level learning outcomes developed throughout the curriculum?

[Figure: curriculum map of student learning outcomes against Courses 1 to 5, with an X marking each course that addresses an outcome.]

Assessing Student Learning Outcomes
• Supported by core courses in the curriculum?
  • Orphan outcomes?
  • Empty requirements?

[Figures: two curriculum maps of student learning outcomes against Courses 1 to 5, marked with X, illustrating orphan outcomes and empty requirements.]

Student Learning Outcomes
• Supported by core courses in the curriculum?
  • Orphan outcomes?
  • Empty requirements?
• Developed through the curriculum?
  • K: Knowledge / Comprehension
  • A: Application / Analysis
  • S: Synthesis / Evaluation

[Figure: curriculum map of student learning outcomes against Courses 1 to 4 and Cluster 1, with each cell marked K, A, or S.]

Assessment Methodology

Assessing Assessment Methodology
• Does the assessment plan measure student learning, or program effectiveness?
• Does the plan rely on direct measures of student learning?
• Do the learning objects match the outcomes?

Assessing Assessment Methodology
• Is there a systematic approach to implementing the plan?

Assessing Assessment Methodology: Does the assessment plan measure student learning, or program effectiveness?

Assessment of Program Effectiveness
• What the program will do or achieve
  • Curriculum
  • Retention
  • Graduation
  • Placement
  • Satisfaction (graduate and employer)

Assessment of Student Learning Outcomes
• What students will do or achieve
• Examines actual student work

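The curriculum-map questions above (orphan outcomes, empty requirements, and whether an outcome is developed from K through A to S) can be checked mechanically once the map is written down as data. The following is a minimal sketch, not part of the original deck: the dictionary layout, the function names, the sample map, and the rule that levels should never drop and should reach S are all assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical encoding of a curriculum map: outcome -> {course: level}.
# Levels follow the slide key: K = Knowledge / Comprehension,
# A = Application / Analysis, S = Synthesis / Evaluation.
CurriculumMap = Dict[str, Dict[str, str]]
LEVEL_RANK = {"K": 0, "A": 1, "S": 2}


def orphan_outcomes(cmap: CurriculumMap) -> List[str]:
    """Outcomes that no course supports."""
    return [outcome for outcome, courses in cmap.items() if not courses]


def empty_requirements(cmap: CurriculumMap, required: List[str]) -> List[str]:
    """Required courses that support no outcome at all."""
    used = {course for courses in cmap.values() for course in courses}
    return [course for course in required if course not in used]


def is_developed(cmap: CurriculumMap, required: List[str], outcome: str) -> bool:
    """True if levels never drop across the course sequence and reach S.

    This rule is an assumption chosen for illustration, not a standard.
    """
    levels = cmap[outcome]
    ranks = [LEVEL_RANK[levels[c]] for c in required if c in levels]
    return bool(ranks) and ranks == sorted(ranks) and ranks[-1] == LEVEL_RANK["S"]


# Invented sample data, loosely modeled on the Communication outcomes.
REQUIRED = ["Course 1", "Course 2", "Course 3", "Course 4", "Course 5"]
SAMPLE_MAP: CurriculumMap = {
    "Write": {"Course 1": "K", "Course 3": "A", "Course 5": "S"},
    "Speak": {"Course 2": "K", "Course 3": "K"},
    "Listen": {},
}

if __name__ == "__main__":
    print("Orphan outcomes:", orphan_outcomes(SAMPLE_MAP))
    print("Empty requirements:", empty_requirements(SAMPLE_MAP, REQUIRED))
    for name in SAMPLE_MAP:
        print(name, "developed:", is_developed(SAMPLE_MAP, REQUIRED, name))
```

In this invented map, "Listen" is an orphan outcome, "Course 4" is an empty requirement, and "Speak" is supported but never moves beyond the K level.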
Assessing Assessment Methodology: Does the plan rely on direct measures of student learning?

Direct Measures of Student Learning
• Capstone experiences
• Standardized tests
• Performance on national licensure, certification, or professional exams
• Locally developed tests
• Essay questions blind scored by faculty
• Juried review of senior projects
• Externally reviewed exhibitions and performances
• Evaluation of internships based upon program learning outcomes

Indirect Measures of Learning
• Alumni, employer, and student surveys (including satisfaction surveys)
• Exit interviews of graduates and focus groups
• Graduate follow-up studies
• Retention and transfer studies
• Length of time to degree
• ACT scores
• Graduation and transfer rates
• Job placement rates

Non-Measures of Student Learning
• Curriculum review reports
• Program review reports from external evaluators
• Faculty publications and recognition
• Course enrollments and course profiles
• Faculty / student ratios, percentage of students who study abroad
• Enrollment trends
• 5-year graduation rates
• Diversity of the student body

Assessing Assessment Methodology: Do the learning objects match the outcomes?

Learning Objects
• Standardized exam, abstract, advertisement, annotated bibliography, biography, briefing, brochure, budget, care plan, case analysis, chart, cognitive map, court brief, debate, definition, description, diagram, dialogue, diary, essay, executive summary, exam, flow chart, group discussion, instruction manual, inventory, lab notes, letter to the editor, matching test, mathematical problem, memo, micro theme, multiple choice test, narrative, news story, notes, oral report, outline, performance review, plan, precis, presentation, process analysis, proposal, regulation, research proposal, review of literature, taxonomy, technical report, term paper, thesis, word problem, work of art (Walvoord & Anderson, 1998).

Matching Outcomes to Objects
• Logical decision
• Demonstrate components?
• Individual vs. group assignment?
• Amount of feedback provided in preparation of the assignment?
• Timing / program / semester

Assessing Assessment Methodology
• Is there a systematic approach to implementing the plan?
  • Not every outcome in every course by every faculty every semester
  • Identified assessment points?

[Figure: curriculum map of student learning outcomes against Courses 1 to 5, with each cell marked K, A, or S.]

Implementation Strategy

Assessing Implementation Strategy
• Is the assessment plan being phased in?
• Is there a systematic method for assessing student learning objects?
• Is there a method for collecting and organizing data?

Assessing Implementation Strategy: Is the assessment plan being phased in?
[Figure: the K / A / S curriculum map for Courses 1 to 5, labeled Phase 4.]

Assessment Methodology
• Is there a systematic method for assessing student learning objects?

Scoring Option 1
• Course instructor
  • Collects, assesses, and provides aggregate data
• Assessment committee (program)
  • Consolidates and reports data to internal and external constituencies
• Community
  • Discusses data, implications, priorities and (if necessary) plans for improvement

Scoring Option 2
• Course instructor
  • Collects assignment
• Assessment committee (program)
  • Samples, evaluates, analyzes and reports data to internal and external constituencies
• Community
  • Discusses data, implications, priorities and (if necessary) plans for improvement

Assessing Implementation Strategy
• Is there a method for collecting and organizing data?

Reporting Methods
• Tear off page
• Carbonless copy paper
• Reporting templates
• Paper
• WWW

Communicate Effectively: Demonstrate Oral Communication Skills (Course 5)
Components: Verbal Delivery, Nonverbal Delivery, Organization, Evidence, Transitions
Does not meet / Meets / Exceeds:
• 13% / 65% / 17%
• 21% / 72% / 7%
• 14% / 54% / 32%

Communicate Effectively: Demonstrate Oral Communication Skills (PROGRAM WIDE COMPETENCY REPORT)
Components: Verbal Delivery, Nonverbal Delivery, Organization, Evidence, Transitions
Does not meet / Meets / Exceeds:
• 20% / 65% / 15%
• 13% / 57% / 30%
• 24% / 58% / 18%

Data Interpretation

Interpreting Data
• The first data collection is not about the data per se, but about testing the methods and tools.

Interpreting Data
• Did the assessment method distinguish between levels of student performance?
• Sampling issues?
• Inter-rater reliability?
• Multiple measures?
• Were patterns in the data examined?

Interpreting Data
• Who was involved in the discussion of the data?
• What plans were made to address issues of concern?
• When will a result of the changes be measurable?
• How is the information communicated to constituent groups?

Interpreting Data: Did the assessment method distinguish between levels of student performance?

Communicate Effectively: Demonstrate Oral Communication Skills (Course 5)
Components: Verbal Delivery, Nonverbal Delivery, Organization, Evidence, Transitions
Does not meet / Meets / Exceeds:
• 1% / 3% / 96%
• 0 / 2% / 98%
• 3% / 2% / 95%

Interpreting Data: Sampling issues?

Interpreting Data: Inter-rater reliability?
• Training
• Calibration
• Decision rules
• Support materials

Interpreting Data: Multiple measures?
[Diagram: Multiple Measures, showing DP1, DP2, DP3.]

Interpreting Data: Were patterns in the data examined?

Data Patterns
• Consistency
• Consensus
• Distinctiveness

Consistency
• Examines the same practice of an individual or group over time
• Key question: Has this person or group acted, felt, or performed this way in the past / over time?
[Chart: How well are students performing on the outcome? Low to high performance, by year 00 to 05.]

Consensus
• Comparison to or among groups of students
  • Variation between disciplines, gender, other demographic variables
• Key questions:
  • What is the general feeling, outcome, attitude, behavior?
  • Do other groups of people act, perform or feel this way?
[Chart: How well are students performing on the outcome? Low to high performance, by group: Females, Males, Transfer, OTA.]

Distinctiveness
• Examines individual or cohort perspectives across different situations, categories
• Key question: Does a person or group respond differently based upon the situation, item, issue?
[Chart: How well are students performing on the outcome? Low to high performance, by component: Mechanics, Organization, Content.]

Interpreting Data: Who was involved in the discussion of the data?

Discussion Questions
Based upon your experience, does the data surprise you?

Discussion Questions
Does our students’ performance on the outcome meet our expectations?

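Two of the data-interpretation questions above lend themselves to a quick check: whether the ratings spread across performance levels, and how often two raters agree. The sketch below is illustrative only. The function names, the 90% ceiling used to flag a rubric that does not discriminate, and all sample ratings are assumptions, and simple percent agreement is used here as a stand-in for whatever inter-rater statistic a program actually adopts.

```python
from collections import Counter
from typing import Dict, Sequence

LEVELS = ("Does not meet", "Meets", "Exceeds")


def distribution(ratings: Sequence[str]) -> Dict[str, float]:
    """Percentage of ratings at each performance level for one component."""
    if not ratings:
        raise ValueError("no ratings supplied")
    counts = Counter(ratings)
    return {level: round(100 * counts[level] / len(ratings), 1) for level in LEVELS}


def discriminates(dist: Dict[str, float], ceiling: float = 90.0) -> bool:
    """False when nearly every rating lands in one level.

    The 90% ceiling is an arbitrary, assumed threshold.
    """
    return max(dist.values()) < ceiling


def percent_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Share of student works that two raters scored identically."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("raters must score the same non-empty set of works")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100 * matches / len(rater_a)


# Invented ratings for one component, for illustration only.
SPREAD = ["Does not meet"] * 3 + ["Meets"] * 13 + ["Exceeds"] * 4
TOP_HEAVY = ["Meets"] * 1 + ["Exceeds"] * 24

if __name__ == "__main__":
    for label, ratings in (("spread", SPREAD), ("top heavy", TOP_HEAVY)):
        dist = distribution(ratings)
        print(label, dist, "discriminates:", discriminates(dist))
    print(percent_agreement(
        ["Meets", "Exceeds", "Meets", "Does not meet"],
        ["Meets", "Meets", "Meets", "Does not meet"],
    ))
```

A distribution like the top-heavy one mirrors the report in which almost every student exceeds expectations: it says more about the scale or the scoring than about the students.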
Discussion Questions
How can this data be validated? What else can we do to find out if this is accurate?

Interpreting Data: What plans were made to address issues of concern?

Addressing Issues
• Learning Opportunities
  • New
  • Revised
• Feedback
• Curriculum
• Faculty Development
• Student Development

Interpreting Data: When will a result of the changes be measurable?

How Assessment Works
[Diagram: In Year 1, learning events 1 to 3 are assessed on the outcome's components to set a BASELINE. In Years 2 and 3, new or revised events 1 to 3 are assessed on the same components, and results are compared against benchmarks, standards, and past performance.]

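The year-over-year comparison in "How Assessment Works" can be sketched as a small calculation: take the share of students meeting or exceeding expectations on each component in the baseline year, then compare a later year against both the baseline and a benchmark. Everything specific here is hypothetical: the component figures, the 75% benchmark, and the function names are invented for illustration.

```python
from typing import Dict, List


def change_from_baseline(baseline: Dict[str, float],
                         current: Dict[str, float]) -> Dict[str, float]:
    """Percentage-point change per component measured in both years."""
    return {c: round(current[c] - baseline[c], 1) for c in baseline if c in current}


def below_benchmark(current: Dict[str, float], benchmark: float) -> List[str]:
    """Components whose current result falls short of the benchmark."""
    return sorted(c for c, value in current.items() if value < benchmark)


# Invented figures: percent of students meeting or exceeding expectations.
YEAR_1 = {"Verbal Delivery": 80.0, "Organization": 70.0, "Evidence": 76.0}
YEAR_2 = {"Verbal Delivery": 85.0, "Organization": 68.0, "Evidence": 87.0}
BENCHMARK = 75.0  # assumed program target

if __name__ == "__main__":
    print(change_from_baseline(YEAR_1, YEAR_2))
    print(below_benchmark(YEAR_2, BENCHMARK))
```

Keeping the components fixed from year to year is what makes the comparison meaningful; only the learning events change.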
Interpreting Data: How is the information communicated to constituent groups?

Communicating Assessment
• Poster sessions
• Brown bag sessions
• WWW sites
• Meetings
• Newsletters

Really Big Mistakes

Big Mistakes in Assessment
• Assuming that it will go away
• Allowing assessment planning to become gaseous
• Assuming you got it right -- or expecting to get it right -- the first time
• Not considering implementation issues when creating plans
• Borrowing plans and methods without acculturation
• Setting the bar too low
• Assuming that you’re done and everything’s OK or rushing to “Close the Loop”
• Doing it for accreditation instead of improvement
• Confusing program effectiveness with student learning
• Making assessment the responsibility of one individual
• Assuming collecting data is Doing Assessment