DIP - E

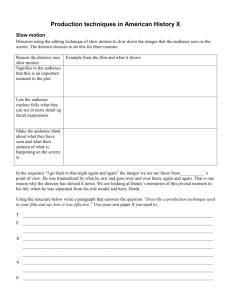

advertisement

UNIT I: DIGITAL IMAGE FUNDAMENTALS Elements of a digital image processing system – structure of the human eye – image formation and contrast sensitivity – sampling and quantization – neighbors of pixel – distance measure – photographic film structure and exposure – film characteristics – linear scanner – video camera – image processing applications. UNIT II: IMAGE TRANSFORMS Introduction to Fourier transform – DFT – properties of two-dimensional FT – separability, translation, periodicity, rotation, average value – FFT algorithm – Walsh transform – Hadamard transform – discrete cosine transform. UNIT III: IMAGE ENHANCEMENT Definition – spatial domain methods – frequency domain methods – histogram – modification techniques – neighborhood averaging – median filtering – low pass filtering – averaging of multiple images – image sharpening by differentiation and high pass filtering. UNIT IV: IMAGE ENCODING Objective and subjective fidelity criteria – basic encoding process – the mapping – the quantizer– the coder – differential – encoding – contour encoding – run length encoding image encoding – relative to fidelity criterion – differential pulse code modulation. UNIT V: IMAGE ANALYSIS AND COMPUTER VISION Typical computer vision system – image analysis techniques – spatial feature extraction – amplitude and histogram features - transforms features – edge detection – gradient operators – boundary extraction – edge linking – boundary representation – boundary matching – shape representation. TEXT BOOK 1. Rafael C. Gonzalez, Paul Wintz, “Digital Image Processing”, Addison-Westley Publishing Company, 1987 2. Rafael C. Gonzalez, Richard E Woods “Digital Image Processing”, Pearson, 2001 UNIT 1 Elements of a Digital Image Processing System This easy-to-follow textbook provides a modern, algorithmic introduction to digital image processing, designed to be used both by learners desiring a firm foundation on which to build, and practitioners in search of critical analysis and concrete implementations of the most important techniques. The text compiles the key elements of digital image processing, starting from the basic concepts and elementary properties of digital images through simple statistics and point operations, fundamental filtering techniques, localization of edges and contours, and basic operations on color images. Mastering these most commonly used techniques will enable the reader to start being productive straight away. The Anatomy of The Eye The anatomy and physiology of the human eye is an important part of many courses (e.g. in biology, human biology, physics, and practical courses in medicine, nursing, and therapies). This page is a very basic introduction the subjects of "The Eye" and "Visual Optics" more generally. It includes a simple diagram of the eye together with definitions of the parts of the eye labelled in the illustration. Term Definition / Description Aqueous Humour The aqueous humour is a jelly-like substance located in the anterior chamber of the eye. ... more about the Aqueous Humour The choroid layer is located behind the retina and absorbs unused radiation. ... more about the Choroid The ciliary muscle is a ring-shaped muscle attached to the iris. It is important because contraction and relaxation of the ciliary muscle controls the shape of the lens. ... more about the Ciliary Muscle The cornea is a strong clear bulge located at the front of the eye (where it replaces the sclera - that forms the outside surface of the rest of the eye). The front surface of the adult cornea has a radius of approximately 8mm. The cornea contributes to the image-forming process by refracting light entering the eye. ... more about the Cornea The fovea is a small depression (approx. 1.5 mm in diameter) in the retina. This is the part of the retina in which high-resolution vision of fine detail is possible. ... more about the Fovea The hyaloid diaphragm divides the aqueous humour from the vitreous humour. Choroid Ciliary Muscle Cornea Fovea Hyaloid ... more about the Hyaloid Membrane The iris is a diaphragm of variable size whose function is to adjust the size of the Iris pupil to regulate the amount of light admitted into the eye. The iris is the coloured part of the eye (illustrated in blue above but in nature may be any of many shades of blue, green, brown, hazel, or grey). ... more about the Iris The lens of the eye is a flexible unit that consists of layers of tissue enclosed in a Lens tough capsule. It is suspended from the ciliary muscles by the zonule fibers. ... more about the Lens The optic nerve is the second cranial nerve and is responsible for vision. Optic Each nerve contains approx. one million fibres transmitting information from the Nerve rod and cone cells of the retina. ... more about the Optic Nerve The papilla is also known as the "blind spot" and is located at the position from Papilla which the optic nerve leaves the retina. ... more about the Optic Papilla The pupil is the aperture through which light and hence the images we "see" and Pupil "perceive" - enters the eye. This is formed by the iris. As the size of the iris increases (or decreases) the size of the pupil decreases (or increases) correspondingly. ... more about the Pupil The retina may be described as the "screen" on which an image is formed by light Retina that has passed into the eye via the cornea, aqueous humour, pupil, lens, then the hyaloid and finally the vitreous humour before reaching the retina. The retina contains photosensitive elements (called rods and cones) that convert the light they detect into nerve impulses that are then sent onto the brain along the optic nerve. ... more about the Retina The sclera is a tough white sheath around the outside of the eye-ball. Sclera This is the part of the eye that is referred to by the colloquial terms "white of the eye". ... more about the Sclera Visual Axis A simple definition of the "visual axis" is "a straight line that passes through both the centre of the pupil and the centre of the fovea". However, there is also a stricter definition (in terms of nodal points) which is important for specialists in optics and related subjects. ... more about the Visual Axis Vitreous The vitreous humour (also known as the "vitreous body") is a jelly-like substance. Humour ... more about the Vitreous Humour Zonules The zonules (or "zonule fibers") attach the lens to the ciliary muscles. ... more about the Zonules To learn more about the eye and how it works it is useful to understand some simple but important aspects of ligh The ultimate stage in most optical imaging systems is the formation of an image on the retina, and the design of most optical systems takes this important fact into account. For example, the light output from optical systems is often limited (or should be limited) to the portion of the spectrum to which we are most sensitive (i.e., the visible spectrum). The light level of the final image is within a range that is not too dim or bright. Exit pupils of microscopes and binoculars are matched to typical pupil sizes, and images are often produced at a suitable magnification, so that they are easily resolved. We even incorporate focus adjustments that can adapt when the user is near- or farsighted. Of course, it is understandable that our man-made environment is designed to fit within our sensory and physical capabilities. But this process is not complete. There is still much to know about the optical system of the human eye, and as we increase our understanding of the eye, we learn better ways to present visual stimuli, and to design instruments for which we are the end users. This article focuses on the way images are formed in the eye and the factors in the optical system that influence Types of connectivity 2-dimensional 4-connected 4-Connected pixels are neighbors to every pixel that touches one of their edges. These pixels are connected horizontally and vertically. In terms of pixel coordinates, every pixel that has the coordinates or is connected to the pixel at . 6-connected 6-connected pixels are neighbors to every pixel that touches one of their corners (which includes pixels that touch one of their edges) in a hexagonal grid or stretcher bond rectangular grid. There are several ways to map hexagonal tiles to integer pixel coordinates. With one method, in addition to the 4-connected pixels, the two pixels at coordinates are connected to the pixel at and . 8-connected 8-connected pixels are neighbors to every pixel that touches one of their edges or corners. These pixels are connected horizontally, vertically, and diagonally. In addition to 4-Connected pixels, each pixel with coordinates or is connected to the pixel at . 3-dimensional 6-connected 6-connected pixels are neighbors to every pixel that touches one of their faces. These pixels are connected along one of the primary axes. Each pixel with coordinates , or is connected to the pixel at , . 18-connected 18-connected pixels are neighbors to every pixel that touches one of their faces or edges. These pixels are connected along either one or two of the primary axes. In addition to 6-Connected pixels, each pixel with coordinates , , , connected to the pixel at , , or is . 26-connected 26-connected pixels are neighbors to every pixel that touches one of their faces, edges, or corners. These pixels are connected along either one, two, or all three of the primary axes. In addition to 18-Connected pixels, each pixel with coordinates , , , , , , , or is connected to the pixel at Distance Measure Based on the digital image processing theory, a newmethod of measuring the leading vehicle distance wasproposed. The input image using the method of edgeenhancement and morphological transformation wasestablished, so the edges of objects were enhanced to identify.The target vehicle was identified and calibrated in the imageby using the method of the obstacle detection by segmentationand decision tree. The relationship between coordinates valuein image space and the data of the real space plane wasestablished by applying the ray angles. Thus, throughaccessing to image pixel coordinates of the vehicle, the vehicle'sactual position in the plane can be calculated. At last, theleading vehicle distance based on the calculating model ofinverse perspective mapping was measured. By using softwareVC++, an experiment program was made. The experimentresults prove that the method of measuring the leading vehicledistance is simple and effective. It can meet the requirement ofintelligent vehicle technologies. It is an more available andmore advanced method to calculate the leading vehicledistance. Keywords-Active Safety; Leading Vehicle Distance; DigitalImage Processing; Obstacle Detection; Monocular Ranging Medical images are recorded either in digital format on some form of digital media or on photographic film. Here we consider the process of recording on film. The active component of film is an emulsion of radiation-sensitive crystals coated onto a transparent base material. The production of an image requires two steps, as illustrated below. First, the film is exposed to radiation, typically light, which activates the emulsion material but produces no visible change. The exposure creates a so-called latent image. Second, the exposed film is processed in a series of chemical solutions that convert the invisible latent image into an image that is visible as different optical densities or shades of gray. The darkness or density of the film increases as the exposure is increased. This general relationship is shown in the second following figure. The Two Steps in the Formation of a Film Image The General Relationship between Film Density (Shades of Gray) and Exposure The specific relationship between the shades of gray or density and exposure depends on the characteristics of the film emulsion and the processing conditions. The basic principles of the photographic process and the factors that affect the sensitivity of film are covered in this chapter. FILM FUNCTIONS CONTENTS Film performs several functions in the medical imaging process. A knowledge of these functions and how they are affected by the characteristics of different types of film aids in selecting film for a specific clinical procedure and in optimizing radiographic techniques. Image Recording CONTENTS In principle, film is an image converter. It converts radiation, typically light, into various shades of gray or optical density values. An important characteristic of film is that it records, or retains, an image. An exposure of a fraction of a second can create a permanent image. The amount of exposure required to produce an image depends on the sensitivity, or speed, of the film being used. Some films are more sensitive than others because of their design or the way they are processed. The sensitivity of radiographic film is generally selected to provide a compromise between two very important factors: patient exposure and image quality, specifically image noise. A highly sensitive film reduces patient exposure but decreases image quality because of the increased quantum noise. Image Display CONTENTS Most filmed medical images are recorded as transparencies. In this form they can be easily viewed by trans-illumination on a viewbox. The overall appearance and quality of a radiographic image depends on a combination of factors, including the characteristics of the particular film used, the way in which it was exposed, and the processing conditions. When a radiograph emerges from the film processor, the image is permanent and cannot be changed. It is, therefore, important that all factors associated with the production of the image are adjusted to produce optimum image quality. Image Storage CONTENTS Film has been the traditional medium for medical image storage and archiving. If a film is properly processed it will have a lifetime of many years and will, in most cases, outlast its clinical usefulness. The major disadvantages of storing images on film are bulk and inaccessibility. Most clinical facilities must devote considerable space to film storage. Retrieving films from storage generally requires manual search and transportation of the films to a viewing area. Because film performs so many of the functions that make up the radiographic examination, it will continue to be an important element in the medical imaging process. Because of its limitations, however, it will be replaced by digital imaging media in many clinical applications. OPTICAL DENSITY CONTENTS Optical density is the darkness, or opaqueness, of a transparency film and is produced by film exposure and chemical processing. An image contains areas with different densities that are viewed as various shades of gray. CONTENT S The optical density of film is assigned numerical values related to the amount of light that penetrates the film. Increasing film density decreases light penetration. The relationship between density values and light penetration is exponential, as shown below. Light Penetration Relationship between Light Penetration and Film Density A clear piece of film that allows 100% of the light to penetrate has a density value of 0. Radiographic film is never completely clear. The minimum film density is usually in the range of 0.1 to 0.2 density units. This is designated the base plus fog density and is the density of the film base and any inherent fog not associated with exposure. Each unit of density decreases light penetration by a factor of 10. A film area with a density value of 1 allows 10% of the light to penetrate and generally appears as a medium gray when placed on a conventional viewbox. A film area with a density value of 2 allows 10% of 10% (1.0%) light penetration and appears as a relatively dark area when viewed in the usual manner. With normal viewbox illumination, it is possible to see through areas of film with density values of up to approximately 2 units. A density value of 3 corresponds to a light penetration of 0.1% (10% of 10% of 10%). A film with a density value of 3 appears essentially opaque when transilluminated with a conventional viewbox. It is possible, however, to see through such a film using a bright "hot" light. Radiographic film generally has a maximum density value of approximately 3 density units. This is designated the Dmax of the film. The maximum density that can be produced within a specific film depends on the characteristics of the film and processing conditions. Measurement CONTENTS The density of film is measured with a densitometer. A light source passes a small beam of light through the film area to be measured. On the other side of the film, a light sensor (photocell) converts the penetrated light into an electrical signal. A special circuit performs a logarithmic conversion on the signal and displays the results in density units. The primary use of densitometers in a clinical facility is to monitor the performance of film processors. CONTENT S Conventional film is layered, as illustrated in the following figure. The active component is an emulsion layer coated onto a base material. Most film used in radiography has an emulsion layer on each side of the base so that it can be used with two intensifying screens simultaneously. Films used in cameras and in selected radiographic procedures, such as mammography, have one emulsion layer and are called single-emulsion films. FILM STRUCTURE Cross-Section of Typical Radiographic Film Base CONTENTS The base of a typical radiographic film is made of a clear polyester material about 150 µm thick. It provides the physical support for the other film components and does not participate in the image-forming process. In some films, the base contains a light blue dye to give the image a more pleasing appearance when illuminated on a viewbox. Emulsion CONTENTS The emulsion is the active component in which the image is formed and consists of many small silver halide crystals suspended in gelatin. The gelatin supports, separates, and protects the crystals. The typical emulsion is approximately 10 µm thick. Several different silver halides have photographic properties, but the one typically used in medical imaging films is silver bromide. The silver bromide is in the form of crystals, or grains, each containing on the order of 109 atoms. Silver halide grains are irregularly shaped like pebbles, or grains of sand. Two grain shapes are generally used in film emulsions. One form approximates a cubic configuration with its three dimensions being approximately equal. Another form is tabular-shaped grains. The tabular grain is relatively thin in one direction, and its length and width are much larger than its thickness, giving it a relatively large surface area. The primary advantage of tabular grain film in comparison to cubic grain film is that sensitizing dyes can be used more effectively to increase sensitivity and reduce crossover exposure. CONTENT S The production of film density and the formation of a visible image is a two step process. The first step in this photographic process is the exposure of the film to light, which forms an invisible latent image. The second step is the chemical process that converts the latent image into a visible image with a range of densities, or shades of gray. THE PHOTOGRAPHIC PROCESS Film density is produced by converting silver ions into metallic silver, which causes each processed grain to become black. The process is rather complicated and is illustrated by the sequence of events shown below. Sequence of Events That Convert a Transparent Film Grain into Black Metallic Silver Each film grain contains a large number of both silver and bromide ions. The silver ions have a one-electron deficit, which gives them a positive charge. On the other hand, the bromide ions have a negative charge because they contain an extra electron. Each grain has a structural "defect" known as a sensitive speck. A film grain in this condition is relatively transparent. CONTENT S The invisible latent image is converted into a visible image by the chemical process of development. The developer solution supplies electrons that migrate into the sensitized grains and convert the other silver ions into black metallic silver. This causes the grains to become visible black specks in the emulsion. Development Radiographic film is generally developed in an automatic processor. A schematic of a typical processor is shown below. The four components correspond to the four steps in film processing. In a conventional processor, the film is in the developer for 20 to 25 seconds. All four steps require a total of 90 seconds. A Film Processor When a film is inserted into a processor, it is transported by means of a roller system through the chemical developer. Although there are some differences in the chemistry of developer solutions supplied by various manufacturers, most contain the same basic chemicals. Each chemical has a specific function in the development process. Reducer Chemical reduction of the exposed silver bromide grains is the process that converts them into visible metallic silver. This action is typically provided by two chemicals in the solution: phenidone and hydroquinone. Phenidone is the more active and primarily produces the mid to lower portion of the gray scale. Hydroquinone produces the very dense, or dark, areas in an image. Activator The primary function of the activator, typically sodium carbonate, is to soften and swell the emulsion so that the reducers can reach the exposed grains. Restrainer Potassium bromide is generally used as a restrainer. Its function is to moderate the rate of development. Preservative Sodium sulfite, a typical preservative, helps protect the reducing agents from oxidation because of their contact with air. It also reacts with oxidation products to reduce their activity. Hardener Glutaraldehyde is used as a hardener to retard the swelling of the emulsion. This is necessary in automatic processors in which the film is transported by a system of rollers. Fixing CONTENTS After leaving the developer the film is transported into a second tank, which contains the fixer solution. The fixer is a mixture of several chemicals that perform the following functions. Neutralizer When a film is removed from the developer solution, the development continues because of the solution soaked up by the emulsion. It is necessary to stop this action to prevent overdevelopment and fogging of the film. Acetic acid is in the fixer solution for this purpose. Clearing The fixer solution also clears the undeveloped silver halide grains from the film. Ammonium or sodium thiosulfate is used for this purpose. The unexposed grains leave the film and dissolve in the fixer solution. The silver that accumulates in the fixer during the clearing activity can be recovered; the usual method is to electroplate it onto a metallic surface within the silver recovery unit. Preservative Sodium sulfite is used in the fixer as a preservative. Hardener Aluminum chloride is typically used as a hardener. Its primary function is to shrink and harden the emulsion. Wash CONTENTS Film is next passed through a water bath to wash the fixer solution out of the emulsion. It is especially important to remove the thiosulfate. If thiosulfate (hypo) is retained in the emulsion, it will eventually react with the silver nitrate and air to form silver sulfate, a yellowish brown stain. The amount of thiosulfate retained in the emulsion determines the useful lifetime of a processed film. The American National Standard Institute recommends a maximum retention of 30 µg/in2. Dry CONTENTS The final step in processing is to dry the film by passing it through a chamber in which hot air is circulating. CONTENT S One of the most important characteristics of film is its sensitivity, often referred to as film speed. The sensitivity of a particular film determines the amount of exposure required to produce an image. A film with a high sensitivity (speed) requires less exposure than a film with a lower sensitivity (speed). SENSITIVITY The sensitivities of films are generally compared by the amount of exposure required to produce an optical density of 1 unit above the base plus fog density. The sensitivity of radiographic film is generally not described with numerical values but rather with a variety of generic terms such as "half speed," "medium speed," and "high speed." Radiographic films are usually considered in terms of their relative sensitivities rather than their absolute sensitivity values. Although it is possible to choose films with different sensitivities, the choice is limited to a range of not more than four to one by most manufacturers. The following figure compares two films with different sensitivities. Notice that a specific exposure, indicated by the relative exposure step values, produces a higher density in the high sensitivity film; therefore, the production of a specific density value (i.e., 1 density unit) requires less exposure. Comparison of Two Films with Different Sensitivities High sensitivity (speed) films are chosen when the reduction of patient exposure and heat loading of the x-ray equipment are important considerations. Low sensitivity (speed) films are used to reduce image noise. The relationship of film sensitivity to image noise is considered in the section titled, "Image Noise." The sensitivity of film is determined by a number of factors, as shown in the following figure, which include its design, the exposure conditions, and how it is processed. Factors That Affect Film Sensitivity Composition The basic sensitivity characteristic of a film is determined by the composition of the emulsion. The size and shape of the silver halide grains have some effect on film sensitivity. Increasing grain size generally increases sensitivity. Tabular-shaped grains generally produce a higher sensitivity than conventional grains. Although grain size may vary among the various types of radiographic film, most of the difference in sensitivity is produced by adding chemical sensitizers to the emulsion. Processing The effective sensitivity of film depends on several factors associated with the development: • the type of developer • developer concentration • developer replenishment rates • developer contamination • development time • development temperature. In most medical imaging applications, the objective is not to use these factors to chance or vary film sensitivity, but rather to control them to maintain a constant and predictable film sensitivity. Developer Composition The processing chemistry supplied by different manufacturers is not the same. It is usually possible to process a film in a variety of developer solutions, but they will not all produce the same film sensitivity. The variation in sensitivity is usually relatively small, but must be considered when changing from one brand of developer to another. Developer Concentration Developer chemistry is usually supplied to a clinical facility in the form of a concentrate that must be diluted with water before it is pumped into the processor. Mixing errors that result in an incorrect concentration can produce undesirable changes in film sensitivity. Developer Replenishment The film development process consumes some of the developer solution and causes the solution to become less active. Unless the solution is replaced, film sensitivity will gradually decrease. In radiographic film processors, the replenishment of the developer solution is automatic. When a sheet of film enters the processor, it activates a switch that causes fresh solution to be pumped into the development tank. The replenishment rate can be monitored by means of flow meters mounted in the processor. The appropriate replenishment rate depends on the size of the films being processed. A processor used only for chest films generally requires a higher replenishment rate than one used for smaller films. Developer Contamination If the developer solution becomes contaminated with another chemical, such as the fixer solution, abrupt changes in film sensitivity can occur in the form of either an increase or decrease in sensitivity, depending on the type and amount of contamination. Developer contamination is most likely to occur when the film transport rollers are removed or replaced. Development Time When an exposed film enters the developer solution, development is not instantaneous. It is a gradual process during which more and more film grains are developed, resulting in increased film density. The development process is terminated by removing the film from the developer and placing it in the fixer. To some extent, increasing development time increases film sensitivity, since less exposure is required to produce a specific film density. In most radiographic film processors, the development time is usually fixed and is approximately 20-25 seconds. However, there are two exceptions. So-called rapid access film is designed to be processed faster in special processors. Some (but not all) mammographic films will produce a higher contrast when developed for a longer time in an extended cycle processor. Development Temperature The activity of the developer changes with temperature. An increase in temperature speeds up the development process and increases film sensitivity because less exposure is required to produce a specific film density. The temperature of the developer is thermostatically controlled in an automatic processor. It is usually set within the range of 90-95°F. Specific processing temperatures are usually specified by the film manufacturers. Light Color (Wavelength) Film is not equally sensitive to all wavelengths (colors) of light. The spectral sensitivity is a characteristic of film that must be taken into account in selecting film for use with specific intensifying screens and cameras. In general, the film should be most sensitive to the color of the light that is emitted by the intensifying screens, intensifier tubes, cathode ray tubes (CRTs), or lasers. Blue Sensitivity A basic silver bromide emulsion has its maximum sensitivity in the ultraviolet and blue regions of the light spectrum. For many years most intensifying screens contained calcium tungstate, which emits a blue light and is a good match for blue sensitive film. Although calcium tungstate is no longer widely used as a screen material, several contemporary screen materials emit blue light. Green Sensitivity Several image light sources, including image intensifier tubes, CRTs, and some intensifying screens, emit most of their light in the green portion of the spectrum. Film used with these devices must, therefore, be sensitive to green light. Silver bromide can be made sensitive to green light by adding sensitizing dyes to the emulsion. Users must be careful not to use the wrong type of film with intensifying screens. If a blue-sensitive film is used with a green-emitting intensifying screen, the combination will have a drastically reduced sensitivity. Red Sensitivity Many lasers produce red light. Devices that transfer images to film by means of a laser beam must, therefore, be supplied with a film that is sensitive to red light. Safelighting Darkrooms in which film is loaded into cassettes and transferred to processors are usually illuminated with a safelight. A safelight emits a color of light the eye can see but that will not expose film. Although film has a relatively low sensitivity to the light emitted by safelights, film fog can be produced with safelight illumination under certain conditions. The safelight should provide sufficient illumination for darkroom operations but not produce significant exposure to the film being handled. This can usually be accomplished if certain factors are controlled. These include safelight color, brightness, location, and duration of film exposure. The color of the safelight is controlled by the filter. The filter must be selected in relationship to the spectral sensitivity of the film being used. An amberbrown safelight provides a relatively high level of working illumination and adequate protection for blue-sensitive film; type 6B filters are used for this application. However, this type of safelight produces some light that falls within the sensitive range of green-sensitive film. A red safelight is required when working with green-sensitive films. Type GBX filters are used for this purpose. Selecting the appropriate safelight filter does not absolutely protect film because film has some sensitivity to the light emitted by most safelights. Therefore, the brightness of the safelight (bulb size) and the distance between the light and film work surfaces must be selected so as to minimize film exposure. Since exposure is an accumulative effect, handling the film as short a time as possible minimizes exposure. The potential for safelight exposure can be evaluated in a darkroom by placing a piece of film on the work surface, covering most of its area with an opaque object, and then moving the object in successive steps to expose more of the film surface. The time intervals should be selected to produce exposures ranging from a few seconds to several minutes. After the film is processed, the effect of the safelight exposure can be observed. Film is most sensitive to safelight fogging after the latent image is produced but before it is processed. Exposure Time In radiography it is usually possible to deliver a given exposure to film by using many combinations of radiation intensity (exposure rate) and exposure time. Since radiation intensity is proportional to x-ray tube MA, this is equivalent to saying that a given exposure (in milliampere-seconds) can be produced with many combinations of MA and time. This is known as the law of reciprocity. In effect, it means that it is possible to swap radiation intensity (in milliamperes) for exposure time and produce the same film exposure. When a film is directly exposed to x-radiation, the reciprocity law holds true. That is, 100 mAs will produce the same film density whether it is exposed at 1,000 mA and 0.1 seconds or 10 mA and 10 seconds. However, when a film is exposed by light, such as from intensifying screens or image intensifiers, the reciprocity law does not hold. With light exposure, as opposed to direct xray interactions, a single silver halide grain must absorb more than one photon before it can be developed and can contribute to image density. This causes the sensitivity of the film to be somewhat dependent on the intensity of the exposing light. This loss of sensitivity varies to some extent from one type of x-ray film to another. The clinical significance is that MAS values that give the correct density with short exposure times might not do so with long exposure times. PROCESSING QUALITY CONTROL There are many variables, such as temperature and chemical activity, that can affect the level of processing that a film receives. Each type of film is designed and manufactured to have specified sensitivity (speed) and contrast characteristics. Under processing If a film is under processed its sensitivity and contrast will be reduced below the specified values. The loss of sensitivity can usually be compensated for by increasing exposure but the loss of contrast cannot be recovered. Over processing Over processing can increase sensitivity. The contrast of some films might increase with over processing, up to a point, and then decrease. A major problem with over processing is that it increases fog (base plus fog density) which contributes to a decrease in contrast. Processing Accuracy The first step in processing quality control is to set up the correct processing conditions and then verify that the film is being correctly processed. Processing Conditions A specification of recommended processing conditions (temperature, time, type of chemistry, replenishment rates, etc.) should be obtained from the manufacturers of the film and chemistry. Processing Verification After the recommended processing conditions are established for each type of film, a test should be performed to verify that the film is producing the design sensitivity and contrast characteristics as specified by the manufacturer. These specifications are usually provided in the form of a film characteristic curve that can be compared to one produced by the processor being evaluated. Processing Consistency The second step in processing quality control is to reduce the variability over time in the level of processing. Variations in processing conditions can produce significant differences in film sensitivity. One objective of a quality control program is to reduce exposure errors that cause either underexposed or overexposed film. Processors should be checked several times each week to detect changes in processing. This is done by exposing a test film to a fixed amount of light exposure in a sensitometer, running the film through the processor, and then measuring its density with a densitometer. It is not necessary to measure the density of all exposure steps. Only a few exposure steps are selected, as shown in the figure below, to give the information required for processor quality control. The density values are recorded on a chart (see the second figure below) so that fluctuations can be easily detected. Density Values from a Sensitometer Exposed Film Strip Used for Processor Quality Control A Processor Quality Control Chart Base Plus Fog Density One density measurement is made in an area that receives no exposure. This is a measure of the base plus fog density. A low density value is desirable. An increase in the base plus fog density can be caused by over processing a film. Speed A single exposure step that produces a film density of about 1 density unit (above the base plus fog value) is selected and designated the "speed step." The density of this same step is measured each day and recorded on the chart. The density of this step is a general indication of film sensitivity or speed. Abnormal variations can be caused by any of the factors affecting the amount of development. Contrast Two other steps are selected, and the difference between them is used as a measure of film contrast. This is the contrast index. If the two sensitometer steps that are selected represent a two-to-one exposure ratio (50% exposure contrast), the contrast index is the same as the contrast factor discussed earlier. This value is recorded on the chart to detect abnormal changes in film contrast produced by processing conditions. If abnormal variations in film density are observed, all possible causes, such as developer temperature, solution replenishment rates, and contamination, should be evaluated. If more than one processor is used for films from the same imaging device, the level of development by the different processes should be matched. Artifacts A variety of artifacts can be produced during the storage, handling, and processing of film. Bending unprocessed film can produce artifacts or "kink marks," which can appear as either dark or light areas in the processed image. Handling film, especially in a dry environment, can produce a build-up of static electricity; the discharge produces dark spots and streaks. Artifacts can be produced during processing by factors such as uneven roller pressure or the accumulation of a substance on the rollers. This type of artifact is often repeated at intervals corresponding to the circumference of the roller. Application of Digital Image Processing Image processing is any form of signal processing for which the input is an image, such as photographs or frames of video; the output of image processing can be either an image or a set of characteristics or parameters related to the image. Hence there are many ways in which it can be beneficial, hence discuss. UNIT II: IMAGE TRANSFORMS Introduction to Fourier transform – DFT – properties of two-dimensional FT – separability, translation, periodicity, rotation, average value – FFT algorithm – Walsh transform – Hadamard transform – discrete cosine transform. Fourier transform spectroscopy is a measurement technique whereby spectra are collected based on measurements of the coherence of a radiative source, using time-domain or spacedomain measurements of the electromagnetic radiation or other type of radiation. It can be applied to a variety of types of spectroscopy including optical spectroscopy, infrared spectroscopy (FTIR, FT-NIRS), nuclear magnetic resonance (NMR) and magnetic resonance spectroscopic imaging (MRSI),[1] mass spectrometry and electron spin resonance spectroscopy. There are several methods for measuring the temporal coherence of the light (see: fieldautocorrelation), including the continuous wave Michelson or Fourier transform spectrometer and the pulsed Fourier transform spectrograph (which is more sensitive and has a much shorter sampling time than conventional spectroscopic techniques, but is only applicable in a laboratory environment). The term Fourier transform spectroscopy reflects the fact that in all these techniques, a Fourier transform is required to turn the raw data into the actual spectrum, and in many of the cases in optics involving interferometers, is based on the Wiener–Khinchin theorem. n mathematics, the discrete Fourier transform (DFT) is a specific kind of discrete transform, used in Fourier analysis. It transforms one function into another, which is called the frequency domain representation, or simply the DFT, of the original function (which is often a function in the time domain). The DFT requires an input function that is discrete. Such inputs are often created by sampling a continuous function, such as a person's voice. The discrete input function must also have a limited (finite) duration, such as one period of a periodic sequence or a windowed segment of a longer sequence. Unlike the discrete-time Fourier transform (DTFT), the DFT only evaluates enough frequency components to reconstruct the finite segment that was analyzed. The inverse DFT cannot reproduce the entire time domain, unless the input happens to be periodic. Therefore it is often said that the DFT is a transform for Fourier analysis of finitedomain discrete-time functions. The input to the DFT is a finite sequence of real or complex numbers (with more abstract generalizations discussed below), making the DFT ideal for processing information stored in computers. In particular, the DFT is widely employed in signal processing and related fields to analyze the frequencies contained in a sampled signal, to solve partial differential equations, and to perform other operations such as convolutions or multiplying large integers. A key enabling factor for these applications is the fact that the DFT can be computed efficiently in practice using a fast Fourier transform (FFT) algorithm. Relationship between the (continuous) Fourier transform and the discrete Fourier transform. Left column: A continuous function (top) and its Fourier transform (bottom). Center-left column: Periodic summation of the original function (top). Fourier transform (bottom) is zero except at discrete points. The inverse transform is a sum of sinusoids called Fourier series. Center-right column: Original function is discretized (multiplied by a Dirac comb) (top). Its Fourier transform (bottom) is a periodic summation (DTFT) of the original transform. Right column: The DFT (bottom) computes discrete samples of the continuous DTFT. The inverse DFT (top) is a periodic summation of the original samples. The FFT algorithm computes one cycle of the DFT and its inverse is one cycle of the DFT inverse. Illustration of using Dirac comb functions and the convolution theorem to model the effects of sampling and/or periodic summation. The graphs on the right side depict the (finite) coefficients that modulate the infinite amplitudes of a comb function whose teeth are spaced at the reciprocal of the time-domain periodicity. The coefficients in the upper figure are computed by the Fourier series integral. The DFT computes the coefficients in the lower figure. Its similarities to the original transform, S(f), and its relative computational ease are often the motivation for computing a DFT. The lower left graph represents a discrete-time Fourier transform (DTFT). FFT algorithms are so commonly employed to compute DFTs that the term "FFT" is often used to mean "DFT" in colloquial settings. Formally, there is a clear distinction: "DFT" refers to a mathematical transformation or function, regardless of how it is computed, whereas "FFT" refers to a specific family of algorithms for computing DFTs. The terminology is further blurred by the (now rare) synonym finite Fourier transform for the DFT, which apparently predates the term "fast Fourier transform" (Cooley et al., 1969) but has the same initialism. The Fourier transform is a mathematical operation with many applications in physics and engineering that expresses a mathematical function of time as a function of frequency, known as its frequency spectrum; Fourier's theorem guarantees that this can always be done. For instance, the transform of a musical chord made up of pure notes (without overtones) expressed as amplitude as a function of time, is a mathematical representation of the amplitudes and phases of the individual notes that make it up. The function of time is often called the time domain representation, and the frequency spectrum the frequency domain representation. The inverse Fourier transform expresses a frequency domain function in the time domain. Each value of the function is usually expressed as a complex number (called complex amplitude) that can be interpreted as a magnitude and a phase component. The term "Fourier transform" refers to both the transform operation and to the complex-valued function it produces. In the case of a periodic function, such as a continuous, but not necessarily sinusoidal, musical tone, the Fourier transform can be simplified to the calculation of a discrete set of complex amplitudes, called Fourier series coefficients. Also, when a time-domain function is sampled to facilitate storage or computer-processing, it is still possible to recreate a version of the original Fourier transform according to the Poisson summation formula, also known as discrete-time Fourier transform. These topics are addressed in separate articles. For an overview of those and other related operations, refer to Fourier analysis or List of Fourier-related transforms. A discrete cosine transform (DCT) expresses a sequence of finitely many data points in terms of a sum of cosine functions oscillating at different frequencies. DCTs are important to numerous applications in science and engineering, from lossy compression of audio (e.g. MP3) and images (e.g. JPEG) (where small high-frequency components can be discarded), to spectral methods for the numerical solution of partial differential equations. The use of cosine rather than sine functions is critical in these applications: for compression, it turns out that cosine functions are much more efficient (as described below, fewer functions are needed to approximate a typical signal), whereas for differential equations the cosines express a particular choice of boundary conditions. In particular, a DCT is a Fourier-related transform similar to the discrete Fourier transform (DFT), but using only real numbers. DCTs are equivalent to DFTs of roughly twice the length, operating on real data with even symmetry (since the Fourier transform of a real and even function is real and even), where in some variants the input and/or output data are shifted by half a sample. There are eight standard DCT variants, of which four are common. The most common variant of discrete cosine transform is the type-II DCT, which is often called simply "the DCT"; its inverse, the type-III DCT, is correspondingly often called simply "the inverse DCT" or "the IDCT". Two related transforms are the discrete sine transform (DST), which is equivalent to a DFT of real and odd functions, and the modified discrete cosine transform (MDCT), which is based on a DCT of overlapping data. UNIT III: IMAGE ENHANCEMENT Definition – spatial domain methods – frequency domain methods – histogram – modification techniques – neighborhood averaging – median filtering – low pass filtering – averaging of multiple images – image sharpening by differentiation and high pass filtering. Image Enhancement Image enhancement is the improvement of digital image quality (wanted e.g. for visual inspection or for machine analysis), without knowledge about the source of degradation. If the source of degradation is known, one calls the process image restoration. Both are iconical processes, viz. input and output are images. Many different, often elementary and heuristic methods are used to improve images in some sense. The problem is, of course, not well defined, as there is no objective measure for image quality. Here, we discuss a few recipes that have shown to be useful both for the human observer and/or for machine recognition. These methods are very problem-oriented: a method that works fine in one case may be completely inadequate for another problem. Apart from geometrical transformations some preliminary greylevel adjustments may be indicated, to take into account imperfections in the acquisition system. This can be done pixel by pixel, calibrating with the output of an image with constant brightness. Frequently spaceinvariant greyvalue transformations are also done for contrast stretching, range compression, etc. The critical distribution is the relative frequency of each greyvalue, the greyvalue histogram . Examples of simple greylevel transformations in this domain are: Greyvalues can also be modified such that their histogram has any desired shape, e.g flat (every greyvalue has the same probability). All examples assume point processing, viz. each output pixel is the function of one input pixel; usually, the transformation is implemented with a lookup table: Physiological experiments have shown that very small changes in luminance are recognized by the human visual system in regions of continuous greyvalue, and not at all seen in regions of some discontinuities. Therefore, a design goal for image enhancement often is to smooth images in more uniform regions, but to preserve edges. On the other hand, it has also been shown that somehow degraded images with enhancement of certain features, e.g. edges, can simplify image interpretation both for a human observer and for machine recognition. A second design goal, therefore, is image sharpening. All these operations need neighbourhood processing, viz. the output pixel is a function of some neighbourhood of the input pixels: These operations could be performed using linear operations in either the frequency or the spatial domain. We could, e.g. design, in the frequency domain, one-dimensional low or high pass filters ( Filtering), and transform them according to McClellan's algorithm ([McClellan73] to the two-dimensional case. Unfortunately, linear filter operations do not really satisfy the above two design goals; in this book, we limit ourselves to discussing separately only (and superficially) Smoothing and Sharpening. Here is a trick that can speed up operations substantially, and serves as an example for both point and neighbourhood processing in a binary image: we number the pixels in a neighbourhood like: and denote the binary values (0,1) by bi (i = 0,8); we then concatenate the bits into a 9-bit word, like b8b7b6b5b4b3b2b1b0. This leaves us with a 9-bit greyvalue for each pixel, hence a new image (an 8-bit image with b8 taken from the original binary image will also do). The new image corresponds to the result of a convolution of the binary image, with a matrix containing as coefficients the powers of two. This neighbour image can then be passed through a look-up table to perform erosions, dilations, noise cleaning, skeletonization, etc. Apart from point and neighbourhood processing, there are also global processing techniques, i.e. methods where every pixel depends on all pixels of the whole image. Histogram methods are usually global, but they can also be used in a neighbourhood. Spatial domain methods The value of a pixel with coordinates (x,y) in the enhanced image is the result of performing some operation on the pixels in the neighbourhood of (x,y) in the input image, F. Neighbourhoods can be any shape, but usually they are rectangular. Grey scale manipulation The simplest form of operation is when the operator T only acts on a in the input image, that is transformation or mapping. pixel neighbourhood only depends on the value of F at (x,y). This is a grey scale The simplest case is thresholding where the intensity profile is replaced by a step function, active at a chosen threshold value. In this case any pixel with a grey level below the threshold in the input image gets mapped to 0 in the output image. Other pixels are mapped to 255. Other grey scale transformations are outlined in figure 1 below. Figure 1: Tone-scale adjustments. Histogram Equalization Histogram equalization is a common technique for enhancing the appearance of images. Suppose we have an image which is predominantly dark. Then its histogram would be skewed towards the lower end of the grey scale and all the image detail is compressed into the dark end of the histogram. If we could `stretch out' the grey levels at the dark end to produce a more uniformly distributed histogram then the image would become much clearer. Figure 2: The original image and its histogram, and the equalized versions. Both images are quantized to 64 grey levels. Histogram equalization involves finding a grey scale transformation function that creates an output image with a uniform histogram (or nearly so). How do we determine this grey scale transformation function? Assume our grey levels are continuous and have been normalized to lie between 0 and 1. We must find a transformation T that maps grey values r in the input image F to grey values s = T(r) in the transformed image . It is assumed that T is single valued and monotonically increasing, and for . The inverse transformation from s to r is given by r = T-1(s). If one takes the histogram for the input image and normalizes it so that the area under the histogram is 1, we have a probability distribution for grey levels in the input image Pr(r). If we transform the input image to get s = T(r) what is the probability distribution Ps(s) ? From probability theory it turns out that where r = T-1(s). Consider the transformation This is the cumulative distribution function of r. Using this definition of T we see that the derivative of s with respect to r is Substituting this back into the expression for Ps, we get for all want. .Thus, Ps(s) is now a uniform distribution function, which is what we Discrete Formulation We first need to determine the probability distribution of grey levels in the input image. Now where nk is the number of pixels having grey level k, and N is the total number of pixels in the image. The transformation now becomes Note that , the index ,and . The values of sk will have to be scaled up by 255 and rounded to the nearest integer so that the output values of this transformation will range from 0 to 255. Thus the discretization and rounding of sk to the nearest integer will mean that the transformed image will not have a perfectly uniform histogram. Image Smoothing The aim of image smoothing is to diminish the effects of camera noise, spurious pixel values, missing pixel values etc. There are many different techniques for image smoothing; we will consider neighbourhood averaging and edge-preserving smoothing. Neighbourhood Averaging Each point in the smoothed image, is obtained from the average pixel value in a neighbourhood of (x,y) in the input image. For example, if we use a neighbourhood around each pixel we would use the mask 1/9 1/9 1/9 1/9 1/9 1/9 1/9 1/9 1/9 Each pixel value is multiplied by , summed, and then the result placed in the output image. This mask is successively moved across the image until every pixel has been covered. That is, the image is convolved with this smoothing mask (also known as a spatial filter or kernel). However, one usually expects the value of a pixel to be more closely related to the values of pixels close to it than to those further away. This is because most points in an image are spatially coherent with their neighbours; indeed it is generally only at edge or feature points where this hypothesis is not valid. Accordingly it is usual to weight the pixels near the centre of the mask more strongly than those at the edge. Some common weighting functions include the rectangular weighting function above (which just takes the average over the window), a triangular weighting function, or a Gaussian. In practice one doesn't notice much difference between different weighting functions, although Gaussian smoothing is the most commonly used. Gaussian smoothing has the attribute that the frequency components of the image are modified in a smooth manner. Smoothing reduces or attenuates the higher frequencies in the image. Mask shapes other than the Gaussian can do odd things to the frequency spectrum, but as far as the appearance of the image is concerned we usually don't notice much. Edge preserving smoothing Neighbourhood averaging or Gaussian smoothing will tend to blur edges because the high frequencies in the image are attenuated. An alternative approach is to use median filtering. Here we set the grey level to be the median of the pixel values in the neighbourhood of that pixel. The median m of a set of values is such that half the values in the set are less than m and half are greater. For example, suppose the pixel values in a neighbourhood are (10, 20, 20, 15, 20, 20, 20, 25, 100). If we sort the values we get (10, 15, 20, 20, |20|, 20, 20, 25, 100) and the median here is 20. The outcome of median filtering is that pixels with outlying values are forced to become more like their neighbours, but at the same time edges are preserved. Of course, median filters are nonlinear. Median filtering is in fact a morphological operation. When we erode an image, pixel values are replaced with the smallest value in the neighbourhood. Dilating an image corresponds to replacing pixel values with the largest value in the neighbourhood. Median filtering replaces pixels with the median value in the neighbourhood. It is the rank of the value of the pixel used in the neighbourhood that determines the type of morphological operation. Figure 3: Image of Genevieve; with salt and pepper noise; the result of averaging; and the result of median filtering. Image sharpening The main aim in image sharpening is to highlight fine detail in the image, or to enhance detail that has been blurred (perhaps due to noise or other effects, such as motion). With image sharpening, we want to enhance the high-frequency components; this implies a spatial filter shape that has a high positive component at the centre (see figure 4 below). Figure 4: Frequency domain filters (top) and their corresponding spatial domain counterparts (bottom). A simple spatial filter that achieves image sharpening is given by -1/9 -1/9 -1/9 -1/9 8/9 -1/9 -1/9 -1/9 -1/9 Since the sum of all the weights is zero, the resulting signal will have a zero DC value (that is, the average signal value, or the coefficient of the zero frequency term in the Fourier expansion). For display purposes, we might want to add an offset to keep the result in the range. High boost filtering We can think of high pass filtering in terms of subtracting a low pass image from the original image, that is, High pass = Original - Low pass. However, in many cases where a high pass image is required, we also want to retain some of the low frequency components to aid in the interpretation of the image. Thus, if we multiply the original image by an amplification factor A before subtracting the low pass image, we will get a high boost or high frequency emphasis filter. Thus, Now, if A = 1 we have a simple high pass filter. When A > 1 part of the original image is retained in the output. A simple filter for high boost filtering is given by -1/9 -1/9 -1/9 -1/9 /9 -1/9 -1/9 -1/9 -1/9 where . UNIT IV: IMAGE ENCODING Objective and subjective fidelity criteria – basic encoding process – the mapping – the quantizer– the coder – differential – encoding – contour encoding – run length encoding image encoding – relative to fidelity criterion – differential pulse code modulation. The objective fidelity criteria used in comparing images do not always agree with the subjective fidelity criteria. A new objective fidelity criterion, which is called derivative SNR, is developed in this study and it is compared with a known objective criterion called a.c. SNR. The sensitivity of the human eye to the edges in an image is considered while developing the new criterion. Subjective tests performed on a group of observers show that the subjective decisions agree more with the new and less with the old objective criteria Memory has the ability to encode, store and recall information. Memories give an organism the capability to learn and adapt from previous experiences as well as build relationships. Encoding allows the perceived item of use or interest to be converted into a construct that can be stored within the brain and recalled later from short term or long term memory. Working memory stores information for immediate use or manipulation which is aided through hooking onto previously archived items already present in the long-term memory of an individual. olutions to your tough image processing and geospatial challenges require flexible tools for data exploration and analysis, a library of standard techniques, and a high-level language for algorithm development. Join us for this free seminar to discover how MATLAB provides a complete environment for image and video processing, mapping, geospatial data analysis, and parallel computing. During this half-day seminar, MathWorks engineers will deliver an introduction to MATLAB and related tools for image, video, and mapping applications. Specific demonstrations with realworld examples will show you how to: Analyze digital elevation models with image processing functionality Drape aerial imagery on a 3D map display Access data through Web Map Service (WMS) servers Create mosaics using video processing techniques for registration Process arbitrarily large images using block processing workflows Improve performance with Parallel Computing Toolbox Differential pulse-code modulation (DPCM) is a signal encoder that uses the baseline of pulsecode modulation (PCM) but adds some functionalities based on the prediction of the samples of the signal. The input can be an analog signal or a digital signal. If the input is a continuous-time analog signal, it needs to be sampled first so that a discrete-time signal is the input to the DPCM encoder. Option 1: take the values of two consecutive samples; if they are analog samples, quantize them; calculate the difference between the first one and the next; the output is the difference, and it can be further entropy coded. Option 2: instead of taking a difference relative to a previous input sample, take the difference relative to the output of a local model of the decoder process; in this option, the difference can be quantized, which allows a good way to incorporate a controlled loss in the encoding. Applying one of these two processes, short-term redundancy (positive correlation of nearby values) of the signal is eliminated; compression ratios on the order of 2 to 4 can be achieved if differences are subsequently entropy coded, because the entropy of the difference signal is much smaller than that of the original discrete signal treated as independent samples. DPCM was invented by C. Chapin Cutler at Bell Labs in 1950; his patent includes both methods.[1] Shannon's rate-distortion function provides a potentially useful lower bound against which to compare the rate-versus-distortion performance of practical encoding-transmission systems. However, this bound is not applicable unless one can arrive at a numerically-valued measure of distortion which is in reasonable correspondence with the subjective evaluation of the observer or interpreter. We have attempted to investigate this choice of distortion measure for monochrome still images. This investigation has considered a class of distortion measures for which it is possible to simulate the optimum (in a rate-distortion sense) encoding. Such simulation was performed at a fixed rate for various measures in the class and the results compared subjectively by observers. For several choices of transmission rate and original images, one distortion measure was fairly consistently rated as yielding the most satisfactory appearing encoded images. We describe novel methods of feature extraction for recognition of single isolated character images. Our approach is flexible in that the same algorithms can be used, without modification, for feature extraction in a variety of OCR problems. These include handwritten, machine-print, grayscale, binary and lowresolution character recognition. We use the gradient representation as the basis for extraction of lowlevel, structural and stroke-type features. These algorithms require a few simple arithmetic operations per image pixel which makes them suitable for real-time applications. A description of the algorithms and experiments with several data sets are presented in this paper. Experimental results using artificial neural networks are presented. Our results demonstrate high performance of these features when tested on data sets distinct from the training data. UNIT V: IMAGE ANALYSIS AND COMPUTER VISION Typical computer vision system – image analysis techniques – spatial feature extraction – amplitude and histogram features - transforms features – edge detection – gradient operators – boundary extraction – edge linking – boundary representation – boundary matching – shape representation. Computer vision is a field that includes methods for acquiring, processing, analysing, and understanding images and, in general, high-dimensional data from the real world in order to produce numerical or symbolic information, e.g., in the forms of decisions.[1][2][3] A theme in the development of this field has been to duplicate the abilities of human vision by electronically perceiving and understanding an image.[4] This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.[5] Computer vision has also been described as the enterprise of automating and integrating a wide range of processes and representations for vision perception.[6] Applications range from tasks such as industrial machine vision systems which, say, inspect bottles speeding by on a production line, to research into artificial intelligence and computers or robots that can comprehend the world around them. The computer vision and machine vision fields have significant overlap. Computer vision covers the core technology of automated image analysis which is used in many fields. Machine vision usually refers to a process of combining automated image analysis with other methods and technologies to provide automated inspection and robot guidance in industrial applications. As a scientific discipline, computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner. As a technological discipline, computer vision seeks to apply its theories and models to the construction of computer vision systems. Examples of applications of computer vision include systems for: Controlling processes, e.g., an industrial robot; Navigation, e.g., by an autonomous vehicle or mobile robot; Detecting events, e.g., for visual surveillance or people counting; Organizing information, e.g., for indexing databases of images and image sequences; Modeling objects or environments, e.g., medical image analysis or topographical modeling; Interaction, e.g., as the input to a device for computer-human interaction, and Automatic inspection, e.g., in manufacturing applications. Sub-domains of computer vision include scene reconstruction, event detection, video tracking, object recognition, learning, indexing, motion estimation, and image restoration. In most practical computer vision applications, the computers are pre-programmed to solve a particular task, but methods based on learning are now becoming increasingly common Image analysis is the extraction of meaningful information from images; mainly from digital images by means of digital image processing techniques. Image analysis tasks can be as simple as reading bar coded tags or as sophisticated as identifying a person from their face. Computers are indispensable for the analysis of large amounts of data, for tasks that require complex computation, or for the extraction of quantitative information. On the other hand, the human visual cortex is an excellent image analysis apparatus, especially for extracting higherlevel information, and for many applications — including medicine, security, and remote sensing — human analysts still cannot be replaced by computers. For this reason, many important image analysis tools such as edge detectors and neural networks are inspired by human visual perception models. In this paper, we present an improved method for detecting LSB matching steganography in gray-scale image. Our improvements focus on three aspects: (1) instead of using the amplitude of local extrema of the image's histogram in the previous work, we turn to considering the sum of the amplitude of each point in the histogram; (2) incorporating the calibration (downsample) technique with the current method; (3) the sum/difference image (which is defined as the sum or difference of two adjacent pixels in the original image) is taken into consideration to provide additional statistical features. Extensive experimental results show that the novel steganalyzer out-performs the previous ones.