
Data Cleaning and Transformation

Helena Galhardas

DEI IST

(based on the slides: “A Survey of Data Quality Issues in Cooperative Information Systems”, Carlo Batini, Tiziana Catarci, Monica Scannapieco, 23rd International Conference on Conceptual Modelling (ER 2004))

Agenda

Introduction

Data Quality Problems

Data Quality Dimensions

Relevant activities in Data Quality

When materializing the integrated data (data warehousing)…

[Figure: SOURCE DATA → Data Extraction → Data Transformation → Data Loading → TARGET DATA]

ETL: Extraction, Transformation and Loading

70% of the time in a data warehousing project is spent on the ETL process

Why Data Cleaning and Transformation?

Data in the real world is dirty:

incomplete: lacking attribute values, lacking certain attributes of interest, or containing only aggregate data

e.g., occupation=“”

noisy: containing errors or outliers (spelling, phonetic and typing errors, word transpositions, multiple values in a single free-form field)

e.g., Salary=“-10”

inconsistent: containing discrepancies in codes or names (synonyms and nicknames, prefix and suffix variations, abbreviations, truncation and initials)

e.g., Age=“42” Birthday=“03/07/1997”

e.g., Was rating “1,2,3”, now rating “A, B, C”

e.g., discrepancy between duplicate records

Why Is Data Dirty?

Incomplete data comes from:

data value not available when collected

different criteria between the time when the data was collected and when it is analyzed.

human/hardware/software problems

Noisy data comes from:

data collection: faulty instruments

data entry: human or computer errors

data transmission

Inconsistent (and redundant) data comes from:

Different data sources, hence non-uniform naming conventions/data codes

Functional dependency and/or referential integrity violation

Why is Data Quality Important?

Activity of converting source data into target data without errors, duplicates, and inconsistencies, i.e.,

Cleaning and Transforming to get…

High-quality data!

No quality data, no quality decisions!

Quality decisions must be based on good quality data (e.g., duplicate or missing data may cause incorrect or even misleading statistics)

Research issues related to DQ

[Figure: research areas related to DQ and the issues attached to them]

Research areas: Data Integration, Data Cleaning, Statistical Data Analysis, Data Mining, Data Quality Management, Information Systems, Knowledge Representation

Issues shown: Source Selection, Source Composition, Query Result Selection, Time Synchronization, Conflict Resolution, Record Matching (deduplication), Data Transformation, Error Localization, DB profiling, Patterns in text strings, Edit-imputation, Record Linkage, Assessment, Process Improvement, Tradeoff Cost/Optimization, …

Research areas in DQ systems

[Figure: research areas in DQ systems: Models; Measurement/Improvement Techniques; Measurement/Improvement Tools and Frameworks; Application Domains (EGov, Scientific Data, Web Data, …)]

Application contexts

Integrate data from different sources

E.g., populating a DW from different operational data stores

Eliminate errors and duplicates within a single source

E.g., duplicates in a file of customers

Migrate data from a source schema into a different fixed target schema

E.g., discontinued application packages

Convert poorly structured data into structured data

E.g., processing data collected from the Web


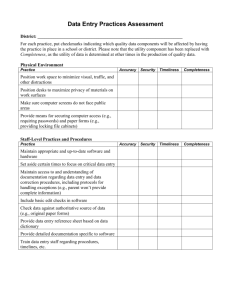

Data Quality Dimensions

Accuracy

Errors in data

Example: “Jhn” vs. “John”

Currency

Lack of updated data

Example: Residence (Permanent) Address: outdated vs. up to date

Consistency

Discrepancies in the data

Example: ZIP Code and City must be consistent

Completeness

Lack of data

Partial knowledge of the records in a table or of the attributes in a record

Example completeness

Existing technology

Ad-hoc programs written in a programming language like C or Java, or using an RDBMS proprietary language

Programs difficult to optimize and maintain

RDBMS mechanisms for guaranteeing integrity constraints

Do not address important data instance problems

Data transformation scripts using an ETL (Extraction-Transformation-Loading) or data quality tool

Typical architecture of a DQ system

[Figure: typical architecture of a DQ system. Components shown: SOURCE DATA, Data Extraction, Data Analysis, Data Loading, TARGET DATA, Human Knowledge (appears twice), Metadata, Schema Integration, Dictionaries]

Data Quality problems

Several taxonomies

Barateiro, J. and Galhardas, H. (2005). “A survey of data quality tools”. Datenbank-Spektrum, 14:15-21.

Oliveira, P. (2009). “Detecção e correcção de problemas de qualidade de dados: Modelo, Sintaxe e Semântica”. PhD thesis, U. do Minho.

Kim, W., Choi, B.-J., Hong, E.-K., Kim, S.-K., and Lee, D. (2003). “A taxonomy of dirty data”. Data Mining and Knowledge Discovery, 7:81-99.

Mueller, H. and Freytag, J.C. (2003). “Problems, methods, and challenges in comprehensive data cleansing”. Technical report, Humboldt-Universitaet zu Berlin.

Rahm, E. and Do, H. H. (2000). “Data cleaning: Problems and current approaches”. Bulletin of the Technical Committee on Data Engineering, Special Issue on Data Cleaning, 23:3-13.

Data quality problems (1/3)

Schema-level data quality problems: prevented with better schema design, schema translation and integration.

Instance-level data quality problems: errors and inconsistencies in the data that are not prevented at the schema level.

Data quality problems (2/3)

Schema level data quality problems

Avoided by an RDBMS

Missing data – product price not filled in

Wrong data type – “abc” in product price

Wrong data value – 0.5 in product tax (IVA, the Portuguese VAT)

Dangling data – category identifier of product does not exist

Exact duplicate data – different persons with same ssn

Generic domain constraints – incorrect invoice price

Not avoided by an RDBMS

Wrong categorical data – countries and corresponding states

Outdated temporal data – just-in-time requirement

Inconsistent spatial data – coordinates and shapes

Name conflicts – person vs person or person vs client

Structural conflicts – addresses

Data quality problems (3/3)

Instance level data quality problems

Single record

Missing data in a not null field – ssn:-9999999

Erroneous data – price:5 but real price:50

Misspellings: José Maria Silva vs José Maria Sliva

Embedded values: Prof. José Maria Silva

Misfielded values: city: Portugal

Ambiguous data: J. Maria Silva; Miami Florida, Ohio

Multiple records

Duplicate records: Name:Jose Maria Silva, Birth:01/01/1950 and Name:José Maria Sliva, Birth:01/01/1950

Contradicting records: Name:José Maria Silva, Birth:01/01/1950 and Name:José Maria Silva, Birth:01/01/1956

Non-standardized data: José Maria Silva vs Silva, José Maria

Data Quality Dimensions

Traditional data quality dimensions

Accuracy

Completeness

Time-related dimensions: Currency, Timeliness, and Volatility

Consistency

Their definitions do not provide quantitative measures, so one or more metrics have to be associated with each dimension

For each metric, one or more measurement methods have to be provided regarding: (i) where the measurement is taken; (ii) what data are included; (iii) the measurement device; and (iv) the scale on which results are reported.

Schema quality dimensions are also defined

Accuracy

Closeness between a value v and a value v’, considered as the correct representation of the real-world phenomenon that v aims to represent.

Ex: for a person name “John”, v’=John is correct, v=Jhn is incorrect

Syntactic accuracy: closeness of a value v to the elements of the corresponding definition domain D

Ex: if v=Jack, even if v’=John, v is considered syntactically correct

Measured by means of comparison functions (e.g., edit distance) that return a score

Semantic accuracy: closeness of the value v to the true value v’

Measured with a <yes, no> or <correct, not correct> domain

Coincides with correctness

The corresponding true value has to be known
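The two notions of accuracy above can be sketched as follows. This is only an illustration: the Levenshtein edit distance is one possible comparison function, and the domain `NAMES` and the function names are hypothetical, not taken from the slides.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum number of single-character
    insertions, deletions and substitutions that turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or keep)
        prev = curr
    return prev[-1]


def syntactic_accuracy(v: str, domain: list[str]) -> int:
    """Distance from v to the closest element of the domain D (0 means v is in D)."""
    return min(edit_distance(v, d) for d in domain)


def semantic_accuracy(v: str, true_value: str) -> bool:
    """<yes, no> check: requires the true value v' to be known."""
    return v == true_value


NAMES = ["John", "Jack", "Mary"]  # hypothetical definition domain D

jhn_score = syntactic_accuracy("Jhn", NAMES)    # close to "John", but not in D
jack_score = syntactic_accuracy("Jack", NAMES)  # in D, so syntactically correct
jack_is_true = semantic_accuracy("Jack", "John")  # wrong person, semantically incorrect
```

The value "Jack" scores perfectly on syntactic accuracy while failing the semantic check, which is the distinction the slide draws.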

Granularity of accuracy definition

Accuracy may refer to:

a single value of a relation attribute

an attribute or column

a relation

the whole database

Metrics for quantifying accuracy

Weak accuracy error

Characterizes accuracy errors that do not affect identification of tuples

Strong accuracy error

Characterizes accuracy errors that affect identification of tuples

Percentage of accurate tuples

Characterizes the fraction of accurate matched tuples

Completeness

“The extent to which data are of sufficient breadth, depth, and scope for the task in hand.”

Three types:

Schema completeness: degree to which concepts and their properties are not missing from the schema

Column completeness: evaluates the missing values for a specific property or column in a table.

Population completeness: evaluates missing values with respect to a reference population

Completeness of relational data

The completeness of a table characterizes the extent to which the table represents the real world.

Can be characterized wrt:

The presence/absence and meaning of null values

Example: in Person(name, surname, birthdate, email), a null email may mean that the person has no email (no incompleteness), that the email exists but is not known (incompleteness), or that it is not known whether the person has an email (incompleteness may or may not be the case)

Validity of open world assumption (OWA) or closed world assumption (CWA)

OWA: can state neither the truth nor the falsity of facts not represented in the tuples of a relation

CWA: only the values actually present in a relational table, and no other values, represent facts of the real world.

Metrics for quantifying completeness (1)

Model without null values with OWA

Need a reference relation ref(r) for a relation r, containing all the tuples that satisfy the schema of r

C(r) = |r|/|ref(r)|

Example: according to a registry of the Lisbon municipality, the number of citizens is 2 million. If a company stores data about Lisbon citizens for the purpose of its business and the number of stored citizens is 1,400,000, then C(r) = 0.7
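As a quick check of the Lisbon figures, the ratio C(r) = |r|/|ref(r)| can be computed directly. The function name and its size-based signature are assumptions made for this sketch.

```python
def completeness(size_r: int, size_ref: int) -> float:
    """C(r) = |r| / |ref(r)|, given the sizes of r and of its reference relation."""
    if size_ref <= 0:
        raise ValueError("the reference relation must not be empty")
    return size_r / size_ref


# Figures from the Lisbon example: 1,400,000 stored citizens out of 2 million.
lisbon = completeness(1_400_000, 2_000_000)
print(f"C(r) = {lisbon:.1f}")
```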

Metrics for quantifying completeness (2)

Model with null values with CWA: specific definitions for different granularities:

Values: to capture the presence of null values for some fields of a tuple

Tuple: to characterize the completeness of a tuple wrt the values of all its fields:

Evaluates the % of specified values in the tuple wrt the total number of attributes of the tuple itself

Example: Student(stID, name, surname, vote, examdate)

Equal to 1 for (6754, Mike, Collins, 29, 7/17/2004)

Equal to 0.8 for (6578, Julliane, Merrals, NULL, 7/17/2004)
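The tuple-level metric can be reproduced on the two Student tuples. This is a minimal sketch that assumes NULL is represented by Python's `None`; the function name is hypothetical.

```python
def tuple_completeness(t: tuple) -> float:
    """Fraction of specified (non-null) values among the attributes of the tuple."""
    return sum(value is not None for value in t) / len(t)


# Student(stID, name, surname, vote, examdate)
mike = (6754, "Mike", "Collins", 29, "7/17/2004")
julliane = (6578, "Julliane", "Merrals", None, "7/17/2004")

print(tuple_completeness(mike))      # all five values specified
print(tuple_completeness(julliane))  # four of five values specified
```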

Metrics for quantifying completeness (3)

Attribute: to measure the number of null values of a specific attribute in a relation

Evaluates the % of specified values in the column corresponding to the attribute wrt the total number of values that should have been specified.

Example: when calculating the average of votes in Student, a notion of the completeness of Vote is useful

Relations : to capture the presence of null values in the whole relation

Measures how much info is represented in the relation by evaluating the content of the info actually available wrt the maximum possible content, i.e., without null values.
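Attribute and relation completeness can be sketched in the same way. The assumptions here are that NULL is represented by `None`, that every value "should have been specified", and that the third row is invented for illustration.

```python
def attribute_completeness(rows: list[tuple], column: int) -> float:
    """Fraction of non-null values in one column of the relation."""
    return sum(row[column] is not None for row in rows) / len(rows)


def relation_completeness(rows: list[tuple]) -> float:
    """Fraction of non-null values over all cells of the relation."""
    total_cells = sum(len(row) for row in rows)
    specified = sum(value is not None for row in rows for value in row)
    return specified / total_cells


# Student(stID, name, surname, vote, examdate); the last row is hypothetical.
students = [
    (6754, "Mike", "Collins", 29, "7/17/2004"),
    (6578, "Julliane", "Merrals", None, "7/17/2004"),
    (7001, "Ana", None, None, "7/18/2004"),
]

VOTE = 3  # index of the vote attribute
vote_completeness = attribute_completeness(students, VOTE)
overall = relation_completeness(students)
```

With only one vote out of three specified, an average computed over Vote would rest on a third of the students, which is the kind of warning the attribute-level metric is meant to give.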

Time-related dimensions

Currency: concerns how promptly data are updated

Example: if the residential address of a person is up to date (it corresponds to the address where the person currently lives), then the currency is high

Volatility: characterizes the frequency with which data vary in time

Example: Birth dates (volatility zero) vs stock quotes (high degree of volatility)

Timeliness: expresses how current data are for the task at hand

Example: The timetable for university courses can be current by containing the most recent data, but it is not timely if it becomes available only after the start of classes.

Metrics of time-related dimensions

Last update metadata for currency

Straightforward for data types that change with a fixed frequency

Length of time that data remain valid for volatility

Currency + check that data are available before the planned usage time for timeliness
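One possible reading of these metrics is sketched below. It assumes that currency is measured as the age of the data since the last update, that volatility is given as a validity period, and that timeliness combines both with an availability check. All names and dates are hypothetical.

```python
from datetime import datetime, timedelta


def currency(last_update: datetime, now: datetime) -> timedelta:
    """Age of the data: time elapsed since the last recorded update."""
    return now - last_update


def is_timely(last_update: datetime, validity: timedelta,
              available_at: datetime, planned_use: datetime) -> bool:
    """Timely = available before the planned usage time and still valid then."""
    still_valid = currency(last_update, planned_use) <= validity
    return available_at <= planned_use and still_valid


# Hypothetical course timetable: current, but published after classes start.
updated = datetime(2024, 9, 1)
published = datetime(2024, 9, 20)
classes_start = datetime(2024, 9, 15)
semester = timedelta(days=150)  # assumed validity period of a timetable

timely = is_timely(updated, semester, published, classes_start)
```

The timetable is recent enough to be valid when classes start, yet `timely` is false because it only becomes available afterwards.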

Consistency

Captures the violation of semantic rules defined over a set of data items, where data items can be tuples of relational tables or records in a file

Integrity constraints in relational data

Domain constraints, Key, inclusion and functional dependencies

Data edits: semantic rules in statistics

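A functional dependency is one of the integrity constraints mentioned above, and checking it amounts to looking for one left-hand value mapped to several right-hand values. The sketch below assumes rows are dictionaries; the attribute names and the sample data are hypothetical.

```python
from collections import defaultdict


def fd_violations(rows: list[dict], lhs: str, rhs: str) -> dict:
    """Return the lhs values that map to more than one rhs value,
    i.e. the violations of the functional dependency lhs -> rhs."""
    seen = defaultdict(set)
    for row in rows:
        seen[row[lhs]].add(row[rhs])
    return {key: values for key, values in seen.items() if len(values) > 1}


# Hypothetical address records; the dependency checked is zip -> city.
addresses = [
    {"zip": "1000-001", "city": "Lisboa"},
    {"zip": "1000-001", "city": "Porto"},
    {"zip": "4000-010", "city": "Porto"},
]

violations = fd_violations(addresses, "zip", "city")
```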

Evolution of dimensions

Traditional dimensions are Accuracy, Completeness, Timeliness, Consistency

With the advent of networks, the number of sources increases dramatically, and data often become “found data”.

Federated data, where many disparate data are integrated, are highly valued

Data collection and analysis are frequently disconnected.

As a consequence, we have to revisit the concept of DQ, and new dimensions become fundamental.

Other dimensions

Interpretability: concerns the documentation and metadata that are available to correctly interpret the meaning and properties of data sources

Synchronization between different time series: concerns proper integration of data having different time stamps.

Accessibility: measures the ability of the user to access the data given his/her own culture, physical status/functions, and available technologies.

Relevant activities in DQ


Standardization/normalization

Record Linkage/Object identification/Entity identification/Record matching

Data integration

Schema matching

Instance conflict resolution

Source selection

Result merging

Quality composition

Error localization/Data Auditing

Data editing-imputation/Deviation detection

Data profiling

Structure induction

Data correction/data cleaning/data scrubbing

Schema cleaning

Standardization/normalization

Modification of data with new data according to defined standards or reference formats

Example:

Change “Bob” to “Robert”

Change “Channel Str.” to “Channel Street”
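A minimal way to apply such reference formats is a token-by-token lookup. The two dictionaries below are hypothetical reference tables holding only the entries from the example.

```python
# Hypothetical reference dictionaries; real ones would be much larger.
NICKNAMES = {"Bob": "Robert"}
ABBREVIATIONS = {"Str.": "Street"}


def standardize(value: str, mapping: dict[str, str]) -> str:
    """Replace every whitespace-separated token that has a standard form."""
    return " ".join(mapping.get(token, token) for token in value.split())


name = standardize("Bob", NICKNAMES)
street = standardize("Channel Str.", ABBREVIATIONS)
print(name, "|", street)
```

A plain lookup like this is case-sensitive and ignores context, so it is only a starting point for a real standardization step.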

Record Linkage/Object identification/ Entity identification/Record matching/Duplicate detection

Activity required to identify whether data in the same source or in different ones represent the same object of the real world
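As an illustration, two records can be compared on a normalized name plus an exact match on birth date. This is only a sketch: the similarity function (`difflib.SequenceMatcher` from the Python standard library), the accent stripping, the threshold of 0.85 and the record layout are all assumptions, not a method prescribed by the slides.

```python
import unicodedata
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lower-case, strip accents and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(no_accents.lower().split())


def name_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between the normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def same_object(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    """Assumed rule: same birth date and sufficiently similar names."""
    return (rec_a["birth"] == rec_b["birth"]
            and name_similarity(rec_a["name"], rec_b["name"]) >= threshold)


a = {"name": "Jose Maria Silva", "birth": "01/01/1950"}
b = {"name": "José Maria Sliva", "birth": "01/01/1950"}
c = {"name": "Ana Costa", "birth": "01/01/1950"}

print(same_object(a, b), same_object(a, c))
```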

Data integration

Task of presenting a unified view of data owned by heterogeneous and distributed data sources

Two sub-activities:

Quality-driven query processing : task of providing query results on the basis of a quality characterization of data at sources

Instance-level conflict resolution : task of identifying and solving conflicts of values referring to the same real-world objects.

Instance-level conflict resolution

Instance level conflicts can be of three types:

representation conflicts, e.g. dollar vs. euro

key equivalence conflicts, i.e. same real-world objects with different identifiers

attribute value conflicts, i.e. instances corresponding to the same real-world object and sharing an equivalent key differ on other attributes

Error localization/Data Auditing

Given one/two/n tables or groups of tables, and a group of integrity constraints/qualities (e.g. completeness, accuracy), find records that do not respect the constraints/qualities.

Data editing-imputation

Focus on integrity constraints

Deviation detection

data checking that marks deviations as possible data errors
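One simple way to mark deviations is to flag values that lie far from the median. The rule used here (more than k median absolute deviations away), the default k = 5 and the sample prices are assumptions chosen for illustration.

```python
from statistics import median


def flag_deviations(values: list[float], k: float = 5.0) -> list[float]:
    """Return the values farther than k median absolute deviations (MAD)
    from the median; these are candidates for review, not confirmed errors."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread estimate available, so flag nothing
    return [v for v in values if abs(v - center) > k * mad]


# Hypothetical prices of the same product; 5 was probably meant to be 50.
prices = [50, 52, 49, 51, 5, 50]
suspects = flag_deviations(prices)
print(suspects)
```

Flagged values are only possible data errors; deciding whether they are wrong still needs a rule or a human check.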

Data Profiling

Evaluating statistical properties and intensional properties of tables and records

Structure induction: induction of a structural description, i.e. “any form of regularity that can be found”

Data correction/data cleaning/data scrubbing

Given one/two/n tables or groups of tables, and a set of identified errors in records wrt given qualities, generate probable corrections and correct the records in such a way that the new records respect the qualities.

Schema cleaning

Transform the conceptual schema in order to achieve or optimize a given set of qualities (e.g. readability, normalization), while preserving other properties (e.g. equivalence of content)

References

“Data Quality: Concepts, Methodologies and Techniques”, C. Batini and M. Scannapieco, Springer-Verlag, 2006 (Chaps. 1, 2, and 4).

“A Survey of Data Quality Tools”, J. Barateiro, H. Galhardas, Datenbank-Spektrum 14: 15-21, 2005.

Next lectures

Data Cleaning and Transformation tools

The Ajax framework

Record Linkage

Data Fusion