Chaos - Computer Science


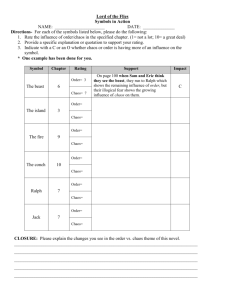

Chaos and Information
Dr. Tom Longshaw
SPSI Sector, DERA Malvern
longshaw@signal.dera.gov.uk

Some background information
- DERA is an agency of the MoD
- Employs over 8000 scientists
- Over 30 sites around the country
- Largest research organisation in Europe
- SPSI Sector: Parallel and Distributed Simulation

Introduction
- A further example of chaos: when is a system stable?
- Measuring chaos: energy, entropy and information.
- Avoiding chaos when not wanted: how to avoid chaotic programs!
- Practical applications of chaos: what use can chaos be?

Further reading
- Chaos: James Gleick, Chaos: Making a New Science, Cardinal (Penguin), London, 1987. http://www.around.com
- Chaos and entropy: Murray Gell-Mann, The Quark and the Jaguar, Little, Brown and Company, London, 1994.
- Complexity: M. Mitchell Waldrop, Complexity, Penguin, London, 1992. http://www.santafe.edu
- http://www-chaos.umd.edu/intro.html

When is a system stable?
A street has 16 houses in it, and each house paints its front door either red or green. Each year, every resident chooses another house at random and paints their door the same colour as that house's door. Initially there are 8 red doors and 8 green doors. Is this system stable…?

What controls the chaos?
- If we increase the size of the population (the number of houses), does the system become more stable?
- If we increase the sample size (e.g. look at 3 of our neighbours), does the system become more stable?

Sample results
[Figure: Varying the population size. Time to converge with 1 sample, plotted against population size (log2).]
[Figure: Varying the sample size. Convergence time plotted against sample size, population = 16.]
[Figure: Varying both together. Convergence time plotted against sample size and population (log).]

Why is the system unstable?

Potential energy
The “potential to change”.
[Figure: Energy plotted against the number of red doors, marking the initial state and a phase change.]

Landscapes of possibility
Watersheds ...
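The slides state the rules of the door-painting street but not how the sample results were produced, so the following is only a minimal sketch under stated assumptions. All residents repaint at the same time once a year, based on last year's colours. With a sample of several houses, a resident adopts the majority colour it sees and keeps its own colour on a tie. The system counts as converged once every door is the same colour. The function names `repaint_year` and `years_to_converge` are hypothetical.

```python
from __future__ import annotations

import random


def repaint_year(doors: list[bool], sample_size: int, rng: random.Random) -> list[bool]:
    """One year on the street (True = red door).

    Assumption: every resident looks at `sample_size` other houses chosen at
    random, all at once, using last year's colours, and repaints to the
    majority colour seen. A tie (only possible with an even sample) keeps
    the resident's current colour.
    """
    n = len(doors)
    new_doors = []
    for i in range(n):
        others = [j for j in range(n) if j != i]   # "another house": never itself
        picks = rng.sample(others, sample_size)
        reds = sum(doors[j] for j in picks)
        if 2 * reds == sample_size:
            new_doors.append(doors[i])
        else:
            new_doors.append(2 * reds > sample_size)
    return new_doors


def years_to_converge(population: int = 16, sample_size: int = 1,
                      seed: int | None = None, max_years: int = 100_000) -> int:
    """Years until all doors match, starting from half red and half green."""
    rng = random.Random(seed)
    half = population // 2
    doors = [True] * half + [False] * (population - half)
    # A bounded loop rather than an open-ended `while`: give up after max_years.
    for year in range(1, max_years + 1):
        doors = repaint_year(doors, sample_size, rng)
        if all(doors) or not any(doors):
            return year
    raise RuntimeError(f"no convergence within {max_years} years")


if __name__ == "__main__":
    # Average convergence time over 200 seeded runs for a few sample sizes.
    for k in (1, 3, 5):
        runs = [years_to_converge(16, k, seed=s) for s in range(200)]
        print(f"sample size {k}: mean {sum(runs) / len(runs):.1f} years")
```

Changing `population` and `sample_size` is one way to explore the two questions on the “What controls the chaos?” slide. The averages printed come from this sketch and are not the figures from the slides. The convergence loop is deliberately bounded, in line with the “Avoid ‘while’ loops” advice later in the talk.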
Chaos and entropy
Chaos and entropy are synonymous. Entropy was originally developed to describe the chaos in chemical and physical systems. In recent years, entropy has also been used to describe the ratio between the information in a piece of data and the size of that data.

Information
Measuring the ratio of information to bits:
- 00000000000000000000000000: low (0) information
- 01010101010101010101010101: little information
- 01011011101111011111011111: more information
- 01101001110110000101101110: random (0 information!)

Measuring information
- Shannon entropy (1949): the ability to predict based on an observed sample.
- Algorithmic Information Content (Kolmogorov, 1960): the size of the program required to generate the sample.
- Lempel-Ziv-Welch (1977, 1984): the “zip it and see” approach!

When is a system stable?
When there is insufficient energy in the system for it to change its current behaviour. Paradoxically, such systems are rarely interesting or useful.
[Figure: Complexity (information) plotted against entropy, running from simplicity to total randomness.]

Characteristics of a chaotic system
- Unpredictability
- Non-linear performance
- Small changes in the initial settings give large changes in outcome: the butterfly effect
- Elegant degradation
- Increased control increases the variation

What makes a chaotic system?
- Non-Markovian behaviour.
- Positive feedback: state(n) affects state(n+1).
- Any evolving solution.
- Simplicity of rules: complex systems are rarely chaotic, just unpredictable.
- Complex systems often hide simple chaotic systems inside.

Dealing with chaos
- Avoid programming with integers!
- Avoid “while” loops.
- Add damping factors.
- Observers and pre-conditions.
- Add randomness into your programs!

Practical applications
- “Modern” economic theory [Brian Arthur 1990]
- Interesting images and games: fractals, SimCity, Creatures II
- Genetic algorithms
- Advanced information systems
- “Immersive simulations”

Information systems
Conventional databases store data in an orderly fashion.
Reducing the data to its information content increases the complexity of the structure… but the data can then be accessed much faster, and some queries can be greatly optimised.

smallWorlds
- Developed to model political and economic situations
- Difficult to quantify
- Uses fuzzy logic and tight feedback loops:
- If demand is high, then price increases.
- If price is high, then retailers grow.
- If supply is high, then price decreases.
- If price is low, then retailers shrink.

Conclusions
- Computer scientists should recognise chaotic situations.
- Chaos can be avoided or forestalled.
- Chaos is not always “bad”.
- Sometimes a chaotic system is better than the alternatives.
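The “Measuring information” slide lists Shannon entropy and the “zip it and see” approach. The following is a minimal sketch of both, applied to the bit strings from the “Information” slide. The assumptions are these. The entropy is the simplest empirical estimate: single-symbol frequencies, in bits per symbol. zlib's DEFLATE stands in for Lempel-Ziv-Welch. DEFLATE is a related Lempel-Ziv (LZ77-based) coder, not LZW itself. Kolmogorov's algorithmic information content cannot be computed exactly, so a compressed length is the usual practical upper-bound proxy. The 26-bit strings are too short for a compressor's fixed overhead to be ignored, so the compression comparison uses longer strings built the same way. The function names are hypothetical.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy(bits: str) -> float:
    """Empirical Shannon entropy of single-symbol frequencies, in bits per symbol."""
    n = len(bits)
    # Sum of p * log2(1/p) over the observed symbols.
    return sum((c / n) * math.log2(n / c) for c in Counter(bits).values())


def compressed_size(bits: str) -> int:
    """'Zip it and see': length in bytes after zlib (DEFLATE) compression."""
    return len(zlib.compress(bits.encode("ascii"), 9))


# The four strings shown on the "Information" slide.
slide_samples = {
    "zeros": "00000000000000000000000000",
    "alternating": "01010101010101010101010101",
    "patterned": "01011011101111011111011111",
    "random": "01101001110110000101101110",
}

# Longer strings of the same kinds, so that compression overhead does not dominate.
rng = random.Random(0)
n = 10_000
long_samples = {
    "zeros": "0" * n,
    "alternating": "01" * (n // 2),
    "random": "".join(rng.choice("01") for _ in range(n)),
}

if __name__ == "__main__":
    for name, s in slide_samples.items():
        print(f"{name:12s} H = {shannon_entropy(s):.3f} bits/symbol")
    for name, s in long_samples.items():
        print(f"{name:12s} {compressed_size(s):5d} bytes after zlib")
```

The two measures disagree in an instructive way. The alternating string scores a full 1 bit per symbol, the same maximum as a fair coin, even though it is perfectly predictable. Single-symbol frequencies ignore order, whereas the compressor finds the repetition. Both measures rate the random string highest. The slide's “0 information!” label for randomness therefore seems to refer to useful information, as in the complexity curve on the second “When is a system stable?” slide. These raw measures do not capture that sense of information.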
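The “What makes a chaotic system?” slide points to positive feedback, where state(n) affects state(n+1), and to simple rules. “Dealing with chaos” then suggests adding damping factors. The following is a minimal sketch of both ideas. It uses the logistic map, a standard one-line feedback rule that is not mentioned in the slides and was chosen here for illustration. It assumes that a “damping factor” means applying only a fraction of each update. The names `damped_logistic` and `trajectory` are hypothetical.

```python
def damped_logistic(x: float, r: float, damping: float) -> float:
    """One step of the logistic map x -> r*x*(1 - x), blended with the old state.

    damping=1.0 is the raw map; smaller values apply only part of each update
    (an assumed interpretation of a 'damping factor').
    """
    return (1.0 - damping) * x + damping * r * x * (1.0 - x)


def trajectory(x0: float, r: float = 3.9, damping: float = 1.0,
               steps: int = 200) -> list:
    """Iterate the map `steps` times from x0 and return every state."""
    xs = [x0]
    for _ in range(steps):  # bounded loop: a fixed number of steps
        xs.append(damped_logistic(xs[-1], r, damping))
    return xs


# Butterfly effect: two undamped runs whose starts differ by one part in a billion.
a = trajectory(0.2)
b = trajectory(0.2 + 1e-9)
gap = max(abs(p - q) for p, q in zip(a, b))

# The same feedback rule with a damping factor of 0.3 settles on the fixed point 1 - 1/r.
damped = trajectory(0.2, damping=0.3)
fixed_point = 1.0 - 1.0 / 3.9

if __name__ == "__main__":
    print(f"largest gap between the undamped runs: {gap:.3f}")
    print(f"undamped, last 5 states: {[round(x, 3) for x in a[-5:]]}")
    print(f"damped final state {damped[-1]:.6f} vs fixed point {fixed_point:.6f}")
```

The choice of 0.3 is arbitrary. Linearising the blended map at its fixed point x* = 1 - 1/r gives a slope of 1 - d(r - 1) for damping d. The fixed point is therefore stable whenever 0 < d < 2/(r - 1), which is about 0.69 for r = 3.9. Heavier updates than that bring the instability back.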
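The smallWorlds slide gives four rules that form one feedback loop, but not the membership functions, rates or units behind them. The following is therefore a minimal fuzzy-rule sketch of that loop, not smallWorlds itself. Every numeric choice is hypothetical. Demand is held fixed, supply is taken to equal the number of retailers, and “high”/“low” are simple linear ramps. The names `high` and `simulate` are also hypothetical.

```python
def high(x: float, lo: float, hi: float) -> float:
    """Fuzzy membership of x in 'high': 0 below lo, 1 above hi, linear between."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def simulate(steps: int = 500, demand: float = 50.0, price: float = 10.0,
             retailers: float = 40.0, price_rate: float = 1.0,
             growth_rate: float = 2.0) -> list:
    """Run the four rules as one loop and return (price, retailers) per step.

    Hypothetical assumptions: demand is constant, supply equals the number
    of retailers, and all thresholds and rates are illustrative only.
    """
    history = []
    for _ in range(steps):
        supply = retailers
        demand_is_high = high(demand - supply, 0.0, 20.0)  # demand outstrips supply
        supply_is_high = high(supply - demand, 0.0, 20.0)  # supply outstrips demand
        price_is_high = high(price, 8.0, 12.0)
        price_is_low = 1.0 - price_is_high
        # Rules 1 and 3: high demand raises the price, high supply lowers it.
        price = max(0.0, price + price_rate * (demand_is_high - supply_is_high))
        # Rules 2 and 4, using last step's price: a high price grows the
        # number of retailers, a low price shrinks it.
        retailers = max(0.0, retailers + growth_rate * (price_is_high - price_is_low))
        history.append((price, retailers))
    return history


if __name__ == "__main__":
    for i, (p, r) in enumerate(simulate()[:60:5]):
        print(f"step {i * 5:3d}: price {p:6.2f}, retailers {r:6.1f}")
```

With these made-up settings, the loop does not settle. Price and retailer numbers keep cycling around the balance point (supply equal to demand, price 10) instead of converging on it. This is one concrete picture of what the “tight feedback loops” on the slide can do.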