Lecture25

advertisement

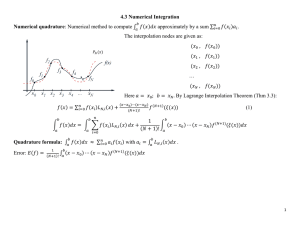

Engineering Analysis ENG 3420 Fall 2009 Dan C. Marinescu Office: HEC 439 B Office hours: Tu-Th 11:00-12:00 Lecture 25 Attention: The last homework HW5 and the last project are due on Tuesday November 24!! Last time: Today Cubic splines Searching and sorting Numerical integration (chapter 17) Next Time Numerical integration of functions (chapter 18). Lecture 25 2 Search algorithms Find an element of a set based upon some search criteria. Linear search: Compare each element of the set with the “target” Requires O(n) operations if the set of n elements is not sorted Binary search: Can be done only when the list is sorted. Requires O(log(n)) comparisons. Algorithm: Check the middle element. If the middle element is equal to the sought value, then the position has been found; Otherwise, the upper half or lower half is chosen for search based on whether the element is greater than or less than the middle element. 3 Sorting algorithms Algorithms that puts elements of a list in a certain order, e.g., numerical order and lexicographical order. Input: a list of n unsorted elements. Output: the list sorted in increasing order. Bubble sort complexity: average O(n2); )); worst case O(n2). Compare each pair of elements; swap them if they are in the wrong order. Go again through the list until no swaps are necessary. Quick sort complexity: average O(n log(n)); worst case O(n2). Pick an element, called a pivot, from the list. Reorder the list so that all elements which are less than the pivot come before the pivot and all elements greater than the pivot come after it (equal values can go either way). After this partitioning, the pivot is in its final position. Recursively sort the sub-list of lesser elements and the sub-list of greater elements. 4 Sorting algorithms (cont’d) Merge sort – invented by John von Neumann: 1. 2. 3. 4. 5. Complexity: average O(n log(n)); worst case O(n log(n)); If the list is of length 0 or 1, then it is already sorted. Otherwise: Divide the unsorted list into two sublists of about half the size. Sort each sublist recursively by re-applying merge sort. Merge the two sublists back into one sorted list. Tournament sort: Complexity: average O(n log(n)); worst case O(n log(n)); It imitates conducting a tournament in which two players play with each other. Compare numbers in pairs, then form a temporary array with the winning elements. Repeat this process until you get the greatest or smallest element based on your choice. 5 Integration I Integration: f x dx b a is the total value, or summation, of f(x) dx over the range from a to b: 6 Newton-Cotes formulas The most common numerical integration schemes. Based on replacing a complicated function or tabulated data with a polynomial that is easy to integrate: I f x dx f x dx b b a a n where fn(x) is an nth order interpolating polynomial. 7 Newton-Cotes Examples The integrating function can be polynomials for any order - for example, (a) straight lines or (b) parabolas. The integral can be approximated in one step or in a series of steps to improve accuracy. 8 The trapezoidal rule The trapezoidal rule is the first of the Newton-Cotes closed integration formulas; it uses a straight-line approximation for the function: I f x dx b a n f b f a I f (a) x adx a ba b f a f b I b a 2 9 Error of the trapezoidal rule An estimate for the local truncation error of a single application of the trapezoidal rule is: 1 3 Et f b a 12 where is somewhere between a and b. This formula indicates that the error is dependent upon the curvature of the actual function as well as the distance between the points. Error can thus be reduced by breaking the curve into parts. 10 Composite Trapezoidal Rule I Assuming n+1 data points are evenly spaced, there will be n intervals over which to integrate. The total integral can be calculated by integrating each subinterval and then adding them together: xn x0 fn x dx I x1 x0 x1 x0 fn x dx f x0 f x1 2 x2 x1 x2 x1 fn x dx f x1 f x2 2 xn x n 1 fn x dx xn xn1 f xn1 f xn 2 n1 h I f x0 2 f xi f xn 2 i 1 11 MATLAB Program 12 Simpson’s Rules One drawback of the trapezoidal rule is that the error is related to the second derivative of the function. More complicated approximation formulas can improve the accuracy for curves - these include using (a) 2nd and (b) 3rd order polynomials. The formulas that result from taking the integrals under these polynomials are called Simpson’s rules. 13 Simpson’s 1/3 Rule Simpson’s 1/3 rule corresponds to using second-order polynomials. Using the Lagrange form for a quadratic fit of three points: fn x x x1 x x2 f x x x0 x x2 f x x x0 x x1 f x x0 x1 x0 x2 0 x1 x0 x1 x2 1 x2 x0 x2 x1 2 Integration over the three points simplifies to: I I h f x0 4 f x1 f x2 3 x2 x0 fn x dx 14 Error of Simpson’s 1/3 Rule An estimate for the local truncation error of a single application of Simpson’s 1/3 rule is: 1 5 4 Et 2880 f b a where again is somewhere between a and b. This formula indicates that the error is dependent upon the fourth-derivative of the actual function as well as the distance between the points. Note that the error is dependent on the fifth power of the step size (rather than the third for the trapezoidal rule). Error can thus be reduced by breaking the curve into parts. 15 Composite Simpson’s 1/3 rule Simpson’s 1/3 rule can be used on a set of subintervals in much the same way the trapezoidal rule was, except there must be an odd number of points. Because of the heavy weighting of the internal points, the formula is a little more complicated than for the trapezoidal rule: I xn x0 fn x dx x2 x0 fn x dx x4 x2 fn x dx xn x n2 fn x dx h h f x 4 f x f x 0 1 2 f x2 4 f x3 f x4 3 3 n1 n2 h I f x0 4 f xi 2 f xi f xn 3 i1 j2 i, odd j, even I h f xn2 4 f xn1 f xn 3 16 Simpson’s 3/8 rule Simpson’s 3/8 rule corresponds to using third-order polynomials to fit four points. Integration over the four points simplifies to: x3 I I 3h f x0 3 f x1 3 f x2 f x3 8 x0 fn x dx Simpson’s 3/8 rule is generally used in concert with Simpson’s 1/3 rule when the number of segments is odd. 17 Higher-order formulas Higher-order Newton-Cotes formulas may also be used - in general, the higher the order of the polynomial used, the higher the derivative of the function in the error estimate and the higher the power of the step size. As in Simpson’s 1/3 and 3/8 rule, the even-segment-odd-point formulas have truncation errors that are the same order as formulas adding one more point. For this reason, the even-segment-oddpoint formulas are usually the methods of preference. 18 Integration with unequal segments The trapezoidal rule with data containing unequal segments: I xn x0 fn x dx x1 x0 fn x dx x2 x1 fn x dx f x0 f x1 f x1 f x2 I x1 x0 x2 x1 2 2 xn x n1 fn x dx f xn1 f xn xn xn1 2 19 Integration with unequal segments 20 Built-in functions MATLAB has built-in functions to evaluate integrals based on the trapezoidal rule z = trapz(y) z = trapz(x, y) produces the integral of y with respect to x. If x is omitted, the program assumes h=1. z = cumtrapz(y) z = cumtrapz(x, y) produces the cumulative integral of y with respect to x. If x is omitted, the program assumes h=1. 21 Multiple Integrals Multiple integrals can be determined numerically by first integrating in one dimension, then a second, and so on for all dimensions of the problem. 22 Richardson Extrapolation Richard extrapolation methods use two estimates of an integral to compute a third, more accurate approximation. If two O(h2) estimates I(h1) and I(h2) are calculated for an integral using step sizes of h1 and h2, respectively, an improved O(h4) estimate may be formed using: For the special case where the 1interval is halved (h2=h1/2), this I I(h2 ) I(h2 ) I(h1 ) 2 becomes: (h / h ) 1 1 2 4 1 I I(h2 ) I(h1 ) 3 3 23 Richardson Extrapolation (cont) For the cases where there are two O(h4) estimates and the interval is halved (hm=hl/2), an improved O(h6) estimate may be formed using: 16 1 I I m Il 15 15 For the cases where there are two O(h6) estimates and the interval is halved (hm=hl/2), an improved O(h8) estimate may be formed using: 64 1 I I m Il 63 63 24 The Romberg Integration Algorithm Note that the weighting factors for the Richardson extrapolation add up to 1 and that as accuracy increases, the approximation using the smaller step size is given greater weight. In general, I j,k 4 k1 I j1,k1 I j,k1 where ij+1,k-1 and ij,k-1 are the more 4 k1and 1less accurate integrals, respectively, and ij,k is the new approximation. k is the level of integration and j is used to determine which approximation is more accurate. 25 Romberg Algorithm Iterations The chart below shows the process by which lower level integrations are combined to produce more accurate estimates: 26 MATLAB Code for Romberg 27 Gauss Quadrature Gauss quadrature describes a class of techniques for evaluating the area under a straight line by joining any two points on a curve rather than simply choosing the endpoints. The key is to choose the line that balances the positive and negative errors. 28 Gauss-Legendre Formulas The Gauss-Legendre formulas seem to optimize estimates to integrals for functions over intervals from -1 to 1. Integrals over other intervals require a change in variables to set the limits from -1 to 1. The integral estimates are of the form: where the ci and calculated ensure thatf the exactly I xci are cn1 xn1method 0 f x 0 c1 f xto 1 integrates up to (2n-1)th order polynomials over the interval from -1 to 1. 29 Adaptive Quadrature Methods such as Simpson’s 1/3 rule has a disadvantage in that it uses equally spaced points - if a function has regions of abrupt changes, small steps must be used over the entire domain to achieve a certain accuracy. Adaptive quadrature methods for integrating functions automatically adjust the step size so that small steps are taken in regions of sharp variations and larger steps are taken where the function changes gradually. 30 Adaptive Quadrature in MATLAB MATLAB has two built-in functions for implementing adaptive quadrature: quad: uses adaptive Simpson quadrature; possibly more efficient for low accuracies or nonsmooth functions quadl: uses Lobatto quadrature; possibly more efficient for high accuracies and smooth functions q = quad(fun, a, b, tol, trace, p1, p2, …) fun : function to be integrates a, b: integration bounds tol: desired absolute tolerance (default: 10-6) trace: flag to display details or not p1, p2, …: extra parameters for fun quadl has the same arguments 31