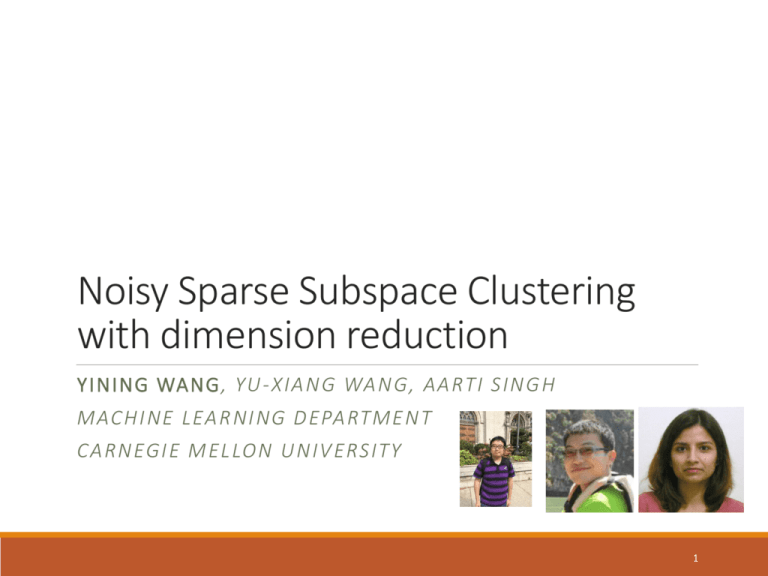

Noisy Sparse Subspace Clustering with Dimension Reduction
Yining Wang, Yu-Xiang Wang, Aarti Singh
Machine Learning Department, Carnegie Mellon University

Subspace Clustering

Subspace Clustering Applications
◦ Motion trajectory tracking (Elhamifar and Vidal, 2013), (Tomasi and Kanade, 1992)

Subspace Clustering Applications
◦ Face clustering (Elhamifar and Vidal, 2013), (Basri and Jacobs, 2003)
◦ Network hop counts, movie ratings, social graphs, …

Sparse Subspace Clustering
◦ (Elhamifar and Vidal, 2013), (Wang and Xu, 2013)
◦ Data: $X = \{x_1, x_2, \dots, x_N\} \subseteq \mathbb{R}^d$
◦ Key idea: a similarity graph built from ℓ1 self-regression, i.e., each point $x_i$ is regressed on all other points $x_j$, $j \neq i$
◦ Noiseless data: $c_i = \arg\min_{c_i} \|c_i\|_1 \ \text{s.t.}\ x_i = \sum_{j \neq i} c_{ij} x_j$
◦ Noisy data (Lasso SSC): $c_i = \arg\min_{c_i} \frac{1}{2}\big\|x_i - \sum_{j \neq i} c_{ij} x_j\big\|_2^2 + \lambda \|c_i\|_1$

SSC with Dimension Reduction
◦ Real-world data are usually high-dimensional:
  ◦ Hopkins-155: $d = 112 \sim 240$
  ◦ Extended Yale Face-B: $d \geq 1000$
◦ Computational concerns
◦ Data availability: values of some features might be missing
◦ Privacy concerns: releasing the raw data might cause privacy breaches

SSC with Dimension Reduction
◦ Dimensionality reduction: $\tilde{X} = \Psi X$, $\Psi \in \mathbb{R}^{p \times d}$, $p \ll d$
◦ How small can $p$ be?
◦ A trivial result: $p = \Omega(Lr)$ suffices, where
  ◦ $L$: the number of subspaces (clusters)
  ◦ $r$: the intrinsic dimension of each subspace
◦ Can we do better?

Main Result
1. $p = \Omega(Lr) \Longrightarrow p = \Omega(r \log N)$, if $\Psi$ is a subspace embedding, i.e., for a subspace $S$:
   $\Pr\big[\forall x \in S:\ \|\Psi x\|_2^2 \in (1 \pm \epsilon)\|x\|_2^2\big] \geq 1 - \delta$
   Examples of subspace embeddings:
   ◦ Random Gaussian projection
   ◦ Fast Johnson-Lindenstrauss Transform (FJLT)
   ◦ Uniform row subsampling under incoherence conditions
   ◦ Sketching
   ◦ ……
2. Lasso SSC should be used even if the data are noiseless.
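A minimal numerical sketch of the pipeline above, under illustrative assumptions that are not from the slides: toy data drawn from two random 3-dimensional subspaces of $\mathbb{R}^{200}$, a Gaussian projection (one of the listed subspace embeddings) down to $p = 40$, and a plain ISTA (proximal gradient) solver for the Lasso SSC program with a hypothetical $\lambda = 0.05$. The slides specify no solver, dimensions, or $\lambda$. The helper names `gaussian_sketch`, `embedding_distortion` and `lasso_ssc` are hypothetical; `embedding_distortion` reads the $\epsilon$ of the subspace-embedding definition off the extreme singular values of $\Psi U$, where $U$ is an orthonormal basis of $S$.

```python
import numpy as np

# Hypothetical helpers for illustration only; not code from the paper.


def gaussian_sketch(p, d, rng):
    """Gaussian projection Psi in R^{p x d}, scaled so that E||Psi x||^2 = ||x||^2."""
    return rng.standard_normal((p, d)) / np.sqrt(p)


def embedding_distortion(psi, basis):
    """Smallest eps with ||Psi x||^2 in [(1 - eps)||x||^2, (1 + eps)||x||^2]
    for every x in span(basis); `basis` must have orthonormal columns."""
    s = np.linalg.svd(psi @ basis, compute_uv=False)
    return float(max(s.max() ** 2 - 1.0, 1.0 - s.min() ** 2))


def lasso_ssc(X, lam, iters=500):
    """Column i of C approximately minimizes
    0.5 * ||x_i - sum_{j != i} c_ij x_j||_2^2 + lam * ||c_i||_1 via ISTA."""
    n = X.shape[1]
    C = np.zeros((n, n))
    for i in range(n):
        A = np.delete(X, i, axis=1)               # regress x_i on all other points
        x = X[:, i]
        step = 1.0 / np.linalg.norm(A, 2) ** 2    # 1/L with L = ||A||_2^2 (gradient Lipschitz constant)
        c = np.zeros(n - 1)
        for _ in range(iters):
            z = c - step * (A.T @ (A @ c - x))    # gradient step on the squared loss
            c = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft-thresholding
        C[np.arange(n) != i, i] = c               # c_ii stays 0
    return C


# Toy data (assumed): two random 3-dim subspaces of R^200, 20 unit-norm points each.
rng = np.random.default_rng(0)
d, r, n_per, p, lam = 200, 3, 20, 40, 0.05
bases = [np.linalg.qr(rng.standard_normal((d, r)))[0] for _ in range(2)]
X = np.hstack([U @ rng.standard_normal((r, n_per)) for U in bases])
X /= np.linalg.norm(X, axis=0)
labels = np.repeat([0, 1], n_per)

psi = gaussian_sketch(p, d, rng)
eps = [embedding_distortion(psi, U) for U in bases]
X_red = psi @ X                                   # reduced data, p x N
C = lasso_ssc(X_red, lam)
W = np.abs(C) + np.abs(C).T                       # symmetric similarity graph
cross = W[labels[:, None] != labels[None, :]]     # weights between different subspaces

print("measured eps per subspace:", np.round(eps, 3))
print("largest cross-subspace weight:", cross.max())
```

Raising `p` should shrink the measured $\epsilon$; whether the cross-subspace weights (false connections) vanish in a given run depends on the geometric gap and on $\lambda$, which is the trade-off the main result describes.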
Proof Sketch
◦ Review of deterministic success conditions for SSC (Soltanolkotabi and Candes, 2012), (Elhamifar and Vidal, 2013):
  ◦ Subspace incoherence
  ◦ Inradius
◦ Analyze the perturbation of both quantities under dimension reduction
◦ Main results for the noiseless and noisy cases

Review of SSC Success Condition
◦ Subspace incoherence: characterizes inter-subspace separation
  $\mu_\ell := \max_{x \in X \setminus X^{(\ell)}} \max_i \big|\langle \mathrm{normalize}(v(x_i^{(\ell)})),\, x \rangle\big|$
  where $v(x)$ solves the dual of the Lasso SSC formulation:
  $\max_v\ \langle v, x \rangle - \frac{\lambda}{2}\|v\|_2^2 \quad \text{s.t.}\ \|X^T v\|_\infty \leq 1$

Review of SSC Success Condition
◦ Inradius: characterizes how the data points are distributed within each subspace
  [Figure: large inradius $\rho$ vs. small inradius $\rho$]

Review of SSC Success Condition (Soltanolkotabi and Candes, 2012)
◦ Noiseless SSC succeeds (the similarity graph has no false connections) if $\mu < \rho$.
◦ With dimensionality reduction: $\mu \to \tilde{\mu}$, $\rho \to \tilde{\rho}$
◦ Bound $|\tilde{\mu} - \mu|$ and $|\tilde{\rho} - \rho|$

Perturbation of Subspace Incoherence $\mu$
$\nu = \arg\max_{\nu:\ \|X^T \nu\|_\infty \leq 1} \langle \nu, x \rangle - \frac{\lambda}{2}\|\nu\|_2^2$
$\tilde{\nu} = \arg\max_{\nu:\ \|\tilde{X}^T \nu\|_\infty \leq 1} \langle \nu, \tilde{x} \rangle - \frac{\lambda}{2}\|\nu\|_2^2$
We know that the projection approximately preserves the inner products in the objective …, so $\nu \approx \tilde{\nu}$ because of strong convexity.

Perturbation of Inradius $\rho$
◦ Main idea: a linear operator transforms a ball into an ellipsoid

Main Result
SSC with dimensionality reduction succeeds (the similarity graph has no false connections) if
$\mu + 32\,\epsilon/\lambda + 3\epsilon < \rho$
◦ $\lambda$: regularization parameter of Lasso SSC
◦ $\epsilon$: error of the approximate isometry; $\epsilon = O(1)$ if $p = \Omega(r \log N)$
◦ Noisy case ($\eta$ is the adversarial noise level): a condition of the same form, $\mu + \cdots + 3\epsilon < \rho$, whose additional terms depend on $\eta$, $\epsilon$, $\lambda$ and $\rho_\ell$
Takeaways:
◦ The geometric gap $\Delta = \rho - \mu$ is a resource that can be traded off for data dimension reduction
◦ Lasso SSC is required even for the noiseless problem

Simulation Results (Hopkins 155)
[Figure: simulation results on the Hopkins 155 dataset]

Conclusion
◦ SSC provably succeeds with dimensionality reduction
◦ The dimension after reduction, $p$, can be as small as $\Omega(r \log N)$
◦ Lasso SSC is required for provable results
Questions?

References
◦ M. Soltanolkotabi and E. Candes. A Geometric Analysis of Subspace Clustering with Outliers. Annals of Statistics, 2012.
◦ E. Elhamifar and R. Vidal. Sparse Subspace Clustering: Algorithm, Theory, and Applications. IEEE TPAMI, 2013.
◦ C. Tomasi and T. Kanade. Shape and Motion from Image Streams under Orthography. IJCV, 1992.
◦ R. Basri and D. Jacobs. Lambertian Reflectance and Linear Subspaces. IEEE TPAMI, 2003.
◦ Y.-X. Wang and H. Xu. Noisy Sparse Subspace Clustering. ICML, 2013.