Duke

advertisement

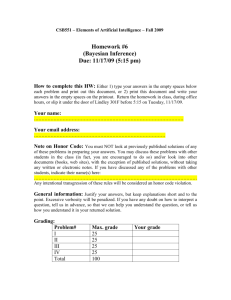

CS B553: ALGORITHMS FOR OPTIMIZATION AND LEARNING

Bayesian Networks

AGENDA

Bayesian networks

Chain rule for Bayes nets

Naïve Bayes models

Independence declarations

D-separation

Probabilistic inference queries

PURPOSES OF BAYESIAN NETWORKS

Efficient and intuitive modeling of complex causal interactions

Compact representation of joint distributions

$O(n)$ parameters (for a bounded number of parents per node) rather than $O(2^n)$

Algorithms for efficient inference with given evidence (more on this next time)

INDEPENDENCE OF RANDOM VARIABLES

Two random variables A and B are independent if

P(A,B) = P(A) P(B)

hence P(A|B) = P(A)

Knowing B doesn’t give you any information about A

[This equality has to hold for all combinations of values that A and B can take on, i.e., all events A=a and B=b are independent]

SIGNIFICANCE OF INDEPENDENCE

If A and B are independent, then

P(A,B) = P(A) P(B)

=> The joint distribution over A and B can be defined as a product over the distribution of A and the distribution of B

=> Store two much smaller probability tables rather than a large probability table over all combinations of A and B
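The storage argument above can be sketched in a few lines of Python. The marginal values below are made up purely for illustration; the point is only that the joint table is recovered from the two small tables.

```python
from itertools import product

# Hypothetical marginals for two independent variables (illustrative values).
p_a = {"a0": 0.2, "a1": 0.5, "a2": 0.3}
p_b = {"b0": 0.6, "b1": 0.4}

# Under independence, every joint entry is the product of the two marginals.
joint = {(a, b): p_a[a] * p_b[b] for a, b in product(p_a, p_b)}

stored_factored = len(p_a) + len(p_b)  # 3 + 2 numbers
stored_joint = len(joint)              # 3 * 2 numbers

print(stored_factored, stored_joint)
```

For two small variables the saving is minor, but for n mutually independent binary variables the factored form stores n numbers while the joint table has 2^n entries.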

CONDITIONAL INDEPENDENCE

Two random variables A and B are conditionally independent given C if

P(A, B|C) = P(A|C) P(B|C)

hence P(A|B,C) = P(A|C)

Once you know C, learning B doesn’t give you any information about A

[again, this has to hold for all combinations of values that A,B,C can take on]
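The step from the product form to P(A|B,C) = P(A|C) is short; a sketch of it, assuming P(B|C) > 0 so that the conditional is defined:

```latex
\begin{aligned}
P(A \mid B, C) &= \frac{P(A, B \mid C)}{P(B \mid C)} \\
               &= \frac{P(A \mid C)\, P(B \mid C)}{P(B \mid C)} \\
               &= P(A \mid C)
\end{aligned}
```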

SIGNIFICANCE OF CONDITIONAL INDEPENDENCE

Consider Grade(CS101), Intelligence, and SAT

Ostensibly, the grade in a course doesn’t have a direct relationship with SAT scores, but good students are more likely to get good SAT scores, so they are not independent…

It is reasonable to believe that Grade(CS101) and SAT are conditionally independent given Intelligence

BAYESIAN NETWORK

Explicitly represent independence among propositions

Notice that Intelligence is the “cause” of both Grade and SAT, and the causality is represented explicitly

P(I,G,S) = P(G,S|I) P(I)

= P(G|I) P(S|I) P(I)

[Figure: Intelligence is the parent of both Grade and SAT]

| $P(I=x)$ | |
|---|---|
| x=high | 0.3 |
| x=low | 0.7 |

| $P(G=x \mid I)$ | I=low | I=high |
|---|---|---|
| x=‘a’ | 0.2 | 0.74 |
| x=‘b’ | 0.34 | 0.17 |
| x=‘c’ | 0.46 | 0.09 |

| $P(S=x \mid I)$ | I=low | I=high |
|---|---|---|
| x=low | 0.95 | 0.2 |
| x=high | 0.05 | 0.8 |

7 independent probabilities (1 for I, 4 for G|I, 2 for S|I), instead of 11 for the full joint
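As a check on the factorization, the sketch below builds the joint P(I,G,S) from the three tables and compares Grade and SAT with and without conditioning on Intelligence. It assumes the flattened tables are read so that each column (one value of I) sums to 1, that is, P(S=low|I=low)=0.95 and P(S=low|I=high)=0.2; the variable and function names are illustrative.

```python
# CPTs as read from the slide (column-per-value-of-I reading is an assumption).
p_i = {"low": 0.7, "high": 0.3}
p_g_given_i = {
    "low": {"a": 0.2, "b": 0.34, "c": 0.46},
    "high": {"a": 0.74, "b": 0.17, "c": 0.09},
}
p_s_given_i = {
    "low": {"low": 0.95, "high": 0.05},
    "high": {"low": 0.2, "high": 0.8},
}

# P(I,G,S) = P(G|I) P(S|I) P(I)
joint = {
    (i, g, s): p_i[i] * p_g_given_i[i][g] * p_s_given_i[i][s]
    for i in p_i
    for g in ("a", "b", "c")
    for s in ("low", "high")
}

def prob(pred):
    """Sum the joint over all (i, g, s) triples satisfying pred."""
    return sum(p for (i, g, s), p in joint.items() if pred(i, g, s))

p_ga = prob(lambda i, g, s: g == "a")
p_sh = prob(lambda i, g, s: s == "high")
p_ga_sh = prob(lambda i, g, s: g == "a" and s == "high")

# Marginally dependent: P(G=a, S=high) differs from P(G=a) P(S=high).
print(round(p_ga_sh, 4), round(p_ga * p_sh, 4))

# Conditionally independent given I=high: the two numbers agree.
p_high = p_i["high"]
lhs = prob(lambda i, g, s: i == "high" and g == "a" and s == "high") / p_high
rhs = p_g_given_i["high"]["a"] * p_s_given_i["high"]["high"]
print(round(lhs, 4), round(rhs, 4))
```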

DEFINITION: BAYESIAN NETWORK

Set of random variables $X = \{X_1, \ldots, X_n\}$ with domains $Val(X_1), \ldots, Val(X_n)$

Each node has a set of parents $Pa_X$

Graph must be a DAG

Each node also maintains a conditional probability distribution (often, a table) $P(X \mid Pa_X)$

$2^k$ independent entries for a binary variable with $k$ binary parents

Overall: $O(n 2^k)$ storage for binary variables, where $k$ is the maximum number of parents

Encodes the joint probability over $X_1, \ldots, X_n$
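A rough way to see the storage claim is to count table entries directly. The sketch below assumes all variables are binary and that k denotes a node's number of parents, so each CPT needs one free probability per parent configuration; the helper names are hypothetical, and the example graph is the burglary network used later in these slides.

```python
def cpt_entries(num_parents: int) -> int:
    """Free probabilities in the CPT of a binary node with binary parents."""
    return 2 ** num_parents

def network_entries(parents: dict) -> int:
    """Total free probabilities over all CPTs of the network."""
    return sum(cpt_entries(len(pa)) for pa in parents.values())

# Each node maps to its list of parents.
alarm_network = {
    "Burglary": [],
    "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"],
    "MaryCalls": ["Alarm"],
}

bn_size = network_entries(alarm_network)      # 1 + 1 + 4 + 2 + 2
joint_size = 2 ** len(alarm_network) - 1      # free entries of the full joint

print(bn_size, joint_size)
```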

CALCULATION OF JOINT PROBABILITY

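[Figure: Burglary and Earthquake are the parents of Alarm; Alarm is the parent of JohnCalls and MaryCalls]

$P(b) = 0.001$

$P(e) = 0.002$

| B | E | $P(a \mid B, E)$ |
|---|---|---|
| T | T | 0.95 |
| T | F | 0.94 |
| F | T | 0.29 |
| F | F | 0.001 |

| A | $P(j \mid A)$ |
|---|---|
| T | 0.90 |
| F | 0.05 |

| A | $P(m \mid A)$ |
|---|---|
| T | 0.70 |
| F | 0.01 |

$P(j, m, a, \neg b, \neg e) = ??$

$P(j, m, a, \neg b, \neg e)$

$= P(j, m \mid a, \neg b, \neg e)\, P(a, \neg b, \neg e)$

$= P(j \mid a, \neg b, \neg e)\, P(m \mid a, \neg b, \neg e)\, P(a, \neg b, \neg e)$

(J and M are independent given A)

$P(j \mid a, \neg b, \neg e) = P(j \mid a)$

(J is independent of B and E given A)

$P(m \mid a, \neg b, \neg e) = P(m \mid a)$

$P(a, \neg b, \neg e) = P(a \mid \neg b, \neg e)\, P(\neg b \mid \neg e)\, P(\neg e)$

$= P(a \mid \neg b, \neg e)\, P(\neg b)\, P(\neg e)$

(B and E are independent)

$P(j, m, a, \neg b, \neg e) = P(j \mid a)\, P(m \mid a)\, P(a \mid \neg b, \neg e)\, P(\neg b)\, P(\neg e)$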

Plugging in the CPT entries:

$P(j, m, a, \neg b, \neg e)$

$= P(j \mid a)\, P(m \mid a)\, P(a \mid \neg b, \neg e)\, P(\neg b)\, P(\neg e)$

$= 0.9 \times 0.7 \times 0.001 \times 0.999 \times 0.998$

$\approx 0.000628$

In general, the full joint distribution is

$P(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid pa_{X_i})$

CHAIN RULE FOR BAYES NETS

Joint distribution is a product of all CPTs

$P(X_1, X_2, \ldots, X_n) = \prod_{i=1}^{n} P(X_i \mid Pa_{X_i})$
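The chain rule can be sketched directly for the burglary network, using the CPT values from the slides. The function names are illustrative. The query assumes Burglary and Earthquake are false, which is the reading that matches the factors 0.999 and 0.998 in the worked calculation.

```python
from itertools import product

# CPTs of the burglary network (probability that each variable is True).
P_B = 0.001
P_E = 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}   # keyed by (B, E)
P_J = {True: 0.90, False: 0.05}                       # keyed by A
P_M = {True: 0.70, False: 0.01}                       # keyed by A

def bern(p_true, value):
    """Probability of a binary value given P(value is True)."""
    return p_true if value else 1.0 - p_true

def joint(b, e, a, j, m):
    """Chain rule: multiply one CPT entry per node."""
    return (bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
            * bern(P_J[a], j) * bern(P_M[a], m))

p = joint(b=False, e=False, a=True, j=True, m=True)
print(f"{p:.6f}")  # 0.000628

# The 32 joint entries sum to 1, as any distribution must.
total = sum(joint(*values) for values in product((True, False), repeat=5))
```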

EXAMPLE: NAÏVE BAYES MODELS

$P(\text{Cause}, \text{Effect}_1, \ldots, \text{Effect}_n) = P(\text{Cause}) \prod_i P(\text{Effect}_i \mid \text{Cause})$

[Figure: Cause is the parent of Effect1, Effect2, …, Effectn]

ADVANTAGES OF BAYES NETS (AND OTHER GRAPHICAL MODELS)

More manageable # of parameters to set and store

Incremental modeling

Explicit encoding of independence assumptions

Efficient inference techniques

ARCS DO NOT NECESSARILY ENCODE CAUSALITY

[Figure: three Bayesian networks over the variables A, B, and C]

Two BNs with the same expressive power, and a third with greater power (exercise)

READING OFF INDEPENDENCE RELATIONSHIPS

[Figure: network over A, B, and C in which B is the parent of C]

Given B, does the value of A affect the probability of C?

No! P(C|B,A) = P(C|B)

C’s parent (B) is given, and so C is independent of its non-descendants (A)

Independence is symmetric:

C ⊥ A | B => A ⊥ C | B

BASIC RULE

A node is independent of its non-descendants given its parents (and given nothing else)

WHAT DOES THE BN ENCODE?

[Figure: the burglary network (Burglary, Earthquake → Alarm → JohnCalls, MaryCalls)]

Burglary ⊥ Earthquake

JohnCalls ⊥ MaryCalls | Alarm

JohnCalls ⊥ Burglary | Alarm

JohnCalls ⊥ Earthquake | Alarm

MaryCalls ⊥ Burglary | Alarm

MaryCalls ⊥ Earthquake | Alarm

A node is independent of its non-descendants, given its parents


READING OFF INDEPENDENCE RELATIONSHIPS

How about Burglary ⊥ Earthquake | Alarm?

No! Why?

$P(b, e \mid a) = P(a \mid b, e)\, P(b, e) / P(a) \approx 0.000755$

$P(b \mid a)\, P(e \mid a) \approx 0.086$
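The two numbers above can be reproduced from the CPTs of the burglary network. This is a minimal sketch with illustrative names; only Burglary, Earthquake, and Alarm are needed because JohnCalls and MaryCalls sum out to 1.

```python
P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}   # P(a | B, E)

def prior(b, e):
    """P(B=b, E=e), using the marginal independence of B and E."""
    return (P_B if b else 1 - P_B) * (P_E if e else 1 - P_E)

# P(B=b, E=e, Alarm=True) for each of the four (b, e) combinations.
p_bea = {(b, e): P_A[(b, e)] * prior(b, e)
         for b in (True, False) for e in (True, False)}

p_a = sum(p_bea.values())                                   # P(a)
p_be_given_a = p_bea[(True, True)] / p_a                    # P(b, e | a)
p_b_given_a = (p_bea[(True, True)] + p_bea[(True, False)]) / p_a
p_e_given_a = (p_bea[(True, True)] + p_bea[(False, True)]) / p_a

print(p_be_given_a, p_b_given_a * p_e_given_a)
```

The two printed values differ by about two orders of magnitude, so B and E are clearly not independent once Alarm is observed, even though they are independent a priori.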

READING OFF INDEPENDENCE RELATIONSHIPS

How about Burglary ⊥ Earthquake | JohnCalls?

No! Why?

Knowing JohnCalls affects the probability of Alarm, which makes Burglary and Earthquake dependent

INDEPENDENCE RELATIONSHIPS

For polytrees, there exists a unique undirected path between A and B. For each node on the path:

Evidence on the directed road X → E → Y or X ← E ← Y makes X and Y independent

Evidence on an X ← E → Y makes descendants independent

Evidence on a “V” node, or below the V: X → E ← Y, or X → W ← Y with W → … → E, makes the X and Y dependent (otherwise they are independent)

GENERAL CASE

Formal property in general case:

D-separation: the above properties hold for all (acyclic) paths between A and B

D-separation ⇒ independence

That is, we can’t read off any more independence relationships from the graph than those that are encoded in D-separation

The CPTs may indeed encode additional independences
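A d-separation test can be sketched compactly. Rather than checking the path rules one path at a time, the sketch below uses an equivalent criterion that is not from these slides: restrict the graph to the ancestors of the query and evidence nodes, "moralize" it (connect co-parents, drop directions), delete the evidence nodes, and test reachability. The function name and graph encoding are illustrative.

```python
from collections import deque

def d_separated(parents, x, y, given):
    """True if x and y are d-separated by the set `given`.

    parents maps every node to the list of its parents; x and y must not
    be in `given`.
    """
    given = set(given)

    # 1. Keep only x, y, the evidence, and all of their ancestors.
    relevant, stack = set(), [x, y, *given]
    while stack:
        node = stack.pop()
        if node not in relevant:
            relevant.add(node)
            stack.extend(parents[node])

    # 2. Moralize: undirected parent-child edges plus edges between co-parents.
    neighbors = {node: set() for node in relevant}
    for child in relevant:
        pa = parents[child]
        for p in pa:
            neighbors[child].add(p)
            neighbors[p].add(child)
        for i, p in enumerate(pa):
            for q in pa[i + 1:]:
                neighbors[p].add(q)
                neighbors[q].add(p)

    # 3. Search from x, never stepping onto an evidence node.
    seen, queue = {x}, deque([x])
    while queue:
        node = queue.popleft()
        if node == y:
            return False
        for nxt in neighbors[node]:
            if nxt not in seen and nxt not in given:
                seen.add(nxt)
                queue.append(nxt)
    return True

ALARM = {
    "Burglary": [], "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "JohnCalls": ["Alarm"], "MaryCalls": ["Alarm"],
}

print(d_separated(ALARM, "Burglary", "Earthquake", []))             # True
print(d_separated(ALARM, "Burglary", "Earthquake", ["Alarm"]))      # False
print(d_separated(ALARM, "Burglary", "Earthquake", ["JohnCalls"]))  # False
print(d_separated(ALARM, "JohnCalls", "MaryCalls", ["Alarm"]))      # True
```

The four queries match the burglary-network examples: observing Alarm or its descendant JohnCalls couples Burglary and Earthquake, while observing Alarm separates the two callers.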

PROBABILITY QUERIES

Given: some probabilistic model over variables X

Find: distribution over $Y \subseteq X$ given evidence $E=e$ for some subset $E \subseteq X \setminus Y$

P(Y|E=e)

Inference problem

ANSWERING INFERENCE PROBLEMS WITH THE JOINT DISTRIBUTION

Easiest case: $Y = X \setminus E$

$P(Y \mid E=e) = P(Y, e) / P(e)$

Denominator makes the probabilities sum to 1

Determine $P(e)$ by marginalizing: $P(e) = \sum_y P(Y=y, e)$

Otherwise, let $W = X \setminus (E \cup Y)$

$P(Y \mid E=e) = \sum_w P(Y, W=w, e) / P(e)$

$P(e) = \sum_y \sum_w P(Y=y, W=w, e)$

Inference with joint distribution: $O(2^{|X \setminus E|})$ for binary variables
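The recipe above can be sketched as brute-force enumeration over the burglary network. The joint is evaluated through the chain rule instead of being stored as a table, and the query handles a single target variable; both are simplifications chosen for this illustration, and the function names are hypothetical.

```python
from itertools import product

P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.90, False: 0.05}
P_M = {True: 0.70, False: 0.01}
VARIABLES = ("B", "E", "A", "J", "M")

def bern(p_true, value):
    return p_true if value else 1.0 - p_true

def joint(w):
    """Joint probability of a complete assignment w (dict: name -> bool)."""
    return (bern(P_B, w["B"]) * bern(P_E, w["E"])
            * bern(P_A[(w["B"], w["E"])], w["A"])
            * bern(P_J[w["A"]], w["J"]) * bern(P_M[w["A"]], w["M"]))

def query(target, evidence):
    """P(target | evidence): sum out W, then normalize by P(e)."""
    hidden = [v for v in VARIABLES if v != target and v not in evidence]
    unnormalized = {}
    for y in (True, False):
        total = 0.0
        for values in product((True, False), repeat=len(hidden)):
            world = {**evidence, target: y, **dict(zip(hidden, values))}
            total += joint(world)          # one term of sum_w P(Y=y, W=w, e)
        unnormalized[y] = total
    p_e = sum(unnormalized.values())       # P(e) = sum_y sum_w P(y, w, e)
    return {y: p / p_e for y, p in unnormalized.items()}

posterior = query("B", {"J": True, "M": True})
print(round(posterior[True], 3))  # 0.284
```

The loops visit every assignment of the non-evidence variables exactly once, which is the $2^{|X \setminus E|}$ cost quoted above.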

NAÏVE BAYES CLASSIFIER

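$P(\text{Class}, \text{Feature}_1, \ldots, \text{Feature}_n) = P(\text{Class}) \prod_i P(\text{Feature}_i \mid \text{Class})$

[Figure: Class is the parent of Feature1, Feature2, …, Featuren]

Class: Spam / Not Spam, English / French / Latin, …

Features: word occurrences

Given features, what class?

$P(C \mid F_1, \ldots, F_n) = P(C, F_1, \ldots, F_n) / P(F_1, \ldots, F_n)$

$= \frac{1}{Z} P(C) \prod_i P(F_i \mid C)$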
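A minimal sketch of this classification rule for a spam filter with binary word-occurrence features follows. All probabilities are invented for illustration (they are not from the slides), as are the class names and the three-word vocabulary.

```python
# Hypothetical parameters: P(Class) and P(word occurs | Class).
PRIOR = {"spam": 0.4, "ham": 0.6}
P_WORD = {
    "spam": {"free": 0.6, "meeting": 0.05, "offer": 0.5},
    "ham": {"free": 0.1, "meeting": 0.4, "offer": 0.1},
}

def posterior(features):
    """P(C | F_1..F_n) for features given as {word: occurs?}."""
    scores = {}
    for c, prior in PRIOR.items():
        score = prior                       # P(C)
        for word, present in features.items():
            p = P_WORD[c][word]
            score *= p if present else 1.0 - p   # times P(F_i | C)
        scores[c] = score
    z = sum(scores.values())                # Z = P(F_1, ..., F_n)
    return {c: s / z for c, s in scores.items()}

result = posterior({"free": True, "meeting": False, "offer": True})
print(result)
```

With these made-up numbers the message is classified as spam with probability of roughly 0.97. In practice the products are usually computed as sums of logarithms to avoid underflow when there are many features.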

NAÏVE BAYES CLASSIFIER

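$P(\text{Class}, \text{Feature}_1, \ldots, \text{Feature}_n) = P(\text{Class}) \prod_i P(\text{Feature}_i \mid \text{Class})$

Given some features, what is the distribution over class?

$P(C \mid F_1, \ldots, F_k) = \frac{1}{Z} P(C, F_1, \ldots, F_k)$

$= \frac{1}{Z} \sum_{f_{k+1}, \ldots, f_n} P(C, F_1, \ldots, F_k, f_{k+1}, \ldots, f_n)$

$= \frac{1}{Z} P(C) \sum_{f_{k+1}, \ldots, f_n} \prod_{i=1}^{k} P(F_i \mid C) \prod_{j=k+1}^{n} P(f_j \mid C)$

$= \frac{1}{Z} P(C) \prod_{i=1}^{k} P(F_i \mid C) \prod_{j=k+1}^{n} \sum_{f_j} P(f_j \mid C)$

$= \frac{1}{Z} P(C) \prod_{i=1}^{k} P(F_i \mid C)$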
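The conclusion of this derivation, that unobserved features can simply be dropped, can be checked numerically. The sketch below computes the posterior both ways on a toy model; the probabilities, class names, and vocabulary are hypothetical and chosen only for illustration.

```python
from itertools import product

PRIOR = {"spam": 0.4, "ham": 0.6}
P_WORD = {
    "spam": {"free": 0.6, "meeting": 0.05, "offer": 0.5},
    "ham": {"free": 0.1, "meeting": 0.4, "offer": 0.1},
}
WORDS = ("free", "meeting", "offer")

def likelihood(c, word, present):
    p = P_WORD[c][word]
    return p if present else 1.0 - p

def normalize(scores):
    z = sum(scores.values())
    return {c: s / z for c, s in scores.items()}

def posterior_dropping(observed):
    """Last line of the derivation: use only the observed features."""
    scores = {}
    for c in PRIOR:
        score = PRIOR[c]
        for word, present in observed.items():
            score *= likelihood(c, word, present)
        scores[c] = score
    return normalize(scores)

def posterior_summing_out(observed):
    """Second line of the derivation: sum the joint over hidden features."""
    hidden = [w for w in WORDS if w not in observed]
    scores = {}
    for c in PRIOR:
        total = 0.0
        for values in product((True, False), repeat=len(hidden)):
            full = {**observed, **dict(zip(hidden, values))}
            term = PRIOR[c]
            for word in WORDS:
                term *= likelihood(c, word, full[word])
            total += term
        scores[c] = total
    return normalize(scores)

dropped = posterior_dropping({"free": True})
summed = posterior_summing_out({"free": True})
print(dropped, summed)
```

Both calls give the same distribution because each hidden feature's factor sums to 1 within a class, which is exactly the step from the fourth line of the derivation to the fifth.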

FOR GENERAL QUERIES

For BNs and queries in general, it’s not that simple… more in later lectures.

Next class: skim 5.1-3, begin reading 9.1-4