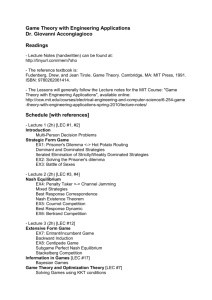

Lecture 1: Course Introduction and Review

EENG 449bG/CPSC 439bG Computer Systems
Lecture 19: Memory Hierarchy Design Part III, Memory Technologies
April 20, 2004
Prof. Andreas Savvides, Spring 2004
http://www.eng.yale.edu/courses/eeng449bG
4/20/04 EENG449b/Savvides Lec 19.1

Announcements
• Midterm 2 next time (20% of class grade)
  – Material from chapters 3, 4, 5
  – Use lecture slides and HW exercises as a study guide
• Project presentation (10% of grade)
  – April 26th (or May 4th)
• Project reports (15% of grade)
  – Due May 6th

Main Memory Background
• Performance of Main Memory:
  – Latency: Cache Miss Penalty
    » Access Time: time between when a request is issued and when the word arrives
    » Cycle Time: minimum time between successive requests
  – Bandwidth: I/O & Large Block Miss Penalty (L2)
• Main Memory is DRAM: Dynamic Random Access Memory
  – Dynamic since it needs to be refreshed periodically (8 ms, 1% time)
  – Addresses divided into 2 halves (Memory as a 2D matrix):
    » RAS or Row Access Strobe
    » CAS or Column Access Strobe
• Cache uses SRAM: Static Random Access Memory
  – No refresh (6 transistors/bit vs. 1 transistor for DRAM)
  – Size: DRAM/SRAM 4-8; Cost/Cycle time: SRAM/DRAM 8-16

Main Memory Organizations
• Simple:
  – CPU, Cache, Bus, Memory same width (32 or 64 bits)
• Memory Performance Example
  – 4 cycles to send address
  – 56 cycles access time per word
  – 4 clock cycles to send a word of data
  – Cache block size: 4 words of 8 bytes each
• Miss Penalty: 4 x (4 + 56 + 4) = 256 clock cycles

Main Memory Organizations
• Wide Memory Organization:
  – CPU/Mux 1 word; Mux/Cache, Bus, Memory N words (Alpha: 64 bits & 256 bits; UltraSPARC 512)
• Consider memory width of 2 words
  – Miss penalty: 2 x (4 + 56 + 4) = 128 cycles
• 4-word width => 64 cycles
• Wide Memory drawbacks
  – HW overhead: wider bus and multiplexers at each level
  – If error correction is supported, the whole block has to be read on each byte write to compute a new code

Main Memory Organizations
• Memory Interleaving:
  – CPU, Cache, Bus 1 word; Memory N Modules (4 Modules); example is word interleaved
• New Miss Penalty: 4 + 56 + (4 x 4) = 76 cycles
• Bank advantages
  – Can have up to 1-word/cycle writes if writes are not to the same bank

Memory Technologies

Memory Technologies: DRAM
• Dynamic Random Access Memory
• Write
  – Charge bitline HIGH or LOW and set wordline HIGH
• Read
  – Bit line is precharged to a voltage halfway between HIGH and LOW, and then the word line is set HIGH.
  – Depending on the charge in the cap, the precharged bitline is pulled slightly higher or lower.
  – Sense amp detects the change
  – Destructive read!
• Explains why cap can’t shrink
  – Need to sufficiently drive bitline
  – Increase density => increase parasitic capacitance
[Figure: DRAM cell with word line, capacitor C, bit line, and sense amp]

DRAM Charge Leakage
• Need to have frequent refresh, rates vary from ms to ns, update approx. every 8 reads

DRAM logical organization (4 Mbit)
[Figure: address lines A0…A13, column decoder, sense amps & I/O, memory array (16,384 x 16,384), data in (D), data out (Q), word line, bit line, storage cell]
• Square root of bits per RAS/CAS

DRAM-chip internal organization
[Figure: 64K x 1 DRAM]

RAS/CAS operation
• Row Address Strobe, Column Address Strobe
  – n address bits are provided in two steps using n/2 pins, referenced to the falling edges of RAS_L and CAS_L
  – Traditional method of DRAM operation for 20 years.
  – Now being supplanted by synchronous, clocked interfaces in SDRAM (synchronous DRAM).

DRAM read timing
[Figure: DRAM read timing, with read latency marked]

DRAM refresh timing
[Figure: DRAM refresh timing]

DRAM write timing
[Figure: DRAM write timing]

DRAM History
• DRAMs: capacity +60%/yr, cost –30%/yr
  – 2.5X cells/area, 1.5X die size in 3 years
• ‘98 DRAM fab line costs $2B
  – DRAM only: density, leakage v. speed
• Rely on increasing no. of computers & memory per computer (60% market)
  – SIMM or DIMM is replaceable unit => computers use any generation DRAM
• Commodity, second source industry => high volume, low profit, conservative
  – Little organization innovation in 20 years
• Order of importance: 1) Cost/bit 2) Capacity
  – First RAMBUS: 10X BW, +30% cost => little impact

So, Why do I freaking care?
• By its nature, DRAM isn’t built for speed
  – Response times depend on capacitive circuit properties, which get worse as density increases
• DRAM process isn’t easy to integrate into CMOS process
  – DRAM is off chip
  – Connectors, wires, etc. introduce slowness
  – IRAM efforts are looking at integrating the two
• Memory architectures are designed to minimize the impact of DRAM latency
  – Low level: memory chips
  – High level: memory designs
  – You will pay $$$$$$ and then some $$$ for a good memory system.

So, Why do I freaking care?
[Figure: plot with curves labeled CPU and DRAM; x-axis years 1980-2000; y-axis log scale from 1 to 1000]
• 1960-1985: Speed = ƒ(no. operations)
• 1990
  – Pipelined Execution & Fast Clock Rate
  – Out-of-Order execution
  – Superscalar Instruction Issue
• 1998: Speed = ƒ(non-cached memory accesses)

DRAM Future: 1 Gbit DRAM (ISSCC ‘96; production ‘02?)

|              | Mitsubishi    | Samsung       |
|--------------|---------------|---------------|
| Blocks       | 512 x 2 Mbit  | 1024 x 1 Mbit |
| Clock        | 200 MHz       | 250 MHz       |
| Data Pins    | 64            | 16            |
| Die Size     | 24 x 24 mm    | 31 x 21 mm    |
| Metal Layers | 3             | 4             |
| Technology   | 0.15 micron   | 0.16 micron   |

  – Die sizes will be much smaller in production
• Latency comparison: 180 ns in 1980, 40 ns in 2002

Static RAM (SRAM)
• Six transistors in cross-connected fashion
  – Prevents the information from being disturbed when read
  – SRAM requires minimal power to retain the charge, better than DRAM
  – On the same process, DRAM has 4-8 times more capacity; SRAMs are 8-16 times faster
[Figure: Single Port 6-T SRAM Cell]

Fast Memory Systems: DRAM specific
• Multiple CAS accesses: several names (page mode)
  – Extended Data Out (EDO): 30% faster in page mode
• New DRAMs to address gap; what will they cost, will they survive?
  – RAMBUS: startup company; reinvent DRAM interface
    » Each chip a module vs. slice of memory
    » Short bus between CPU and chips (300-400 MHz, < 4 inches long)
    » Does own refresh
    » Variable amount of data returned
    » 1 byte / 2 ns (500 MB/s per chip) @1.6GB/sec bandwidth
    » 20% increase in DRAM area
  – Synchronous DRAM (SDRAM): 2 banks on chip, a clock signal to DRAM, transfer synchronous to system clock (66 - 150 MHz in 2001)

RAMBUS (RDRAM)
• Protocol based RAM w/ narrow (16-bit) bus
  – High clock rate (400 MHz), but long latency
  – Pipelined operation
• Multiple arrays w/ data transferred on both edges of clock
[Figures: RAMBUS Bank; RDRAM Memory System]

RAMBUS vs. SDRAM
• SDRAM comes in DIMMs, RAMBUS comes in RIMMs
  – Similar in size but incompatible
• SDRAMs have almost comparable performance to RAMBUS
• Newer DRAM generations such as RDRAM and DRDRAM provide more bandwidth at a price premium

Need for Error Correction!
• Motivation:
  – Failures/time proportional to number of bits!
  – As DRAM cells shrink, they become more vulnerable
• Went through a period in which the failure rate was low enough without error correction that people didn’t do correction
  – DRAM banks too large now
  – Servers always corrected memory systems
• Basic idea: add redundancy through parity bits
  – Simple but wasteful version:
    » Keep three copies of everything, vote to find right value
    » 200% overhead, so not good!
  – Common configuration: Random error correction
    » SEC-DED (single error correct, double error detect)
    » One example: 64 data bits + 8 parity bits (11% overhead)
    » Papers up on reading list from last term tell you how to do these types of codes
  – Really want to handle failures of physical components as well
    » Organization is multiple DRAMs/SIMM, multiple SIMMs
    » Want to recover from failed DRAM and failed SIMM!
    » Requires more redundancy to do this
    » All major vendors thinking about this in high-end machines

More esoteric Storage Technologies?
• Tunneling Magnetic Junction RAM (TMJ-RAM):
  – Speed of SRAM, density of DRAM, non-volatile (no refresh)
  – New field called “Spintronics”: combination of quantum spin and electronics
  – Same technology used in high-density disk drives
• Microelectromechanical Systems (MEMS) storage devices:
  – Large magnetic “sled” floating on top of lots of little read/write heads
  – Micromechanical actuators move the sled back and forth over the heads

Tunneling Magnetic Junction
[Figure: Tunneling Magnetic Junction]

MEMS-based Storage
• Magnetic “sled” floats on array of read/write heads
  – Approx. 250 Gbit/in²
  – Data rates:
    » IBM: 250 MB/s w/ 1000 heads
    » CMU: 3.1 MB/s w/ 400 heads
• Electrostatic actuators move media around to align it with heads
  – Sweep sled ±50 µm in < 0.5s
• Capacity estimated to be in the 1-10 GB range in 10 cm²
See Ganger et al.: http://www.lcs.ece.cmu.edu/research/MEMS

Embedded Processor Memory Technologies
• Read Only Memory (ROM)
  – Programmed once at manufacture time
  – Non-destructible
• FLASH Memory
  – Non-volatile but re-programmable
  – Almost DRAM reading speeds but 10-100 times slower writing
    » Typical access times: 65 ns for 16 Mbit flash and 150 ns for 128 Mbit flash
  – Flash building blocks are based on NOR or NAND devices
    » NOR devices can be reprogrammed about 100,000 cycles
    » NAND devices can be reprogrammed up to 1,000,000 cycles

Project & Reports
• Your final report should build on the midterm report
  – Document your software architecture & approach
  – If you have hardware, document the hardware
    » Useful metrics: power consumption (in mA current drawn from the power supply)
• Projects dealing with evaluations
  – Report on your results
    » Experiments with negative results are as important as positive results. Explain what did not work and why
• Demo your project status on the day of final presentation

Concluding Remarks
• Processor internals
  – Performance, Pipelining, ILP SW & HW, Memory Hierarchies
• Processor interfaces
  – Using microcontrollers, peripherals and tools
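
Worked example: miss penalty by memory organization

The miss-penalty figures quoted on the Main Memory Organizations slides (256, 128, 64 and 76 cycles) can be reproduced with a few lines of Python. This is only a sketch for checking the arithmetic: the timing constants come from the Memory Performance Example, while the function names `simple_miss_penalty` and `interleaved_miss_penalty` are hypothetical helpers introduced here, and the interleaved case assumes one bank per word of the cache block, as in the 4-module example.

```python
# Timing parameters from the Memory Performance Example (in clock cycles).
ADDR_CYCLES = 4      # cycles to send the address
ACCESS_CYCLES = 56   # access time per word
XFER_CYCLES = 4      # cycles to send one word of data
BLOCK_WORDS = 4      # cache block size in words


def simple_miss_penalty(width_words=1):
    """Miss penalty when bus and memory are `width_words` wide (hypothetical helper).

    Each memory-width chunk of the block pays the full
    address + access + transfer sequence.
    """
    transfers = BLOCK_WORDS // width_words
    return transfers * (ADDR_CYCLES + ACCESS_CYCLES + XFER_CYCLES)


def interleaved_miss_penalty():
    """Miss penalty with word-interleaved banks and a one-word bus (hypothetical helper).

    Assumes one bank per word of the block, so the address is sent once,
    all banks access in parallel, and the words are then sent one at a time.
    """
    return ADDR_CYCLES + ACCESS_CYCLES + BLOCK_WORDS * XFER_CYCLES


if __name__ == "__main__":
    print("simple, 1 word wide:", simple_miss_penalty(1))   # 256
    print("wide, 2 words:", simple_miss_penalty(2))          # 128
    print("wide, 4 words:", simple_miss_penalty(4))          # 64
    print("interleaved, 4 banks:", interleaved_miss_penalty())  # 76
```

The comparison shows why interleaving is attractive: it gets most of the benefit of a 4-word-wide memory (76 vs. 64 cycles) while keeping the bus and cache interface one word wide.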