ECO-DNS

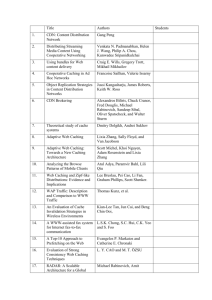

ECO-DNS: Expected Consistency Optimization for DNS
Chen Chen, Stephanos Matsumoto, Adrian Perrig
© 2013 Stephanos Matsumoto

Motivation
[Figure: Clients 1, 2, and 3 query a caching server for ece.cmu.edu, which is resolved through the DNS hierarchy (., com, edu, cmu, cs, ece); the addresses 128.2.136.200 and 128.2.129.29 both appear, labeled "Inconsistency"]

Motivation
• DNS caching is critical to reducing server load
• However, cached records can be inconsistent
• Currently, inconsistency can only be controlled by time-to-live (TTL)

Motivation
• Inconsistent records for popular sites affect many users
• Server operators "set and forget" TTLs
• These problems will likely get worse due to…
  – Content delivery networks (CDNs)
  – Dynamic DNS (DDNS)
  – Future Internet proposals (Mobility First, SCION)

Contributions
• Expected Aggregate Inconsistency (EAI)
  – Consistency metric that considers a record's update frequency and popularity
• ECO-DNS
  – Consistency control mechanism for DNS
  – Preserves "pull-based" nature of DNS
  – Backwards-compatible

Key Ideas
• Considering a record's update frequency and popularity tells us what inconsistency we need to focus on
• ECO-DNS considers these factors to create an automated, backwards-compatible, flexible consistency control mechanism
• Remember this if you remember nothing else

Outline
• Model
  – Defining an Inconsistency Metric
  – Caching server topology
  – Assumptions
  – Model and optimization
• System Design
• Evaluation
• Future Work and Conclusion

Defining an Inconsistency Metric
• Inconsistency is caused by stale DNS records
• How bad is this inconsistency?
• More specifically:
  – How stale is a record when it is returned?
  – How many stale records are returned overall?
• TTLs can only approximate these factors

Defining an Inconsistency Metric
• Inconsistency: # of updates to r between t and tq
• Expected aggregate inconsistency (EAI)
  [Equation not preserved; annotation: "All queries for r in time interval T"]

Caching Server Topology
• Records are generally cached directly from the authoritative server
• Caching from other caches is discouraged, but future schemes may change this
• Thus we consider caching in a logical cache tree

Caching Server Topology
[Figure: server hierarchy, from authoritative server to intermediate caching server to leaf caching server to client]

Caching Server Topology
[Figure: timeline with labels t0, t1, t2, T0, C0, T1, C1, T2, C2, and tq; caption: Inconsistency "cascades"]

Assumptions
• Query and update arrivals can be modeled by a Poisson Process
• Query patterns of different caching servers are independent
• Caching servers proactively cache (prefetch) new record before expiration

Model and Optimization
• How does our Poisson process assumption help us calculate the inconsistency?
• Over time period of length ΔT:
  – Expected query arrival time
  – Expected missed updates for each query
  – Expected number of queries
• EAI for a single cache
[Equations for these quantities not preserved]

Model and Optimization
[Figure: timeline with labels t0, t1, t2, T0, C0, T1, C1, T2, C2, and tq; caption: Inconsistency "cascades"]

Model and Optimization
[Slide content not preserved]

Model and Optimization
• Based on the Poisson Process assumption, the EAI over a time interval is
  [Equation not preserved]

Model and Optimization
[Slide content not preserved]

Model and Optimization
• The TTL minimizing the cost function is:
  [Equation not preserved]

Outline
• Model
• System Design
  – Parameter Monitoring and Aggregation
  – Setting the TTL
  – Prefetching DNS Records
• Evaluation
• Future Work and Conclusion

Parameter Monitoring/Aggregation
• What information is necessary to find the optimal TTL?
• Which nodes need to keep track of this information?

Parameter Monitoring/Aggregation
[Figure: cache tree annotated with "Set update frequency μ", "Estimate λ from queries", and "Collect λ's from children"]

Parameter Monitoring/Aggregation
• Estimate λ using a sliding window for queries
• Send λ using special DNS message

Setting the TTL
• Two choices for TTL:
  – Record-specified TTL (current method)
  – Locally calculated optimal TTL
• What are the pros and cons of each?
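The locally calculated TTL can be illustrated for a single cache. The sketch below assumes the Poisson model from the Assumptions slide: queries arrive at rate λ and updates at rate μ, so one TTL window of length ΔT sees λΔT expected queries, each missing μΔT/2 updates on average, for an EAI of ½λμΔT² per window. The cost function (inconsistency rate plus a weight `c` times a per-refresh bandwidth `b`), the closed form in `optimal_ttl`, and all function names are assumptions made for illustration, not the deck's own equations.

```python
import math


def eai_per_window(lam: float, mu: float, ttl: float) -> float:
    """Expected aggregate inconsistency accumulated during one TTL window.

    Assumes Poisson queries (rate lam) and Poisson updates (rate mu):
    lam * ttl expected queries, each missing mu * ttl / 2 updates on average.
    """
    return 0.5 * lam * mu * ttl ** 2


def cost_rate(lam: float, mu: float, ttl: float, c: float, b: float) -> float:
    """Hypothetical cost per unit time: inconsistency plus weighted bandwidth.

    One refresh of size b happens every ttl time units, so the bandwidth
    rate is b / ttl; c converts bandwidth into inconsistency units.
    """
    inconsistency_rate = eai_per_window(lam, mu, ttl) / ttl
    return inconsistency_rate + c * b / ttl


def optimal_ttl(lam: float, mu: float, c: float, b: float) -> float:
    """TTL minimizing cost_rate, from setting its derivative to zero."""
    return math.sqrt(2.0 * c * b / (lam * mu))
```

Under these assumptions, a more popular or more frequently updated record gets a shorter TTL, while a rarely queried record gets a very long one.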

Setting the TTL
• If we only use the record-specified TTL
  – Very little consistency control
  – Cache poisoning attacks can last a long time
• If we only use the locally calculated TTL
  – Unpopular records have a very large TTL

Setting the TTL
• Solution: set TTL to be the minimum of the two values
• Record-specified TTL becomes an upper bound

Prefetching DNS Records
• Pros and cons of prefetching records
  – Latency for a cache miss can be an order of magnitude more than a cache hit
  – Prefetching an unpopular record provides little benefit for the extra cost in bandwidth overhead

Prefetching DNS Records
• Solution: prefetch only the most popular records
  – Popularity can be measured by query rate parameter λ or relative to other records
  – Saves cache space by evicting unpopular records

Outline
• Model
• System Design
• Evaluation
  – Goals and Methodology
  – Single-Level Caching
  – Multi-Level Caching
  – Dynamic Parameter Changes
• Future Work and Conclusion

Goals and Methodology
• Three main questions:
  – Does ECO-DNS achieve better consistency than today's DNS for the same bandwidth overhead?
  – Is multi-level caching more consistent than single-level caching for the same bandwidth overhead?
  – How well does ECO-DNS adapt to dynamic parameter changes?
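The TTL rule and the prefetching policy from the System Design slides can be sketched together. This is a minimal illustration, not the authors' implementation: the `CachedRecord` type, the function names, and the fixed `prefetch_threshold` on λ are hypothetical, and a threshold is only one of the two popularity measures the slides mention (the other being popularity relative to other records).

```python
from dataclasses import dataclass


@dataclass
class CachedRecord:
    name: str
    record_ttl: float  # TTL specified in the DNS record (seconds)
    local_ttl: float   # locally calculated optimal TTL (seconds)
    query_rate: float  # estimated lambda (queries per second)


def effective_ttl(rec: CachedRecord) -> float:
    """Use the smaller TTL, so the record-specified TTL is an upper bound."""
    return min(rec.record_ttl, rec.local_ttl)


def should_prefetch(rec: CachedRecord, prefetch_threshold: float) -> bool:
    """Prefetch only records whose query rate meets the assumed threshold."""
    return rec.query_rate >= prefetch_threshold


def on_expiry(rec: CachedRecord, prefetch_threshold: float) -> str:
    """Decide what to do when a cached record reaches its effective TTL."""
    return "prefetch" if should_prefetch(rec, prefetch_threshold) else "evict"
```

For example, a record with `record_ttl=3600` and `local_ttl=12` is refreshed every 12 seconds, while a rarely queried record with a huge `local_ttl` is still capped by its record-specified TTL and evicted rather than prefetched.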

Goals and Methodology
• Simulations based on real-world data
  – KDDI (a large Japanese ISP) provided DNS trace data for 10 minutes every 4 hours over 2 days
  – CAIDA provided inferred AS relationships, which may model the topology of logical cache trees

Single-Level Caching
• Use KDDI traces for query arrival times
• Compare normalized reduced cost U for various c and μ

Single-Level Caching
[Figure: normalized reduced target cost (0.1 to 1) versus average update interval μ⁻¹ (10³ to 10⁸), one curve each for c = 1/1K, 1/100K, 1/10M, 1/100M, and 1/1G]

Multi-Level Caching
• Generate logical cache trees from CAIDA data
• Compare the cost of each node against the cost it would have in today's DNS
• We set the TTL in today's DNS to be the best possible value and compared it to ECO-DNS

Multi-Level Caching
[Figure not preserved]

Dynamic Parameter Changes
• Simulate random queries at the mean rate for each 4-hour period recorded by KDDI
• Use two schemes to estimate λ:
  – Time-based: divide number of queries in the past n seconds by n
  – Count-based: keep track of the nth most recent query time and divide n by the time interval

Dynamic Parameter Changes
[Figure: estimated λ (0 to 1600) versus time (0 to 9 × 10⁴), for estimation windows of 100 s, 1 s, 50 queries, and 5000 queries]

Dynamic Parameter Changes
[Figure: normalized cost (1 to 1.12) versus time (0 to 8 × 10⁴), for estimation windows of 100 s, 1 s, 50 queries, and 5000 queries, with two zoomed insets covering time 0 to 5000 and 8 × 10⁴ to 8.5 × 10⁴]

Outline
• Model
• System Design
• Evaluation
• Future Work and Conclusion

Future Work and Conclusion
• Future Work
  – Full implementation
  – Security considerations
    • What if nodes misrepresent their estimates of λ?
    • What if nodes do not respect their TTL?

Future Work and Conclusion
• Conclusion
  – Our consistency metric EAI allows us to explore the tradeoff among various parameters
  – Our model based on EAI allows us to automate optimization of the TTL to specified inconsistency and bandwidth costs
  – ECO-DNS is lightweight, backwards-compatible, and flexible to real-time network changes

Thank you! Questions?
smatsumoto@cmu.edu
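
The two λ estimators described under Dynamic Parameter Changes can be sketched as follows. The class names, the caller-supplied timestamps, and the choice to return 0.0 before n queries have been seen are assumptions for illustration; the slides do not describe an implementation.

```python
from collections import deque


class TimeBasedEstimator:
    """Time-based scheme: queries seen in the past n seconds, divided by n."""

    def __init__(self, window_seconds: float) -> None:
        self.window = window_seconds
        self.times = deque()

    def record_query(self, now: float) -> None:
        self.times.append(now)

    def estimate(self, now: float) -> float:
        # Drop queries that fell out of the window (now - window, now].
        while self.times and self.times[0] <= now - self.window:
            self.times.popleft()
        return len(self.times) / self.window


class CountBasedEstimator:
    """Count-based scheme: n divided by the time since the nth most recent query."""

    def __init__(self, n: int) -> None:
        self.n = n
        self.times = deque(maxlen=n)  # keeps only the n most recent queries

    def record_query(self, now: float) -> None:
        self.times.append(now)

    def estimate(self, now: float) -> float:
        if len(self.times) < self.n:
            return 0.0  # assumed behavior: not enough queries observed yet
        elapsed = now - self.times[0]
        return self.n / elapsed if elapsed > 0 else float("inf")
```

A longer window (or a larger n) smooths the estimate but reacts more slowly to rate changes, which is presumably the tradeoff that the 100 s, 1 s, 50-query, and 5000-query curves compare.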