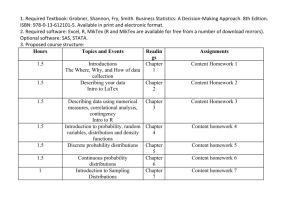

Exam 1 - BYU Department of Economics


First Exam: Economics 388, Econometrics

Spring 2011, R. Butler’s class

YOUR NAME:________________________________________

Section I (30 points) Questions 1-10 (3 points each)

Section II (40 points) Questions 11-15 (10 points each)

Section III (30 points) Question 16 (20 points)

Section I. Define or explain the following terms (3 points each)

1. If $Y \sim N(\mu, \Sigma)$ for an $n\times 1$ random vector $Y$, then for the square matrix of constants $A$, $AY \sim N(?, ?)$.

Derive what the “?” terms are:
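The standard derivation is sketched below. It writes the mean vector and covariance matrix of $Y$ as $\mu$ and $\Sigma$. Linearity of expectation gives the mean, and the definition of the covariance matrix gives the variance. A linear transformation of a multivariate normal vector is again normal.

```latex
\begin{aligned}
E[AY] &= A\,E[Y] = A\mu,\\
\operatorname{Var}(AY) &= E\big[(AY - A\mu)(AY - A\mu)'\big]
  = A\,E\big[(Y - \mu)(Y - \mu)'\big]A' = A\Sigma A',\\
\text{so}\quad AY &\sim N\big(A\mu,\; A\Sigma A'\big).
\end{aligned}
```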

2. sample regression vs. population regression function
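The simulation below is a sketch that assumes a hypothetical population regression function, E(y | x) = 1 + 2x. The population function stays fixed. Each random sample of 50 observations yields its own sample regression line (OLS intercept and slope), and these lines scatter around the population line.

```python
import random

# Hypothetical population regression function: E[y | x] = 1.0 + 2.0 * x
BETA0, BETA1 = 1.0, 2.0

def draw_sample(n, rng):
    """Draw n (x, y) pairs from the assumed population model y = 1 + 2x + u."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [BETA0 + BETA1 * x + rng.gauss(0, 3) for x in xs]
    return xs, ys

def sample_regression(xs, ys):
    """OLS intercept and slope: the sample regression function for one sample."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

rng = random.Random(0)
fits = [sample_regression(*draw_sample(50, rng)) for _ in range(3)]
for b0, b1 in fits:
    print(f"sample line: y = {b0:.3f} + {b1:.3f} x")
```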

3. STATA code that tests for multicollinearity (just after a regression is run)?
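In Stata, the postestimation command `estat vif` reports variance inflation factors after `regress`. The Python sketch below illustrates the idea on simulated data. To keep it short, it assumes exactly two regressors and computes the VIF for that case only. A common rule of thumb treats VIF values above 10 as a sign of serious multicollinearity.

```python
import random

def correlation(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / (saa * sbb) ** 0.5

def vif_two_regressors(x1, x2):
    """VIF when the regression has exactly two regressors.

    The auxiliary R^2 from regressing one regressor on the other equals
    their squared correlation, so both share VIF = 1 / (1 - r^2).
    """
    r = correlation(x1, x2)
    return 1.0 / (1.0 - r * r)

rng = random.Random(1)
x2 = [rng.gauss(0, 1) for _ in range(200)]
x1_low = [rng.gauss(0, 1) for _ in range(200)]   # unrelated to x2
x1_high = [v + rng.gauss(0, 0.1) for v in x2]    # nearly collinear with x2
print(vif_two_regressors(x1_low, x2), vif_two_regressors(x1_high, x2))
```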

4. sample regression vs. population regression function

5. T or F: “The F-distribution and chi-squared distribution are two symmetric (non-skewed) distributions used for testing hypotheses.”
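The quick numerical check below simulates chi-squared draws as sums of squared standard normals. The sample skewness comes out clearly positive, which is consistent with the theoretical chi-squared(k) skewness of √(8/k). The F distribution is also right-skewed, but this sketch does not simulate it.

```python
import random

def chi2_draws(k, reps, rng):
    """Simulate chi-squared(k) draws as sums of k squared standard normals."""
    return [sum(rng.gauss(0, 1) ** 2 for _ in range(k)) for _ in range(reps)]

def sample_skewness(xs):
    """Moment-based skewness: third central moment over sd cubed."""
    n = len(xs)
    m = sum(xs) / n
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5

rng = random.Random(2)
draws = chi2_draws(k=3, reps=20000, rng=rng)
print(round(sample_skewness(draws), 2))  # theory for k = 3: sqrt(8/3), about 1.63
```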

6. T or F: “The F-distribution and chi-squared distribution are two symmetric (non-skewed) distributions used for testing hypotheses.”

7. STATA code to create a dummy variable for male (=1 when male and 0 otherwise) from a SEX variable that equals 1 when the respondent is male and 2 when the respondent is female.
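One common Stata idiom is `generate male = (SEX == 1)`. It relies on a logical expression evaluating to 1 or 0. Note that a missing SEX value would also be coded 0. The same recoding is sketched below in Python, applied to a hypothetical list of codes.

```python
def male_dummy(sex_codes):
    """Return 1 for male (code 1) and 0 otherwise (e.g. code 2 for female)."""
    return [1 if code == 1 else 0 for code in sex_codes]

print(male_dummy([1, 2, 2, 1]))  # [1, 0, 0, 1]
```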

8. STATA code for getting descriptive statistics
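In Stata, `summarize` reports descriptive statistics, and the `detail` option adds more of them. Below is a rough Python analogue on made-up data. The `summarize` function here is a hypothetical helper, not a library call. It computes the count, mean, sample standard deviation, minimum and maximum.

```python
import statistics

def summarize(values):
    """Rough analogue of a descriptive-statistics summary: N, mean, sd, min, max."""
    return {
        "N": len(values),
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample (n - 1) standard deviation
        "min": min(values),
        "max": max(values),
    }

print(summarize([2.0, 4.0, 4.0, 5.0]))
```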

9. estimator vs. estimate (for some unknown parameter 𝜃)
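A minimal illustration on made-up data follows. The estimator is the rule: here, the sample mean, written as a function of any sample. The estimate is the particular number that the rule produces for one observed sample.

```python
def sample_mean(sample):
    """An estimator: a rule (function) mapping any sample to a guess of theta."""
    return sum(sample) / len(sample)

observed = [3.1, 2.9, 3.4, 3.0]
estimate = sample_mean(observed)  # an estimate: the rule's value for this sample
print(estimate)
```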

10. Var(w), where w is an n×1 vector of random variables
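For reference, the usual definition is given below, writing $w = (w_1, \ldots, w_n)'$. Var(w) is the $n\times n$ variance-covariance matrix, with variances on the diagonal and covariances off it. It is symmetric and positive semidefinite.

```latex
\operatorname{Var}(w) = E\big[(w - E[w])(w - E[w])'\big]
= \begin{pmatrix}
\operatorname{Var}(w_1) & \operatorname{Cov}(w_1, w_2) & \cdots & \operatorname{Cov}(w_1, w_n)\\
\operatorname{Cov}(w_2, w_1) & \operatorname{Var}(w_2) & \cdots & \operatorname{Cov}(w_2, w_n)\\
\vdots & \vdots & \ddots & \vdots\\
\operatorname{Cov}(w_n, w_1) & \operatorname{Cov}(w_n, w_2) & \cdots & \operatorname{Var}(w_n)
\end{pmatrix}
```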

II. Some STATA code

11-12. Indicate whether the following statements are True, False or Uncertain (indicate which; you are graded only on your explanation of your answer):

11a. “If all my sample observations have exactly the same level of ability, then omitting ability from the empirical specification where I regress wages on education will lead to no omitted variable bias.”
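The small simulation sketch below uses hypothetical values: a return to education of 0.08, an ability effect of 0.5, and ability fixed at 1.2 for everyone. Log wages are generated with ability included, but the regression omits it. Because ability never varies in this sample, its contribution is absorbed into the intercept. The estimated education slope therefore stays close to its true value.

```python
import random

def ols_slope(xs, ys):
    """OLS slope from a simple regression of ys on xs (with intercept)."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    return sxy / sxx

TRUE_EDUC_EFFECT = 0.08   # hypothetical return to one year of education
ABILITY_EFFECT = 0.5      # hypothetical effect of ability on log wages
ABILITY = 1.2             # every observation has the same ability level

rng = random.Random(3)
educ = [rng.randint(10, 20) for _ in range(500)]
log_wage = [1.0 + TRUE_EDUC_EFFECT * e + ABILITY_EFFECT * ABILITY + rng.gauss(0, 0.3)
            for e in educ]

slope = ols_slope(educ, log_wage)  # ability is omitted from this regression
print(round(slope, 3))
```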

11b. “The most important model assumption is that the population errors are normally distributed. Unless this assumption holds, we can do no testing in our regression models.”
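The Monte Carlo sketch below assumes a true slope of zero and deliberately skewed errors (a centred exponential). With n = 200, the usual t-test on the slope uses the large-sample critical value 1.96. It rejects at a rate near its nominal 5 percent. This illustrates that approximate (asymptotic) testing does not require normal errors, although the exact small-sample t and F distributions do rely on normality.

```python
import math
import random

def slope_t_stat(xs, ys):
    """OLS slope divided by its conventional standard error."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / sxx
    b0 = ybar - b1 * xbar
    ssr = sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
    se = math.sqrt(ssr / (n - 2) / sxx)
    return b1 / se

rng = random.Random(4)
n, reps = 200, 1000
rejections = 0
for _ in range(reps):
    xs = [rng.uniform(0, 1) for _ in range(n)]
    # True slope is 0; errors are skewed (centred exponential), not normal.
    ys = [1.0 + (rng.expovariate(1.0) - 1.0) for _ in xs]
    if abs(slope_t_stat(xs, ys)) > 1.96:
        rejections += 1
rate = rejections / reps
print(rate)  # expected to be near the nominal 0.05 in large samples
```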

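12. “For the simple regression population model $y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$, the normal equations imply both that the simple regression line always passes through the sample means,

$$\bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}, \quad \text{where } \bar{y} = \frac{\sum_{i=1}^{N} y_i}{N} \text{ and } \bar{x} = \frac{\sum_{i=1}^{N} x_i}{N},$$

and that the residuals always sum to zero.”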
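The quick numerical check below uses made-up data. The first normal equation, $\sum_i (y_i - \hat\beta_0 - \hat\beta_1 x_i) = 0$, states that the residuals sum to zero. Dividing it by N gives the line through the sample means. The second normal equation, $\sum_i x_i \hat e_i = 0$, is checked as well.

```python
def ols_fit(xs, ys):
    """Simple-regression OLS intercept and slope."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    return b0, b1

xs = [1.0, 2.0, 4.0, 5.0, 7.0]
ys = [2.1, 2.9, 5.2, 5.8, 8.1]
b0, b1 = ols_fit(xs, ys)
residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
xbar, ybar = sum(xs) / len(xs), sum(ys) / len(ys)
print(abs(ybar - (b0 + b1 * xbar)))                 # ~0: line passes through the means
print(abs(sum(residuals)))                          # ~0: residuals sum to zero
print(abs(sum(x * e for x, e in zip(xs, residuals))))  # ~0: residuals orthogonal to x
```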


13. Let $y_1, y_2, y_3, \ldots, y_n$ be a random sample of size $n$, independently drawn from a normal distribution with mean $\upsilon$ and variance $\sigma^2$, $N(\upsilon, \sigma^2)$. Consider the following point estimators of $\upsilon$:

$\hat{\upsilon}_1 = \bar{y}$, the sample mean

$\hat{\upsilon}_2 = y_1$, just use the first sample value as the estimator

$\hat{\upsilon}_3 = \dfrac{y_1}{2} + \dfrac{1}{2(n-1)}\left[y_2 + y_3 + y_4 + y_5 + y_6 + \cdots + y_n\right]$

a. Which are unbiased?

b. Which are consistent?

c. What are their respective variances?
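The simulation sketch below uses illustrative values: $\upsilon = 10$, $\sigma = 2$ and $n = 20$. The algebra gives $E(\hat\upsilon_3) = \upsilon/2 + (n-1)\upsilon/[2(n-1)] = \upsilon$ and $\operatorname{Var}(\hat\upsilon_3) = \sigma^2/4 + \sigma^2/[4(n-1)]$. The corresponding variances are $\sigma^2/n$ for the sample mean and $\sigma^2$ for $y_1$. The code compares these formulas with simulated values. Only the sample mean's variance shrinks to zero as $n$ grows.

```python
import random

def estimators(sample):
    """Return (v1, v2, v3) for one sample, following the definitions in question 13."""
    n = len(sample)
    v1 = sum(sample) / n                                  # sample mean
    v2 = sample[0]                                        # first observation only
    v3 = sample[0] / 2 + sum(sample[1:]) / (2 * (n - 1))  # weighted combination
    return v1, v2, v3

def theoretical_variances(sigma2, n):
    """Variances implied by the algebra: sigma^2/n, sigma^2, sigma^2/4 + sigma^2/(4(n-1))."""
    return sigma2 / n, sigma2, sigma2 / 4 + sigma2 / (4 * (n - 1))

def simulate(upsilon, sigma, n, reps, seed=5):
    """Monte Carlo means and variances of the three estimators."""
    rng = random.Random(seed)
    draws = [estimators([rng.gauss(upsilon, sigma) for _ in range(n)]) for _ in range(reps)]
    means = [sum(d[j] for d in draws) / reps for j in range(3)]
    variances = [sum((d[j] - means[j]) ** 2 for d in draws) / (reps - 1) for j in range(3)]
    return means, variances

means, variances = simulate(upsilon=10.0, sigma=2.0, n=20, reps=20000)
print(means)                          # all three near 10.0 (unbiased)
print(variances)                      # compare with the line below
print(theoretical_variances(4.0, 20))
```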


14. Assume that the “skipping class” variable should be tested using a one-tailed test, where the null hypothesis is that skipping class has no effect on college GPA and the alternative hypothesis is that skipping class lowers college GPA.

a. What is the mathematical way of stating the null hypothesis and the alternative hypothesis?

b. What is the probability of making a type-II error, assuming i) that the significance level (the probability of a type-I error) is 5 percent, and ii) that the true coefficient on the skipping variable is

a. -0.07?

b. -0.09?

given the following:

| VARIABLE NAME | ESTIMATED COEFFICIENT | STANDARD ERROR | T-RATIO (137 DF) | P-VALUE |
|---|---|---|---|---|
| HSGPA | 0.41182 | 0.09367 | 4.396 | 0.000 |
| ACT | 0.014720 | 0.01056 | 1.393 | 0.166 |
| SKIPPED | -0.083113 | 0.02600 | -3.197 | 0.002 |
| CONSTANT | 1.3896 | 0.3316 | 4.191 | 0.000 |
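The sketch below computes the type-II error probabilities using Python's `statistics.NormalDist`. It takes the standard error of SKIPPED from the table (0.02600) and approximates the t distribution with 137 degrees of freedom by a normal. The test rejects $H_0: \beta = 0$ in favor of $\beta < 0$ when $\hat\beta < -1.645 \times 0.026$. The type-II error probability is the chance that $\hat\beta$ lands above that cutoff when the true coefficient is -0.07 or -0.09. The exact t critical value is slightly larger than 1.645, so the exact probabilities are slightly larger than these approximations.

```python
from statistics import NormalDist

def type2_error(true_beta, se, alpha=0.05):
    """P(fail to reject H0: beta = 0 | true beta) for a one-tailed test against beta < 0.

    Uses a normal approximation to the t distribution and treats se as known.
    """
    z = NormalDist()
    cutoff = z.inv_cdf(alpha) * se  # reject H0 when beta_hat < cutoff
    return 1 - z.cdf((cutoff - true_beta) / se)

SE_SKIPPED = 0.02600  # standard error of SKIPPED from the table
for beta in (-0.07, -0.09):
    print(beta, round(type2_error(beta, SE_SKIPPED), 3))
```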


15. Draw a diagram to illustrate the three “classical” tests based on likelihood estimation, with a parameter (say, $\beta_2$) on the horizontal axis and the log-likelihood (and constraint) on the vertical axis. Suppose that the constraint to be tested is $\beta_2 = 0$ and that the unconstrained likelihood value is greater than the likelihood value under the constraint. Roughly indicate the three classical tests on your diagram, and state their asymptotic distribution under the null hypothesis that $\beta_2 = 0$.
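The sketch below computes the three statistics in the simplest case. It assumes a normal linear regression $y_i = \beta_1 + \beta_2 x_i + \varepsilon_i$ with $H_0: \beta_2 = 0$, fitted to simulated data. With the error variance concentrated out, the Wald, likelihood ratio and Lagrange multiplier statistics have closed forms in terms of the restricted and unrestricted sums of squared residuals. On the diagram:

- LR is twice the vertical gap in log-likelihoods.
- Wald is the squared horizontal distance between $\hat\beta_2$ and 0, scaled by the curvature.
- LM is the squared slope of the log-likelihood at $\beta_2 = 0$, scaled by the curvature.

Under the null, each statistic is asymptotically $\chi^2(1)$. In this model they always satisfy W ≥ LR ≥ LM.

```python
import math
import random

def ssr_restricted(ys):
    """SSR of the restricted model y = b1 + e (beta_2 = 0)."""
    ybar = sum(ys) / len(ys)
    return sum((y - ybar) ** 2 for y in ys)

def ssr_unrestricted(xs, ys):
    """SSR of the unrestricted model y = b1 + b2 * x + e."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    b2 = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / sxx
    b1 = ybar - b2 * xbar
    return sum((y - b1 - b2 * x) ** 2 for x, y in zip(xs, ys))

rng = random.Random(6)
xs = [rng.uniform(0, 1) for _ in range(100)]
ys = [1.0 + 0.3 * x + rng.gauss(0, 1) for x in xs]  # hypothetical data
n = len(xs)
ssr_r, ssr_u = ssr_restricted(ys), ssr_unrestricted(xs, ys)
wald = n * (ssr_r - ssr_u) / ssr_u
lr = n * math.log(ssr_r / ssr_u)
lm = n * (ssr_r - ssr_u) / ssr_r
print(wald, lr, lm)  # each is compared with the chi-squared(1) 5% critical value, 3.84
```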


16. Suppose that the population model is $y_i = \beta_1 x_i + \varepsilon_i$, where $\varepsilon_i$ is distributed as a normal random variable with a mean of zero and a variance of $\sigma^2$. Note that there is only a slope coefficient but no intercept coefficient. Also assume that the population errors are uncorrelated (independent).

a. Use the orthogonality condition (that the length of the error vector is minimized) to get the “normal” equation, and then solve that normal equation for the least squares estimator of $\beta_1$, which we denote by $\hat{\beta}_1$.

b. Show that 𝛽̂1 is unbiased.

c. Show that $\hat{\beta}_1$ is the BEST (meaning minimum variance) LINEAR UNBIASED ESTIMATOR among all linear (in $Y$), unbiased estimators. (HINT: consider the estimator $\tilde{\beta} = \{(X'X)^{-1}X' + C\}Y$, which is linear in $Y$ ($n\times 1$), where $X$ is $n\times 1$ and $C$ is an arbitrary $1\times n$ matrix.)
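The numerical sketch below covers parts a to c. It assumes nonrandom regressors and uses made-up data and parameter values.

- Part a checks the normal equation $X'(Y - X\hat\beta_1) = 0$, whose solution is $\hat\beta_1 = \sum x_i y_i / \sum x_i^2$.
- Part b averages $\hat\beta_1$ over simulated samples.
- Part c compares $\hat\beta_1$ with one hypothetical competitor, the ratio-of-means estimator $\bar y / \bar x$, written in the hint's form $[(X'X)^{-1}X' + C]Y$.

For that competitor, $CX = 0$, so it is unbiased. Its variance equals $\operatorname{Var}(\hat\beta_1) + \sigma^2 CC'$, which is never smaller than $\operatorname{Var}(\hat\beta_1)$.

```python
import random

def slope_through_origin(xs, ys):
    """Least squares slope for y = b1*x + e with no intercept: sum(x*y) / sum(x^2)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

# (a) Normal equation X'(Y - X*b1_hat) = 0: residuals are orthogonal to x.
xs_a = [1.0, 2.0, 3.0, 4.0]
ys_a = [2.2, 3.9, 6.1, 8.0]
b1_hat = slope_through_origin(xs_a, ys_a)
resid = [y - b1_hat * x for x, y in zip(xs_a, ys_a)]
orthogonality = sum(x * e for x, e in zip(xs_a, resid))  # ~0 up to rounding
resid_sum = sum(resid)  # need not be 0, because there is no intercept

# (b) Unbiasedness: average b1_hat over many samples with x held fixed.
BETA1, SIGMA = 1.5, 2.0  # hypothetical true slope and error sd
xs_b = [0.5 * i for i in range(1, 31)]
rng = random.Random(7)
estimates = [
    slope_through_origin(xs_b, [BETA1 * x + rng.gauss(0, SIGMA) for x in xs_b])
    for _ in range(5000)
]
mean_estimate = sum(estimates) / len(estimates)  # expected to be near BETA1

# (c) Compare with another linear unbiased estimator, beta_tilde = ybar / xbar.
def ols_weights(xs):
    """The row vector (X'X)^{-1} X' when X is a single column."""
    sxx = sum(x * x for x in xs)
    return [x / sxx for x in xs]

def ratio_of_means_weights(xs):
    """Weights for ybar / xbar = sum(y) / sum(x); requires sum(xs) != 0."""
    return [1.0 / sum(xs)] * len(xs)

def linear_estimator_variance(weights, sigma2):
    """Var(wY) = sigma^2 * w w' when errors are uncorrelated with variance sigma^2."""
    return sigma2 * sum(w * w for w in weights)

xs_c = [1.0, 2.0, 4.0, 7.0]
w_hat, w_tilde = ols_weights(xs_c), ratio_of_means_weights(xs_c)
C = [wt - wh for wt, wh in zip(w_tilde, w_hat)]  # beta_tilde = [(X'X)^{-1}X' + C] Y
CX = sum(c * x for c, x in zip(C, xs_c))         # 0, which makes beta_tilde unbiased
var_hat = linear_estimator_variance(w_hat, 1.0)
var_tilde = linear_estimator_variance(w_tilde, 1.0)

print(orthogonality, resid_sum, mean_estimate, CX, var_hat, var_tilde)
```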
