AACC NCIA Presentation: Institutional Effectiveness

advertisement

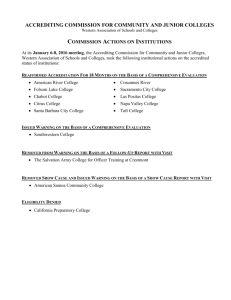

National Council for Instructional Administrators
April 4, 2008

Institutional Effectiveness: Developing an Institutional Philosophy for the Quest for Quality
Terri M. Manning, EdD
Central Piedmont Community College

Institutional Effectiveness (IE)
• A philosophy with a set of processes
• A combination of the content from the following fields:
  – Assessment
  – Program Evaluation
  – Quality Assurance
  – Organizational Development
• Combine these areas, apply the result to higher education, and you get IE

A Definition
• Institutional Effectiveness is an ongoing, integrated and systematic set of institutional processes that include planning, the evaluation of programs and services, the identification and measurement of learning outcomes, and the use of data and assessment results for decision-making that results in improvements in programs, services and institutional quality.

You have had a speaker on this topic for the last several years, yet you ask for additional training on it. Why is this hard for institutions to accomplish?

Why This Is Hard for Many Colleges
• Colleges want someone to tell them exactly how to do this: what is expected.
• They think they are doing it for their accrediting agencies.
• They don’t create processes that are valuable to the institution.
• Their processes are either too simple or too complex.
• They try to drive it from the top down: administration tries to tell the faculty what needs to be done.
• There is no central coordination of efforts and no natural connection between processes.

Why It’s Hard, cont.
• Their approach is like that of students in the classroom: “What exactly do we have to do to get an A on the test?” They completely miss the concept. They don’t “get it.”
• We do not measure learning outcomes so we can say we did it.
• This is not an end in itself.
• It is part of a process leading to a much greater end: improvements in institutional quality.
What You Are Really Doing
• Attempting to create institutional change by:
  – Getting faculty to value something different
  – Measuring themselves differently
  – Having a different philosophy
  – Rewarding themselves for something different
  – Shaking up their power structure
• Is it any wonder we have trouble with this?

Lewin’s Organizational Change Model
• Unfreezing: reducing the forces that maintain the organization’s current behavior. One introduces information that points out discrepancies between “what we have” and “what we want.” This is supposed to motivate members of the organization to engage in change behaviors.
• Moving: shifting the desired behavior to a new level by intervening in the system to develop new values, attitudes and behaviors through changes in processes.
• Refreezing: stabilizing the new behaviors through support mechanisms such as a new culture, policies, norms and structures.
Source: Essentials of Organization Development & Change, Cummings & Worley

What Holds Us Back
• Barriers to Individual and Organizational Change
  – Failure to recognize the need for change
    • We are fine like we are; no need for improvement
  – Habit
    • That is not how we have always done it
  – Security
    • My position or rank or the department’s standing could be affected
  – Fear of the unknown
    • What if we don’t meet our outcomes (our students aren’t learning)? We might be held accountable for it.
  – Previous unsuccessful efforts
    • We tried that before and it failed

What Holds Us Back, cont.
  – Threats to expertise
    • Assessment results are not going to force me to teach my class differently or to improve
  – Threats to social and power relationships
    • The one on top may no longer be on top, no longer the favorite or in control
  – Threats to resource allocation
    • My department or program might lose funding if we do not perform as well as other departments or programs
(from Greenberg & Baron, 2000; Jones, 2004; Robbins, 2000)

Mandates for Institutional Effectiveness
• In 2006, U.S. Secretary of Education Margaret Spellings released “A Test of Leadership: Charting the Future of U.S. Higher Education.” This report addresses the need to transform higher education.
  – “There is inadequate transparency and accountability for measuring institutional performance, which is more and more necessary to maintaining public trust in higher education.” (p. 14)
  – “To meet the challenges of the 21st century, higher education must change from a system primarily based on reputation to one based on performance. We urge the creation of a robust culture of accountability and transparency throughout higher education.” (p. 21)

Mandates for Institutional Effectiveness, cont.
  – “The commission supports the development of a privacy-protected higher education information system that collects, analyzes and uses student-level data as a vital tool for accountability, policymaking, and consumer choice.” (p. 22)
  – “Faculty must be at the forefront of defining educational objectives for students and developing meaningful, evidence-based measures of their progress toward these goals.” (p. 24)
  – “The results of student learning assessments, including value-added measurements that indicate how students’ skills have improved over time should be made available to students and reported in the aggregate publicly.” (p. 24)

Accreditation Has Changed
• Over the past 3–5 years, all six regional accrediting agencies have made significant changes in their processes, all requiring assessment and evaluation and the inclusion of student learning outcomes. The words “institutional effectiveness” are mentioned in the criteria for most accrediting agencies.
• Colleges and universities can no longer wait until 1–2 years before an accreditation visit and “gear up” for it.
• Colleges and universities are starting to get in trouble with their accrediting agencies.
• It’s going to get worse before it gets better.

What Do the Accrediting Agencies Want?
Mandate for Identifying, Measuring and Using Outcome Data
Criteria charted for each accrediting body: Identify Outcomes, Assess Outcomes, Analyze Results, Use for Improvement
• Middle States: 7, 11, 12, 13, 14
• New England: 4.18, 4.28, 4.44, 4.45
• North Central: 2c, 3a, 4b, 4c
• Northwest: 2b, policy 2.2
• Southern: 2.5, 3.3.1, 3.5.1
• Western: 4.6, 4.7, 4.8
Source: Gita Wijesinghe, Florida A&M

The Reality
• At our institutions, our policies and practices are set up to perfectly deliver the results we are currently achieving.
• Are they good enough?

I ask you: if you are going to ask your faculty to participate in and embrace institutional effectiveness, to invest the time to develop effective processes, and to assess, analyze and use results, then once it is all done, what would you want to know?

Here is what others have said:
• What characteristics should our graduates have, and do they have them?
• Are our students learning?
• Are our students succeeding?
• Are some programs having trouble?
• What will programs need to succeed in the future?
• What is new and innovative coming our way (by discipline)?
• Where should our enrollments be (growing vs. declining)?
• What are the costs?

Institutional Effectiveness
• Is a way of thinking… a philosophy
• Is the way an institution keeps its finger on the pulse of its various communities
• It is about student success and student learning.
• It is mostly about an institution’s continuous quest for quality, efficiency, effectiveness and innovation.

Institutional Effectiveness
• It answers the questions:
  – Are our students learning what we intended for them to learn?
  – Can they apply what they learned in the real world?
  – Are we serving our students well?
  – How can we improve, innovate and create?
  – What is the real value of an education obtained from this institution?

Institutional Effectiveness
• There is no prescription: no commonly accepted set of practices or processes
• Can be culture-changing for serious institutions
• Is a unique system of questions and inquiry
• Means looking at the questions before we come up with answers
• Means looking at the data
• Facilitates a culture of evidence

Educating Students
• Is a developmental process
• Unique to each institution
• Yet with some common core values
• It is like parenting
  – Would you want anyone to give your children an exam that measured how good a parent you were, or the quality of your family life?
  – Your first question would be: who decided what a “good parent” is, or what qualities are acceptable in families? While we all hold some common values about families and children, we are very different.

Difficulty for Faculty
• Issues to discuss/work on:
  – What do we value?
  – How do we operationally define it?
  – Can we all agree on a few characteristics we value and measure them?
• Is that enough?
• Who decides if it is enough?
• How much more are we going to add to their plate without removing anything?
Effective Institutions
• Don’t make decisions or change policy or practice without the evidence to give them direction
• When they start something new, they evaluate it heavily to make sure it produces the desired effect
• When things don’t work, they fix them
• Don’t set students up to fail (policy and practice)
• Create a continuous flow of information to the opinion leaders, decision-makers and stakeholders
• Anticipate change and are prepared
• Listen to their front-line employees, those most in touch with their students/customers/clients (faculty and counselors/advisors)

Good Practices
• Institutions that fully participate in IE:
  – Figure out why students are not progressing and make changes
  – Discover student barriers to success and remove them
  – Assess effective teaching modalities and expand and enhance them
  – Openly uncover their weaknesses and work toward strengths

It Is All About…
• Your students
• Their success
• Faculty and staff as facilitators
• The quality of your institution
• The responsibility we have to taxpayers, students, our community and state
• A desire for continuous improvement

It Is Not About
• Doing what we have always done but in a different format
• A prescribed set of assessment tools
• Filling out forms and putting documents in order
• Paying lip service to processes
• Getting through an accreditation visit
• This is so much bigger than an accreditation process or agency

Four Most Important Elements
• Support from the administration
• “Buy in” and trust from the grassroots level: the development of relationships
• Freedom and fairness for those being reviewed
• Effective processes that work for your institution and that are supported and followed

Support from the Administration
• If you don’t have it, nothing works
• It is hardest where there is the unspoken rule “no bad news”
• Faculty/staff can’t fight like salmon swimming upstream
• You have to be creative and develop perseverance
• You are there to get the job done
• Sometimes it takes more than once for your message to get across

Support from the Administration, cont.
• To obtain this support, you have to:
  – Know what you are doing
  – Develop simple and clear processes
  – Fit the “administrative response” into your processes
  – Report results to gain visibility
  – Show how this relates to the bottom line and will increase enrollment growth and retention

Buy In From the Grassroots Level
• Allow the faculty to create the process themselves (as much as possible)
• You have to believe that what they are doing is critical to the success of the institution
• You have to understand and validate that “they don’t have time for this”
• Build friendships and trust. Function as a helper.
• Be a faculty advocate and be proactive on their issues

Buy In From the Grassroots Level, cont.
• Never be perceived as the one who is “grading their performance”
• Roll up your sleeves and get involved
• Seek to understand their issues
• Give them tools and templates; put things online
• Create rewards
• Realize their #1 role is to teach, not to be assessment experts

Freedom and Fairness
• Never use data against them
• Give them as much input as possible; give them flexibility when you can
• Allow them to measure what they believe is important
• Aggregate data when necessary
• Try to get the message across: “This is a process with the goal of a continuous quest for quality. It is okay if things are not perfect; then we know where strategies for improvement need to be utilized.”

Effective Processes
• Why develop a process?
  – There is an expectation of a due date
  – There is a format to follow that utilizes standards
  – Processes usually have outlines and calendars
  – Processes can be revised to include everything important to the institution

Typical Processes
• A Mission Statement
• A Strategic Plan
  – Institutional Goals and Outcomes
• Annual Goal/Objective Cycle for All College Units/Departments
• Annual Research and Assessment Reports
• Program, Learning and Administrative Outcomes
  – Collect through processes
• General Education Assessment
• Program/Unit Review
• Outcome Assessment Matrices

Today we are going to talk about:
• Two areas critical to instruction/academic affairs:
  – The Development and Assessment of Learning Outcomes
  – The Development and Assessment of General Education Learning Outcomes

Why Are We Moving from Goals to Outcomes?
• Outcomes are program-specific
• They measure the effect of classroom activities and services provided
• Outcomes represent a new way of thinking
• Outcomes have become widely accepted by our various publics
• They are here to stay
• They are skills-based variables you can observe, measure, scale or score

Program Outcome Model
• INPUTS (resources): Staff FTE, Facilities, State funds, Ability of Students
• ACTIVITIES (services): Education (classes), Services, Counseling, Student activities
• OUTPUTS (products or results of activities): Numbers served, FTE (input next year), # Classes taught, # Students recruited
• Constraints: Laws, State regulations

Theory of Change Model
Program Outcomes Model: INPUTS → ACTIVITIES → OUTPUTS → OUTCOMES
• OUTCOMES: Benefits for People (outcomes answer the “so what?” question)
  – New knowledge
  – Increased skills
  – Changes in values
  – Modified behavior
  – Improved condition
  – Altered status
  – New opportunities

Inputs through Outcomes: The Conceptual Chain
Inputs → Activities → Outputs → Initial Outcomes → Intermediate Outcomes → Long-range Outcomes

Different Types of Outcomes
• Learning Outcomes (can be at course, program or institutional level)
• Program Outcomes
• Administrative Outcomes
Definitions and Examples
• Learning Outcomes:
  – The changes in knowledge, skills, attitude, awareness, condition, position, etc. that occur as a result of the learning that takes place in the classroom. These are direct benefits to students.
  – Examples: general learning skills (e.g., improved writing and speaking abilities), ability to apply learning to the work environment (e.g., demonstrating skills in co-op), and program-specific skills developed or enhanced (e.g., taking blood pressure)

Definitions and Examples, cont.
• Program Outcomes:
  – The benefits that result from the completion of an entire program or series of courses. Are there benefits for students who get the entire degree versus those who take a few courses? If so, what are they?
  – Typical examples are licensure pass rates, employment rates, acceptance into 4-year schools or graduate programs, lifelong learning issues, content mastery, and contributions to society, the profession, etc.

Definitions and Examples, cont.
• Administrative Outcomes:
  – Units/programs want to improve services or approach an old problem in a new way.
  – They want to become more efficient and effective.
  – They establish an outcome objective for the administration.
• Typical examples are:
  – All faculty will attend one professional meeting annually so they can stay up-to-date in their field, or:
  – Counseling wants to recruit a new counselor with expertise in working with first-generation students, or:
  – Facilities services wants students, faculty and staff to feel that they are safe on campus.

Why Is This Hard?
• Because it is education
• Because the best results may not happen for years
• Because we are so busy doing what we are doing… we forget why we are doing it

Assessment
• “The assessment of student learning can be defined as the systematic collection of information about student learning, using the time, knowledge, expertise, and resources available, in order to inform decisions about how to improve learning.” (p. 2)
Source: Assessment Clear and Simple: A Practical Guide for Institutions, Departments and General Education by Barbara E. Walvoord, 2004

Assessment Characteristics
• It is intended not to generate broad theories but to inform action.
• Educational situations contain too many variables to make “proof” possible.
• Assessment gathers indicators that will be useful for decision making.
• It is not limited to learning that can be objectively tested. A department can state its highest goals and seek the best available indicators about whether those goals are being met.
• It does not require standardized tests or objective measures.
• Faculty regularly assess complex work in their fields and make judgments about its quality.
• Faculty can make informed professional judgments about critical thinking, scientific reasoning, or other qualities in student work, and use those judgments to inform departmental and institutional decisions.
(p. 2)
Source: Assessment Clear and Simple: A Practical Guide for Institutions, Departments and General Education by Barbara E. Walvoord, 2004

Assessment Characteristics, cont.
• Assessment means basing decisions about curriculum, pedagogy, staffing, advising and student support on the best possible data about student learning and the factors that affect it.
• A lot of assessment is already going on in responsible classrooms, departments, and institutions, though we have not always called it that.
• Assessment can move beyond the classroom to become program assessment:
  – Classroom assessment: a faculty member evaluates her own students’ assignments in the capstone course and uses the information to improve her own teaching the next semester.
  – Program assessment: a faculty member evaluates her own students’ assignments in the capstone course, outlining the strengths and weaknesses of the students’ work in relationship to departmental learning goals. The department uses the data to inform decisions about curriculum and other factors that affect student learning.
(pp. 2–3)
Source: Assessment Clear and Simple: A Practical Guide for Institutions, Departments and General Education by Barbara E. Walvoord, 2004

Methods of Assessment
• Course-embedded assessments
• Written works
• Student journals
• Speeches
• Skills-based assessment (demonstrated skills)
• Observation checklists
• Teamwork assessments
• Surveys that ask about specific behaviors indicative of changes in values and attitudes
  – Not self-evaluation of actual learning

Great Fallacy #1
• Grades
  – In this day of social promotion, grade inflation and different teaching/learning philosophies, grades tell you virtually nothing.
  – They are not a measure of outcome achievement.
  – Two teachers will grade a student differently for the exact same work.
  – They cannot be used!

Great Fallacy #2
• The evaluation of teaching
  – Only tells you who is happy… the “happiness coefficient”
  – Measures more about the business and science of teaching, not learning
  – Learning outcomes are not measures of teacher effectiveness or of students’ satisfaction with the teaching/learning process
  – Learning outcomes are skills-based
  – Students’ opinions are poor indicators if students are asked to self-assess
    • “In your opinion, are you more accepting of cultural differences in people since completing your coursework at the college?”
    • It is why we don’t ask faculty, “On a scale of 1–5, how good of a teacher do you feel you are?”

Great Fallacy #3
• I have 65 learning objectives on my syllabus
• The faculty has established that when students complete ENG 111, they will have met these 65 objectives, so we are establishing and measuring outcomes
• What is the problem with this?
How to Get Faculty On Board
• Get them involved at the first step
• Give them as much control as possible
• Allow for a few tantrums, then hit it again
• Allow them to create over an adequate period of time
• Make use of opinion leaders
• Help them understand “why”
• Expect them to do an excellent job
• Start with what you have: what you’re already doing
• There is no magic bullet
• There is no one-size-fits-all with outcomes

To Move Quickly
• Create several committees made up mostly of faculty and your IR/IE/assessment officers
  – Examples:
    • General education committee
    • Learning outcomes committee
    • Program review committee
• Give them an assignment:
  – Find as many good examples of learning outcomes as you can from various sources (other colleges, accrediting agencies, the literature, standardized tests [my least favorite], national organizations) and bring them back to the group.

From That List, Establish Values
• Are any of these relevant to our students?
• Can we reword them to make them relevant to our students?
• Are we teaching these concepts/constructs?
• If so, where?
• How can we assess them?
• How can we use the results?
• Of this list, what is the most important to us?
• What would it be best to know?
• How much can we do with our current staffing and resources?

Sources of Ideas for Outcomes
• Program documents
• Program faculty and staff
• National associations/credentialing boards
• Key volunteers
• Former students
• Parents of students
• Records of complaints
• Programs/agencies/employers that are the next step for your students
• Other colleges with programs, services and students similar to yours
• Outside observers of your program in action

How Often?
• Should we measure student learning outcomes every year? Every semester?
• What are the benefits and barriers of different timing?
• When does measurement become too time-consuming?
• Units need time to put into effect the changes made as a result of outcome assessment before they are thrown back into another cycle. They need time to reflect on changes and results.

Disappointing Outcome Findings: Why Didn’t We Meet Our Objectives?
Internal Factors:
• Sudden faculty/staff turnover
• New teaching philosophy/strategy
• Curricular change (campus move)
• Unrealistic outcome targets
• Measurement problems (lack of follow-through, no effective tracking)

Disappointing Outcome Findings, cont.
External Factors:
• Community unemployment increases
• State funding changes
• Related programs (BS or MS programs) close
• Public transportation increases fares or shuts down some routes serving your campus or time slot
• Employment trends change

Failing to meet your learning outcome objectives is sometimes the best thing that can happen to you! Why?

Use Your Findings
Internal Uses for Outcome Findings
• Provide direction for curricular changes
• Improve educational and support programs
• Identify training needs for staff and students
• Support annual and long-range planning
• Guide budgets and justify resource allocations
• Suggest outcome targets (expected change)
• Focus board members’ attention on programmatic issues
• Help the college expand its most effective services
• Facilitate an atmosphere of change within the institution

An Example from One College Program
• Workplace Literacy Program
  – This program is a literacy initiative that goes directly into the worksite and teaches ESL classes, GED prep and GED classes. It serves mostly immigrants.
• During their first attempt at outcome assessment, they surveyed both employers and students.
• This was the first time they had ever done this.

What They Learned
• Employers said:
  – 43.8% of employers reported increases in employee performance as a result of participation in the program.
  – 31.3% reported a reduction in absenteeism by participants.
  – 87.5% said classes improved the morale of their employees.
  – 37.5% said participants received raises.
  – 50% said communication had improved.
What Students Said
• 70.2% reported being able to fill out job forms better
• 35.5% said they could now help their children with their homework
• 91.1% said they felt better about themselves
• 44.4% said they had received a raise, promotion or opportunity as a result of the courses
• 86.3% said their ability to communicate in the workplace had improved

What Has Happened Since
• Their assessment data have appeared in their marketing brochures to employers.
• Their enrollment has grown dramatically.
• They have received funding and marketing support from their local Chamber of Commerce and are considered a model adult literacy program.

External Uses of Outcome Findings
• Recruit talented faculty and staff
• Promote college programs to potential students
• Identify partners for collaboration (hospitals, businesses, etc.)
• Enhance the college’s public image
• Retain and increase funding
• Garner support for innovative efforts
• Win designation as a model or demonstration site

Good Sources for Outcomes (besides your own faculty)
If you can’t come up with anything good…
• Steal it from someone else who did.
• Two good sources:
  – Longwood University
    201 High Street, Farmville, Virginia 23909
    Phone: (434) 395-2000
    http://www.longwood.edu/gened/15goals.html
  – Pellissippi State Technical Community College
    10915 Hardin Valley Road, Knoxville, TN 37933-0990
    Phone: 865-694-6400
    http://www.pstcc.edu/departments/curriculum_and_instruction/currinfo/general-ed-outcomes.html

Typical General Education Goals
• Basic computer/technology skills
• Information literacy
• Critical thinking/analytical thinking
• Effective communication (both oral and written)
• Moral character and values
• Cultural diversity
• Workforce skills
• Reading and comprehension
• Appreciation for the fine arts
• Computational skills
• Oral presentation skills
• Teamwork skills
• Leadership skills
• Social awareness
• Interpersonal skills
• Self-management
• Understanding scientific reasoning/the scientific process or way of knowing
• Lifelong learning skills

Look at Two General Education Processes in Your Handouts

Top Ten Skills for the Future
• Work ethic, including self-motivation and time management.
• Physical skills, e.g., maintaining one’s health and good appearance.
• Verbal (oral) communication, including one-on-one and in a group.
• Written communication, including editing and proofing one’s work.
• Working directly with people, relationship building, and team work.
• Influencing people, including effective salesmanship and leadership.
• Gathering information through various media and keeping it organized.
• Using quantitative tools, e.g., statistics, graphs, or spreadsheets.
• Asking and answering the right questions, evaluating information, and applying knowledge.
• Solving problems, including identifying problems, developing possible solutions, and launching solutions.
The Futurist Update (Vol. 5, No. 2), an e-newsletter from the World Future Society, quotes Bill Coplin on the “ten things employers want [young people] to learn in college.”

Learning Outcomes for the 21st Century
Students in the 21st century will need to be proficient in:
• Reading, writing, speaking and listening
• Applying concepts and reasoning
• Analyzing and using numerical data
• Citizenship, diversity/pluralism
• Local, community, global, environmental awareness
• Analysis, synthesis, evaluation, decision-making, creative thinking
• Collecting, analyzing and organizing information
• Teamwork, relationship management, conflict resolution and workplace skills
• Learning to learn, understanding and managing self, management of change, personal responsibility, aesthetic responsiveness and wellness
• Computer literacy, internet skills, information retrieval and information management
(The League for Innovation’s 21st Century Learning Outcomes Project)

Challenges
• Identifying and defining outcomes is the easy part.
• The devil is in the details.
• How do we track it, where does it all go, how do we score it, compile it, and turn it into a comprehensive report?
• How do we “demonstrate improvement in institutional quality”?

Things to Remember
• Outcome measurement must be initiated from the unit/department level (this promotes ownership of the process).
• Measure only what you are teaching or facilitating.
• Measure what is “important” to you or your program.
• Be selective (only 2–3 outcomes for a course, a select list for programs and institutional outcomes).
• Put as much time into “thinking through” the tracking process as you do into the definition of outcomes.
• Spend the time up front in planning and the process will flow smoothly.
• It will prove to be energy well spent.

Remember
• We do not do outcome assessment/evaluation so we can say we did it.
• We do it for only one reason:
  – To improve programs and services

Where Colleges Get in Trouble
• Overkill: they evaluate everything that walks and breathes every semester in every area.
• No time to “reflect” before they enter back into another assessment cycle.
• No focus on “use of results.”
• No ability to track results and tally them across the college.

Contact Information
• Copy of presentation:
  – www.cpcc.edu/planning
  – Click on “studies and reports”
  – Posted as “AACC NCIA session”
• CPCC’s IE site:
  – www.cpcc.edu/IE
• Terri Manning
  – terri.manning@cpcc.edu
  – (704) 330-6592