(1) for the - University of Oxford Department of Education


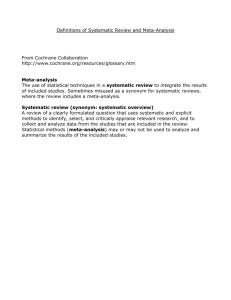

ESRC Workshop, Researcher Development Initiative
Prof. Herb Marsh, Ms. Alison O’Mara, Dr. Lars-Erik Malmberg
2 June 2008
Department of Education, University of Oxford

ESRC RDI One Day Meta-analysis workshop (Marsh, O’Mara, Malmberg)

• What is meta-analysis
• When and why we use meta-analysis
• Examples of meta-analyses
• Benefits and pitfalls of using meta-analysis
• Defining a population of studies and finding publications
• Coding materials
• Inter-rater reliability
• Computing effect sizes
• Structuring a database
• A conceptual introduction to analysis and interpretation of results based on fixed effects, random effects, and multilevel models
• Supplementary analyses

Traditionally, education researchers collect and analyse their own data (referred to as primary data). Secondary data analysis is based on data collected by someone else (or, perhaps, reanalysis of your own published data). There are at least four logical perspectives on this issue:
1. Meta-analysis: systematic, quantitative review of published research in a particular field; the focus of this presentation.
2. Systematic review: systematic, qualitative review of published research in a particular field.
3. Secondary data analyses: using large (typically public) databases.
4. Reanalyses of published studies (often in ways critical of the original study).

Wilson & Lipsey (2001) synthesised 319 meta-analyses of intervention studies. Across the studies, roughly equal amounts of variance were due to:
• substantive features of the intervention (true differences),
• method effects (idiosyncratic study features and potential biases, particularly research design and operationalisation of outcome measures), and
• sampling error.
They concluded: "These results underscore the difficulty of detecting treatment outcomes, the importance of cautiously interpreting findings from a single study, and the importance of meta-analysis in summarizing results across studies" (p. 413).

Meta-analysis is an increasingly popular tool for summarising research findings:
• Cited extensively in the research literature
• Relied upon by policymakers
• Important that we understand the method, whether we conduct or simply consume meta-analytic research
• Should be one of the topics covered in all introductory research methodology courses

What is meta-analysis? When and why do we use meta-analysis?

Meta-analysis is a systematic synthesis of various studies on a particular research question (e.g., do boys or girls have higher self-concepts?):
• Collect all studies relevant to a topic (e.g., find all published journal articles on the topic).
• An effect size is calculated for each outcome (e.g., determine the size and direction of the gender difference for each study).
• "Content analysis": code characteristics of the study, such as age, setting, ethnicity, self-concept domain (math, physical, social), etc.
• Effect sizes with similar features are grouped together and compared; this tests moderator variables (e.g., do gender differences vary with age, setting, ethnicity, self-concept domain, etc.?).

Coding: the process of extracting the information from the literature included in the meta-analysis.
Involves noting the characteristics of the studies in relation to a priori variables of interest (qualitative).

Effect size: the numerical outcome to be analysed in a meta-analysis; a summary statistic of the data in each study included in the meta-analysis (quantitative).

Summarise effect sizes: central tendency, variability, relations to study characteristics (quantitative).

• 1904: quantitative literature review by Pearson
• 1977: first modern meta-analysis published by Smith & Glass (1977)
• Mid-1980s: methods develop (e.g., Hedges, Olkin, Hunter, & Schmidt)
• 1990s: explosion in popularity, especially in medical research

Karl Pearson conducted what is reputed to be the first meta-analysis (although not called this), comparing the effects of inoculation in different settings.

Gene Glass coined the phrase "meta-analysis" in a classic study of the effects of psychotherapy. Because most individual studies had small sample sizes, the effects typically were not statistically significant. Results of 375 controlled evaluations of psychotherapy and counselling were coded and integrated statistically. The findings provide convincing evidence of the efficacy of psychotherapy. On the average, the typical therapy client is better off than 75% of untreated individuals. Few important differences in effectiveness could be established among many quite different types of psychotherapy (e.g., behavioral and non-behavioral).

• The essence of good science is replicable and generalisable results.
• Do we get the same answer to important research questions when we run the study again?
• A primary aim of meta-analysis is to test the generalisability of results across a set of studies designed to answer the same research question.
• Are the results consistent? If not, what are the differences in the studies that explain the lack of consistency?

A primary aim is to reach a conclusion to a research question from a sample of studies that is generalisable to the population of all such studies. Meta-analysis tests whether study-to-study variation in outcomes is more than can be explained by random chance. When there is systematic variation in outcomes from different studies, meta-analysis tries to explain these differences in terms of study characteristics, e.g., measures used, study design, participant characteristics, and controls for potential bias.

Meta-analysis can be used when:
• There exists a critical mass of comparable studies designed to address a common research question.
• Data are presented in a form that allows the meta-analyst to compute an effect size for each study.
• Characteristics of each study are described in sufficient detail to allow meta-analysts to compare characteristics of different studies and to judge the quality of each study.

The number of meta-analyses is increasing at a rapid rate.

Where are meta-analyses done? All over the world.

All disciplines do meta-analyses, but they are very popular in medicine.

The number and frequency of citations are increasing in Education.

The number and frequency of citations are increasing in Psychology.

Amato, P. R., & Keith, B. (1991). Parental divorce and the well-being of children: A meta-analysis. Psychological Bulletin, 110, 26-46.
Times Cited: 471

Linn, M. C., & Petersen, A. C. (1985). Emergence and characterization of sex differences in spatial ability: A meta-analysis. Child Development, 56, 1479-1498. Times Cited: 570

Johnson, D. W., et al. (1981). Effects of cooperative, competitive, and individualistic goal structures on achievement: A meta-analysis. Psychological Bulletin, 89, 47-62. Times Cited: 426

Tett, R. P., Jackson, D. N., & Rothstein, M. (1991). Personality measures as predictors of job performance: A meta-analytic review. Personnel Psychology, 44, 703-742. Times Cited: 387

Hyde, J. S., & Linn, M. C. (1988). Gender differences in verbal ability: A meta-analysis. Psychological Bulletin, 104, 53-69. Times Cited: 316

Iaffaldano, M. T., & Muchinsky, P. M. (1985). Job satisfaction and job performance: A meta-analysis. Psychological Bulletin, 97, 251-273. Times Cited: 263

De Wolff, M., & van IJzendoorn, M. H. (1997). Sensitivity and attachment: A meta-analysis on parental antecedents of infant attachment. Child Development, 68, 571-591. Times Cited: 340

Wellman, H. M., Cross, D., & Watson, J. (2001). Meta-analysis of theory-of-mind development: The truth about false belief. Child Development, 72, 655-684. Times Cited: 276

Cohen, E. G. (1994). Restructuring the classroom: Conditions for productive small groups. Review of Educational Research, 64, 1-35. Times Cited: 235

Hansen, W. B. (1992). School-based substance abuse prevention: A review of the state of the art in curriculum, 1980-1990. Health Education Research, 7, 403-430. Times Cited: 207

Kulik, J. A., Kulik, C.-L., & Cohen, P. A. (1980). Effectiveness of computer-based college teaching: A meta-analysis of findings. Review of Educational Research, 50, 525-544. Times Cited: 198

Sheppard, B. H., Hartwick, J., & Warshaw, P. R. (1988).
The theory of reasoned action: A meta-analysis of past research with recommendations for modifications and future research. Journal of Consumer Research, 15, 325-343. Times Cited: 515

Jackson, S. E., & Schuler, R. S. (1985). A meta-analysis and conceptual critique of research on role ambiguity and role conflict in work settings. Organizational Behavior and Human Decision Processes, 36, 16-78. Times Cited: 401

Tornatzky, L. G., & Klein, K. J. (1994). Innovation characteristics and innovation adoption-implementation: A meta-analysis of findings. IEEE Transactions on Engineering Management, 29, 28-4. Times Cited: 269

Lowe, K. B., Kroeck, K. G., & Sivasubramaniam, N. (1996). Effectiveness correlates of transformational and transactional leadership: A meta-analytic review of the MLQ literature. Leadership Quarterly, 7, 385-425. Times Cited: 203

Churchill, G. A., Ford, N. M., Hartley, S. W., et al. (1985). The determinants of salesperson performance: A meta-analysis. Journal of Marketing Research, 22, 103-118. Times Cited: 189

Jadad, A. R., Moore, R. A., Carroll, D., et al. (1996). Assessing the quality of reports of randomized clinical trials: Is blinding necessary? Controlled Clinical Trials, 17, 1-12. Times Cited: 2,008

Boushey, C. J., Beresford, S. A. A., Omenn, G. S., et al. (1995). A quantitative assessment of plasma homocysteine as a risk factor for vascular disease: Probable benefits of increasing folic acid intakes. JAMA: Journal of the American Medical Association, 274, 1049-1057. Times Cited: 2,128

Alberti, W., Anderson, G., Bartolucci, A., et al. (1995). Chemotherapy in non-small-cell lung cancer: A meta-analysis using updated data on individual patients from 52 randomized clinical trials. British Medical Journal, 311, 899-909. Times Cited: 1,591

Block, G., Patterson, B., & Subar, A. (1992). Fruit, vegetables, and cancer prevention: A review of the epidemiologic evidence. Nutrition and Cancer: An International Journal, 18, 1-29.
Times Cited: 1,422

Question: Does feedback from university students’ evaluations of teaching lead to improved teaching?
• Teachers are randomly assigned to experimental (feedback) and control (no feedback) groups.
• The feedback group gets ratings, augmented, perhaps, with personal consultation.
• Groups are compared on subsequent ratings and, perhaps, other variables.
• Feedback teachers improved their teaching effectiveness by .3 standard deviations compared to control teachers on the Overall Rating item, with even larger differences for ratings of Instructor Skill, Attitude Toward Subject, and Student Feedback.
• Studies that augmented feedback with consultation produced substantially larger differences, but other methodological variations had little effect.

Question: What is the correlation between university teaching effectiveness and research productivity?
Based on 58 studies and 498 correlations:
• The mean correlation between teaching effectiveness (mostly based on students’ evaluations of teaching) and research productivity was almost exactly zero.
• This near-zero correlation was consistent across different disciplines, types of university, indicators of research, and components of teaching effectiveness.
• This meta-analysis was followed by a primary data study (Marsh & Hattie, 2002) to evaluate the theoretical model more fully.

There is contention about global self-esteem versus multidimensional, domain-specific self-concept. Traditional reviews and previous meta-analyses of self-concept interventions have underestimated effect sizes by using an implicitly unidimensional perspective that emphasizes global self-concept. We used meta-analysis and a multidimensional construct validation approach to evaluate the impact of self-concept interventions for children in 145 primary studies (200 interventions).
Overall, interventions were significantly effective (d = .51, 460 effect sizes). However, interventions targeting a specific self-concept domain and subsequently measuring that domain were much more effective (d = 1.16). This supports a multidimensional perspective of self-concept.

Predictors of sexual, nonsexual violent, and general (any) recidivism:
• Examined 82 recidivism studies.
• Identified deviant sexual preferences and antisocial orientation as the major predictors of sexual recidivism for both adult and adolescent sexual offenders.
• Antisocial orientation was the major predictor of violent recidivism and general (any) recidivism.
• Concluded that many of the variables commonly addressed in sex offender treatment programs (e.g., psychological distress, denial of sex crime, victim empathy, stated motivation for treatment) had little or no relationship with sexual or violent recidivism.

“Epidemiologic studies have suggested that folate intake decreases risk of cardiovascular diseases. However, the results of randomized controlled trials on dietary supplementation with folic acid to date have been inconsistent.”
• Included 12 randomised controlled trials.
• The overall relative risks of outcomes for patients treated with folic acid supplementation compared with controls were non-significant for cardiovascular diseases, coronary heart disease, stroke, and all-cause mortality.
• Concluded that folic acid supplementation does not reduce the risk of cardiovascular diseases or all-cause mortality among participants with a prior history of vascular disease.

In lekking species (those that gather for competitive mating), a male's mating success can be estimated as the number of females that he copulates with.
The aim of the study was to find predictors of mating success in lekking species through analysis of 48 studies.
• Behavioural traits such as male display activity, aggression rate, and lek attendance were positively correlated with male mating success.
• The size of "extravagant" traits, such as birds' tails and ungulate antlers, and age were also positively correlated with male mating success.
• Territory position was negatively correlated with male mating success, such that males with territories close to the geometric centre of the leks had higher mating success than other males.
• Male morphology (a measure of body size) and territory size showed small effects on male mating success.

Compared to traditional literature reviews:
(1) a definite methodology is employed in the research analysis (more like that used in primary research); and
(2) the results of the included studies are quantified on a standard metric, allowing statistical techniques to be used for further analysis.
Therefore the process of reviewing research literature is more objective, transparent, and replicable; it is less biased and less idiosyncratic to the whims of a particular researcher.

Cameron, J., & Pierce, W. D. (1994). Reinforcement, reward, and intrinsic motivation: A meta-analysis. Review of Educational Research, 64, 363-423.

Ryan, R., & Deci, E. L. (1996). When paradigms clash: Comments on Cameron and Pierce's claim that rewards do not undermine intrinsic motivation. Review of Educational Research, 66, 33-38.

Cameron, J., & Pierce, W. D. (1996). The debate about rewards and intrinsic motivation: Protests and accusations do not alter the results. Review of Educational Research, 66, 39-51.

Deci, E. L., Koestner, R., & Ryan, R. (2001). Extrinsic rewards and intrinsic motivation in education: Reconsidered once again. Review of Educational Research, 71, 1-27.

Cameron, J. (2001).
Negative effects of reward on intrinsic motivation: A limited phenomenon: Comment on Deci, Koestner, and Ryan. Review of Educational Research, 71, 29-42.

• Increased power: by combining information from many individual studies, the meta-analyst is able to detect systematic trends not obvious in the individual studies.
• Conclusions based on the set of studies are likely to be more accurate than those based on any one study.
• Improved precision: based on information from many studies, the meta-analyst can provide a more precise estimate of the population effect size (and a confidence interval).
• Provides potential corrections for potential biases, measurement error, and other possible artefacts.
• Identifies directions for further primary studies to address unresolved issues.
• Able to establish generalisability across many studies (and study characteristics).
• Typically there is study-to-study variation in results. When this is the case, the meta-analyst can explore what characteristics of the studies explain these differences (e.g., study design) in ways not easy to do in individual studies.
• Easy-to-interpret summary statistics (useful when communicating findings to a non-academic audience).

Studies that are published are more likely to report statistically significant findings. This is a source of potential bias.
The debate about using only published studies: peer-reviewed studies are presumably of a higher quality, versus significant findings are more likely to be published than non-significant findings.
There is no agreed-upon solution. However, one should retrieve all studies that meet the eligibility criteria and be explicit about how publication bias was dealt with. Some methods for dealing with publication bias have been developed (e.g., Failsafe N, Trim and Fill method).
Meta-analyses are mostly limited to studies published in English. Juni et al. (2002) evaluated the implications of excluding non-English publications in meta-analyses of randomised clinical trials.
• In 50 meta-analyses, treatment effects were modestly larger in non-English publications (16%).
• However, study quality was also lower in non-English publications.
• Effects were sufficiently small not to have much influence on treatment effect estimates, but may make a difference in some reviews.

Increasingly, meta-analysts evaluate the quality of each study included in a meta-analysis.
• Sometimes this is a global holistic (subjective) rating. In this case it is important to have multiple raters to establish inter-rater agreement (more on this later).
• Sometimes study quality is quantified in relation to objective criteria of a good study, e.g., larger sample sizes; more representative samples; better measures; use of random assignment; appropriate control for potential bias; double blinding; and low attrition rates (particularly for longitudinal studies).

In a meta-analysis of Social Science meta-analyses, Lipsey & Wilson (1993) found an effect size of .50. They evaluated how this was related to study quality:
• For meta-analyses providing a global (subjective) rating of the quality of each study, there was no significant difference between high- and low-quality studies; the average correlation between effect size and quality was almost exactly zero.
• There was almost no difference between effect sizes based on random and non-random assignment (effect sizes slightly larger for random assignment).
• The only study quality characteristic to make a difference was the one-group pre/post design with no control group at all, which produced positively biased effects.

Goldring (1990) evaluated the effects of gifted education programs on achievement. She found a positive effect, but emphasised that findings were questionable because of weak studies:
• 21 of the 24 studies were unpublished and only one used random assignment.
• Effects varied with matching procedures: the largest effects for achievement outcomes were for studies in which differences between non-equivalent groups were controlled by only one pretest variable. Effect sizes reduced as the number of control variables increased and disappeared altogether with random assignment.
• Goldring (1990, p. 324) concluded that policy makers need to be aware of the limitations of the GAT literature.

Schulz (1995) evaluated study quality in 250 randomized clinical trials (RCTs) from 33 meta-analyses. Poor-quality studies led to positively biased estimates: lack of concealment (30-41%), lack of double-blind (17%), participants excluded after randomization (NS).

Moher et al. (1998) reanalysed 127 RCTs from 11 meta-analyses for study quality. Low-quality trials resulted in significantly larger effect sizes, a 30-50% exaggeration in estimates of treatment efficacy.

Wood et al. (2008) evaluated study quality in 1346 RCTs from 146 meta-analyses.
• Subjective outcomes: inadequate/unclear concealment and lack of blinding resulted in substantial biases.
• Objective outcomes: no significant effects.
• Conclusion: systematic reviewers should assess risk of bias.

Meta-analyses should always include subjective and/or objective indicators of study quality. In Social Sciences there is some evidence that studies with highly inadequate control for preexisting differences lead to inflated effect sizes.
However, it is surprising that other indicators of study quality make so little difference. In medical research, studies are largely limited to RCTs, where there is much more control than in social science research. Here there is evidence that inadequate concealment of assignment and lack of double-blind inflate effect sizes, but perhaps only for subjective outcomes. These issues are likely to be idiosyncratic to individual discipline areas and research questions.

• Defining a population of studies and finding publications
• Coding materials
• Inter-rater reliability
• Computing effect sizes
• Structuring a database

Steps in a meta-analysis:
1. Establish research question
2. Define relevant studies
3. Develop code materials
4. Locate and collate studies
5. Pilot coding; coding
6. Data entry and effect size calculation
7. Main analyses
8. Supplementary analyses

• Comparison of treatment and control groups? What is the effectiveness of a reading skills program for a treatment group compared to an inactive control group?
• Pretest-posttest differences? Is there a change in motivation over time?
• What is the correlation between two variables? What is the relation between teaching effectiveness and research productivity?
• Moderators of an outcome? Does gender moderate the effect of a peer-tutoring program on academic achievement?

Do you wish to generalise your findings to other studies not in the sample? Do you have multiple outcomes per study?
e.g.:
• achievement in different school subjects
• 5 different personality scales
• multiple criteria of success
Such questions determine the choice of meta-analytic model:
• fixed effects
• random effects
• multilevel

Need to have explicit inclusion and exclusion criteria:
• The broader the research domain, the more detailed they tend to become.
• Refine criteria as you interact with the literature.
• Components of detailed search criteria: distinguishing features; research respondents; key variables; research methods; cultural and linguistic range; time frame; publication types.

• Search electronic databases (e.g., ISI, Psychological Abstracts, Expanded Academic ASAP, Social Sciences Index, PsycINFO, and ERIC).
• Examine the reference lists of included studies to find other relevant studies.
• If including unpublished data, email researchers in your discipline, take advantage of Listservs, and search Dissertation Abstracts International.

The following is one possible way to write up the search procedure (see LeBlanc & Ritchie, 2001):
1. Electronic search strategy (e.g., PsycINFO and Dissertation Abstracts). Provide the years included in the database.
2. Keywords and limitations of the search (e.g., language).
3. Additional search methods (e.g., mailing lists).
4. Exclusion criteria (e.g., must contain control group).
5. Yield of the search: the number of studies found. Ideally, also mention how many were excluded from the meta-analysis and why.

The inclusion process usually requires several steps to cull inappropriate studies. Example from Bazzano, L. A., Reynolds, K., Holder, K. N., & He, J.
(2006). Effect of folic acid supplementation on risk of cardiovascular diseases: A meta-analysis of randomized controlled trials. JAMA, 296, 2720-2726.

You can report the inclusion/exclusion process using text rather than a flow chart, but it is not as easy to follow if it is an elaborate process. As a minimum, report the original sample and final yield (in this case, original = 139, final = 22).

Code sheet (example):
Study ID: 1
Year of publication: 99
Publication type (1-5): 2
Geographical region (1-7): 1
Total sample size: 87
Total number of males: 41
Total number of females: 46

Code book/manual (example entry):
Publication type (1-5)
1. Journal article
2. Book/book chapter
3. Thesis or doctoral dissertation
4. Technical report
5. Conference paper

Example coding characteristics: mode of therapy, duration of therapy, participant characteristics, publication characteristics, design characteristics.

Coding characteristics should be mentioned in the paper.
If the editor allows, a copy of the actual coding materials can be included as an appendix.

Pilot coding:
• A random selection of papers is coded by both coders (e.g., 30% of publications are double-coded).
• Meet to compare code sheets.
• Where there is a discrepancy, discuss to reach agreement.
• Amend code materials/definitions in the code book if necessary.
• May need to do several rounds of piloting, each time using different papers.

Inter-rater reliability:
• Percent agreement: common but not recommended.
• Cohen’s kappa coefficient: kappa is the proportion of the optimum improvement over chance attained by the coders, where a value of 1 indicates perfect agreement and a value of 0 indicates that agreement is no better than that expected by chance. Kappas over .40 are considered to be a moderate level of agreement (but there is no clear basis for this “guideline”).
• Correlation between different raters.
• Intraclass correlation: agreement among multiple raters, corrected for the number of raters using the Spearman-Brown formula (r).

The purpose of this exercise is to explore various issues of meta-analytic methodology. Discuss in groups of 3-4 people the following issues in relation to the gender differences in smiling study (LaFrance et al., 2003):
1. Did the aims of the study justify conducting a meta-analysis?
2. Were the selection criteria and the search process explicit?
3. How did they deal with inter-rater (coder) reliability?

Discussion points:
1. Aims: extend previous meta-analyses; include previously untested moderators based on theory/empirical observations.
2. Search process: detailed databases and 5 other sources of studies, search terms. Selection criteria: justification provided (e.g., for excluding participants under the age of 13). However, it is not clear how many studies were retrieved and then eventually included (compare with the inclusion flow chart in the Bazzano et al. example).
3. Multiple coders (the group of coders consisted of four people, with two raters of each sex coding each moderator). Inter-rater reliability was calculated by taking the aggregate reliability of the four coders at each time using the Spearman-Brown formula.

The effect size makes meta-analysis possible:
• It is based on the “dependent variable” (i.e., the outcome).
• It standardizes findings across studies such that they can be directly compared.
• Any standardized index can be an “effect size” (e.g., standardized mean difference, correlation coefficient, odds-ratio), but it must be comparable across studies (standardization), represent the magnitude and direction of the relation, and be independent of sample size.
• Different studies in the same meta-analysis can be based on different statistics, but each has to be transformed to a standardized effect size that is comparable across different studies.

Three studies with the same means and standard deviations but different sample sizes:

| Study | Group | N | M | SD | t | p | d |
|---|---|---|---|---|---|---|---|
| Study 1 | Cntr | 10 | 100 | 15 | | | |
| Study 1 | Exp | 10 | 105 | 15 | -0.750 | 0.466 | 0.333 |
| Study 2 | Cntr | 50 | 100 | 15 | | | |
| Study 2 | Exp | 50 | 105 | 15 | -1.667 | 0.099 | 0.333 |
| Study 3 | Cntr | 100 | 100 | 15 | | | |
| Study 3 | Exp | 100 | 105 | 15 | -2.360 | 0.019 | 0.333 |

[Figure: O’Mara (2004)]

Within the one meta-analysis, you can include studies based on any combination of statistical analyses (e.g., t-tests, ANOVA, multiple regression, correlation, odds-ratio, chi-square, etc.). However, you have to convert each of these to a common “effect size” metric.
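The three-study comparison can be reproduced with a short sketch. It assumes equal group sizes and the usual pooled standard deviation, and the function names are illustrative rather than taken from the workshop materials. The sign of t depends only on the direction of subtraction (here experimental minus control), and unrounded values can differ slightly from the figures shown on the slide.

```python
import math


def pooled_sd(sd1, sd2):
    """Pooled standard deviation for two groups of equal size."""
    return math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)


def standardized_mean_difference(mean_exp, mean_ctrl, sd_exp, sd_ctrl):
    """Effect size d: mean difference divided by the pooled SD."""
    return (mean_exp - mean_ctrl) / pooled_sd(sd_exp, sd_ctrl)


def t_statistic(mean_exp, mean_ctrl, sd_exp, sd_ctrl, n_per_group):
    """Independent-samples t for two groups of equal size."""
    se_diff = pooled_sd(sd_exp, sd_ctrl) * math.sqrt(2 / n_per_group)
    return (mean_exp - mean_ctrl) / se_diff


# Per-group sample sizes from the three example studies.
studies = {"Study 1": 10, "Study 2": 50, "Study 3": 100}

results = {}
for name, n in studies.items():
    d = standardized_mean_difference(105, 100, 15, 15)
    t = t_statistic(105, 100, 15, 15, n)
    results[name] = (d, t)
    print(f"{name}: n per group = {n}, d = {d:.3f}, t = {t:.3f}")
```

The effect size is identical in all three studies, while t (and therefore the p-value) changes with sample size. This is why an effect size, rather than a significance test, is the unit of analysis.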
Lipsey & Wilson (2001) present many formulae for calculating effect sizes from different information. The “art” of meta-analysis is how to compute effect sizes based on non-standard designs and studies that do not supply complete data. However, all effect sizes need to be converted into a common metric, typically based on the “natural” metric given research in the area, e.g., standardized mean difference, odds-ratio, correlation, etc.

• Standardized mean difference: group contrast research (treatment groups, naturally occurring groups); inherently continuous construct.
• Odds-ratio: group contrast research (treatment groups, naturally occurring groups); inherently dichotomous construct.
• Correlation coefficient: association between variables research.

Standardized mean difference:
• Represents a standardized group contrast on an inherently continuous measure.
• Uses the pooled standard deviation (some situations use the control group standard deviation).
• Commonly called “d”.

$$ES = \frac{\bar{X}_{G1} - \bar{X}_{G2}}{s_{pooled}}$$

If $n_1 = n_2$:

$$s_{pooled} = \sqrt{\frac{s_1^2 + s_2^2}{2}}$$

In a gender difference study, the effect size might be:

$$ES = \frac{\bar{X}_{Males} - \bar{X}_{Females}}{SD_{pooled}}$$

In an intervention study with experimental and control groups, the effect size might be:

$$ES = \frac{\bar{X}_{Exper} - \bar{X}_{Control}}{SD_{pooled}}$$

Almost all test statistics can be transformed into a standardized effect size “d”: means and standard deviations, correlations, p-values, F-statistics, t-statistics, and “other” test statistics.

Correlation coefficient:
• Represents the strength of association between two inherently continuous measures.
• Generally reported directly as r (the Pearson product moment coefficient).

$$ES = r$$

The odds-ratio is based on a 2 by 2 contingency table
The odds-ratio is the odds of success in the treatment group relative to the odds of success in the control group.

| Frequencies | Success | Failure |
| --- | --- | --- |
| Treatment Group | a | b |
| Control Group | c | d |

$$ES = OR = \frac{ad}{bc}$$

$$ES = OR = \frac{265 \times 75}{32 \times 204} = 3.044$$

$$\log_e(3.044) = 1.113, \qquad 1.113 / 1.83 = 0.61$$

Alternatively: transform rs into Fisher’s Zr-transformed rs, which are more normally distributed.

| r | d |
| --- | --- |
| 0.90 | 4.13 |
| 0.80 | 2.67 |
| 0.70 | 1.96 |
| 0.60 | 1.50 |
| 0.50 | 1.15 |
| 0.40 | 0.87 |
| 0.30 | 0.63 |
| 0.20 | 0.41 |
| 0.10 | 0.20 |
| 0.00 | 0.00 |
| -0.10 | -0.20 |
| -0.20 | -0.41 |
| -0.30 | -0.63 |
| -0.40 | -0.87 |
| -0.50 | -1.15 |
| -0.60 | -1.50 |
| -0.70 | -1.96 |
| -0.80 | -2.67 |
| -0.90 | -4.13 |

Hedges proposed a correction for small sample size bias (n < 20). It must be applied before analysis.

$$ES'_{sm} = ES_{sm}\left[1 - \frac{3}{4N - 9}\right]$$

The effect sizes are weighted by the inverse of the variance to give more weight to effects based on large sample sizes. The variance is calculated as:

$$v_i = \frac{n_1 + n_2}{n_1 n_2} + \frac{d_i^2}{2(n_1 + n_2)}$$

The standard error of each effect size is given by the square root of the sampling variance:

$$SE = \sqrt{v_i}$$

- Population: N = ‘size’, M = ‘mean’, d = effect size
- Sample: n = ‘size’, m = ‘mean’, d = effect size

Interval estimates: the “likely” population parameter is the sample parameter ± uncertainty.
- Standard errors (s.e.)
- Confidence intervals (C.I.)
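The conversions described above can be sketched in a few lines of Python (standard library only). This is an illustration under stated assumptions rather than the workshop's own code: the function names are made up, the r-to-d formula d = 2r / sqrt(1 - r^2) is not printed in the text but reproduces the r/d table, N in the Hedges correction is taken to be the total sample size, the 95% interval uses z = 1.96, and the placement of the four counts in the 2 by 2 table is a guess (the text only shows the products 265 × 75 and 32 × 204).

```python
import math

def odds_ratio(a, b, c, d):
    """Odds-ratio from a 2x2 table: a, b = treatment success/failure; c, d = control success/failure."""
    return (a * d) / (b * c)

def r_to_d(r):
    """Convert a correlation r to a standardized mean difference d."""
    return 2 * r / math.sqrt(1 - r**2)

def fisher_z(r):
    """Fisher's Zr transformation of a correlation."""
    return 0.5 * math.log((1 + r) / (1 - r))

def small_sample_correction(es, n_total):
    """Hedges' small-sample correction; n_total is assumed to be the total N."""
    return es * (1 - 3 / (4 * n_total - 9))

def d_variance(d, n1, n2):
    """Sampling variance of a standardized mean difference."""
    return (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))

def confidence_interval(es, variance, z=1.96):
    """Interval estimate: effect size plus or minus z standard errors."""
    se = math.sqrt(variance)
    return es - z * se, es + z * se

# Worked odds-ratio: (265 * 75) / (32 * 204). Cell placement is an assumption.
or_example = odds_ratio(a=265, b=32, c=204, d=75)
log_or = math.log(or_example)
print(f"OR = {or_example:.3f}, log OR = {log_or:.3f}")

# A study with 10 participants per group, means 105 vs 100, SD 15.
d_raw = 5 / 15
d_adj = small_sample_correction(d_raw, n_total=20)
v = d_variance(d_adj, 10, 10)
ci_low, ci_high = confidence_interval(d_adj, v)
print(f"d = {d_raw:.3f}, corrected d = {d_adj:.3f}, SE = {math.sqrt(v):.3f}")
print(f"95% CI: [{ci_low:.3f}, {ci_high:.3f}]")
```

With only 10 participants per group the interval is wide and includes zero, which matches the non-significant p-value of a study that small.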
[Figure: structure of the database. Each study is one line in the data base, with columns such as effect size, variance of the effect size, sample sizes, duration, and reliability of the instrument.]

- Fixed effects model
- Random effects model
- Multilevel model

In a fixed effects model:
- The sample includes the entire population of studies to be considered; we do not want to generalise to other studies not included (e.g., future studies).
- All of the variability between effect sizes is due to sampling error alone. Thus, the effect sizes are only weighted by the within-study variance.
- Effect sizes are independent.

There are 2 general ways of conducting a fixed effects meta-analysis: ANOVA and multiple regression.
- The analogue to the ANOVA homogeneity analysis is appropriate for categorical variables. It looks for systematic differences between groups of responses within a variable.
- Multiple regression homogeneity analysis is more appropriate for continuous variables and/or when there are multiple variables to be analysed. It tests the ability of groups within each variable to predict the effect size.
- Categorical variables can be included in multiple regression as dummy variables. (ANOVA is a special case of multiple regression.)

The homogeneity (Q) test asks whether the different effect sizes are likely to have all come from the same population (an assumption of the fixed effects model). Are the differences among the effect sizes no bigger than might be expected by chance?

$$Q = \sum_{i=1}^{k} w_i \left(ES_i - \overline{ES}\right)^2$$

- $ES_i$ = effect size for each study (i = 1 to k)
- $\overline{ES}$ = mean effect size
- $w_i$ = a weight for each study based on the sample size

However, this (chi-square) test is heavily dependent on sample size.
It is almost always significant unless the numbers (studies and people in each study) are VERY small. This means that the fixed effect model will almost always be rejected in favour of a random effects model.

    Run MATRIX procedure:

    ***** Meta-Analytic Results *****

    ------- Distribution Description ---------------------------------
          N    Min ES    Max ES  Wghtd SD
     15.000      .050     1.200      .315

    ------- Fixed & Random Effects Model -----------------------------
             Mean ES   -95%CI   +95%CI       SE        Z        P
    Fixed      .4312    .3383    .5241    .0474   9.0980    .0000
    Random     .3963    .2218    .5709    .0890   4.4506    .0000

    ------- Random Effects Variance Component ------------------------
    v    =   .074895

    ------- Homogeneity Analysis -------------------------------------
          Q        df         p
    44.1469   14.0000     .0001

    Random effects v estimated via noniterative method of moments.

    ------ END MATRIX -----

There is significant heterogeneity in the effect sizes; therefore random effects are more appropriate and/or moderators need to be modelled.

Model moderators by grouping effect sizes that are similar on a specific characteristic. For example, group all effect size outcomes that come from studies using a placebo control group design and compare them with effect sizes from studies using a waitlist control group design. So in this example, ‘Design’ is a dichotomous variable with the values 0 = placebo control and 1 = waitlist control.

[Diagram labels: Exp. cond, ES, design]

On the next slide, we will look at the outcomes of a study to show the importance of various moderator variables.

Do Psychosocial and Study Skill Factors Predict College Outcomes? A Meta-Analysis. Robbins, Lauver, Le, Davis, Langley, & Carlstrom (2004).
Psychological Bulletin, 130, 261–288.

Aim: To examine the relationship between psychosocial and study skill factors (PSFs) and college retention by meta-analyzing 109 studies.

- N = sample size for that variable
- k = number of correlation coefficients on which each distribution was based
- r = mean observed correlation
- CIr 10% = lower bound of the confidence interval for observed r
- CIr 90% = upper bound of the confidence interval for observed r

Annotations on the results table:
- Institutional size: smallest effect size
- Not statistically significant because CI contains zero
- Statistically significant because CI does not contain zero
- Academic related skills: largest effect size

- Target self-concept domains are those that are directly relevant to the intervention
- Target-related are those that are logically relevant to the intervention, but not focal
- Non-target are domains that are not expected to be enhanced by the intervention

Regression coefficients and their standard errors:

| | B | SE | Sig? |
| --- | --- | --- | --- |
| Target | .4892 | .0552 | yes |
| Target-related | .1097 | .0587 | no |
| Non-target | .0805 | .0489 | no |

From O’Mara, Marsh, Craven, & Debus (2006).

In a random effects model:
- The sample is only a sample of studies from the entire population of studies to be considered. As a result, we do want to generalise to other studies not included in the sample (e.g., future studies).
- Variability between effect sizes is due to sampling error plus variability in the population of effects.
- Effect sizes are independent.

If the homogeneity test is rejected (it almost always will be), it suggests that there are larger differences than can be explained by chance variation (at the individual participant level). There is more than one “population” in the set of different studies.
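As a rough illustration of the homogeneity test and the two models, the sketch below computes the inverse-variance weighted mean, Q, and a method-of-moments estimate of the between-study variance in plain Python. The data are hypothetical, the function names are invented for this example, and the estimator v = (Q - (k - 1)) / (Σw - Σw²/Σw), truncated at zero, is the standard noniterative form; whether the SPSS macro output shown earlier uses exactly this computation is an assumption.

```python
import math

def fixed_effects(effect_sizes, variances):
    """Inverse-variance weighted mean, its standard error, and the Q statistic."""
    weights = [1 / v for v in variances]
    sum_w = sum(weights)
    mean = sum(w * es for w, es in zip(weights, effect_sizes)) / sum_w
    se = math.sqrt(1 / sum_w)
    q = sum(w * (es - mean) ** 2 for w, es in zip(weights, effect_sizes))
    return mean, se, q

def between_study_variance(q, variances):
    """Method-of-moments estimate of the random effects variance component."""
    weights = [1 / v for v in variances]
    k = len(weights)
    if k < 2:
        return 0.0
    c = sum(weights) - sum(w**2 for w in weights) / sum(weights)
    return max(0.0, (q - (k - 1)) / c)  # negative estimates are set to zero

def random_effects(effect_sizes, variances):
    """Re-weight each study by 1 / (within-study variance + between-study variance)."""
    _, _, q = fixed_effects(effect_sizes, variances)
    v_between = between_study_variance(q, variances)
    total_variances = [v + v_between for v in variances]
    mean, se, _ = fixed_effects(effect_sizes, total_variances)
    return mean, se, v_between

# Hypothetical effect sizes and sampling variances, for illustration only.
es = [0.10, 0.30, 0.50, 0.90]
var = [0.04, 0.02, 0.05, 0.03]

fe_mean, fe_se, q = fixed_effects(es, var)
re_mean, re_se, v_between = random_effects(es, var)
print(f"Fixed:  mean = {fe_mean:.3f}, SE = {fe_se:.3f}, Q = {q:.2f} on {len(es) - 1} df")
print(f"Random: mean = {re_mean:.3f}, SE = {re_se:.3f}, v = {v_between:.4f}")
```

Q is compared against a chi-square distribution with k - 1 degrees of freedom. Because the random effects weights add the between-study variance to every study, the random effects standard error is never smaller than the fixed effects one, which is the pattern visible in the macro output.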
Now we turn to the random effects model to determine how much of this between-study variation can be explained by study characteristics that we have coded.

The total variance associated with the effect sizes has two components, one associated with differences within each study (participant level variation) and one between study variance:

$$v_{T_i} = v_\theta + v_i$$

Do Self-Concept Interventions Make a Difference? A Synergistic Blend of Construct Validation and Meta-Analysis. O’Mara, Marsh, Craven, & Debus (2006). Educational Psychologist, 41, 181–206.

Aim: To examine what factors moderate the effectiveness of self-concept interventions by meta-analyzing 200 interventions.

- QB = between group homogeneity. If the QB value is significant, then the groups (categories) are significantly different from each other.
- QW = within group homogeneity. If QW is significant, then the effect sizes within a group (category) differ significantly from each other.

Only 2 variables had significant QB in the random effects model. ‘Treatment characteristics’ also had significant QW. Note that the fixed effects are more significant than random effects.

Significant heterogeneity in the effect sizes, therefore need to model moderators:

    Run MATRIX procedure:

    ***** Meta-Analytic Results *****

    ------- Distribution Description ---------------------------------
          N    Min ES    Max ES  Wghtd SD
     15.000      .050     1.200      .315

    ------- Fixed & Random Effects Model -----------------------------
             Mean ES   -95%CI   +95%CI       SE        Z        P
    Fixed      .4312    .3383    .5241    .0474   9.0980    .0000
    Random     .3963    .2218    .5709    .0890   4.4506    .0000

    ------- Random Effects Variance Component ------------------------
    v    =   .074895

    ------- Homogeneity Analysis -------------------------------------
          Q        df         p
    44.1469   14.0000     .0001

    Random effects v estimated via noniterative method of moments.
    ------ END MATRIX -----

The random effects variance component is estimated by the method of moments as:

$$v_\theta = \frac{Q - (k - 1)}{\sum w_i - \dfrac{\sum w_i^2}{\sum w_i}}$$

Multilevel modelling:
- Meta-analytic data is inherently hierarchical (i.e., effect sizes nested within studies) and has random error that must be accounted for
- Effect sizes are not necessarily independent
- Multilevel modelling allows for multiple effect sizes per study

- It is a new technique that is still being developed
- It provides more precise and less biased estimates of between-study variance than traditional techniques

- Level 1: outcome-level component (effect sizes)
- Level 2: study component (publications)

- Intercept-only model, which incorporates both the outcome-level and the study-level components (similar to a random effects model)
- Expand the model to include predictor variables, to explain systematic variance between the study effect sizes

Acute Stressors and Cortisol Responses: A Theoretical Integration and Synthesis of Laboratory Research. Dickerson & Kemeny (2004). Psychological Bulletin, 130, 355–391.

Aim: To examine methodological predictors of cortisol responses in a meta-analysis of 208 laboratory studies of acute psychological stressors.

Only 2 variables were significant (quadratic time between stress onset and assessment; time of day). The quadratic component is difficult to interpret as an unstandardized regression coefficient, but the graph suggests it is meaningfully large.

[Figure: quadratic function of time since onset]

Fixed, random, or multilevel?
Generally, if more than one effect size per study is included in the sample, multilevel should be used. However, if there is little variation at study level, the results of multilevel modelling meta-analyses are similar to random effects models.

Do you wish to generalise your findings to other studies not in the sample?
- Yes – random effects or multilevel
- No – fixed effects

Do you have multiple outcomes per study?
- Yes – multilevel
- No – random effects or fixed effects

The purpose of this exercise is to consider choice of meta-analytic method. Discuss in groups of 3-4 people the following questions in relation to the gender differences in smiling study (LaFrance et al., 2003):
- Is there independence of effect sizes?
- What are the implications for model choice (fixed, random, multilevel)?

- Fail-safe N
- Power analysis
- Trim-and-fill method

The fail-safe N (Rosenthal, 1991) determines the number of studies with an effect size of zero needed to lower the observed effect size to a specified (criterion) level. For example, assume that you want to test the assumption that an effect size is at least .20. If the observed effect size was .26 and the fail-safe N was found to be 44, this means that 44 unpublished studies with a mean effect size of zero would need to be included in the sample to reduce the observed effect size of .26 to .20.

Power is the probability that a statistical test avoids a Type II error, that is, the probability of correctly rejecting a false null hypothesis. A Type II error occurs when the test fails to reject the null hypothesis, which implicitly suggests that there is no effect when in reality there is. Power, sample size, significance level, and effect size are inter-related.
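How these four quantities trade off can be sketched with a normal approximation to the power of a two-sided, two-group comparison with equal group sizes. This is a simplified illustration, not the procedure recommended by any of the cited authors: the function name, the default alpha of .05, and the use of the normal rather than the noncentral t distribution are all assumptions made for brevity.

```python
import math
from statistics import NormalDist

def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power (1 - Type II error rate) of a two-sided test of a
    standardized mean difference d with n_per_group participants per group."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative.
    shift = abs(d) * math.sqrt(n_per_group / 2)
    # Probability of landing in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

# Same effect size (d = 0.333), increasing sample size.
for n in (10, 50, 100):
    print(f"n per group = {n:3d}  approx. power = {approx_power(1 / 3, n):.2f}")
```

Holding d and alpha fixed, power rises with sample size; holding n fixed, it rises with d. When d is zero the function returns alpha, the Type I error rate.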
A lower powered study has to exhibit a much larger effect size to produce a significant finding. This has ramifications for publication bias.

Muncer, Craigie, & Holmes (2003) recommend conducting a power analysis on all studies included in the meta-analysis: compare the observed value (d) against a theoretical value (which includes information about sample size).

The trim and fill procedure (Duval & Tweedie, 2000a, 2000b) calculates the effect of potential data censoring (including publication bias) on the outcome of the meta-analyses. This nonparametric, iterative technique examines the symmetry of effect sizes plotted by the inverse of the standard error. Ideally, the effect sizes should mirror on either side of the mean.

Examining the methods and output of published meta-analysis

Discuss in groups of 3-4 people the following question in relation to the gender differences in smiling study (LaFrance et al., 2003): How did they deal with publication bias? Does this seem appropriate?

The purpose of this exercise is to practice reading meta-analytic results tables. This study, by Reger et al. (2004), examines the relationship between neuropsychological functioning and driving ability in dementia.
1. In Table 3, which variables are homogeneous for the “on-road tests” driving measure in the “All Studies” column? What does this tell you about those variables?
2. In Table 4, look at the variables that were homogeneous in question (1) for the “on-road tests” using “All Studies”. Which variables have a significant mean ES? Which variable has the largest mean ES?

Answers:
1. Homogeneous variables (non-significant Q values): Mental status–general cognition, Visuospatial skills, Memory, Executive functions, Language.
2. All of the relevant mean effect sizes are significant. Memory and language are tied as the largest mean ESs for homogeneous variables (r = .44).

Summary:
- We established what meta-analysis is, when and why we use meta-analysis, and the benefits and pitfalls of using meta-analysis
- Summarised how to conduct a meta-analysis
- Provided a conceptual introduction to analysis and interpretation of results based on fixed effects, random effects, and multilevel models
- Applied this information to examining the methods of a published meta-analysis

Issues to consider:
- Comparing apples and oranges
- Quality of the studies included in the meta-analysis
- What to do when studies don’t report sufficient information (e.g., “non-significant” findings)?
- Including multiple outcomes in the analysis (e.g., different achievement scores)
- Publication bias

With meta-analysis now one of the most popularly published research methods, it is an exciting time to be involved in meta-analytic research.

The hottest topics in meta-analysis are:
- Multilevel modelling to address the issue of independence of effect sizes
- New methods in publication bias assessment (trim-and-fill method, post hoc power analysis)

Also receiving attention:
- Establishing guidelines for conducting meta-analysis (best practice)
- Meta-analyses of meta-analyses

Purpose-built software:
- Comprehensive Meta-analysis (commercial)
- Schwarzer (free, http://userpage.fu-berlin.de/~health/meta_e.htm)

Extensions to standard statistics packages:
- SPSS, Stata and SAS macros, downloadable from http://mason.gmu.edu/~dwilsonb/ma.html
- Stata add-ons, downloadable from http://www.stata.com/support/faqs/stat/meta.html
- HLM –
V-known routine
- MLwiN
- Mplus

Please note that we do not advocate any one programme over another, and cannot guarantee the quality of all of the products downloadable from the internet. This list is not exhaustive.

References:
- Cooper, H., & Hedges, L. V. (Eds.) (1994). The handbook of research synthesis (pp. 521–529). New York: Russell Sage Foundation.
- Hox, J. (2003). Applied multilevel analysis. Amsterdam: TT Publishers.
- Hunter, J. E., & Schmidt, F. L. (1990). Methods of meta-analysis: Correcting error and bias in research findings. Newbury Park: Sage Publications.
- Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks, CA: Sage Publications.

Pick up a brochure about our intermediate and advanced meta-analysis courses.

Visit our website: http://www.education.ox.ac.uk/research/resgroup/self/training.php