SHAKEN: Knowledge Base (KB) Authoring Environment for Subject

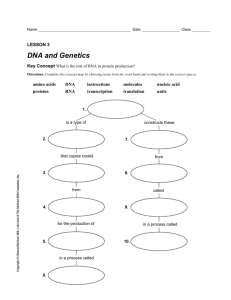

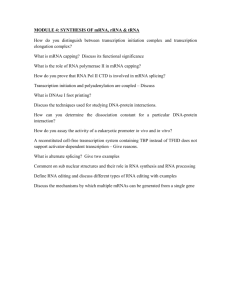

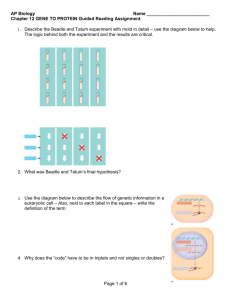

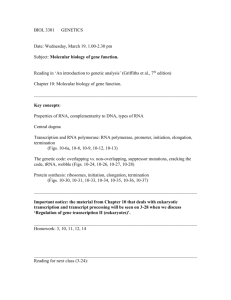

Enabling Domain Experts to Convey Questions to a Machine: A Modified, Template-Based Approach
Peter Clark (Boeing Phantom Works)
Ken Barker, Bruce Porter (Univ Texas at Austin)
Vinay Chaudhri, Sunil Mishra, Jerome Thomere (SRI International)

How can End-Users Pose Questions?
Start-to-Finish Knowledge Capture:
• User needs to express:
  – domain knowledge
  – questions posed to that domain knowledge
• Posing questions:
  – can be straightforward, e.g., in single-task systems:
    • “What disease does this patient have?”
  – or can itself be a major “knowledge capture” challenge
• This talk:
  – How to pose questions (not how to answer them!)

Some Example Questions…
1. When during RNA translation is the movement of a tRNA molecule from the A- to the P-site of a ribosome thought to occur?
2. What are the functions of RNA?
3. What happens to the DNA during RNA transcription?
4. In a cell, what factors affect the rate of protein production?
5. A mutation in DNA generates a UGA stop codon in the middle of the RNA coding for a particular protein. What nucleotide change has probably occurred?

Some Previous Approaches
• Just allow one question to be asked
  – “What disease does this patient have?”
  – But: inappropriate for multifunctional systems
• Ask in natural language
  – e.g., for databases:
    • “How many employees work for Joe?”
  – But: lacks sufficient constraint
• Use question templates
  – e.g., HPKB:
    • “What risks/rewards would <country> face/expect in taking hostage citizens of <country>?”
  – But: domain-specific

A Modified, Template-Based Approach
Claims:
1. Complex questions can be factored into:
  – the question scenario (“Imagine that…”)
  – a query to that scenario (“Thus, what is…”)
2. The scenario contains most of the complexity
  – The “raw query” itself is usually simple
3. The query can be mapped into one of a small number of domain-general templates
  – grouped around different modeling paradigms

A Modified, Template-Based Approach
Basis for a modified, template-based approach:
Full Question = Scenario + Query
• Scenario: capture using graphical tools (Shaken)
• Query: capture with a finite set of domain-general templates

Full Question = Scenario + Query
• Create the scenario using a graphical “representation builder”
  – Select objects from an ontology
  – Connect them together using a small library of relations
  – The graph is converted to ground logic assertions
• Example: “A DNA virus invades the cell of a multicellular organism”

Full Question = Scenario + Query
• Huge variety of possible queries
  – But they can be grouped according to the reasoning paradigms the KB supports
• Catalog of 29 Domain-General Question Types
  – based on an analysis of 339 cell biology questions
  – each has a fill-in-the-blank template
  – the “blanks” are (often complex) objects from the scenario

Paradigms and Some Templates…
1. Lookup & Simple Deductive Reasoning
  q2 “What is/are the function of RNA?”
  q4 “Is a ribosome a cytoplasmic organelle?”
  q6 “How many membranes are in the parts relationship to the ribosome?”
2. Discrete Event Simulation
  q12 “What happens to the DNA during RNA transcription?”
3. Qualitative Reasoning
  q25 “In cell protein synthesis, what factors affect the rate of protein production?”
  q26 “In RNA transcription, what factors might cause the transcription rate to increase?”
4. Analogical/Comparative Reasoning
  q29 “What is the difference between procaryotic mRNA and eucaryotic mRNA?”

Question Reformulation
• With only a small set of question types, users often must recast the original question in terms of those types
• For example…
  7.1.5-270: “Where in a eucaryotic cell does RNA transcription take place?”
    – recast as: “What is/are the site of RNA transcription?”
  7.1.4.118: “When is the sigma factor of bacterial RNA polymerase released with respect to RNA transcription?”
    – recast as: “During RNA transcription, when does the RNA polymerase | release | the sigma factor?”

Posing questions
Posing questions (cont)
Receiving Answers
Receiving Answers (cont)

Evaluation and Lessons Learned
• Large-scale trials in 2001
• 4 biology students used the system for 4 weeks
• Their goals:
  – Encode an 11-page subsection on cell biology
  – Test their representations using a set of 70 questions
    • Questions expressed in English
    • High-school level of difficulty
    • Questions set independently, with no knowledge of our templates
• 18 of the 29 templates were implemented at the time of the trials

Results
• It works…
  – All 4 users were able to pose most (~80%) of the questions
  – Answer score (average) = 2.23 (2 = “mostly correct”)
  – Exposes what the system is able to do
• …but three major challenges…

Challenges
1. Users had difficulty reformulating their questions to match a template, e.g.:
  (Original) “Where in a eucaryotic cell does RNA transcription take place?”
  (Desired) “What is/are the site of RNA transcription?”
  (User) “What is RNA transcription?”
• Heavy use of a few generic templates

Challenges
• Reformulation is not just a rewording task
• Rather, it requires the user to view the problem in terms of one of the KB’s modeling paradigms
• Easier for us than for the users

Challenges
2. Users need to be fluent with the graph tool and KB ontology for specifying scenarios
• Not a problem in this case

Challenges
3. Sometimes, the template approach breaks down
• Some questions require identifying the scenarios
• Similarly, identifying the right viewpoint/level of detail:
  – e.g., DNA as a line vs. sequence vs. two strands
• Some topics are not covered by templates:
  – Uncertainty, causal event structure
  – Diagnosis, abduction
• Some questions go beyond concepts in the KB:
  – “What kinds of final products result from mRNA?”
  – “What are the building blocks of proteins?”
• Can’t specify “impossible objects”:
  – “Is <object> possible?”

Summary
• Conveying questions can itself be a major “knowledge capture” challenge
• A modified, template-based approach:
  – Factor full questions into scenarios + templates
  – Templates are domain-general, and based on the available modeling paradigms
  – Balances flexibility vs. interpretability
• Results:
  – A catalog of templates
  – Approach works! …but with significant caveats
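The scenario-building step described in the talk (select objects from an ontology, connect them with a small library of relations, convert the graph to ground logic assertions) can be sketched as follows. This is a minimal illustration, not SHAKEN's implementation: `Node`, `Edge`, `to_ground_assertions`, the class names, and the relation names `agent`, `object`, and `is-part-of` are all hypothetical; the talk says only that the relation library is small. The example encodes the talk's scenario “A DNA virus invades the cell of a multicellular organism”.

```python
from dataclasses import dataclass

# Hypothetical relation library; the talk only says it is "small".
RELATIONS = {"agent", "object", "is-part-of"}


@dataclass(frozen=True)
class Node:
    name: str  # scenario instance id, e.g. "Invade1" (hypothetical)
    cls: str   # ontology class the instance was selected from


@dataclass(frozen=True)
class Edge:
    relation: str  # must come from RELATIONS
    source: str    # name of the source Node
    target: str    # name of the target Node


def to_ground_assertions(nodes, edges):
    """Turn a scenario graph into ground (variable-free) triples."""
    names = {n.name for n in nodes}
    # One class-membership assertion per node...
    assertions = [("instance-of", n.name, n.cls) for n in nodes]
    # ...plus one assertion per edge, after checking it is well formed.
    for e in edges:
        if e.relation not in RELATIONS:
            raise ValueError(f"unknown relation: {e.relation}")
        if e.source not in names or e.target not in names:
            raise ValueError(f"edge refers to an unknown node: {e}")
        assertions.append((e.relation, e.source, e.target))
    return assertions


# "A DNA virus invades the cell of a multicellular organism"
nodes = [
    Node("Invade1", "Invade"),
    Node("Virus1", "DNA-Virus"),
    Node("Cell1", "Cell"),
    Node("Organism1", "Multicellular-Organism"),
]
edges = [
    Edge("agent", "Invade1", "Virus1"),
    Edge("object", "Invade1", "Cell1"),
    Edge("is-part-of", "Cell1", "Organism1"),
]

# Prints seven assertions: four instance-of triples, then the three edges.
for rel, subj, obj in to_ground_assertions(nodes, edges):
    print(f"({rel} {subj} {obj})")
```

Rejecting relations outside the library mirrors the idea that scenarios are built from a fixed, small vocabulary; a real builder would presumably also check each relation's domain and range against the ontology, which this sketch omits.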
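The catalog idea (a small set of domain-general, fill-in-the-blank question types, grouped by the reasoning paradigm the KB uses to answer them) can be illustrated with a short sketch. The talk shows only instantiated questions, not the template text, so the patterns below and their blank names (`slot`, `object`, `category`, `event`, `process`, `first`, `second`) are hypothetical reconstructions; `Template`, `CATALOG`, and `by_paradigm` are illustrative names, not SHAKEN's API.

```python
import re
from dataclasses import dataclass

BLANK = re.compile(r"<([^>]+)>")  # a blank is written as <name>


@dataclass(frozen=True)
class Template:
    qid: str       # catalog id, e.g. "q2"
    paradigm: str  # reasoning paradigm used to answer this question type
    pattern: str   # fill-in-the-blank question text (hypothetical wording)

    def blanks(self) -> list[str]:
        return BLANK.findall(self.pattern)

    def fill(self, **bindings: str) -> str:
        """Substitute each <blank> with its binding; every blank must be bound."""
        missing = set(self.blanks()) - set(bindings)
        if missing:
            raise KeyError(f"{self.qid}: unfilled blanks {sorted(missing)}")
        return BLANK.sub(lambda m: bindings[m.group(1)], self.pattern)


# Hypothetical patterns, reverse-engineered from the talk's example questions.
CATALOG = {t.qid: t for t in [
    Template("q2", "Lookup & Simple Deductive Reasoning", "What is/are the <slot> of <object>?"),
    Template("q4", "Lookup & Simple Deductive Reasoning", "Is <object> a <category>?"),
    Template("q12", "Discrete Event Simulation", "What happens to <object> during <event>?"),
    Template("q25", "Qualitative Reasoning", "In <event>, what factors affect the rate of <process>?"),
    Template("q29", "Analogical/Comparative Reasoning", "What is the difference between <first> and <second>?"),
]}


def by_paradigm(catalog: dict[str, Template]) -> dict[str, list[str]]:
    """Group template ids under the reasoning paradigm that answers them."""
    groups: dict[str, list[str]] = {}
    for t in catalog.values():
        groups.setdefault(t.paradigm, []).append(t.qid)
    return groups


print(CATALOG["q2"].fill(slot="function", object="RNA"))
# What is/are the function of RNA?
print(CATALOG["q2"].fill(slot="site", object="RNA transcription"))
# What is/are the site of RNA transcription?
print(by_paradigm(CATALOG))
```

Because the patterns are domain-general, the same q2 pattern presumably covers both q2 itself and the recast form of question 7.1.5-270; only the objects filling the blanks change. The hard part, as the trials showed, is the user's choice of which template fits.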
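The factoring “Full Question = Scenario + Query” can be sketched by pairing a list of ground scenario assertions (the “Imagine that…” part) with a simple templated query (the “Thus, what is…” part) whose blanks must be bound to objects that exist in the scenario. This is an assumption-laden illustration: `FullQuestion`, the `instance-of` and `object` relation names, and the instance ids `Transcription1` and `DNA1` are hypothetical, and the example encodes the talk's question “What happens to the DNA during RNA transcription?”.

```python
import re
from dataclasses import dataclass

# (relation, subject, object); "instance-of" triples give each
# scenario object its ontology class.
Assertion = tuple[str, str, str]


@dataclass
class FullQuestion:
    scenario: list[Assertion]  # "Imagine that ..." part (ground assertions)
    template: str              # "Thus, ..." part with <blank> slots
    bindings: dict[str, str]   # blank name -> scenario instance id

    def classes(self) -> dict[str, str]:
        """Map each scenario instance to its class."""
        return {s: o for rel, s, o in self.scenario if rel == "instance-of"}

    def render(self) -> str:
        classes = self.classes()
        blanks = set(re.findall(r"<([^>]+)>", self.template))
        # Every blank needs exactly one binding...
        if blanks != set(self.bindings):
            raise ValueError(f"blanks {sorted(blanks)} do not match bindings {sorted(self.bindings)}")
        # ...and every binding must name an object from the scenario.
        for blank, inst in self.bindings.items():
            if inst not in classes:
                raise ValueError(f"<{blank}> is bound to {inst!r}, which is not in the scenario")

        def describe(m):
            inst = self.bindings[m.group(1)]
            return f"{inst} [{classes[inst]}]"

        scenario = "; ".join(f"({r} {s} {o})" for r, s, o in self.scenario)
        query = re.sub(r"<([^>]+)>", describe, self.template)
        return f"Imagine that: {scenario}\nThus: {query}"


q = FullQuestion(
    scenario=[
        ("instance-of", "Transcription1", "RNA-Transcription"),
        ("instance-of", "DNA1", "DNA"),
        ("object", "Transcription1", "DNA1"),
    ],
    template="What happens to <object> during <event>?",
    bindings={"object": "DNA1", "event": "Transcription1"},
)
print(q.render())
```

The design keeps nearly all of the complexity in the scenario, while the query stays a short template whose blanks point at scenario objects, matching the claim that the “raw query” itself is usually simple.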