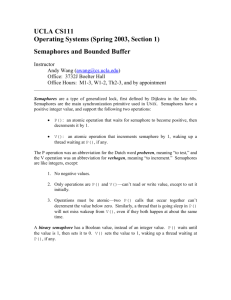

Module 7: Process Synchronization

Chapter 7: Process Synchronization

Background

The Critical-Section Problem

Synchronization Hardware

Semaphores

Classical Problems of Synchronization

Critical Regions

Monitors

Synchronization in Solaris 2 & Windows 2000

Background

Concurrent access to shared data may result in data inconsistency.

Maintaining data consistency requires mechanisms to ensure the orderly execution of cooperating processes.

The shared-memory solution to the bounded-buffer problem (Chapter 4) allows at most n – 1 items in the buffer at the same time. A solution in which all n buffers are used is not simple.

Suppose that we modify the producer-consumer code by adding a variable counter, initialized to 0, that is incremented each time a new item is added to the buffer and decremented each time an item is removed.

Bounded-Buffer

Shared data

#define BUFFER_SIZE 10
typedef struct {
    . . .
} item;
item buffer[BUFFER_SIZE];
int in = 0;
int out = 0;
int counter = 0;

Bounded-Buffer

Producer process

item nextProduced;
while (1) {
    while (counter == BUFFER_SIZE)
        ; /* do nothing */
    buffer[in] = nextProduced;
    in = (in + 1) % BUFFER_SIZE;
    counter++;
}

Bounded-Buffer

Consumer process

item nextConsumed;
while (1) {
    while (counter == 0)
        ; /* do nothing */
    nextConsumed = buffer[out];
    out = (out + 1) % BUFFER_SIZE;
    counter--;
}

Bounded Buffer

The statements counter++; and counter--; must be performed atomically.

Atomic operation means an operation that completes in its entirety without interruption.
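As a rough illustration of what "atomic" buys us, the sketch below replaces counter++ and counter-- with C11 atomic read-modify-write operations. It assumes a C11 compiler and POSIX threads; the names producer, consumer, run_atomic_counter and ITERATIONS are made up for this example and are not from the slides.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

#define ITERATIONS 100000

static atomic_int counter = 0;   /* shared between both threads */

/* Stand-in for the producer: performs counter++ atomically, many times. */
static void *producer(void *arg) {
    (void)arg;
    for (int i = 0; i < ITERATIONS; i++)
        atomic_fetch_add(&counter, 1);
    return NULL;
}

/* Stand-in for the consumer: performs counter-- atomically, many times. */
static void *consumer(void *arg) {
    (void)arg;
    for (int i = 0; i < ITERATIONS; i++)
        atomic_fetch_sub(&counter, 1);
    return NULL;
}

/* Starts from counter == 5, runs both threads, returns the final value. */
int run_atomic_counter(void) {
    pthread_t p, c;
    int rc;
    atomic_store(&counter, 5);
    rc = pthread_create(&p, NULL, producer, NULL);
    assert(rc == 0);
    rc = pthread_create(&c, NULL, consumer, NULL);
    assert(rc == 0);
    (void)rc;
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return atomic_load(&counter);
}
```

Because each update completes without interruption, equal numbers of increments and decrements leave the counter at its starting value no matter how the threads are scheduled. This only covers the counter itself; the buffer slots still need the synchronization discussed later.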

Bounded Buffer Analysis

Although “P” and “C” routines are logically correct in themselves, they may not work correctly running concurrently.

The counter variable is shared between the two processes

When ++counter and --counter are done concurrently, the results are unpredictable because the operations are not atomic.

Assume ++counter, --counter compiles as follows:

++counter:
    register1 = counter
    register1 = register1 + 1
    counter = register1

--counter:
    register2 = counter
    register2 = register2 - 1
    counter = register2

Bounded Buffer Analysis

If both the producer and consumer attempt to update the buffer concurrently, the assembly language statements may get interleaved.


Interleaving depends upon how the producer and consumer processes are scheduled

P and C get unpredictable access to CPU due to time slicing

Bounded Buffer Analysis

Assume counter is initially 5. One interleaving of statements is:

producer: register1 = counter (register1 = 5)
producer: register1 = register1 + 1 (register1 = 6)
consumer: register2 = counter (register2 = 5)
consumer: register2 = register2 – 1 (register2 = 4)
producer: counter = register1 (counter = 6)
consumer: counter = register2 (counter = 4)

The value of counter may be either 4 or 6, depending on which process stores last, whereas the correct result is 5.

We have a “race condition” here.
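The interleaving can be replayed deterministically, without real threads, by treating each machine-level statement as one step of a schedule. This is only a sketch for checking the arithmetic; step_t, replay and the step names are hypothetical helpers invented for this example.

```c
#include <assert.h>

/* One machine-level step of counter++ (producer) or counter-- (consumer). */
typedef enum { P_LOAD, P_ADD, P_STORE, C_LOAD, C_SUB, C_STORE } step_t;

/* Replays a schedule of steps against a shared counter and
 * returns the final value of the counter. */
int replay(int counter, const step_t *schedule, int nsteps) {
    int register1 = 0, register2 = 0;
    assert(nsteps >= 0);
    for (int s = 0; s < nsteps; s++) {
        switch (schedule[s]) {
        case P_LOAD:  register1 = counter;       break;
        case P_ADD:   register1 = register1 + 1; break;
        case P_STORE: counter = register1;       break;
        case C_LOAD:  register2 = counter;       break;
        case C_SUB:   register2 = register2 - 1; break;
        case C_STORE: counter = register2;       break;
        }
    }
    return counter;
}
```

Replaying the schedule from the slide (producer load, producer add, consumer load, consumer subtract, producer store, consumer store) from counter = 5 yields 4; swapping the last two stores yields 6; running the producer's three steps and then the consumer's three steps yields the correct 5.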

Race Condition

Race condition: the situation where several processes access and manipulate shared data concurrently. The final value of the shared data depends upon which process finishes last.

To prevent race conditions, concurrent processes must be synchronized.

Is there any logical difference in a race condition if the two “racing” processes are on two distinct CPUs in an SMP environment vs. both running concurrently on a single uniprocessor? Is one worse than the other?

The Critical-Section Problem

n processes all competing to use some shared data

Each process has a code segment, called its critical section, in which the shared data is accessed.

Problem – ensure that when one process is executing in its critical section, no other process is allowed to execute in its critical section.

Solution to Critical-Section Problem

1. Mutual Exclusion. If process Pi is executing in its critical section, then no other processes can be executing in their critical sections, unless it is by deliberate design by the programmer (see the readers-writers problem later).

2. * Progress. If there exist some processes that wish to enter their critical sections, then processes outside of their critical sections cannot participate in the decision of which one will enter its CS next, and this selection cannot be postponed indefinitely.

In other words: no process running outside its CS may block other processes from entering their CSs, i.e., a process must be in a CS to have this privilege.

3. Bounded Waiting. A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted (no starvation).

Assume that each process executes at a nonzero speed

No assumption concerning relative speed of the n processes.

* Item 2 is a modification of the rendition given in Silberschatz, which appears to be “muddled”; see “Modern Operating Systems”, 1992, by Tanenbaum, p. 35.

See the next slide for other authors’ renditions of these conditions for clarification:

Solution to Critical-Section Problem

From: “Operating Systems”, by William Stallings, 4th ed., page 207

Mutual exclusion must be enforced: Only one process at a time is allowed into the critical section (CS)

A process that halts in its noncritical section must do so without interfering with other processes

It must not be possible for a process requiring access to a CS to be delayed indefinitely: no deadlock or starvation.

When no process is in a critical section, any process that requests entry to its CS must be permitted to enter without delay.

No assumptions are made about relative process speeds or number of processors.

A process remains inside its CS for a finite time only.

Also from “Modern Operating Systems”, 1992, by Tanenbaum, p. 35:

No two processes may be simultaneously inside their critical sections.

No assumptions may be made about speeds or the number of CPUs.

No process running outside its CS may block other processes from entering their CSs.

No process should have to wait forever to enter its CS.

Initial Attempts to Solve Problem

Only 2 processes, P0 and P1

General structure of process Pi (other process Pj):

do {
    entry section
    critical section
    exit section
    remainder section
} while (1);

Processes may share some common variables to synchronize their actions.

Algorithm 1

Assume two processes: P0 and P1

Shared variables:

int turn; // initially turn = 0; let P0 have the turn first

If turn == i, Pi can enter its critical section, i = 0, 1

For process Pi (where the “other” process is Pj):

do {
    while (turn != i) ; // wait while it is not your turn
    critical section
    turn = j; // allow the “other” process to enter after exiting the CS
    remainder section
} while (1);

Satisfies mutual exclusion, but not progress:

If Pj decides not to re-enter, or crashes outside its CS, then Pi can never get in.

A process cannot make consecutive entries to its CS even if the “other” process is not in its CS: the other process prevents it from entering while not itself in a CS, which is a “no-no”.

Algorithm 2

Shared variables

boolean flag[2]; // “interest” bits; initially flag[0] = flag[1] = false

flag[i] = true means Pi declares interest in entering its critical section

Process Pi (where the “other” process is Pj):

do {
    flag[i] = true; // declare your own interest
    while (flag[j]) ; // wait if the other guy is interested
    critical section
    flag[i] = false; // declare that you lost interest; allows the other guy to enter
    remainder section
} while (1);

Satisfies mutual exclusion, but not the progress requirement.

If flag[i] == flag[j] == true, then deadlock: no progress. Barring this event, a process outside its CS cannot block you from entering.

Can make consecutive re-entries to the CS if the other process is not interested.

Uses “mutual courtesy”

Algorithm 3

Peterson’s solution

Combined shared variables & approaches of algorithms 1 and 2.

Process Pi (where the “other” process is Pj):

do {
    flag[i] = true; // declare your interest to enter
    turn = j; // assume it is the other’s turn: give Pj a chance
    while (flag[j] && turn == j) ;
    critical section
    flag[i] = false;
    remainder section
} while (1);

Meets all three requirements; solves the critical-section problem for two processes (almost the best of all worlds!).

The turn variable breaks any deadlock possibility of the previous example, AND prevents “hogging”: Pi setting turn to j gives Pj a chance after each pass through Pi’s CS.

The flag[] variable prevents a process from getting locked out if the other guy never re-enters or crashes outside its CS, and allows consecutive CS access if the other is not interested in entering.

Down side is that waits are spin loops.

Question: what if setting flag[i] or turn is not atomic?
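One way to experiment with this question is to code Peterson's solution with C11 atomics, which make every load and store of flag[] and turn indivisible and sequentially consistent. With plain variables, a modern compiler or CPU may reorder these accesses and break the algorithm. The sketch assumes C11 and POSIX threads; peterson_enter, peterson_exit, worker, run_peterson, shared_total and ROUNDS are names invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define ROUNDS 20000

static atomic_bool flag[2];   /* "interest" bits */
static atomic_int turn;
static long shared_total;     /* plain variable, protected by the lock */

/* Entry section for process i (the other process is j = 1 - i). */
static void peterson_enter(int i) {
    int j = 1 - i;
    atomic_store(&flag[i], true);   /* declare interest */
    atomic_store(&turn, j);         /* give the other process a chance */
    while (atomic_load(&flag[j]) && atomic_load(&turn) == j)
        ;                           /* spin */
}

/* Exit section for process i. */
static void peterson_exit(int i) {
    atomic_store(&flag[i], false);
}

static void *worker(void *arg) {
    int i = *(int *)arg;
    for (int n = 0; n < ROUNDS; n++) {
        peterson_enter(i);
        shared_total++;             /* critical section */
        peterson_exit(i);
    }
    return NULL;
}

/* Runs P0 and P1 and returns the total number of CS entries counted. */
long run_peterson(void) {
    pthread_t t[2];
    int id[2] = {0, 1};
    shared_total = 0;
    atomic_store(&flag[0], false);
    atomic_store(&flag[1], false);
    atomic_store(&turn, 0);
    for (int k = 0; k < 2; k++) {
        int rc = pthread_create(&t[k], NULL, worker, &id[k]);
        assert(rc == 0);
        (void)rc;
    }
    for (int k = 0; k < 2; k++)
        pthread_join(t[k], NULL);
    return shared_total;
}
```

If mutual exclusion holds, no increment of shared_total is lost, so the result equals 2 * ROUNDS. The waits are still spin loops, as noted above.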

Bakery Algorithm

… or the Supermarket deli algorithm

Critical section for n processes

Before entering its critical section, process receives a number. Holder of the smallest number enters the critical section.

If processes Pi and Pj receive the same number, then if i < j, Pi is served first; else Pj is served first.

The numbering scheme always generates numbers in nondecreasing order of enumeration; i.e., 1, 2, 3, 3, 3, 3, 4, 5, ...

Bakery Algorithm

Notation: < denotes lexicographical order on pairs (ticket #, process id #)

$(a, b) < (c, d)$ if $a < c$, or if $a = c$ and $b < d$

$\max(a_0, \ldots, a_{n-1})$ is a number $k$ such that $k \ge a_i$ for $i = 0, \ldots, n - 1$

Shared data:

boolean choosing[n];
int number[n];

The data structures are initialized to false and 0, respectively.
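The two pieces of notation can be written as small C helpers. This is a sketch of the notation only, not of the full algorithm; ticket_less and max_ticket are hypothetical names chosen for this example.

```c
#include <assert.h>
#include <stdbool.h>

/* (a, b) < (c, d): compare ticket numbers first, process ids on a tie. */
bool ticket_less(int a, int b, int c, int d) {
    return a < c || (a == c && b < d);
}

/* max(a_0, ..., a_{n-1}): the largest ticket number currently held. */
int max_ticket(const int number[], int n) {
    assert(n > 0);
    int k = number[0];
    for (int i = 1; i < n; i++)
        if (number[i] > k)
            k = number[i];
    return k;
}
```

For example, with tickets (3, 1) and (3, 2) the process with id 1 goes first, because the ticket numbers tie and 1 < 2. A process that takes a new number receives max_ticket(number, n) + 1.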

Bakery Algorithm for Process Pi

do { /* has four states: choosing, scanning, CS, remainder section */
    choosing[i] = true; // process does not “compete” while choosing a number
    number[i] = max(number[0], number[1], …, number[n – 1]) + 1;
    choosing[i] = false;

    /* scan all processes to see if Pi has the lowest number: */
    for (k = 0; k < n; k++) { /* check process k; the book uses dummy variable “j” */
        while (choosing[k]) ;
        /* if Pk is choosing a number, wait until it is done */

        while ((number[k] != 0) && ((number[k], k) < (number[i], i))) ;
        /* if Pk is waiting (or in its CS) and Pk is “ahead” of Pi, then Pi waits */

        /* if Pk is not in its CS and is not waiting, OR Pk is waiting with a larger
           number, then skip over Pk; when Pi gets to the end of the scan, it will
           have the lowest number and will fall through to the CS */

        /* if Pi is waiting on Pk, then number[k] will go to 0 because Pk will
           eventually get served, thus causing Pi to break out of the while loop
           and check out the status of the next process, if any */
    }
    /* the while loop skips over the case k == i */

    critical section
    number[i] = 0; /* no longer a candidate for CS entry */
    remainder section
} while (1);

Synchronization Hardware

Test and Set

target is the lock: target == 1 means the CS is locked, else open

Test and modify the content of a word atomically.

boolean TestAndSet(boolean &target) { // target is passed by reference
    boolean rv = target;
    target = true;
    return rv; // return the original value of target
}

See “alternate definition” in “Principles of Concurrency” notes

If target == 1 (locked), target stays 1 and 1 is returned: “wait”.

If target == 0 (unlocked), target is set to 1 (lock the door) and 0 is returned: “enter CS”.

Mutual Exclusion with Test-and-Set

Shared data:

boolean lock = false; // if lock == 0, door open; if lock == 1, door locked

Process Pi:

do {
    while (TestAndSet(lock)) ;
    critical section
    lock = false;
    remainder section
} while (1);

See Flow chart (“Alternate Definition” ) in Instructors notes:

“Concurrency and Mutual exclusion”, Hardware Support for details on how this works.
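C11 exposes a test-and-set primitive directly: atomic_flag_test_and_set sets the flag and returns its previous value in one indivisible step. The sketch below uses it as the lock from this slide. It assumes C11 and POSIX threads; worker, run_spinlock, shared_total and ROUNDS are names invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

#define ROUNDS 50000

static atomic_flag lock = ATOMIC_FLAG_INIT;  /* clear == door open */
static long shared_total;                    /* protected by lock */

static void *worker(void *arg) {
    (void)arg;
    for (int n = 0; n < ROUNDS; n++) {
        while (atomic_flag_test_and_set(&lock))
            ;                                /* returned true: door locked, spin */
        shared_total++;                      /* critical section */
        atomic_flag_clear(&lock);            /* lock = false */
    }
    return NULL;
}

/* Runs nthreads workers (1 to 8) and returns the number of CS entries counted. */
long run_spinlock(int nthreads) {
    pthread_t t[8];
    assert(nthreads >= 1 && nthreads <= 8);
    shared_total = 0;
    for (int k = 0; k < nthreads; k++) {
        int rc = pthread_create(&t[k], NULL, worker, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int k = 0; k < nthreads; k++)
        pthread_join(t[k], NULL);
    return shared_total;
}
```

Unlike Peterson's solution, this works for any number of threads, but nothing bounds how long one particular thread may keep losing the race for the lock.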

Synchronization Hardware swap

Atomically swap two variables.

void Swap(boolean &a, boolean &b) {
    boolean temp = a;
    a = b;
    b = temp;
}

Mutual Exclusion with Swap for process Pi

Shared data (initialized to false):

boolean lock = false; /* shared variable - global */
// if lock == 0, door open; if lock == 1, door locked

Private data:

boolean key_i = true;

Process Pi:

do {
    key_i = true; /* not needed if swap used after CS exit */
    while (key_i == true)
        Swap(lock, key_i);
    critical section /* remember key_i is now false */
    lock = false; /* can also use: Swap(lock, key_i); */
    remainder section
} while (1);

See Flow chart (“Alternate Definition” ) in Instructors notes:

“Concurrency and Mutual exclusion”, Hardware Support for details on how this works.

NOTE: The above applications of mutual exclusion, using both test-and-set and swap, do not satisfy the bounded-waiting requirement; see book pp. 199-200 for a resolution.

Semaphores

Synchronization tool that does not require busy waiting.

Semaphore S: an integer variable that can only be accessed via two indivisible (atomic) operations:

wait(S):
    while (S ≤ 0) do no-op;
    S--;

signal(S):
    S++;

Note: Test before decrement

Normally never negative

No queue is used (see later)

What if more than 1 process is waiting on S and a signal occurs?

Employs a spin loop (see later)

Critical Section of n Processes

Shared data:

semaphore mutex; // initially mutex = 1

Process Pi:

do {
    wait(mutex);
    critical section
    signal(mutex);
    remainder section
} while (1);

Semaphore Implementation

Get rid of the spin loop, add a queue, and increase functionality (counting semaphore)

Used in UNIX

Define a semaphore as a record:

typedef struct {
    int value;
    struct process *L;
} semaphore;

Assume two simple operations:

block(P) suspends (blocks) the process P.

wakeup(P) resumes the execution of a blocked process P.

Must be atomic

Implementation - as in UNIX

Semaphore operations called by process P are now defined as:

wait(S):
    S.value--; // Note: decrement before test
    if (S.value < 0) {
        add this process to S.L;
        block(P); // block the process calling wait(S)
    }
    // “door” is locked if S.value <= 0

signal(S):
    S.value++;
    if (S.value <= 0) { // necessary condition for non-empty queue
        remove a process P from S.L;
        wakeup(P); // move P to ready queue
    }
    /* use some algorithm for choosing a process to remove from the queue, e.g., FIFO */

Implementation - continued

Compare to original “classical” definition:

Allows for negative values (“counting” semaphore)

Absolute value of negative value is number of processes waiting in the semaphore

Positive value is the number of processes that can call wait and not block

Wait() blocks on negative

Wait() decrements before testing

Remember: signal moves a process to the ready queue, and not necessarily to immediate execution

How is something this complex made atomic?

Most common: disable interrupts

Use some SW techniques such as Peterson’s solution inside wait and signal – spin-loops would be very short (wait/signal calls are brief) – see p. 204 … different than the spin-loops in classical definition!
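The bookkeeping of this implementation (decrement before test, negative value equals the number of waiters) can be checked with a small single-threaded model that only counts and does not really block anything. model_sem, model_wait and model_signal are hypothetical names for this sketch; a real implementation would also have to make each operation atomic, as discussed above.

```c
#include <assert.h>

/* Model of the record-style semaphore: value plus the length of queue L. */
typedef struct {
    int value;
    int queued;   /* number of processes that would be blocked in S.L */
} model_sem;

/* wait(S): decrement first, then test. Returns 1 if the caller would block. */
int model_wait(model_sem *s) {
    s->value--;
    if (s->value < 0) {
        s->queued++;          /* add this process to S.L; block */
        return 1;
    }
    return 0;
}

/* signal(S): increment, then wake one waiter if any. Returns 1 if one is woken. */
int model_signal(model_sem *s) {
    s->value++;
    if (s->value <= 0) {
        assert(s->queued > 0);   /* value <= 0 after ++ implies a waiter exists */
        s->queued--;             /* remove a process from S.L; wakeup */
        return 1;
    }
    return 0;
}
```

Starting from value = 1, three calls to model_wait leave value = -2 with two processes queued, which matches the rule that the absolute value of a negative count is the number of waiting processes.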

Implementation - continued

The counting semaphore has two fundamental applications:

Mutual exclusion for solving the “CS” problem

Controlling access to resources with limited availability, for example in the bounded buffer problem:

block consumer if the buffer empty

block the producer if the buffer is full.

Both applications are used for the bounded buffer problem

Semaphore as a General Synchronization Tool

Execute B in P j only after A executed in P i

Serialize these events (guarantee determinism) :

Use semaphore flag initialized to 0

Code:

Pi:
    A
    signal(flag);

Pj:
    wait(flag);
    B
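The same ordering can be tried out with POSIX unnamed semaphores, where sem_post plays the role of signal and sem_wait the role of wait. The sketch assumes a POSIX system that supports sem_init (for example Linux); process_i, process_j, run_ordered and event_log are names invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

static sem_t flag;           /* initialized to 0 in run_ordered */
static int event_log[2];     /* records the order in which A and B ran */
static int events;

static void *process_i(void *arg) {
    (void)arg;
    event_log[events++] = 'A';   /* statement A */
    sem_post(&flag);             /* signal(flag) */
    return NULL;
}

static void *process_j(void *arg) {
    (void)arg;
    sem_wait(&flag);             /* wait(flag): blocks until A has run */
    event_log[events++] = 'B';   /* statement B */
    return NULL;
}

/* Starts Pj before Pi on purpose. Returns 1 if A still ran before B. */
int run_ordered(void) {
    pthread_t ti, tj;
    int rc;
    events = 0;
    rc = sem_init(&flag, 0, 0);
    assert(rc == 0);
    rc = pthread_create(&tj, NULL, process_j, NULL);
    assert(rc == 0);
    rc = pthread_create(&ti, NULL, process_i, NULL);
    assert(rc == 0);
    (void)rc;
    pthread_join(ti, NULL);
    pthread_join(tj, NULL);
    sem_destroy(&flag);
    return events == 2 && event_log[0] == 'A' && event_log[1] == 'B';
}
```

Because flag starts at 0, Pj cannot get past its wait until Pi has executed A and signaled, regardless of which thread is started or scheduled first.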

Deadlock and Starvation

Although semaphores can solve the “CS” problem, they can still be misused and cause deadlock or starvation.

Deadlock – two or more processes are waiting indefinitely for an event that can be caused by only one of the waiting processes.

Let S and Q be two semaphores initialized to 1

P0:
    (1) wait(S);
    (3) wait(Q);
    signal(S);
    signal(Q);

P1:
    (2) wait(Q);
    (4) wait(S);
    signal(Q);
    signal(S);

The sequence 1, 2, 3, 4 causes deadlock.

But the sequence 1, 3, 2, 4 will not deadlock.

Starvation – indefinite blocking. A process may never be removed from the semaphore queue in which it is suspended.

Can be avoided by using FIFO queue discipline.

Two Types of Semaphores

Counting semaphore – integer value can range over an unrestricted domain.

Binary semaphore – integer value can range only between 0 and 1; can be simpler to implement.

A counting semaphore S can be implemented using binary semaphores.

Binary Semaphore Definition

struct b-semaphore {
    int value; /* boolean, only 0 or 1 allowed */
    struct process queue;
}

void wait-b(b-semaphore s) { /* see alternate def. below */
    if (s.value == 1)
        s.value = 0; // lock the “door” & let the process enter the CS
    else {
        place this process in s.queue and block it;
    }
    // no indication of size of queue ... compare to general semaphore
} // wait unconditionally leaves b-semaphore value at 0

void signal-b(b-semaphore s) {
    // s.value == 0 is a necessary but not sufficient condition for an empty queue
    if (s.queue is empty)
        s.value = 1;
    else
        move a process from s.queue to ready list;
    // if only 1 proc in queue, leave value at 0; the “moved” proc will go to the CS
}

*********************************************************************

Alternate definition of wait-b (simpler):

void wait-b(b-semaphore s) {
    if (s.value == 0) {
        place this process in s.queue and block it;
    }
    s.value = 0; // value was 1, set to 0
}
// compare to test & set

Implementing Counting Semaphore S using Binary Semaphores

Data structures:

binary-semaphore S1, S2;
int C;

Initialization:

S1 = 1
S2 = 0
C = initial value of semaphore S

Implementing Counting Semaphore S using Binary Semaphores (continued)

wait operation:

wait(S1);
C--;
if (C < 0) {
    signal(S1);
    wait(S2);
} // else?
signal(S1);

signal operation:

wait(S1); // should this be a “different” S1?
C++;
if (C <= 0)
    signal(S2);
else // should this else be removed?
    signal(S1);

See a typical example of semaphore operation given in “ Chapter 7 notes by Guydosh ” posted along with these notes.

Classical Problems of Synchronization

Bounded-Buffer Problem

Readers and Writers Problem

Dining-Philosophers Problem

Bounded-Buffer Problem

Uses both aspects of a counting semaphore:

Mutual exclusion: the mutex semaphore

Limited resource control: full and empty semaphores

Shared data: semaphore full, empty, mutex; where:

full represents the number of “full” (used) slots in the buffer

empty represents the number of “empty” (unused) slots in the buffer

mutex is used for mutual exclusion in the CS

Initially: full = 0, empty = n, mutex = 1 /* buffer empty, “door” is open */

Bounded-Buffer Problem Producer Process

do {
    …
    produce an item in nextp
    …
    wait(empty);
    wait(mutex);
    …
    add nextp to buffer
    …
    signal(mutex);
    signal(full);
} while (1);

Question: what may happen if the mutex wait/signal calls were done before and after the corresponding calls for empty and full respectively?

Bounded-Buffer Problem Consumer Process

do {
    wait(full);
    wait(mutex);
    …
    remove an item from buffer to nextc
    …
    signal(mutex);
    signal(empty);
    …
    consume the item in nextc
    …
} while (1);

Same Question: what may happen if the mutex wait/signal calls were done before and after the corresponding calls for empty and full respectively?

See the trace of a bounded buffer scenario given in “ Chapter 7 notes by Guydosh ” posted along with these notes.
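The producer and consumer above map almost line for line onto POSIX semaphores. The sketch below assumes a POSIX system that supports unnamed semaphores via sem_init (for example Linux) and uses integers as items; BUFFER_SIZE is taken from the earlier slides, while ITEMS, consumed_sum and run_bounded_buffer are names invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

#define BUFFER_SIZE 10
#define ITEMS 1000

static int buffer[BUFFER_SIZE];
static int in, out;
static sem_t full, empty, mutex;
static long consumed_sum;          /* touched only by the consumer thread */

static void *producer(void *arg) {
    (void)arg;
    for (int item = 1; item <= ITEMS; item++) {   /* produce an item */
        sem_wait(&empty);                         /* wait(empty) */
        sem_wait(&mutex);                         /* wait(mutex) */
        buffer[in] = item;                        /* add item to buffer */
        in = (in + 1) % BUFFER_SIZE;
        sem_post(&mutex);                         /* signal(mutex) */
        sem_post(&full);                          /* signal(full) */
    }
    return NULL;
}

static void *consumer(void *arg) {
    (void)arg;
    for (int n = 0; n < ITEMS; n++) {
        sem_wait(&full);                          /* wait(full) */
        sem_wait(&mutex);                         /* wait(mutex) */
        int item = buffer[out];                   /* remove an item */
        out = (out + 1) % BUFFER_SIZE;
        sem_post(&mutex);                         /* signal(mutex) */
        sem_post(&empty);                         /* signal(empty) */
        consumed_sum += item;                     /* consume the item */
    }
    return NULL;
}

/* Runs one producer and one consumer; returns the sum of all consumed items. */
long run_bounded_buffer(void) {
    pthread_t p, c;
    int rc;
    in = out = 0;
    consumed_sum = 0;
    rc = sem_init(&full, 0, 0);             assert(rc == 0);
    rc = sem_init(&empty, 0, BUFFER_SIZE);  assert(rc == 0);
    rc = sem_init(&mutex, 0, 1);            assert(rc == 0);
    rc = pthread_create(&p, NULL, producer, NULL);  assert(rc == 0);
    rc = pthread_create(&c, NULL, consumer, NULL);  assert(rc == 0);
    (void)rc;
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&full);
    sem_destroy(&empty);
    sem_destroy(&mutex);
    return consumed_sum;
}
```

The producer sends 1 through ITEMS, so if no item is lost or duplicated the consumer's sum is ITEMS * (ITEMS + 1) / 2. Note that all BUFFER_SIZE slots are usable here, unlike the n – 1 limit of the earlier shared-memory solution.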

Readers-Writers Problem

Semaphores used purely for mutual exclusion

No limits on writing or reading the buffer

Uses a single writer, and multiple readers

Multiple readers allowed in CS

Readers have priority over writers for these slides.

As long as there is a reader(s) in the CS, no writer may enter

– CS must be empty for a writer to get in

An alternate (more fair approach) is: even if readers are in the CS, no more readers will be allowed in if a writer “declares an interest” to get into the CS

– this is the writer preferred approach. It is discussed in more detail in Guydosh’s notes: “Readers-Writers problems Scenario” on the website.

Shared data:

semaphore mutex, wrt;
int readcount;

Initially mutex = 1, wrt = 1, readcount = 0 /* all “doors” open, no readers in */

Readers-Writers Problem Writer Process

wait(wrt);
…
writing is performed
…
signal(wrt);

Readers-Writers Problem Reader Process

wait(mutex); // 2nd, 3rd, … readers queue on mutex if the 1st reader is
             // waiting for a writer to leave (blocked on wrt);
             // also guards the modification of readcount
readcount++;
if (readcount == 1)
    wait(wrt); // 1st reader blocks if any writer is in the CS
signal(mutex); // 1st reader is in: open the “floodgates” for its companions
…
reading is performed
…
wait(mutex); // guards the modification of readcount
readcount--;
if (readcount == 0)
    signal(wrt); // last reader out lets any queued writers in
signal(mutex);

See Guydosh’s notes: “Readers-Writers problems Scenario” (website) for a detailed trace of this algorithm.

Dining-Philosophers Problem

Shared data semaphore chopstick[5];

Initially all values are 1

[Figure: process (philosopher) i sits between chopstick i and chopstick i+1]

Dining-Philosophers Problem

Philosopher i:

do {
    wait(chopstick[i]); // left chopstick
    wait(chopstick[(i+1) % 5]); // right chopstick
    …
    eat
    …
    signal(chopstick[i]);
    signal(chopstick[(i+1) % 5]);
    …
    think
    …
} while (1);

This scheme guarantees mutual exclusion but can deadlock: what if all 5 philosophers get hungry simultaneously and all grab their left chopstick at the same time? See the monitor implementation for a deadlock-free solution (sect. 7.7).

Critical Regions - omit

High-level synchronization construct

A shared variable v of type T , is declared as: v: shared T

Variable v accessed only inside statement region v when B do S where B is a boolean expression.

While statement S is being executed, no other process can access variable v .

Critical Regions - omit

Regions referring to the same shared variable exclude each other in time.

When a process tries to execute the region statement, the Boolean expression B is evaluated. If B is true, statement S is executed. If it is false, the process is delayed until B becomes true and no other process is in the region associated with v.

Critical Regions Example – Bounded Buffer - omit

Shared data:

struct buffer {
    int pool[n];
    int count, in, out;
}

Critical Regions Bounded Buffer Producer Process - omit

Producer process inserts nextp into the shared buffer:

region buffer when (count < n) {
    pool[in] = nextp;
    in = (in+1) % n;
    count++;
}

Critical Regions Bounded Buffer Consumer Process - omit

Consumer process removes an item from the shared buffer and puts it in nextc:

region buffer when (count > 0) {
    nextc = pool[out];
    out = (out+1) % n;
    count--;
}

Critical Regions: Implementation region x when B do S - omit

Associate with the shared variable x , the following variables: semaphore mutex, first-delay, second-delay; int first-count, second-count;

Mutually exclusive access to the critical section is provided by mutex .

If a process cannot enter the critical section because the Boolean expression B is false, it initially waits on the first-delay semaphore; it is moved to the second-delay semaphore before it is allowed to reevaluate B.

Critical Regions: Implementation – omit

Keep track of the number of processes waiting on first-delay and second-delay, with first-count and second-count respectively.

The algorithm assumes a FIFO ordering in the queuing of processes for a semaphore.

For an arbitrary queuing discipline, a more complicated implementation is required.

Monitors

High-level synchronization construct that allows the safe sharing of an abstract data type among concurrent processes.

Closely related to Pthread synchronization (see later).

monitor monitor-name
{
    // procedures internal to the monitor
    shared variable declarations
    procedure body P1 (…) {
        . . .
    }
    procedure body P2 (…) {
        . . .
    }
    procedure body Pn (…) {
        . . .
    }
    {
        initialization code
    }
}

Monitors

Rationale for the properties of a monitor :

Mutual exclusion: A monitor implements mutual exclusion by allowing only one process at a time to execute in one of the internal procedures .

Calling a monitor procedure will automatically cause the caller to block in the entry queue if the monitor is occupied, else it will let it in.

On exiting the monitor, another waiting process will be allowed in.

Management, inside the monitor, of a limited resource that may not always be available for use:

A process can unconditionally block on a “condition variable” (CV) by calling a wait on this CV. This also allows another process to enter the monitor while the process calling the wait is blocked.

When a process using a resource protected by a CV is done with it, it will signal on the associated CV to allow another process waiting on this CV to use the resource.

The signaling process must “get out of the way” and yield to the released process receiving the signal by blocking. This preserves mutual exclusion in the monitor (Hoare’s scheme).

Monitors

Summary

To allow a process to wait within the monitor, a condition variable must be declared, as:

condition x, y; // condition variables

A condition variable can only be used with the operations wait and signal.

The operation x.wait(); means that the process invoking this operation is unconditionally suspended until another process invokes x.signal();

The x.signal operation resumes exactly one suspended process. If no process is suspended, then the signal operation has no effect.

Schematic View of a Monitor

[Figure: schematic view of a monitor, showing the entry queue]

Monitor With Condition Variables

The entry queue is for mutual exclusion.

Condition variable queues may be used for resource management; they hold the processes waiting on a condition variable.

Dining Philosophers Example

monitor dp
{
    enum {thinking, hungry, eating} state[5];
    condition self[5];
    void pickup(int i) // following slides
    void putdown(int i) // following slides
    void test(int i) // following slides
    void init() {
        for (int i = 0; i < 5; i++)
            state[i] = thinking;
    }
}

Philosopher i code (written by user):

loop at random times {
    think
    dp.pickup(i) // simply calling a monitor procedure invokes mutual exclusion automatically
    …
    eat;
    ...
    dp.putdown(i)
}

Dining Philosophers

void pickup(int i) {
    state[i] = hungry;
    test(i); // if neighbors are not eating, set own state to eating;
             // the signal will go to waste, since i is not blocked
    if (state[i] != eating)
        self[i].wait(); // test showed a neighbor was eating: wait
}

void putdown(int i) {
    state[i] = thinking;
    // test left and right neighbors … gives them a chance to eat
    test((i+4) % 5); // signal left neighbor if she is hungry
    test((i+1) % 5); // signal right neighbor if she is hungry
}

Dining Philosophers

Does more than passively test: changes state and causes a philosopher to start eating.

void test(int i) {
    if ((state[(i + 4) % 5] != eating) && // left neighbor
        (state[i] == hungry) && // the process being tested
        (state[(i + 1) % 5] != eating)) { // right neighbor
        state[i] = eating;
        self[i].signal(); // i starts eating if blocked; self[i] is a condition variable
    } // else do nothing
}
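Since monitors are closely related to Pthread synchronization, the dp monitor can be approximated in C with one mutex acting as the monitor's entry lock and one pthread condition variable per philosopher. This is a sketch under stated assumptions, not the slides' monitor: Pthread condition variables do not follow Hoare's scheme (the signaler keeps running), so pickup rechecks its state in a while loop. N, MEALS, meals_eaten, violations and run_dinner are names invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define N 5
#define MEALS 200

enum { THINKING, HUNGRY, EATING };

static pthread_mutex_t monitor = PTHREAD_MUTEX_INITIALIZER; /* monitor entry lock */
static pthread_cond_t self[N];
static int state[N];
static int meals_eaten;
static int violations;   /* times someone ate while a neighbor was eating */

/* Must be called with the monitor lock held. */
static void test(int i) {
    if (state[(i + 4) % N] != EATING && state[i] == HUNGRY &&
        state[(i + 1) % N] != EATING) {
        state[i] = EATING;
        pthread_cond_signal(&self[i]);   /* wakes i if it is blocked */
    }
}

static void pickup(int i) {
    pthread_mutex_lock(&monitor);
    state[i] = HUNGRY;
    test(i);
    while (state[i] != EATING)           /* recheck after every wakeup */
        pthread_cond_wait(&self[i], &monitor);
    if (state[(i + 4) % N] == EATING || state[(i + 1) % N] == EATING)
        violations++;
    meals_eaten++;
    pthread_mutex_unlock(&monitor);
}

static void putdown(int i) {
    pthread_mutex_lock(&monitor);
    state[i] = THINKING;
    test((i + 4) % N);                   /* left neighbor */
    test((i + 1) % N);                   /* right neighbor */
    pthread_mutex_unlock(&monitor);
}

static void *philosopher(void *arg) {
    int i = *(int *)arg;
    for (int m = 0; m < MEALS; m++) {
        pickup(i);    /* eat */
        putdown(i);   /* think */
    }
    return NULL;
}

/* Returns the total number of meals, or -1 if two neighbors ever ate together. */
int run_dinner(void) {
    pthread_t t[N];
    int id[N];
    meals_eaten = 0;
    violations = 0;
    for (int i = 0; i < N; i++) {
        state[i] = THINKING;
        pthread_cond_init(&self[i], NULL);
    }
    for (int i = 0; i < N; i++) {
        id[i] = i;
        int rc = pthread_create(&t[i], NULL, philosopher, &id[i]);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < N; i++)
        pthread_join(t[i], NULL);
    for (int i = 0; i < N; i++)
        pthread_cond_destroy(&self[i]);
    return violations == 0 ? meals_eaten : -1;
}
```

Every philosopher finishes all of its meals, so the run terminates instead of deadlocking, and the violations counter stays at zero because test only lets i eat when neither neighbor is eating.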

Monitor Implementation Using Semaphores

Variables:

semaphore mutex; // (initially = 1) for mutual exclusion on entry
semaphore next; // (initially = 0) signaler waits on this
int next-count = 0; // # processes (signalers) suspended on next

Each external procedure F will be replaced by (i.e., put in the monitor as):

// entering the monitor:
wait(mutex); // lemme into the monitor! block if occupied
…
body of F; // same as a critical section
...
// exiting the monitor:
if (next-count > 0) // any signalers suspended on next?
    signal(next); // let suspended signalers in first
                  // (same as getting out of line to fill something out at the DMV, maybe)
else
    signal(mutex); // then let in any new guys

// Above is the overall monitor:

Mutual exclusion within a monitor is ensured.

Monitor Implementation

For each condition variable x, we have:

semaphore x-sem; // (initially = 0) causes a wait on x to block
int x-count = 0; // number of processes waiting on CV x

The operation x.wait can be implemented as:

x-count++; // time for a nap
if (next-count > 0) // any signalers queued up?
    signal(next); // release a waiting signaler first
else
    signal(mutex); // else let a new guy in
wait(x-sem); // unconditionally block on the CV
x-count--; // I’m outa here

Monitor Implementation

The operation x.signal can be implemented as:

if (x-count > 0) { // do nothing if nobody is waiting on the CV
    next-count++; // I (a signaler) am gonna be suspended on next
    signal(x-sem); // release a process suspended on the CV
    wait(next); // get out of the way of the released process (Hoare)
    next-count--; // released process is done & it signaled me in
}

Monitor Implementation - optional

Conditional-wait construct: x.wait(c);

c: integer expression evaluated when the wait operation is executed.

The value of c (a priority number) is stored with the name of the process that is suspended.

When x.signal is executed, the process with the smallest associated priority number is resumed next.

Check two conditions to establish correctness of system:

User processes must always make their calls on the monitor in a correct sequence.

Must ensure that an uncooperative process does not ignore the mutual-exclusion gateway provided by the monitor, and try to access the shared resource directly, without using the access protocols.

Solaris 2 Synchronization

Implements a variety of locks to support multitasking, multithreading (including real-time threads), and multiprocessing.

Uses adaptive mutexes for efficiency when protecting data from short code segments.

Uses condition variables and readers-writers locks when longer sections of code need access to data.

Uses turnstiles to order the list of threads waiting to acquire either an adaptive mutex or reader-writer lock.

Windows 2000 Synchronization

Uses interrupt masks to protect access to global resources on uniprocessor systems.

Uses spinlocks on multiprocessor systems.

Also provides dispatcher objects, which may act as either mutexes or semaphores.

Dispatcher objects may also provide events. An event acts much like a condition variable.