Massive Parallelism

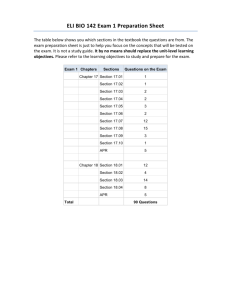

• Brief History: Major Computers/Developers, Technologies
• Modern Uses: Tasks Performed, Relevant Fields
• Architecture: Massive Parallelism, Hybrid Architecture
• IBM Watson: Hardware, Artificial Intelligence
• CRAY XK6: Hardware, Gemini Interconnect

[Chart: Power of Historical Computers (FLOPS), logarithmic axis from 1E+06 to 1E+16]
* Numbers from Extremetech.com and IPSJ Computer Museum

1970/80s
• Control Data Corporation
• Cray Research
• (NASA)

1990s
• Fujitsu
• NEC
• Hitachi
• Intel
• Off-the-shelf Development

2000s
• IBM
• China

PRIMARY USES

Research Tools
• Large amounts of data

Simulations & Models
• Nuclear weapons
• Modeling movement of atoms and molecules

Brute Force Calculations
• Decryption

SUPERCOMPUTERS are used in a variety of fields

High Performance
• High FLOPS
• Gigaflops

High Reliability
• Low Average Temperature
• Stable State
• Error Recovery Function
• Little to no interruption of instructions
• Redundancy

Low Power Consumption
• Smaller Chips
• 45 nanometer or less
• Individual CPU power consumption is important to whole system

3-4 GHz limit on processors
To increase performance…

PARALLELISM
• We had to re-think the way we wrote programs
• Sequential code vs. Parallel code
• Performance limited by sequential code
• Amdahl’s Law
• Supercomputers have many hardware components
• Applications must be designed for massive parallelism

1970s
• Multiple vector processors

1980s
• Many single-core processors
• Massively parallel

1990s-now
• Computer clusters of x86-based servers
• Many multicore processors

MASSIVE PARALLELISM

CPU ONLY
• Thousands of multi-core processors
• Massively Parallel

HYBRID
• Thousands of multi-core processors
• Accelerators: General Purpose Graphics Accelerators (GPGPU)
• Millions of cores including both CPU and GPU cores

General Purpose Graphics Accelerators
• More Processing Cores
• Power Efficiency
• Higher Performance
• Low Cost
• Difficult to Program For

IBM Watson
• Won Jeopardy!
• Greatest technological breakthrough of the century
• Natural Language Processing (NLP)
• Decision support tool
• Paradigm shift
• Implications for society
* Image Courtesy of IBM

Ninety IBM Power 750 Servers (ten server racks)
• 16 TB Main Memory
• 4 TB clustered storage
• Each server has four Power7 Processors
  o 8 Cores each
  o 3.55 GHz clock frequency
• 2880 Power7 Cores
• 80 Teraflops (trillion floating-point operations per second)

Workload Optimized
• Massively Parallel
• Tuned for the task

NLP Algorithms
• Unstructured Information Management Architecture (UIMA)
• C, C++, Java & Prolog

Artificial Intelligence
• Raw unstructured data
• Machine Learning
• Pattern Recognition

CRAY XK6: Gemini Interconnect
* Image Courtesy of Cray, Inc.
• Connects pairs of Compute Nodes
• Utilizes HyperTransport 3.0 Technology
• Eliminates PCI Bottlenecks
• Peak of 20 GB/s bandwidth per node
• Provides 48 Port Router
• Provides 4 Lanes per Compute Node with ECC
• Auto-detects errors in Lanes
• Utilizes Connectionless Protocol
  o Scales from 100s to 100,000s of compute nodes
  o No increase in buffer
* Image Courtesy of Cray, Inc.

• Connected via 3D Torus Link
• Supports Millions of MPI messages per Second
* Image Courtesy of NERSC
* Image Courtesy of Cray, Inc.
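As a rough illustration of the 3D torus link mentioned above, the sketch below computes a node's six wraparound neighbours and the minimum hop count between two nodes. It is a generic torus model, not Cray's routing logic: the function names, the `(x, y, z)` coordinate scheme, and the 4 x 4 x 4 dimensions are assumptions made for illustration, and the model treats every coordinate as one router with at least three positions per axis.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(node: Coord, dims: Coord) -> List[Coord]:
    """Return the six neighbours of `node` in a 3D torus of size `dims`.

    Each axis is a ring, so stepping past either edge wraps around.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            coord = list(node)
            # Modulo arithmetic provides the wraparound link on this axis.
            coord[axis] = (coord[axis] + step) % dims[axis]
            neighbors.append(tuple(coord))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum hop count from `a` to `b`, going the shorter way round each ring."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


if __name__ == "__main__":
    dims = (4, 4, 4)  # hypothetical torus size, chosen only for the example
    print(torus_neighbors((0, 0, 0), dims))
    print(torus_hops((0, 0, 0), (3, 3, 3), dims))
```

Because of the wraparound links, opposite corners of the hypothetical 4 x 4 x 4 torus are only three hops apart, where a plain 3D mesh of the same size would need nine.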
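The Parallelism slide notes that performance is limited by sequential code (Amdahl’s Law). A minimal sketch of that relation follows, using the usual form S = 1 / ((1 - p) + p / N), where p is the fraction of the work that can run in parallel and N is the number of cores. The function name and the 95% parallel fraction are hypothetical; 2880 is the Watson core count quoted on the hardware slide.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup under Amdahl's Law.

    parallel_fraction: share of the runtime that can be parallelized (0.0 to 1.0).
    workers: number of cores sharing the parallel part (at least 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is not sped up; the parallel part is divided among the workers.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical workload: 95% parallel code on 2880 cores.
    print(amdahl_speedup(0.95, 2880))
```

Under these assumptions the speedup stays just under 20 despite the 2880 cores, because the 5% sequential part caps it at 1 / 0.05 = 20. This is why applications must be designed for massive parallelism rather than simply run on more hardware.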
Hardware Supervisory System
• Manages Gemini Interconnects
• Blade WarmSwap Capability
• Unhealthy Node Removal
• Automatic System Diagnostics

Network Connectivity
• InfiniBand
• Fibre Channel
• 10 Gigabit Ethernet
• Ethernet

Cooling Options
• Air-Cooled
• ECOphlex Liquid Cooling

Disk Array Connectivity
• Fibre Channel
• SATA

Power
• 45-54.1 kW per cabinet
• 3-phase: 100 Amp at 480 Volt and 125 Amp at 400 Volt

Compute Node Memory
• Quad Channel 16 GB or 32 GB DDR3 per Processor
• 6 GB GDDR5 per GPU

System Cabinets
• Can support up to 96 Compute Nodes
• 3 TB of Main Memory
• 576 GB of GPU Memory
• Peak Performance of 70+ Teraflops

Complete System
• Scalable up to 500,000 Total Scalar Processing Cores
• Up to 50 Petaflops hybrid peak performance

Primary Uses
• Weather Modeling
• Fluid Dynamics
• Medical & Genetics
• Other

Massive Parallelism
• Sequential Code vs. Parallel Code
• Follows Amdahl’s Law

Various Architectures
• 1970s: Multiple Vector Processors
• 1980s: Many Single-Core Processors
• 1990s: Cluster, Many Multi-Core, Hybrid Systems

Today’s Supercomputers
• Hybrid Architectures
• More Processing Cores
• Power Efficiency, Higher Performance, Lower Cost

Future Trends
• Exaflop Supercomputers?

References

Anthony, Sebastian. "The History of Supercomputers." EXTREMETECH.com. Ziff Davis Media, 10 Apr. 2012. Web. 30 Apr. 2012. <http://www.extremetech.com/extreme/125271-the-history-of-supercomputers>.

Anthony, Sebastian. "What Can You Do with a Supercomputer?" EXTREMETECH.com. Ziff Davis Media, 15 Mar. 2012. Web. 23 Apr. 2012. <http://www.extremetech.com/extreme/122159-what-can-you-do-with-a-supercomputer/2>.

Betts, B. "Supercomputing To Go." Engineering & Technology (17509637) 7.2 (2012): 42-45. Academic Search Premier. Web. 13 Apr. 2012.

Barney, Blaise, Lawrence Livermore National Laboratory. (14 Feb. 2012). Message Passing Interface. Retrieved 29 Apr. 2012, from High Performance Computing: <https://computing.llnl.gov/tutorials/mpi/#What>.

Cray, Inc. (2012). Cray XK6 Specifications. Retrieved 29 Apr. 2012, from Cray, Inc.: <http://www.cray.com/Products/XK6/Specifications.aspx>.

Cray, Inc. (2012). Scalable Many-Core Performance. Retrieved 29 Apr. 2012, from Cray, Inc.: <http://www.cray.com/Products/XK6/Technology.aspx>.

Cray, Inc. - The Supercomputer Company - Products - Cray XK6 System. (2012). Retrieved 29 Apr. 2012, from Cray, Inc.: <http://www.cray.com/Products/XK6/XK6.aspx>.

CrayXK6Brochure.pdf. (2011). Retrieved 29 Apr. 2012, from Cray, Inc.: <http://www.cray.com/Assets/PDF/products/xk/CrayXK6Brochure.pdf>.

Edwards, C. "The Exascale Challenge." Engineering & Technology (17509637) 5.18 (2010): 53-55. Academic Search Premier. Web. 22 Apr. 2012.

Farber, Robert M. "Topical Perspective On Massive Threading And Parallelism." Journal Of Molecular Graphics & Modelling 30 (2011): 82-89. Academic Search Premier. Web. 22 Apr. 2012.

Geller, Tom. "Supercomputing's Exaflop Target." Communications Of The ACM 54.8 (2011): 16-18. Business Source Complete. Web. 13 Apr. 2012.

"Historical Computers in Japan: Supercomputers." IPSJ Computer Museum. Web. 30 Apr. 2012. <http://museum.ipsj.or.jp/en/computer/super/index.html>.

Hopper Interconnect. (2 Mar. 2012). Retrieved 29 Apr. 2012, from NERSC: National Energy Research Scientific Computing Center: <http://www.nersc.gov/users/computational-systems/hopper/configuration/interconnect/>.

Kroeker, Kirk L. "Weighing Watson's Impact." Communications Of The ACM 54.7 (2011): 13-15. Business Source Complete. Web. 20 Apr. 2012.

Manfield, Lisa. "Dr. Watson Will See You Now." Backbone (2011): 32-34. Business Source Complete. Web. 20 Apr. 2012.

Schor, Marshall. "Open Architecture Helps Watson Understand Natural Language." IBMRESEARCHNEWS.com. 6 Apr. 2011. Web. 29 Apr. 2012. <http://ibmresearchnews.blogspot.com/2011/04/open-architecture-helps-watson.html>.

"SPARC64 VIIIfx: A Fast, Reliable, Low-Power CPU." Fujitsu Global. Fujitsu. Web. 30 Apr. 2012. <http://www.fujitsu.com/global/about/tech/k/whatis/processor/>.

Smartest Machine on Earth. Dir. Nova. PBS. PBS, 9 Feb. 2011. Web. 23 Apr. 2012. <http://www.pbs.org/wgbh/nova/tech/smartest-machine-on-earth.html>.

Taft, Daryl K. "IBM's Watson: 'Jeopardy' Win Is Just The Beginning." Eweek 28.5 (2011): 22-23. Academic Search Premier. Web. 20 Apr. 2012.

Vaughan-Nichols, Steven J. "What Makes IBM's Watson Run?" ZDNET.com. CBS Interactive, 4 Feb. 2011. Web. 23 Apr. 2012. <https://www.zdnet.com/blog/open-source/what-makes-ibms-watson-run/8208>.

Willard, Chris. "Revisiting Supercomputer Architectures." HPCWIRE.com. Tabor Communications Inc., 8 Dec. 2011. Web. 26 Apr. 2012. <http://www.hpcwire.com/hpcwire/2011-12-08/revisiting_supercomputer_architectures.html>.