Slides 08

Lecture 08

27/12/2011

Shai Avidan

Note: the binding course material is what is taught in class, not what does or does not appear in the slides.

Today

• Hough Transform

• Generalized Hough Transform

• Implicit Shape Model

• Video Google

Hough Transform & Generalized Hough Transform

Hough Transform

• Origin: Detection of straight lines in clutter

– Basic idea: each candidate point votes for all lines that it is consistent with.

– Votes are accumulated in quantized array

– Local maxima correspond to candidate lines

• Representation of a line

– Usual form y = a x + b has a singularity around 90º.

– Better parameterization: x cos(θ) + y sin(θ) = ρ

[Figure: a line in the (x, y) plane with its parameters ρ and θ]

K. Grauman, B. Leibe
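The voting scheme above can be sketched in a few lines. This is a minimal illustration, not the lecture's implementation: the function name `hough_lines`, the bin counts `n_theta` and `n_rho`, and the toy point set are all assumptions. Here ρ is the signed distance of the line from the origin and θ is the angle of its normal.

```python
import numpy as np

def hough_lines(points, n_theta=180, n_rho=200):
    """Accumulate line votes for 2D points in a quantized (rho, theta) array."""
    pts = np.asarray(points, dtype=float)
    # Largest |rho| any point can produce (assumed choice of range).
    rho_max = np.hypot(pts[:, 0], pts[:, 1]).max() + 1e-9
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    rhos = np.linspace(-rho_max, rho_max, n_rho)
    acc = np.zeros((n_rho, n_theta), dtype=int)
    cols = np.arange(n_theta)
    for x, y in pts:
        # A point votes for every line through it: rho = x cos(theta) + y sin(theta).
        rho = x * np.cos(thetas) + y * np.sin(thetas)
        rows = np.round((rho + rho_max) / (2 * rho_max) * (n_rho - 1)).astype(int)
        acc[rows, cols] += 1
    return acc, rhos, thetas

# Toy input: 20 points on the vertical line x = 5, which y = a x + b cannot represent.
pts = [(5.0, float(y)) for y in range(20)]
acc, rhos, thetas = hough_lines(pts)
r, t = np.unravel_index(acc.argmax(), acc.shape)
best_rho, best_theta = rhos[r], thetas[t]
```

The strongest cell is the candidate line; its ρ is only recovered up to the width of one accumulator bin.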

Examples

– Hough transform for a square (left) and a circle (right)

Hough Transform: Noisy Line

• Problem: Finding the true maximum

[Figure: input tokens and the resulting votes in (θ, ρ) space]

Hough Transform: Noisy Input

• Problem: Lots of spurious maxima

[Figure: input tokens and the resulting votes in (θ, ρ) space]

Generalized Hough Transform

[Ballard81]

• Generalization for an arbitrary contour or shape

– Choose reference point for the contour (e.g. center)

– For each point on the contour remember where it is located w.r.t. the reference point

– Remember radius r and angle relative to the contour tangent

– Recognition: whenever you find a contour point, calculate the tangent angle and ‘vote’ for all possible reference points

– Instead of reference point, can also vote for transformation
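A minimal sketch of the lookup table behind these steps, under simplifying assumptions: translation only (no rotation or scale), displacements stored as (dx, dy) vectors rather than radius and angle, and tangent angles supplied directly instead of estimated from image gradients. The names `N_BINS`, `angle_bin`, `build_r_table` and `vote` are hypothetical.

```python
import numpy as np
from collections import defaultdict

N_BINS = 36  # assumed quantization of the tangent angle

def angle_bin(phi):
    """Quantize an angle in radians into one of N_BINS bins."""
    return int((phi % (2 * np.pi)) / (2 * np.pi) * N_BINS) % N_BINS

def build_r_table(contour, tangents, reference):
    """For each tangent-angle bin, remember displacements to the reference point."""
    table = defaultdict(list)
    for (x, y), phi in zip(contour, tangents):
        table[angle_bin(phi)].append((reference[0] - x, reference[1] - y))
    return table

def vote(points, tangents, table, shape):
    """Each contour point votes for all reference points stored under its angle bin."""
    acc = np.zeros(shape, dtype=int)
    for (x, y), phi in zip(points, tangents):
        for dx, dy in table.get(angle_bin(phi), []):
            col, row = int(round(x + dx)), int(round(y + dy))
            if 0 <= row < shape[0] and 0 <= col < shape[1]:
                acc[row, col] += 1
    return acc

# Toy model: a circle of radius 10 around the reference point (0, 0).
t = 2 * np.pi * (np.arange(N_BINS) + 0.5) / N_BINS
model = np.stack([10 * np.cos(t), 10 * np.sin(t)], axis=1)
tangents = t + np.pi / 2
table = build_r_table(model, tangents, reference=(0.0, 0.0))

# Scene: the same contour translated by (40, 30).
scene = model + np.array([40.0, 30.0])
acc = vote(scene, tangents, table, shape=(64, 80))
row, col = np.unravel_index(acc.argmax(), acc.shape)
```

All 36 scene points agree on one accumulator cell, the translated reference point.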

The same idea can be used with local features!

Slide credit: Bernt Schiele

Implicit Shape Model

Gen. Hough Transform with Local Features

• For every feature, store possible “occurrences”

• For new image, let the matched features vote for possible object positions
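The two bullets above can be sketched as follows. This is an illustrative simplification: each stored "occurrence" is assumed to be just an offset (dx, dy) from the feature to the object center, every vote has weight 1, and votes are collected in coarse spatial bins. The names `cast_votes`, `occurrences` and `bin_size`, and the toy data, are hypothetical.

```python
from collections import Counter

def cast_votes(matches, occurrences, bin_size=10):
    """matches: (x, y, word) triples; occurrences: word -> list of (dx, dy) offsets."""
    votes = Counter()
    for x, y, word in matches:
        for dx, dy in occurrences.get(word, []):
            # Each stored occurrence proposes one object center.
            cell = (int((x + dx) // bin_size), int((y + dy) // bin_size))
            votes[cell] += 1
    return votes

# Occurrences stored at training time (toy values).
occurrences = {"wheel": [(30, -20), (-30, -20)], "door": [(0, -5)]}

# Features matched in a new image.
matches = [(70, 120, "wheel"), (130, 120, "wheel"), (100, 105, "door")]
votes = cast_votes(matches, occurrences)
best_cell, best_count = votes.most_common(1)[0]
```

An ambiguous feature such as the wheel votes for several positions; only the consistent position collects votes from all three features.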

3D Object Recognition

• Gen. HT for Recognition [Lowe99]

– Typically only 3 feature matches needed for recognition

– Extra matches provide robustness

– Affine model can be used for planar objects
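Why three matches are enough for the affine case: a 2D affine map has 6 unknowns and each point match gives 2 equations. The sketch below fits the map by least squares, so extra matches simply add rows. It assumes clean 2D point correspondences and does not reproduce the details of [Lowe99]; `fit_affine` is a hypothetical name.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 2D affine map dst ~ A @ src + t from N >= 3 matches."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    X = np.hstack([src, np.ones((len(src), 1))])      # N x 3 design matrix
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)  # 3 x 2 solution
    A = params[:2].T   # 2 x 2 linear part
    t = params[2]      # translation
    return A, t

# Toy check: recover a known transform from exactly three matches.
A_true = np.array([[2.0, 0.5], [-0.5, 1.5]])
t_true = np.array([3.0, -1.0])
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
dst = src @ A_true.T + t_true
A, t = fit_affine(src, dst)
```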

Slide credit: David Lowe

View Interpolation

• Training

– Training views from similar viewpoints are clustered based on feature matches.

– Matching features between adjacent views are linked.

• Recognition

– Feature matches may be spread over several training viewpoints.

– Use the known links to “transfer votes” to other viewpoints.

[Lowe01]

Recognition Using View Interpolation

Training

Location Recognition

Applications

• Sony Aibo (Evolution Robotics)

• SIFT usage

– Recognize docking station

– Communicate with visual cards

• Other uses

– Place recognition

– Loop closure in SLAM

Slide credit: David Lowe

Video Google

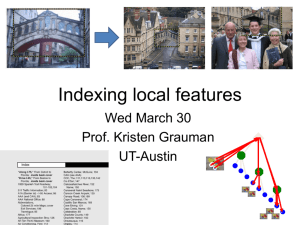

Indexing local features

• Each patch / region has a descriptor, which is a point in some high-dimensional feature space (e.g., SIFT)

Indexing local features

• When we see close points in feature space, we have similar descriptors, which indicates similar local content.

Figure credit: A. Zisserman

Indexing local features

• We saw in the previous section how to use voting and pose clustering to identify objects using local features

Figure credit: David Lowe

Indexing local features

• With potentially thousands of features per image, and hundreds to millions of images to search, how can we efficiently find those that are relevant to a new image?

– Low-dimensional descriptors: can use standard efficient data structures for nearest neighbor search

– High-dimensional descriptors: approximate nearest neighbor search methods are more practical

– Inverted file indexing schemes

Indexing local features: inverted file index

• For text documents, an efficient way to find all pages on which a word occurs is to use an index…

• We want to find all images in which a feature occurs.

• To use this idea, we’ll need to map our features to “visual words”.
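A minimal sketch of such an index, assuming every image has already been reduced to a list of integer visual word ids. The name `build_inverted_index` and the toy data are hypothetical.

```python
from collections import defaultdict

def build_inverted_index(image_words):
    """image_words: image id -> list of visual word ids. Returns word id -> set of image ids."""
    index = defaultdict(set)
    for image_id, words in image_words.items():
        for w in words:
            index[w].add(image_id)
    return index

image_words = {
    "img0": [3, 7, 7, 12],
    "img1": [7, 15],
    "img2": [3, 12, 40],
}
index = build_inverted_index(image_words)
images_with_word_7 = sorted(index[7])
```

Looking up a word touches only the images that contain it, as with the index at the back of a book.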

Visual words

• More recently used for describing scenes and objects for the sake of indexing or classification.

Sivic & Zisserman 2003; Csurka, Bray, Dance, & Fan 2004; many others.

Inverted file index for images comprised of visual words

[Figure: inverted file table listing, for each word number, the image numbers in which it occurs]

Image credit: A. Zisserman

Bags of visual words

• Summarize entire image based on its distribution (histogram) of word occurrences.

• Analogous to bag of words representation commonly used for documents.
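As a sketch, the histogram can be computed directly from the word ids assigned to an image's features. Normalizing the counts to sum to 1 is an assumption made here so that images with different numbers of features are comparable; `bag_of_words` is a hypothetical name.

```python
import numpy as np

def bag_of_words(word_ids, vocab_size):
    """Normalized histogram of visual word occurrences for one image."""
    counts = np.bincount(np.asarray(word_ids, dtype=int), minlength=vocab_size)
    hist = counts.astype(float)
    total = hist.sum()
    return hist / total if total > 0 else hist

# Four features quantized to words 0, 2, 2 and 3 of a 5-word vocabulary.
hist = bag_of_words([0, 2, 2, 3], vocab_size=5)
```

The spatial layout of the features is discarded; only how often each word occurs is kept.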

Image credit: Fei-Fei Li

Video Google System

1. Collect all words within query region

2. Inverted file index to find relevant frames

3. Compare word counts

4. Spatial verification
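Steps 1 to 3 can be sketched as below. This is a simplification: frames are compared by cosine similarity of raw word counts, whereas the system of Sivic & Zisserman weights words by tf-idf, and step 4 (spatial verification) is omitted. The function name `retrieve` and the toy frames are hypothetical.

```python
import math
from collections import Counter, defaultdict

def retrieve(query_words, frame_words):
    """Rank frames sharing at least one visual word with the query region."""
    # Inverted file: word id -> frames containing it.
    index = defaultdict(set)
    for frame_id, words in frame_words.items():
        for w in words:
            index[w].add(frame_id)

    q = Counter(query_words)                                      # step 1
    candidates = set().union(*(index.get(w, set()) for w in q))   # step 2

    def cosine(a, b):
        dot = sum(a[w] * b[w] for w in a if w in b)
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b)

    scored = [(cosine(q, Counter(frame_words[f])), f) for f in candidates]  # step 3
    return sorted(scored, reverse=True)

frames = {"f1": [1, 2, 2, 3], "f2": [2, 2, 9], "f3": [8, 9]}
results = retrieve([2, 2, 3], frames)
ranking = [frame_id for _, frame_id in results]
```

Frame f3 shares no word with the query, so it is never scored; this is the saving the inverted file provides.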

Sivic & Zisserman, ICCV 2003

• Demo online at: http://www.robots.ox.ac.uk/~vgg/research/vgoogle/index.html

Query region

Visual vocabulary formation

Issues:

• Sampling strategy

• Clustering / quantization algorithm

• What corpus provides features (universal vocabulary?)

• Vocabulary size, number of words

Sampling strategies

[Figure panels: sparse, at interest points; dense, uniformly; randomly; multiple interest operators]

Image credits: F-F. Li, E. Nowak, J. Sivic

• To find specific, textured objects, sparse sampling from interest points is often more reliable.

• Multiple complementary interest operators offer more image coverage.

• For object categorization, dense sampling offers better coverage.

[See Nowak, Jurie & Triggs, ECCV 2006]

Clustering / quantization methods

• k-means (typical choice), agglomerative clustering, mean-shift,…
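A minimal k-means sketch for building a vocabulary and quantizing descriptors to words. Several choices here are assumptions made for brevity: initial centers are taken at evenly spaced positions in the data, the number of iterations is fixed, and the 4-dimensional toy descriptors stand in for real ones such as SIFT. The names `kmeans` and `quantize` are hypothetical.

```python
import numpy as np

def nearest_center(X, centers):
    """Index of the closest center (squared Euclidean distance) for each row of X."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def kmeans(descriptors, k, n_iter=20):
    """Cluster descriptors into k visual words; returns the k cluster centers."""
    X = np.asarray(descriptors, dtype=float)
    # Assumed initialization: k evenly spaced samples from the data.
    centers = X[np.linspace(0, len(X) - 1, k).astype(int)]
    for _ in range(n_iter):
        labels = nearest_center(X, centers)
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers

def quantize(descriptors, centers):
    """Map each descriptor to the id of its nearest visual word."""
    return nearest_center(np.asarray(descriptors, dtype=float), centers)

# Toy corpus: two well-separated groups of 4-D descriptors.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.1, (50, 4)), rng.normal(5.0, 0.1, (50, 4))])
centers = kmeans(X, k=2)
words = quantize(X, centers)
```

The cluster centers are the visual words; `quantize` is the mapping from a new descriptor to a word id that the inverted file and the bag-of-words histogram rely on.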
