9 th year, and still going at it

advertisement

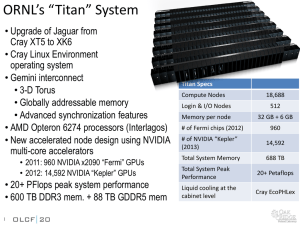

HPC Achievement and Impact – 2012 a personal perspective Thomas Sterling Professor of Informatics and Computing Chief Scientist and Associate Director Center for Research in Extreme Scale Technologies (CREST) Pervasive Technology Institute at Indiana University Fellow, Sandia National Laboratories CSRI June 20, 2012 HPC Year in Review • A continuing tradition at ISC – • (9th year, and still going at it) As always, a personal perspective – how I’ve seen it – Highlights – the big picture • Previous Years’ Themes: – – – – • But not all the nitty details, sorry – Necessarily biased, but not intentionally so – Iron-oriented, but software too – Trends and implications for the future • And a continuing predictor: The Canonical HEC Computer – – – – 2004: “Constructive Continuity” 2005: “High Density Computing” 2006: “Multicore to Petaflops” 2007: “Multicore: the Next Moore’s Law” 2008: “Run-up to Petaflops” 2009: “Year 1 A. P. (After Petaflops)“ 2010: “Igniting Exaflops” 2011: “Petaflops: the New Norm” • This Year’s Theme: ? 2 Trends in Highlight - 2012 • International across the high end • Reversal of power growth – green screams! • GPU increases in HPC community – Everybody is doing software (apps or systems) for them • But … resurgence of multi-core – IBM BG – Intel MIC (oops I mean Xeon Phi) • Exaflops takes hold of international planning • Big data moves to a driving command • Commodity Linux clusters still the norm among the common computer 3 HPC Year in Review • A continuing tradition at ISC – • (9th year, and still going at it) As always, a personal perspective – how I’ve seen it – Highlights – the big picture • Previous Years’ Themes: – – – – • But not all the nitty details, sorry – Necessarily biased, but not intentionally so – Iron-oriented, but software too – Trends and implications for the future • And a continuing predictor: The Canonical HEC Computer – – – – 2004: “Constructive Continuity” 2005: “High Density Computing” 2006: “Multicore to Petaflops” 2007: “Multicore: the Next Moore’s Law” 2008: “Run-up to Petaflops” 2009: “Year 1 A. P. (After Petaflops)“ 2010: “Igniting Exaflops” 2011: “Petaflops: the New Norm” • This Year’s Theme: – 2012: “The Million Core Computer” 4 I. ADVANCEMENTS IN PROCESSOR ARCHITECTURES 5 Processors: Intel • Intel’s 22nm processors: Ivy Bridge die shrink, Sandy Bridge microarchitecture, based on tri-gate (3D) transistors. • • Die size of 160 mm2 with 1.4 billion transistors Intel Xeon Processor E7-8870: • • • • • • • 10 cores, 20 threads 112 GFLOPS max turbo single core 2.4 GHz 30 MB cache 130 W max TDP 32 nm Supports up to 4 TB of DDR3 memory • Next generation 14nm Intel processors are planned for 2013 http://download.intel.com/support/processors/xeon/sb/xeon_E7-8800.pdf http://download.intel.com/newsroom/kits/22nm/pdfs/22nm-Details_Presentation.pdf http://www.intel.com/content/www/us/en/processor-comparison/processorspecifications.html?proc=53580 6 Processors: AMD • AMD’s 32nm processors: Trinity • • Die size of 246 mm2 with 1.3 billion transistors AMD Opteron 6200 Series: • • • • • • 16 cores 2.7 GHz 32 MB cache 140 W max TDP 32 nm 239.1 GFLOPS 2 x AMD Opteron 6276 processors Linpack benchmark(2P) http://www.amd.com/us/Documents/Opteron_6000_QRG.pdf http://www.anandtech.com/show/5831/amd-trinity-review-a10-4600m-a-new-hope 7 Processors: IBM • IBM Power7 - • Power7 Processor - • Released in 2010 The main processor in the PERCS 45 nm SOI Process, 567 mm2, 1.2 billion transistors 3.0-4.25 GHz clock speed, 4 chips per quad module, 4,6,8 cores per chip, 64 kB L1, 256 kB L2, 32 MB L3 cache / core Max 33.12 GFLOPS per core, 264.96 GFLOPS per chip IBM Power8 • Successor to Power7 currently under development. Anticipated imporved SMT, reliability, larger cache size, more cores. Will be built on 22nm process. http://www-03.ibm.com/systems/power/hardware/710/specs.html http://arstechnica.com/gadgets/2009/09/ibms-8-core-power7-twice-the-muscle-half-the-transistors/ 8 Processors: Fujitsu SPARC IXfx • • • Introduced in November 2011 Used in the PRIMEHPC FX10 (an HPC system that is a follow up to Fujitsu’s K computer) Architecture: • • • • • • SPARC v9 ISA extended for HPC with increased amounts of registers 16 cores 236.5 GFLOPS peak performance 12 MB shared L2 cache 1.85 GHz 115 W TDP http://img.jp.fujitsu.com/downloads/jp/jhpc/primehpc/primehpc-fx10-catalog-en.pdf 9 Many Integrated Core (MIC) Architecture – Xeon Phi • Multiprocessor architecture • • • • Prototype products (named Knights Ferry) were announced and released in 2010 to European Organization for Nuclear Research, Korea Institute of Science and Technology Information, and Leibniz Supercomputing Center among others Knights Ferry prototype: • 45 nm process • 32 in order cores with up to 1.2 GHz with 4 threads per core • 2 GB GDDR5 memory, 8MB L2 cache • Single board performance exceeds 750 GFLOPS Commercial release (codenamed Knights Corner) to be built on a 22nm process is proposed for release in 2012-2013 Texas Advanced Computing Center (TACC) will use Knights Corner cards in 10 PetaFLOPS “Stampede” supercomputer http://www.intel.com/content/www/us/en/architecture-and-technology/many-integrated-core/intel-many-integrated-core-architecture.html http://inside.hlrs.de/htm/Edition_02_10/article_14.html 10 Processors: ShenWei SW1600 • • Third generation CPU by Jiāngnán Computing Research Lab Specs: • Frequency -- 1.1 GHz • 140 GFLOPS • 16 core RISC architecture • Up to 8TB virtual memory and 1TB physical memory supported • L1 cache: 8KB, L2 cache: 96KB • 128-bit system bus http://laotsao.wordpress.com/2011/10/29/sunway-bluelight-mpp-%E7%A5%9E%E5%A8%81%E8%93%9D%E5%85%89/ 11 Processors: ARM • • • • Industry leading provider of 32-bit embedded microprocessors Wide portfolio of more than 20 processors is available Applications processors are capable of executing a wide range of OSs: Linux, Android/Chrome, Microsoft Windows CE, Symbian and others NVIDIA: The CUDA on ARM Development Kit • A high performance energy efficient kit featuring NVIDIA Tegra 3 Quad-Core ARM A9 CPU • CPU Memory: 2 GB • 270 GFLOPS Single Precision Performance 12 II. ADVANCES IN GPUs 13 GPUs: NVIDIA • Current: Kepler Architecture – GK110 • • • • • • • 7.1 billion transistors 192 CUDA cores 32 Special Function Units 32 Load/Store Units More than 1 TFLOPS Upcoming: Maxwell Architecture – 2013 • ARM instruction set • 20 nm Fab process ??? (expected) • 14-16 GFLOPS in double precision per watt HPC systems with NVIDIA GPUs: • Tianhe-1 (2nd on TOP 500): 7,168 Nvidia Tesla M2050 general purpose GPUs • Titan (currently 3rd on TOP500): 960 NVIDIA GPUs • Nebulae (4th on TOP500): 4640 NVIDIA Tesla C2050 GPUs • Tsubame 2.0 (5th on TOP500): 4224 NVIDIA Tesla M2050; 34 NVIDIA Tesla S1070; http://www.xbitlabs.com/news/cpu/display/ 20110119204601_Nvidia_Maxwell_Graphics_Processors_to_Have_Integrated_ARM_General_Purpose_Cores.html 14 GPUs: AMD • Current: AMD Radeon HD 7970 (Southern Islands HD7xxx series) • • • • • • • Released in January 2012 28 nm Fab process 352 mm2 die size with 4.3 billion transistors Up to 925 MHz engine clock 947 GFLOPS double precision compute power 230 W TDP Latest AMD architecture – Graphic Core Next (GCN): • • • • 28 nm GPU architecture Designed both for graphics and general computing 32 compute nodes (1,048 stream processors) Handles workloads of the processor 15 GPU Programming Models • Current: Cuda 4.1 • • • • • Direct communication between GPUs and other PCI devices Easily acceleratable parallel nested loops starting with Tesla K20 Kepler GPU Current: OpenCL 1.2 • • • • • http://developer.nvidia.com/cuda-toolkit Coming in Cuda 5 • • • Share GPUs across multiple threads Unified Virtual Addressing Use all GPUs from a single host thread Peer-to-Peer communication Open royalty-free standard for cross-platform parallel computing Latest version released in November 2011 Host-thread safety, enabling OpenCL commands to be enqued from multiple host threads Improved OpenGL interoperability by linking OpenCL event objects to OpenGL OpenACC • • • • Programming standard developed by Cray, NVIDIA, CAPS and PGI Designed to simplify parallel programming of heterogeneous CPU/GPU systems The programming is done through some pragmas and API functions Planned supported compilers – Cray, PGI and CAPS 16 III. HPC SYSTEMS around the World 17 IBM Blue Gene/Q • • • • • • • IBM PowerPC A2 @ 1.6 GHz CPU 16 cores per node 16 GB SDRAM-DDR3 per node 90% water cooling 10% air cooling 209 TFLOPS peak performance 80 KW per rack power consumption HPC Systems powered by Blue Gene/Q: • Mira, Argonne National Lab • • • • 786,432 cores 8.162 PFLOPS Rmax, 10.06633 PFLOPS Rpeak 3rd on TOP500 (June 2012) SuperMUC, Leibniz Rechenzentrum • • • 147,456 cores 2.897 PFLOPS Rmax, 3.185 PFLOPS Rpeak 4th on TOP500 (June 2012) http://www-03.ibm.com/systems/technicalcomputing/solutions/bluegene/ http://www.alcf.anl.gov/mira USA: Sequoia • • • • • • • • • • First on the TOP500 as of June 2012 Fully deployed in June 2012 16.32475 PFLOPS Rmax and 20.13266 PLOPS Rpeak 7.89 MW Power consumption IBM Blue Gene/Q 98,304 compute nodes 1.6 million processor cores 1.6 PB of memory 8 or 16 core Power Architecture processors built on a 45 nm fabrication method Lustre Parallel File System Japan: K computer • • • • • • • • First on the TOP500 as of November 2011 Located at RIKEN Advanced Institute for Computational Science in Kobe, Japan Distributed memory architecture Manufactured by Fujitsu Performance: 10.51 PFLOPS Rmax and 11.2804 PFLOPS Rpeak 12.65989 MW Power Consumption, 824.6 GFLOPS/Kwatt Annual running costs - $10 million 864 cabinets: • • • • 88,128 8-core SPARC64 VIIIfx processors @ 2.0 GHz 705,024 cores 96 computing nodes + 6 I/O nodes per cabinet Water cooling system minimizes failure rate and power consumption http://www.fujitsu.com/global/news/pr/archives/month/2011/20111102-02.html China: Tianhe-1A • Tianhe-1A: • • • • • • #5 on Top-500 2.566 PFLOPS Rmax and 4.701 PFLOPS Rpeak 112 computer cabinets, 12 storage cabinets, 6 communication cabinets and 8 I/O cabinets: • 14,336 Xeon X5670 processors • 7,168 Nvidia Tesla M2050 general purpose GPUs • 2,048 FreiTeng 1000 SPARC-based processors • 4.04 MW Chinese custom-designed NUDT proprietary high-speed interconnect, called Arch (160 Gbit/s) Purpose: petroleum exploration, aircraft design $88 million to build, $20 million to maintain annually, operated by about 200 workers China: Sunway Blue Light • Sunway Blue Light: • • • • • • • • 26th on the TOP500 795.90 TFLOPS Rmax and 1.07016 PFLOPS Rpeak 8,704 ShenWei SW1600 processors @ 975 MHz 150 TB main memory 2 PB external storage Total power consumption 1.074 MW 9-rack water-cooled system Infiniband Interconnect, Linux OS USA: Intrepid • • • • • • • • • Located in Argonne National Laboratory IBM Blue Gene/P 23rd in TOP500 list 40 racks 1024 nodes per rack 850 MHz quad-core processors 640 I/O nodes 458 TFLOPS Rmax and 557 TFLOPS Rpeak 1260 KW Russia: Lomonosov • • • • • • • • Located at Moscow State University Research Computer Center Most powerful HPC system in Eastern Europe 1.373 PFLOPS Rpeak and and 674.11 TFLOPS Rmax (TOP500) 1.7 PFLOPS Rpeak and 901.9 TFLOPS Rmax (according to the system’s website) 22nd on the TOP500 5,104 CPU nodes (T-Platforms T-Blade2 system) 1,065 GPU nodes (T-Platforms TB2-TL) Applications: regional and climate research, nanoscience, protein simulations http://www.t-platforms.com/solutions/lomonosov-supercomputer.html http://www.msu.ru Cray Systems • Cray XK6 • • • Cray’s Gemini interconnect, AMD’s multicore scalar processors, and NVIDIA’s GPU processors Scalable up to 50 Pflops of combined performance 70 Tflops per cabinet • • • • 16 core AMD Opteron 6200 (96 /cabinet) 1536 cores/cabinet NVIDIA Tesla 2090 (96 per cabinet) OS: Cray Linux Environment Cray XE6 • • Cray’s Gemini interconnect, AMD’s Opteron 6100 processors 20.2 Tflops per cabinet • • 12 core AMD Opteron 6100 (192/cabinet) 2034 cores/cabinet 3D torus interconnect 25 ORNL’s “Titan” System • Upgrade of Jaguar from Cray XT5 to XK6 • Cray Linux Environment operating system • Gemini interconnect • 3-D Torus • Globally addressable memory • Advanced synchronization features • AMD Opteron 6274 processors (Interlagos) • New accelerated node design using NVIDIA multi-core accelerators • 2011: 960 NVIDIA x2090 “Fermi” GPUs • 2012: 14,592 NVIDIA “Kepler” GPUs • 20+ PFlops peak system performance • 600 TB DDR3 mem. + 88 TB GDDR5 mem 26 Titan Specs Compute Nodes 18,688 Login & I/O Nodes 512 Memory per node 32 GB + 6 GB # of Fermi chips (2012) 960 # of NVIDIA “Kepler” (2013) 14,592 Total System Memory 688 TB Total System Peak Performance 20+ Petaflops Liquid cooling at the cabinet level Cray EcoPHLex USA: Blue Waters • • • • Petascale supercomputer to be deployed at NCSA at UIUC IBM terminated the contract in August 2011 Cray was chosen to deploy the system Specifications: • • • • • • Over 300 cabinets (Cray XE6 and XK6) Over 25,000 compute nodes 11.5 PFLOPS peak performance Over 49,000 AMD processors (380,000 cores) Over 3,000 NVIDIA GPUs Over 1.5 PB overall system memory (4 GB per core) UK: HECToR • • • • Located in University of Edinburgh in Scotland 32nd on the TOP500 list 660.24 TFLOPS Rmax and 829.03 TFLOPS Rpeak Currently 30 cabinets • 704 compute blades • Cray XE6 system • 16-core Interlagos Opteron @ 2.3 GHz • 90,112 cores total http://www.epcc.ed.ac.uk/news/hector-leads-the-way-the-world%E2%80%99s-first-production-cray-xt6-system http://www.hector.ac.uk/ Germany: HERMIT • • • • • Located at High Performance Computing Center Stuttgart 24th on the TOP500 list 831.40 TFLOPS Rmax and 1043.94 TFLOPS Rpeak Currently 38 cabinets • Cray XE6 • 3552 compute nodes, 113,664 compute cores • Dual socker AMD Interlagos @ 2.3 GHz (16 cores) • 2 MW max power consumption Research at HPCCS: • OpenMP Validation Suite, MPI-Start, Molecular Dynamics, Biomechanical simulations, Microsoft Parallel Visualization, Virtual Reality, Augmented reality http://www.hlrs.de/ NEC SX-9 • • Supercomputer, an SMP system Single-chip vector processor: • • • • • 3.2 GHz 8-way replicated vector pipes (each has 2 multiply, 2 addition units) 102.4 GFLOPS peak performance 65 nm CMOS technology Up to 64 GB of memory h t t p : / / w w 30 Passing of the Torch IV. In Memoriam 31 Alan Turing - Centennial Alan Turing - Centennial • Father of computability with the abstract “Turing Machine” • Father of Colossus – Arguably first digital electronic supercomputer – Broke German enigma codes – Saved 10s of thousands of lives; Passing of the Torch V. The Flame Burns On 34 Key HPC Awards • Seymour Cray Award: Charles L. Seitz • • • • • For innovation in high-performance message passing architectures and networks A member of National Academy of Engineering President of Myricom, Inc. until last year Projects he worked on include VLSI design, programming and packet-switching techniques for 2nd generation multicomputers, Myrinet high-performance interconnects Sidney Fernbach Award: Cleve Moler • • • • • • For fundamental contribution to linear algebra, mathematical software, and enabling tools for computational science Professor at the University of Michigan, Stanford University and University of New Mexico Co-founder of MathWorks, Inc. (in 1984) One of the authors of LINPACK and EISPACK Member of National Academy of Engineering Past president of Society for Industrial and Applied Mathematics 35 Key Awards • Ken Kennedy Award: Susan L. Graham • • • • • For foundational compilation algorithms and programming tools; research and discipline leadership; and exceptional mentoring Fellow: Association for Computing Machinery, American Association for the Advancement of Science, American Academy of Arts and Sciences, National Academy of Engineering Awards: IEEE John von Neumann Medal in 2009 Research includes work on Harmonia, a language-based framework for interactive software development and Titanium, a Java-based parallel programming language, compiler and runtime system ACM Turing Award: Judea Pearl • • • • • • For fundamental contributions to artificial intelligence through the development of a calculus for probabilistic and causal reasoning Professor of Computer Science at UCLA Pioneered probabilistic and causal reasoning Created computational foundation for processing information under certainty Awards: IEEE intelligent systems, ACM Allen Newell, and many others Member: AAAI, IEEE, National Academy of Engineering, and others 36 VII. EXASCALE 37 Picking up the Exascale Gauntlet • International Exascale Software Project – 7th meeting in Cologne, Germany – 8th meeting in Kobe, Japan • European Exascale Software Initiative – EESI initial study completed – EESI-2 inaugurated • US DOE X-stack Program – 4 teams selected to develop system software stack – To be announced • Plans in formulation by many nations Strategic Exascale Issues • • • • • • Next Generation Execution Model(s) Runtime systems Numeric parallel algorithms Programming models and languages Transition of legacy codes and methods Computer architecture – Highly controversial – Must be COTS, but can’t be the same • Managing power and reliability • Good fast machines or just machines fast IX. CANONICAL HPC SYSTEM 40 Canonical Machine: Selection Process • Calculate histograms for selected non-numeric technical parameters • • • • • • • Architecture: Cluster – 410, MPP – 89, Constellations - 1 Processor technology: Intel Nehalem – 196, other Intel EM64T – 141, AMD x86_64 – 63, Power – 49, etc. Interconnect family: GigE – 224, IB – 209, Custom – 29, etc. OS: Linux – 427, AIX – 28, CNK/SLES 9 – 11, etc. Find the largest intersection including single modality for each parameter • • (Cluster, Intel Nehalem, GigE, Linux): 110 machines matching Note: this does not always include the largest histogram buckets. For example, in 2003 the dominant system architecture was cluster, but he largest parameter subset is generated for (Constellations, PA-RISC, Myrinet, HP-UX) In the computed subset, find the centroid for selected numerical parameters • • • • Rmax Number of compute cores Processor frequency Other useful metric would be power, but it is not available for all entries in the list Order machines in the subset by increasing distance (least square error) from the centroid • • The parameter values have to be normalized due to different units used The canonical machine is found at the head of the list Canonical Subset with Machine Ranks Centroid Placement in Parameter Space Centroid coordinates: #cores: 10896 Rmax: 64.3 TFlops Processor frequency: 2.82 GHz The Canonical HPC System – 2012 • Architecture: commodity cluster • Dominant class of HEC system • Processor: 64-bit Intel Xeon Westmere-EP • E56xx (32nm die shrink of E55xx) in most deployed machines • Older E55xx Nehalem in the second place by machine count • Minimal changes since the last year: strong Intel dominance, with AMD and POWER based systems in distant second and third place • Intel maintains the strong lead in total number of cores deployed (4,337,423), followed by AMD Opteron (2,038,956) and IBM POWER (1,434,544) • Canonical example: #315 in the TOP500 • Rmax of 62.3 TFlops • Rpeak of 129 Tflops • 11016 cores • System node • Based on HS22 BladeCenter (3rd most popular system family) • Equipped with Intel X5670 6C clocked at 2.93 GHz (6 cores/12 threads per processor) • Homogeneous • Only 39 accelerated machines in the list; accelerator cores constitute 5.7% of all cores in TOP 500 • IBM systems integrator • Remains the most popular vendor (225 machines in the list) • Gigabit Ethernet interconnect • Infiniband still did not surpass GigE in popularity • Linux • By far #1 OS • • Power consumption: 309 kW, 201.6 MFLOPS/W Industry owned and operated (telecommunication company) X. CLOSING REMARKS 45 Next Year (if invited) • Leipzig • 10th Anniversary of Retrospective Keynotes • Focus on Impact and Accomplishments across an entire Decade • Application oriented: science and industry • System Software looking forward