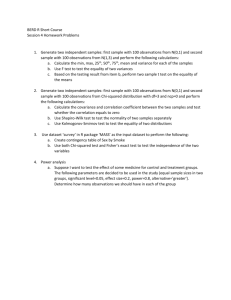

Overview of Biostatistical Methods


Randomized Clinical Trials (RCT)

Designed to compare two or more treatment groups for a statistically significant difference between them, i.e., a difference beyond random chance, often measured via a “p-value” (e.g., p < .05).

Examples: Drug vs. Placebo, Drugs vs. Surgery, New Tx vs. Standard Tx

Patients satisfying the inclusion criteria are randomized into a Treatment Arm and a Control Arm. These arms serve as random samples from the treatment and control populations, and outcomes are compared at the End of Study.

Let X = decrease (–) in cholesterol level (mg/dL), with population means $\mu_1$ (treatment) and $\mu_2$ (control).

[Figure: possible expected distributions of X in the Treatment and Control populations. Is the difference significant?]

$H_0: \mu_1 = \mu_2$

Tests: T-test (two groups), F-test (ANOVA, two or more groups)

Alternatively, let T = survival time (months), with population survival curves $S(t) = P(T > t)$: $S_1(t)$ for Treatment and $S_2(t)$ for Control, each estimated from the data by a Kaplan-Meier estimate.

[Figure: survival probability (from 1 down to 0) vs. time, showing $S_1(t)$ (Treatment) and $S_2(t)$ (Control) through End of Study. Is the difference in area under the curves (AUC) significant?]

$H_0: S_1(t) = S_2(t)$

Tests: Log-Rank Test, Cox Proportional Hazards Model

Case-Control Studies and Cohort Studies

Observational study designs that test for a statistically significant association between a disease D and exposure E to a potential risk (or protective) factor, measured via “odds ratio,” “relative risk,” etc. Example: Lung cancer / Smoking.

Case-Control studies start in the PRESENT with cases (D+) and controls (D–, the reference group) and look back into the PAST to compare E+ vs. E–. They are relatively easy and inexpensive, but subject to faulty records and “recall bias.”

Cohort studies start in the PRESENT with E+ vs. E– and follow subjects into the FUTURE to compare D+ vs. D–. They measure the direct effect of E on D, but are expensive and extremely lengthy. Example: the Framingham, MA study.

Both types of study yield a 2×2 “contingency table” of data:

|       | D+  | D–  | Total |
|-------|-----|-----|-------|
| E+    | a   | b   | a+b   |
| E–    | c   | d   | c+d   |
| Total | a+c | b+d | n     |

where a, b, c, d are the numbers of individuals in each cell.

$H_0$: No association between D and E.
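Some of the calculations named above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's method. It uses Welch's unequal-variance form of the two-sample t statistic, which is one common choice (the slides say only "T-test"). It stops short of p-values, which would need the t distribution. It also computes the odds ratio and relative risk from the 2×2 cells a, b, c, d. All function names are hypothetical. The relative risk is only directly estimable from cohort data, because a case-control design fixes the numbers of cases and controls in advance.

```python
import math
from statistics import mean, variance


def welch_t(treatment, control):
    """Welch's two-sample t statistic and its approximate degrees of freedom.

    Tests H0: mu1 = mu2 without assuming equal variances. A p-value would
    come from the t distribution with `df` degrees of freedom (not computed).
    """
    n1, n2 = len(treatment), len(control)
    se1_sq = variance(treatment) / n1  # squared standard error of mean 1
    se2_sq = variance(control) / n2    # squared standard error of mean 2
    t = (mean(treatment) - mean(control)) / math.sqrt(se1_sq + se2_sq)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (se1_sq + se2_sq) ** 2 / (se1_sq ** 2 / (n1 - 1) + se2_sq ** 2 / (n2 - 1))
    return t, df


def odds_ratio(a, b, c, d):
    """Odds ratio (a/b) / (c/d) = ad / bc, with rows E+ = (a, b), E- = (c, d)."""
    return (a * d) / (b * c)


def relative_risk(a, b, c, d):
    """Relative risk P(D+ | E+) / P(D+ | E-) = [a / (a+b)] / [c / (c+d)]."""
    return (a / (a + b)) / (c / (c + d))


# Hypothetical counts, for illustration only (not data from the slides)
print(round(odds_ratio(30, 70, 10, 90), 3))     # 3.857
print(round(relative_risk(30, 70, 10, 90), 3))  # 3.0
```

An odds ratio or relative risk near 1 is consistent with $H_0$ (no association), while values well above 1 point to a risk factor and values well below 1 point to a protective factor.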
This null hypothesis is tested with a Chi-squared Test, or with the McNemar Test for matched-pair data.

As seen, testing for association between categorical variables, such as disease D and exposure E, can generally be done via a Chi-squared Test. But what if the two variables, say X and Y, are numerical measurements? Furthermore, if the sample data do suggest that an association exists, what is the nature of that association? How can it be quantified, or modeled via Y = f(X)?

Correlation Coefficient

[Figure: scatterplot of Y vs. X (JAMA. 2003;290:1486-1493)]

The correlation coefficient r measures the strength of linear association between X and Y. It ranges from –1 (negative linear correlation) through 0 (no linear correlation) to +1 (positive linear correlation).
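Here is a minimal Python sketch of the two statistics just described. It assumes plain lists of numbers and the 2×2 cell layout a, b, c, d of the contingency table. The chi-squared statistic is Pearson's without Yates' continuity correction. That is an assumption, because some software applies the correction to 2×2 tables by default. The statistic is compared with the chi-squared distribution on 1 degree of freedom, whose 5% critical value is about 3.841. The McNemar Test is not shown, and the function names are hypothetical.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared statistic (no continuity correction) for the
    2x2 table with rows E+ = (a, b) and E- = (c, d); tests H0: no
    association between D and E, on 1 degree of freedom."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def pearson_r(x, y):
    """Sample correlation coefficient r, a value between -1 and +1."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data, for illustration only
print(chi_squared_2x2(30, 70, 10, 90))        # 12.5, well above 3.841
print(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]))  # -1.0 (perfect negative line)
```

The shortcut formula $n(ad - bc)^2 / [(a+b)(c+d)(a+c)(b+d)]$ gives the same value as summing $(O - E)^2 / E$ over the four cells. In that sum, each expected count $E$ is (row total × column total) / n.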
For this example, r = –0.387 (a weak negative linear correlation).

Regression Methods

Simple Linear Regression gives the “best” line that fits the data, the “least squares” regression line. This is the unique line that minimizes the sum of the squared residuals, i.e., the vertical distances between the observed data points and the line. For this example:

$\hat{Y} = 8.790 - 4.733X$ (p = .0055)

However, the proportion of the total variability in the data that is accounted for by the line is only $r^2 \approx (-0.387)^2 \approx 0.1497$ (about 15%).
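The least-squares line and $r^2$ can be computed directly from sums of squares, as in the minimal Python sketch below. It assumes plain lists of paired observations. The slide's data are not reproduced, so the sample values are made up. The p-value for the slope, which needs the t distribution, is not computed. The function name is hypothetical.

```python
def least_squares_line(x, y):
    """Fit Y-hat = b0 + b1*X by least squares; return (b0, b1, r_squared)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)  # total variability in Y (SST)

    b1 = sxy / sxx     # slope that minimizes the sum of squared residuals
    b0 = my - b1 * mx  # the least-squares line passes through (mean X, mean Y)

    # Residuals: observed Y minus the value predicted by the line
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)

    # Proportion of total variability accounted for by the line;
    # for simple linear regression this equals r squared
    r_squared = 1 - sse / syy
    return b0, b1, r_squared


# Hypothetical data, for illustration only
b0, b1, r2 = least_squares_line([1, 2, 3, 4], [2, 1, 4, 3])
print(round(b0, 3), round(b1, 3), round(r2, 3))  # 1.0 0.6 0.36
```

In this made-up example, r = 0.6, so $r^2 = 0.36$. The line is the best linear fit, yet it accounts for only 36% of the variability in Y. The slide's 15% makes the same point.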
• Polynomial Regression: predictors $X, X^2, X^3, \ldots$
• Multilinear Regression: independent predictors $X_1, X_2, \ldots$, without or with interaction terms (e.g., $X_5 X_8$)
• Logistic Regression: binary response Y (= 0 or 1)
• Transformations of data, e.g., semi-log, log-log, …
• Generalized Linear Models
• Nonlinear Models
• many more…

Sincere thanks to
• Judith Payne
• Heidi Miller
• Rebecca Mataya
• Troy Lawrence
• YOU!