

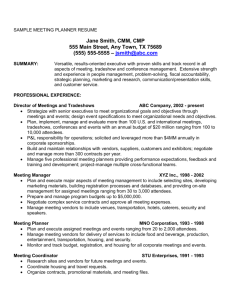

SCADA/EMS History

Table of Contents

Supervisory Control Systems: Pilot Wire; Stepping Switches; Relay/Tones; Solid State; Vendors

Telemetry: Current Balance/Transducers; Pulse Rate/Variable Frequency; Selective; Continuous Scanning; Automatic Data Logger; Vendors

SCADA: Computer Master; Remote Terminal Units; User Interface; Communication Channels; Vendors

Automatic Generation Control (AGC): Recorders; Servos; Mag Amps/Operational Amplifiers; Computer Controlled Analog; Vendors

System Operation Computers: SCADA; Forecast and Scheduling Functions; Configurations; Vendors

Energy Management Systems: Network Analysis Functions; Configurations; Consultants; Vendors; Projects

Supervisory Control Systems

Pilot Wire Systems (1940's and Earlier)

Supervisory control in electric utility systems evolved from the need to operate equipment located in remote substations. In the past it was necessary either to station personnel at the remote site or to send a line crew out to operate the equipment. The first approach was to use a pair of wires, or a multi-pair cable, between the sites, with each pair of wires operating a unique piece of equipment. This was expensive, but it was justified if the equipment needed to be operated often or if service had to be restored rapidly.

Stepping Switch Systems

It soon became obvious that multiplexing one pair of wires to control several devices would make the process much more efficient. Doing this, however, required very good security, because the effect of operating the wrong piece of equipment could be severe. During the 30's the telephone company developed magnetic stepping switches for switching telephone circuits, and the electric utilities used this technology as an early form of supervisory control. To assure security they developed a select/check/operate scheme. In this scheme the master station sent out a selection message, which, when received by the remote, caused it to send back a corresponding check message for the selected device.
If the master station received the check message, it indicated this to the dispatcher, who would then initiate an operate message; when the remote received it, the device operated. The remote then sent a confirmation message to the master station to complete the action. This select-before-operate scheme has been used in supervisory control systems for many years, and forms of it are still in use today.

Relay/Tone Systems

Relay systems used telephone-type relays to create pulses that were sent over a communication channel to the remote. They typically used a coding scheme based either on the number of pulses in a message or on a fixed message size with combinations of short and long pulses. Westinghouse, in conjunction with North Electric Company, developed Visicode supervisory control based on the pulse-count approach. I am most familiar with this equipment, since I spent over five years doing installation and troubleshooting on it. Most of the installations were with electric utilities; some were with pipelines and gas companies, and I even did an installation in the control tower of O'Hare Airport in Chicago to control the landing lights. Visicode used two time-delay relays to create pulses and a timing chain of relays to count them. It used the select/check/operate scheme for security. There were two sizes: the “junior” used one select/check sequence, while the larger version used one select/check sequence for a group and a second for the point. Thousands of these units went into service from 1950 until about 1965. I worked on it so often that I could often troubleshoot it just by listening to the relay sequence; in fact, more than once I was able to solve a customer's problem over the telephone. It was very reliable and had very few false operations, although they could happen. One that I investigated occurred because the equipment was installed in a diesel generating station, with the master station cabinet beside the generator.
There was a lot of low-frequency vibration, and the battery connection was loose. As the battery connection made and broke with the vibration, the master sent out a constant stream of pulses 24 hours a day. The remote was going crazy, and it finally hit the right combination for a false operation.

General Electric also had a supervisory control system that competed with Visicode. It operated similarly but used a fixed-length message and relied on two different pulse lengths for the coding. Both used the select-before-operate scheme. Control Corporation supplied a supervisory system that used combinations of tones for the coding; the tones were created with a “Vibrasender” and received with a “Vibrasponder.” In 1957 I was installing a Visicode system in Watertown, South Dakota, with the Bureau of Reclamation, and a Control Corp field engineer was there installing one of their systems as well. We spent a lot of time together at lunch and in the evenings. It was fun listening to him troubleshoot, because he put a speaker on the line and listened to the tones. He passed my name to their personnel department, who contacted me, and I went to Minneapolis to interview; but since I had only been at Westinghouse for two years, I decided to stay. Ironically, 13 years later I went with CDC, which had bought Control Corp.

Solid State (60's and 70's)

Westinghouse developed its solid-state supervisory system, called REDAC, around 1960. It used a fixed word-length format with a checksum character in each message, and it still used the select/check/operate security technique. GE came out with a similar system at about the same time, which I believe was called GETAC. Control Corporation's solid-state system was called Supertrol. These systems were basically solid-state versions of their predecessors.

Vendors

The predominant vendors during this evolution of supervisory control were Westinghouse, General Electric, and Control Corporation.
There were European vendors, such as Siemens, but they were not a significant factor in the US market.

Telemetry

Current Balance/Transducers

Telemetry in electric utilities began, like supervisory control, because it became necessary to obtain readings of power values such as volts, amps, and watts from remote locations. As power systems became larger and more complex and the need for central operation developed, telemetry was required. The first approach was to use a pair of wires and pass a current proportional to the reading. This first required a transducer to convert the power system quantity to a proportional DC voltage or current. The first transducers for voltage or current were relatively simple, since the AC value from the metering transformers could be rectified to create a DC value. Watt and var readings were more complex, however, and the first successful transducers commonly used for them were thermal converters. These units used voltage and current inputs from instrument transformers to heat a small thermal element, whose temperature was then detected by thermocouples to create a proportional voltage. Later, a different type of transducer was invented that used the Hall effect to create the proportional voltage.

Unfortunately, these transducers could not be applied directly to a communication channel consisting of a pair of wires. The variation in the resistance of a long communication channel with temperature prevented a consistent, accurate reading. Therefore a telemetry transmitter was required that would convert the transducer voltage into a constant-current source, nearly independent of variations in resistance. This was done with a current-balance type of telemetry transmitter, first implemented with vacuum tubes and later with transistors.

Pulse Rate/Variable Frequency Telemetry Systems

To transmit telemetry across other types of communication channels, such as power line carrier, and to improve accuracy, telemetry schemes were devised using pulse-rate and variable-frequency methods. The pulse-rate equipment generated a pulse rate, between a minimum and a maximum rate, proportional to the telemetered value. The variable-frequency systems worked similarly, with the telemetered value proportional to the position of the frequency within the range between the minimum and maximum frequency. While at Westinghouse in the late 50's I worked on the development of their system, which was called Teledac. This solid-state system generated a signal whose frequency varied from 15 to 35 cycles per second in proportion to the telemetered value.

Another pulse-based system used during these years was supplied by the Bristol Company. It used a mechanical variable-length pulse generated by a rotating serrated disk, and the value in this scheme was proportional to the pulse width. This equipment was used more in gas and pipeline systems because of its slow time response. All of these systems had the advantage of being able to send the signal over a variety of communication media; they were typically used over pilot wire, power line carrier, and microwave.

Selective Telemetry Systems

Since it is usually only necessary to read these values periodically, and to reduce the number of communication channels needed, it became necessary to multiplex several readings over a single communication channel. This need was consistent with the requirements of supervisory control, so selective telemetry over supervisory control became common. The dispatcher could take his hourly readings by selecting them on a supervisory/telemetry system.

Continuous Scanning Systems

Since the equipment was by now implemented with solid-state technology, and in some cases ran on dedicated communication channels, it was possible to scan the values continuously.
With no mechanical parts involved, the gear would not wear out. Various vendors developed continuous scanning systems with a solid-state master station.

Automatic Data Loggers

Automatic data loggers relieved the dispatcher of the tedious task of recording all the readings each hour. Early versions came before solid-state and continuous-scan telemetry, although they were unusual until solid-state or computer masters evolved. In 1958 I installed an automatic data logger at the dispatch office of the Omaha Public Power District that was a good example of these early attempts. This system used stepping switches at each end to select the points, and the telemetry scheme was Bristol pulse duration. Once a point was selected, it took at least three pulses before the telemetry settled down, so that delay had to be added to the logic in the field. The telemetry receiver was a Bristol Metamaster, a big mechanical beast with a shaft-position output, coupled to a Giannini shaft-position analog-to-digital converter. The digital output of the converter was typed out on an IBM long-carriage typewriter. It was a mess to get working, but it did the job. Because of all its complexity and electromagnetic components, however, it was difficult to maintain and was replaced after a few years. Solid-state equipment and computer-based master stations replaced this type of equipment.

Vendors

The vendors of early telemetry systems included Westinghouse, General Electric, and Control Data, who were extending their supervisory control business. A few other vendors, such as Bristol, were telemetry suppliers, and as the equipment turned to solid-state continuous scanning, several new vendors such as Pacific Telephone and Moore Associates appeared.

SCADA Systems (1965 and Later)

The term SCADA, for Supervisory Control and Data Acquisition, came into use after computer-based master stations became common.
By the middle 60's there were computers capable of real-time functions, and Westinghouse and GE had both built suitable processors: the Westinghouse computers were called PRODAC and the GE processors GETAC. The logic required to make a hardwired master that would provide all the necessary functions of a SCADA system was so complex that the advantage of using a computer became apparent. Other computer companies whose machines were applicable to SCADA master stations included Digital Equipment Corporation and Scientific Data Systems. The functions now included scanning data; monitoring the data or status and alarming on changes; displaying the data, first on digital displays and later on CRT displays; and periodic data logging. Most SCADA systems worked on a continuous-scan basis, with the master sending requests for data and the remotes only responding. A few systems, mostly in Europe, had the remotes send data continuously without a master station request. This was possible if the communication channel was full duplex (able to send and receive simultaneously).

Remote Terminal Units

The remote stations of SCADA systems took the name Remote Terminal Units (RTUs) during the 60's. RTUs were supplied primarily by the SCADA vendor, although a market later developed for RTUs from vendors other than the complete-system SCADA vendors. RTUs used solid-state components mounted on printed circuit cards, typically housed in card racks installed in equipment cabinets suitable for mounting in remote power substations. They needed to operate even if the power was out at the station, so they were connected to the substation battery, which was usually 125 volts DC. Since most RTUs operated on a continuous-scan basis, and since fast response to control operations is important in the event of a system disturbance, the communication protocol had to be both efficient and very secure.

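
The select/check/operate discipline described earlier, which SCADA protocols kept for control operations, can be sketched as a small master/RTU exchange in which every message carries a check code. This is a minimal illustration under stated assumptions. The 5-byte message layout, the message-type codes, and the names `frame`, `RemoteUnit`, `master_control` and `flip_first_bit` are all hypothetical. CRC-CCITT (Python's `binascii.crc_hqx`) stands in for the check codes, such as BCH, used by real RTU protocols. It is not any vendor's actual protocol.

```python
import binascii
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical message types for this sketch (not any vendor's real codes).
SELECT, CHECKBACK, OPERATE, CONFIRM = 1, 2, 3, 4


def frame(msg_type: int, rtu: int, point: int) -> bytes:
    """Build a 3-byte message and append a 16-bit CRC-CCITT check code."""
    body = bytes([msg_type, rtu, point])
    return body + binascii.crc_hqx(body, 0xFFFF).to_bytes(2, "big")


def unframe(data: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (type, rtu, point) if the check code matches, otherwise None."""
    if len(data) != 5:
        return None
    body, received = data[:3], int.from_bytes(data[3:], "big")
    if binascii.crc_hqx(body, 0xFFFF) != received:
        return None
    return body[0], body[1], body[2]


@dataclass
class RemoteUnit:
    address: int
    selected: Optional[int] = None                 # point armed by a valid select
    operated: List[int] = field(default_factory=list)

    def receive(self, data: bytes) -> Optional[bytes]:
        msg = unframe(data)
        if msg is None:
            self.selected = None                   # corrupted message: cancel any selection
            return None
        kind, rtu, point = msg
        if rtu != self.address:
            return None                            # addressed to another RTU
        if kind == SELECT:
            self.selected = point
            return frame(CHECKBACK, self.address, point)
        if kind == OPERATE and self.selected == point:
            self.selected = None
            self.operated.append(point)            # stands in for closing the output relay
            return frame(CONFIRM, self.address, point)
        self.selected = None                       # anything else (e.g. operate without select) cancels
        return None


def master_control(rtu: RemoteUnit, point: int, channel=lambda b: b) -> bool:
    """Select, verify the checkback, then operate; True only on a valid confirmation."""
    reply = rtu.receive(channel(frame(SELECT, rtu.address, point)))
    if reply is None or unframe(channel(reply)) != (CHECKBACK, rtu.address, point):
        return False                               # wrong or missing checkback: never operate
    # In the real scheme the dispatcher sees the checkback and initiates the operate.
    reply = rtu.receive(channel(frame(OPERATE, rtu.address, point)))
    return reply is not None and unframe(channel(reply)) == (CONFIRM, rtu.address, point)


def flip_first_bit(data: bytes) -> bytes:
    """A noisy channel that corrupts one bit of every message."""
    return bytes([data[0] ^ 0x01]) + data[1:]


rtu = RemoteUnit(address=7)
print(master_control(rtu, point=12), rtu.operated)            # True [12]
noisy = RemoteUnit(address=7)
print(master_control(noisy, point=12, channel=flip_first_bit), noisy.operated)  # False []
```

The design choice mirrors the scheme in the text. The remote acts only on an operate that matches a point it has already checked back. Any corrupted or unexpected message drops the pending selection, so a noisy line leads to no operation rather than a wrong one.
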
Security was a primary factor, so sophisticated check characters were transmitted with each message, and the select-before-operate scheme was used for control operations. The most common security check code was BCH, a check code developed around 1960. During the 60's and 70's most RTU communication protocols were unique to the RTU vendor, i.e. proprietary. Because of the need for both very high security and efficiency, common protocols used in other industries, such as ASCII-based character protocols, were not used. To allow different types of RTUs on one SCADA system, the IEEE undertook an effort to standardize protocols. The development of the microprocessor-based communication interface at the master station, however, solved some of the compatibility problems: because that interface could be programmed to handle different protocols, the master station could now communicate with RTUs using different protocols.

The basic structure of an RTU consisted of the communication interface, a central logic controller, and an input/output system with analog inputs, digital inputs, digital control outputs, and sometimes analog control outputs. RTUs were typically supplied in 90-inch-high steel cabinets, with room for terminal blocks for field wiring to the substation equipment. Large SCADA systems would have several hundred RTUs.

User Interface (Man/Machine Interface)

Early SCADA systems used a wired panel with pushbuttons for selecting points and performing control operations. Data was displayed on digital displays, and alarms were listed on printers or teletypewriters. A major evolution came during the late sixties, when the cathode ray tube (CRT) was applied to the man/machine interface (MMI). The original versions were black-and-white, character-mode CRTs. They offered the ability to display data and status in tabular format, and to list alarms as well as acknowledge and clear them.
A keyboard replaced the pushbutton panel for interacting with the system. At first the dispatchers resisted the new methods, feeling they were too complicated and too “computer-like,” although it did not take them long to appreciate the added flexibility and capability. Then limited-graphic color CRTs became available in the early seventies. These CRTs had a graphic character set that allowed one-line diagrams of electric circuits to be presented with dynamic representation of circuit breakers, switches, etc. Power system values such as volts, amps, and megawatts could be presented on the one-line diagrams and periodically updated, with update rates typically around five seconds.

In the 80's full-graphic CRTs became available. One-line diagrams then became much more sophisticated, since you could pan across a large diagram or zoom in on a substation to present more detailed data. Control operations were accomplished by selecting the device on the diagram, confirming the selection visually, and initiating the control operation; the select-before-operate scheme still prevailed. Selection was done by moving the CRT cursor to the device on the diagram, and several devices for moving the cursor evolved over the years. Early systems used a keypad with directional arrows; then came the trackball, which the dispatcher rolled to position the cursor. Another approach was a light pen, used to touch the screen and bring the cursor to that position. Finally, the computer “mouse” that is so common today evolved as the most useful, although many dispatchers resisted it for the same reasons they had resisted CRTs. With alarm lists presented on CRTs, alarm printing became just a record-keeping function, so the printers, usually line printers, moved from the dispatch office to the computer room.

Mapboards have been used in dispatch offices for many years.
They are typically mounted on the wall in front of the dispatchers. They serve various purposes, but one of the most important is to keep the dispatchers constantly familiar with the power system. In the early days they were static boards showing lines, stations, and salient equipment, sometimes manually updated with tags or flags to mark equipment out of service or on maintenance. As computer-based SCADA systems proliferated, it became possible to make these boards dynamic: they could show the status of devices such as circuit breakers and, using digital displays, indicate line flows and voltages. By the middle seventies these boards had become very elaborate and expensive. However, the capability of full-graphic CRTs by the late seventies and early eighties reduced the need for an elaborate dynamic board, and such boards became less common; utilities instead put fewer dynamic elements on the board and made more use of the CRT.

Communication Channels

In the early days the most common communication medium was a pair of dedicated wires, sometimes laid by the utility and sometimes provided by the telephone company. The SCADA vendor provided a frequency-modulated, audio-frequency modem to connect to the channel. For a while in the late sixties power line carrier was used, although the limited number of available frequencies limited its use. In the later seventies and eighties the most common channel became utility-owned microwave equipment, which was expensive but had the advantage of providing a large number of channels.

Vendors

The vendors of SCADA systems evolved from the vendors of supervisory control, including Westinghouse, General Electric, and Control Corporation. Early Westinghouse supervisory control was the work of Westinghouse personnel together with people from North Electric Co. In the sixties a few people from both operations split off and joined a company in Melbourne, Florida, called Radiation, to develop SCADA systems.
This company evolved into Harris Systems. Moore Associates, a company in San Jose, California, began by making digital telemetry equipment and evolved into a SCADA vendor during the seventies; it later became Landis & Gyr.

Automatic Generation Control (AGC)

AGC evolved from the use of telemetry and recorders to record and display data such as tie-line flows and generation values. Dispatch offices in the fifties and earlier typically had recorders showing all major generation MW values and tie-line flows. They often used retransmitting slidewires on the recorders to add the tie-line flows together and display Net Interchange on a separate recorder, and total generation was often summed the same way from the various generation recorders. System frequency, measured by a frequency transducer, was also typically displayed on a recorder.

By using a slidewire on the Net Interchange recorder and comparing it to a Net Interchange setter consisting of a digital-dial-driven potentiometer, a value representing Net Interchange Error was developed and displayed. This error signal was then applied to a mechanical device that generated pulses in either the raise or the lower direction. These pulses were transmitted to the various generating plants and applied to a governor motor control panel, which drove the governor motor and hence raised or lowered generation. The control pulses moved the generating units to bring the Net Interchange Error to zero. This was the beginning of AGC. Frequency error was developed from the frequency recorder and a desired-frequency setter, and it was applied through a frequency bias setter calibrated in MW/0.1 cycle. This allowed the control system to control interchange while also contributing to the control of system frequency. By the late fifties other vendors, primarily Westinghouse and GE, had become involved in AGC.

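
The Net Interchange Error and frequency-bias logic described above combine into the quantity known as Area Control Error (ACE). The sketch below assumes the modern NERC-style sign convention: net interchange is positive for export, and the bias B is in MW/0.1 Hz and negative. The function names, the 5 MW-per-pulse scaling and the 2 MW deadband are hypothetical. They only illustrate how a raise/lower pulse generator could act on the error. The original equipment did this with slidewires, setters and mechanical pulse devices, not software.

```python
def area_control_error(net_interchange_mw: float,
                       scheduled_interchange_mw: float,
                       frequency_hz: float,
                       scheduled_frequency_hz: float = 60.0,
                       bias_mw_per_0_1_hz: float = -50.0) -> float:
    """Net interchange error plus a frequency-bias term, in MW.

    Assumed form: ACE = (NIa - NIs) - 10*B*(Fa - Fs), with B in MW/0.1 Hz
    (negative by convention), so the factor of 10 converts it to MW/Hz.
    Negative ACE means the area should raise generation. The time error
    bias that was added later is omitted.
    """
    interchange_error = net_interchange_mw - scheduled_interchange_mw
    frequency_term = 10.0 * bias_mw_per_0_1_hz * (frequency_hz - scheduled_frequency_hz)
    return interchange_error - frequency_term


def control_pulses(ace_mw: float, mw_per_pulse: float = 5.0,
                   deadband_mw: float = 2.0) -> tuple:
    """Turn ACE into a raise/lower direction and a pulse count (hypothetical scaling)."""
    if abs(ace_mw) <= deadband_mw:
        return ("none", 0)
    direction = "raise" if ace_mw < 0 else "lower"
    return (direction, round(abs(ace_mw) / mw_per_pulse))


# Exporting 100 MW against a 120 MW schedule while frequency sags to 59.98 Hz:
ace = area_control_error(100.0, 120.0, 59.98)
print(round(ace, 1), control_pulses(ace))   # -30.0 ('raise', 6)
```

Both terms point the same way in this example. The area is under-exporting by 20 MW, and the low frequency adds another 10 MW through the bias, so the pulses raise generation.
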
It was at this time that I moved to Pittsburgh and joined the Westinghouse group that was developing analog AGC. The technology then used a combination of recorder retransmitting slidewires and magnetic amplifiers. During this period the concepts of incremental cost and economic dispatch were refined. The incremental cost curves were implemented with resistors and diodes, so that when an analog voltage was applied, the output was a nonlinear curve representing the incremental cost curve of the unit or plant. Economic dispatch is based on the fact that a unit, once on line, can be loaded relative to the other units on line according to its incremental cost. However, its commitment to be put on line is a function of production cost, start-up cost, and other factors. By the late fifties several large AGC/EDC systems had been built, and a few even included large “B” matrix systems to compensate economic dispatch for transmission losses. These systems were large, expensive, and complicated, using hundreds of mag amps, recorders, servomechanisms, etc. There was, however, a strong justification for the complexity and expense of an automatic economic dispatch system with transmission loss compensation: a saving of a few percent in a large utility's production cost would amount to several million dollars a year.

By the late 50's/early 60's solid-state operational amplifiers became available, making the analog computing methods needed by these systems much easier to develop. Hundreds of systems using this technology were shipped in the 60's. These systems were usually similar in control configuration: they were type 1 control systems. The system's Area Control Error (ACE) was developed by comparing net interchange to desired interchange and adding a frequency bias term; later a time error bias was added. The ACE value was then filtered and processed.
The processed ACE was then added to total generation to develop a total requirement value, which the economic dispatch circuitry allocated to the individual generating units. This produced a unit generation requirement which, when compared to actual generation, developed a unit error signal. That signal was usually used to create control pulses, which were transmitted to the plant and applied to the governor motors to adjust generation in response to the error.

By the middle 60's real-time-oriented digital computers had advanced enough that the economic dispatch function could be done on the computer. Economic dispatch was typically done at intervals of approximately three minutes, while the other portion of AGC (typically called Load Frequency Control, LFC) had to execute as often as every few seconds. Thus the computer-controlled analog type of system was developed, in which economic dispatch with transmission loss compensation was done in the computer and the result was output as a digitally controlled set-point to the analog LFC system. Dozens of systems like this were shipped in the late 60's. At about this time, digital computers with real-time operating systems evolved to the point where the entire AGC algorithm could be implemented on the computer. By then I had been in the Systems Control group at Westinghouse for ten years, working mostly on analog systems, but I was fortunate to work on the earliest of the all-digital systems. Also at about this time I left Westinghouse to go with Control Data Corporation, which had been a SCADA vendor but wanted to develop AGC. Within the year we had an AGC system at CDC and our first order for a System Operation Computer.

Vendors

AGC evolved from recorders and retransmitting slidewires in the 40's and 50's. The primary vendor at the start was Leeds and Northrup, although by the late fifties both General Electric and Westinghouse had developed analog systems. These three were the primary vendors through the 60's.
After the 60's Control Data became an active vendor, and by the early 70's Harris Controls, TRW, IBM, and others had become involved.

System Operation Computers

By the late sixties the term System Operation Computer (SOC) had become common. These systems combined the SCADA and AGC functions with the forecast and scheduling functions. SCADA was still the most important and basic function. It became more sophisticated, with RTUs capable of more advanced functions such as local analog data monitoring, local sequence-of-events reporting (with resolution down to a few milliseconds), and local display and logging. There were even a few cases of substation computers taking over the RTU function; one of the first was at PG&E, a job I was fortunate to work on. Computer-based AGC also became common, and the algorithm became more capable, for example reallocating generation when units were constrained by maneuvering limits. The major addition, however, was the forecast and scheduling functions.

Forecast and Scheduling Functions

The three major functions involved are system load forecast, unit scheduling (unit commitment), and interchange negotiation. These functions are so important to the evolution of System Operation Computers that I will go into some detail about each.

Load Forecast

For a utility to schedule the generation it needs to meet each day's load, it needs an accurate forecast of the system load. Since correct scheduling of system resources can save a large utility hundreds of thousands of dollars each day (more on this later), an accurate load forecast is essential. During the 40's and 50's load forecasting was generally done by very experienced scheduling people in the system operation group. They used the patterns of loads that had actually occurred recently on different types of days.
They then considered the detailed weather forecasts for the scheduling period, usually obtained from the National Weather Service, as well as any events scheduled for the period, such as ball games and fairs. They then put together an hourly forecast for the scheduling period, usually two or three days. Typically the forecast was prepared the night before and adjusted early in the morning. As the load grew during the day it usually became necessary to adjust the forecast, but the adjustments were hopefully minor, since much of the unit scheduling had already occurred by then. An experienced, accurate forecaster was highly regarded.

In the 60's computers became fast enough to take over the forecasting function. Load forecast programs were adaptive, in that they used load data from the past several years to create a forecast based on the day of the year. This forecast was then adjusted according to the weather forecast and various data entered by the scheduler. The programs typically produced a forecast for a period of up to a week, although only the first several days were accurate enough to use. This is a very complex function, and the early programs were often so complex that, on the computers of the time, they took longer than the forecast period to run (a 24-hour forecast could take 30 hours). Simplifications were made, and the programs' results were then compared over time with the forecasts made by the experienced operator who had been forecasting. The computer-generated forecasts were as good or better for the second or third day, but rarely beat the scheduler for the next day. However, they were consistently reasonable, and they didn't depend on your forecaster working past his retirement date. The load forecast programs of the 70's and 80's became ever more sophisticated and accurate.

Unit Scheduling (Unit Commitment)

A utility must schedule enough generation to be on line at all times to meet the load plus the spinning reserve requirements. For a large utility this is a complex task. Each unit has a wide variety of constraints, such as start-up crew scheduling time, time to minimum load, loading ramp time once on line, and minimum on-line time. The units need to be scheduled hour by hour over the scheduling period, meeting all the constraints and the load. Since many of the constraints span several hours, the schedule for one hour affects the next few hours. For any hourly load level there may be 20 combinations of units that will meet the load. Costs are incurred for each unit that is committed, such as crew start-up costs, unit start-up costs, fuel production cost, and maintenance costs. The scheduling task is to find the combination of units for each hour of the scheduling period that meets all the constraints at minimum overall cost. Since there may be twenty valid combinations each hour, and each must be dispatched and costed, there is an enormous number of possible paths through a scheduling period of several days. However, there is only one optimal path: the one with the lowest overall cost. Finding the right schedule is very important, because the difference between the best schedule and the others may be tens of thousands of dollars a day, or millions in a year for a large utility.

Before computers were used, this task was done more or less manually by a generation scheduling group, based on experience. By the late 50's computer programs had been developed to help with the problem. The early unit commitment programs attempted to find the optimal path by investigating all combinations. The problem was that the program's running time was longer than the scheduling period (the running time for a 24-hour schedule might be 40 hours or more). Obviously the problem had to be simplified.

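
The search problem above can be illustrated with a deliberately simplified sketch. It makes the following assumptions:

- Economic dispatch has already produced the production cost of each valid combination in each hour, with `math.inf` marking a combination that cannot meet the load.
- The only coupling between hours is a start-up cost when units are added.
- Minimum up/down times, ramp limits, crew scheduling and spinning reserve are ignored.
- All numbers are hypothetical.

`brute_force` enumerates every path, the approach whose running time exceeded the scheduling period. `dynamic_programming` is a textbook forward recursion that keeps only the cheapest path into each combination at each hour, which is exact for this simplified model. The production programs of the period, described next, restricted their search to a few hours around the current one. That is why they came close to, but did not always match, the exhaustive answer.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def path_cost(path: Sequence[int], cost: Matrix, transition: Matrix) -> float:
    """Production cost of each hour plus start-up cost of each change of combination."""
    total = cost[0][path[0]]
    for t in range(1, len(path)):
        total += transition[path[t - 1]][path[t]] + cost[t][path[t]]
    return total


def brute_force(cost: Matrix, transition: Matrix) -> Tuple[float, List[int]]:
    """Examine every path: combinations ** hours candidates."""
    hours, combos = len(cost), len(cost[0])
    best = min(itertools.product(range(combos), repeat=hours),
               key=lambda p: path_cost(p, cost, transition))
    return path_cost(best, cost, transition), list(best)


def dynamic_programming(cost: Matrix, transition: Matrix) -> Tuple[float, List[int]]:
    """Keep only the cheapest path into each combination, hour by hour."""
    hours, combos = len(cost), len(cost[0])
    best = list(cost[0])                 # best[k]: cheapest path ending in combination k
    back: List[List[int]] = []           # back[t-1][k]: predecessor of k at hour t
    for t in range(1, hours):
        new_best, pred = [], []
        for k in range(combos):
            j = min(range(combos), key=lambda i: best[i] + transition[i][k])
            new_best.append(best[j] + transition[j][k] + cost[t][k])
            pred.append(j)
        best = new_best
        back.append(pred)
    k = min(range(combos), key=lambda i: best[i])
    total, path = best[k], [k]
    for pred in reversed(back):          # walk the predecessors back to hour 0
        k = pred[k]
        path.append(k)
    return total, path[::-1]


# Combinations: 0 = unit 1 only, 1 = units 1+2, 2 = units 1+2+3.
# Hypothetical dispatched production cost per hour (math.inf: cannot meet the load).
cost = [[100, 115, 140],        # hour 0, light load
        [math.inf, 180, 170],   # hour 1, peak
        [100, 115, 140]]        # hour 2, light load
# Start-up cost moving from combination j to k (unit 2: 30, unit 3: 25; shutting down is free).
transition = [[0, 30, 55],
              [0, 0, 25],
              [0, 0, 0]]
print(brute_force(cost, transition))          # (395, [1, 1, 0])
print(dynamic_programming(cost, transition))  # (395, [1, 1, 0])
```

In this example the cheapest schedule keeps the second unit on line through the light-load first hour rather than starting it at the peak. Its start-up cost of 30 exceeds the 15 of extra production cost for running it early, which is exactly the hour-to-hour coupling the text describes. With twenty combinations over 24 hours, enumeration would face 20**24 (about 1.7 × 10^31) paths. The recursion needs only about 24 × 20 × 20 comparisons.
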
Engineers applied the technique of dynamic programming, studying the combinations for the current hour and a few hours in the future and past to pick an optimal path. Studies showed the resulting path was not quite as good as checking all paths, but it was close and much better than could be done manually. Many variants of this approach were developed in the 70's and 80's; each required a lot of computing capability, and they were major cost justifications for a larger System Operation Computer.

Interchange Negotiation

During the 40's and 50's most utilities were connected with their neighbors and entered into interchange agreements to buy and sell power. There were two types of contracts: short term (usually the next hour), called Economy A, and longer term (from several hours up to six months), called Economy B. Economy A and/or Economy B contracts were often available at any time, and the decision on whether to enter into a contract to buy or sell was complex. Economy A contracts were easier to evaluate, since they primarily affected only the load level of the units on line. They could therefore be evaluated by using a study version of the economic dispatch function to determine the system production cost with and without the contract. Since these opportunities occurred often, a separate program called Economy A Interchange Negotiation was developed. This program would be run often during the day to evaluate contract opportunities or to propose contracts to neighboring utilities.

Evaluating or proposing Economy B interchange contracts, however, was a much more complex task. These contracts directly affected the unit commitment schedule and therefore had to be evaluated including unit commitment. An interchange contract has different constraints than a generating unit, so it cannot simply be considered as another potential unit to commit. To properly evaluate each contract, you have to run unit commitment with and without the interchange.
If there are many potential contracts, this can result in a lot of runs of the function. To alleviate the possible long run times, several versions of the Economy B Interchange Negotiation program utilized a simplified version of unit commitment. Another complication for some utilities is the need to include hydro generating units. Hydro units have a completely new list of constraints, such as dam outflow, river level, and pond level scheduling. They require an additional function to optimize hydro usage with respect to thermal generation. A few utilities even have some large units that can be used as pumped storage units; this adds a whole new level of complexity, as now you have to figure out not only how to commit a variety of units, but also when to pump and when to generate with the pumped storage units. During the 70's and later, these programs became more and more sophisticated. They were a major justification for a large computer system. They were relatively easy to justify, because you could take actual schedules prepared manually and compare their production costs with those from corresponding runs of the programs. Every time one of these studies was done, the results showed the programs would save millions of dollars over a year's time. Configurations: The most common configuration for these early SOC systems consisted of dual computers, with dual-ported process I/O and dual-ported communication interfaces. Because of the requirement for very high availability for the critical power system control functions, complete redundancy was required. One of the two process control computers acted as the primary processor in the configuration. The primary system periodically passed all time-critical data to the secondary, in a process called “check-pointing.” This was usually done over a high-speed data link between the processors. Data was check-pointed as soon as it was received by the primary system.
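The check-pointing and fail-over idea can be illustrated with a toy model. The class and point names are hypothetical, the copy stands in for the high-speed link between processors, and real configuration control also handled failure detection timing and switching of the dual-ported I/O, which this sketch leaves out.

```python
import copy


class Processor:
    def __init__(self, name):
        self.name = name
        self.alive = True
        self.database = {}  # time-critical data, e.g. telemetered values


class DualConfiguration:
    """Hypothetical primary/secondary pair kept in step by check-pointing."""

    def __init__(self):
        self.primary = Processor("A")
        self.secondary = Processor("B")

    def receive(self, point, value):
        self.primary.database[point] = value
        # Check-point immediately; the copy stands in for the high-speed link,
        # so the secondary never shares memory with the primary.
        if self.secondary.alive:
            self.secondary.database[point] = copy.deepcopy(value)

    def supervise(self):
        """Configuration control: promote the secondary if the primary has failed."""
        if not self.primary.alive and self.secondary.alive:
            self.primary, self.secondary = self.secondary, self.primary
            return True
        return False


cfg = DualConfiguration()
cfg.receive("LINE_12_MW", 145.0)
cfg.primary.alive = False  # simulate a primary failure
cfg.supervise()
print(cfg.primary.name, cfg.primary.database["LINE_12_MW"])  # B 145.0
```

Because every value is check-pointed on arrival, the promoted processor starts with the same data base the failed one had, which is what makes a “bump-less” transfer possible.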
A fail-over to the secondary system after a primary system failure had to be completed in a matter of seconds. The requirement was to achieve a “bump-less” transfer from the dispatcher's point of view: periodic functions such as AGC and SCADA would not be significantly interrupted. Man/machine functions in process, such as display call-ups, did not have to be completed, although long-running programs did have to continue execution. Meeting these reliability requirements meant that all process I/O, man/machine equipment, and communication interfaces had to be dual-ported and connected to each computer. One of the most important and complex functions of these systems was Configuration Control, which monitored the system, initiated fail-over, and allowed operator interaction with these functions. The computers used were process-control-capable processors with real-time operating systems. By the late 60's the user interface had evolved from push-buttons to CRTs. At first the CRTs were black-and-white, character-format units, used in conjunction with lighted push-button panels. By the early 70's, color CRTs with a limited graphic character set came into use. These could display dynamic one-line diagrams of the electric system. For cursor control, a trackball was used in conjunction with the keyboard cluster. Dispatchers were reluctant to give up lighted push-button panels for keyboards, but quickly adapted to them because of the greatly increased functionality. Vendors: In the late 60's the major vendors for SOC systems were General Electric, Westinghouse, and Leeds and Northrup. CDC, IBM, Harris Controls and TRW became a factor by the middle 70's. GE used its GETAC 400 series computers, Westinghouse its PRODAC 500 series, and L&N the Scientific Data Systems SDS 900 series. In the 70's CDC used its 1700 series, IBM its 1800 series, and Harris and TRW used various machines.
Energy Management Systems Network Analysis Functions The addition of network analysis functions in the middle 70's brought about the name Energy Management Systems (EMS). Dispatchers always needed a fast load flow calculation that they could use to investigate requests for clearances of equipment. They needed to know what the effect on the system would be if certain equipment was taken out of service. In the 40's and 50's, the only help available to them was engineering studies done by the system planning group using analog network analyzers, or later on, early digital power flows. These studies could not be done rapidly and were therefore of little value for daily operations. In the middle sixties, there was a lot of work to develop a faster load flow program for the computer. A load flow calculation for a large network (e.g., 300 nodes and 700 branches) is difficult, because the network equations are nonlinear and involve complex quantities with both real and imaginary parts. A direct solution of the network equations could take several days on the computers available in the sixties. An iterative approach was developed that greatly reduced the computing time. The first commonly used method was the “Gauss-Seidel” method, which solved the equation for each node, or “bus,” in turn and then iterated through the network. This typically required several hundred iterations for a solution, but was still much faster than a direct solution. Later the “Newton-Raphson” method came into use; it formed a matrix of partial derivatives (the Jacobian) relating changes in bus voltages to changes in bus power injections. Iterating with this method typically produced a solution in four to six iterations. By the late sixties it was possible to get load flow programs that would solve in 10 to 15 minutes, making them useful to dispatchers. I was involved in an interesting project in the late sixties that attempted to provide a fast power flow for dispatchers' use: a hybrid load flow.
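A minimal Gauss-Seidel load flow sketch follows, assuming a hypothetical three-bus system with one slack bus and two load (PQ) buses, all in per unit. Each sweep solves the network equation I = YV for one bus at a time, using the latest voltages of the other buses; the iteration count it reports depends on the tolerance and the network.

```python
def build_ybus(n, lines):
    """Bus admittance matrix from (from_bus, to_bus, series impedance) in per unit."""
    ybus = [[0j] * n for _ in range(n)]
    for i, k, z in lines:
        y = 1 / z
        ybus[i][i] += y
        ybus[k][k] += y
        ybus[i][k] -= y
        ybus[k][i] -= y
    return ybus


def gauss_seidel(ybus, s_inj, v, slack=0, tol=1e-8, max_iter=1000):
    """Solve I = YV bus by bus for PQ buses; returns (voltages, iterations)."""
    v = list(v)
    n = len(v)
    for it in range(1, max_iter + 1):
        max_change = 0.0
        for i in range(n):
            if i == slack:
                continue
            # Current injection implied by the scheduled power: I_i = conj(S_i / V_i)
            current = s_inj[i].conjugate() / v[i].conjugate()
            others = sum(ybus[i][k] * v[k] for k in range(n) if k != i)
            new_v = (current - others) / ybus[i][i]
            max_change = max(max_change, abs(new_v - v[i]))
            v[i] = new_v  # use the updated value immediately (Gauss-Seidel)
        if max_change < tol:
            return v, it
    raise RuntimeError("Gauss-Seidel did not converge")


# Hypothetical 3-bus system: bus 0 is the slack, buses 1 and 2 are loads (per unit)
LINES = [(0, 1, 0.02 + 0.06j), (0, 2, 0.08 + 0.24j), (1, 2, 0.06 + 0.18j)]
S_INJ = [0j, -0.5 - 0.2j, -0.4 - 0.15j]  # negative = load
ybus = build_ybus(3, LINES)
v, iterations = gauss_seidel(ybus, S_INJ, [1.0 + 0j, 1.0 + 0j, 1.0 + 0j])
for i, vi in enumerate(v):
    print(i, round(abs(vi), 4))
print("iterations:", iterations)
```

Newton-Raphson replaces these bus-by-bus sweeps with a simultaneous correction of all bus voltages using the Jacobian, trading more work per iteration for far fewer iterations.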
We developed an analog representation of the network using operational amplifiers. We then used the computer to output bus injections to the analog model and read in the line flows through analog inputs. The analog model solved the I = YV (network equation) portion of the calculation, and the computer iterated on the bus injections. It took about five or six iterations, and we were able to get solutions in about 10 seconds for a 300-bus system. However, it was difficult to maintain the analog and digital hardware, and the system was not very reliable. Also, digital computers were getting faster and faster by the late sixties, making an all-digital approach the best choice. I was presenting a paper on the hybrid load flow at a conference in Minneapolis when CDC contacted me, and I ended up going with them. The Dispatcher's Load Flow was a main part of the Network Analysis functions, but another function became a cornerstone of these systems: on-line security analysis. The idea was to build a model of the power system using load flow techniques, then update the model with real-time data so that it closely resembled the actual system. The model could then be periodically modified with possible contingencies, and each of these would be checked and any resulting failures reported. Those failures could in turn be handled as further contingencies, until a major outage was predicted. If all the feasible or likely, and significant, contingencies were checked, the dispatcher would be aware of the current risks to the system and could make changes to avoid them. The goal was to operate the system so that no first (single) contingency would cause further trouble. However, it was difficult to use a conventional load flow as the model, because the telemetered data did not ordinarily include all the bus injection data needed to update a load flow.
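The contingency checking idea can be sketched as follows. To keep it short, this sketch swaps in a DC power flow (line reactances only, flat voltage magnitudes) instead of the full AC load flow the text describes, and checks only single line outages; the three-bus network, line limits and injections are hypothetical values in per unit.

```python
def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular matrix: the outage islands part of the network")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def dc_flows(n, lines, p_inj, slack=0):
    """Line flows (per unit) from a DC power flow; lines are (from, to, x, limit)."""
    b = [[0.0] * n for _ in range(n)]
    for i, k, x, _ in lines:
        b[i][i] += 1 / x
        b[k][k] += 1 / x
        b[i][k] -= 1 / x
        b[k][i] -= 1 / x
    keep = [i for i in range(n) if i != slack]
    theta = [0.0] * n  # slack bus angle is the reference
    solved = solve([[b[i][k] for k in keep] for i in keep], [p_inj[i] for i in keep])
    for i, t in zip(keep, solved):
        theta[i] = t
    return [(theta[i] - theta[k]) / x for i, k, x, _ in lines]


def screen_contingencies(n, lines, p_inj):
    """Take each line out in turn and list post-outage overloads."""
    report = {}
    for out in range(len(lines)):
        remaining = [ln for j, ln in enumerate(lines) if j != out]
        flows = dc_flows(n, remaining, p_inj)
        overloads = [(ln[0], ln[1], round(f, 3))
                     for ln, f in zip(remaining, flows) if abs(f) > ln[3]]
        if overloads:
            report[lines[out][:2]] = overloads
    return report


# Hypothetical 3-bus ring: bus 0 generates (slack), buses 1 and 2 are loads (per unit)
LINES = [(0, 1, 0.1, 0.8), (0, 2, 0.1, 0.8), (1, 2, 0.1, 0.3)]
P_INJ = [1.0, -0.6, -0.4]
for outage, problems in screen_contingencies(3, LINES, P_INJ).items():
    print("outage of line", outage, "overloads:", problems)
```

Here losing either line out of the generating bus overloads the remaining path, while losing the tie between the two load buses does not; a report like this is what told the dispatcher the system was not secure against first contingencies.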
Instead, the telemetered data consisted primarily of line flows. To help resolve this problem, a group of engineers at MIT led by Fred Schweppe developed the State Estimator. This program took the telemetered data base and matched it to a load flow calculation using a least squares method, creating a model of the system that could use all of the telemetered data. It even used redundant measurements, and it indicated which measurements seemed to be out of line or erroneous. Thus the function not only recreated the needed model, it also served to verify the telemetered data base. Now that a model consistent with a load flow solution existed, it could be checked against contingencies using a load flow. However, for a large system there may be hundreds of valid contingencies to check, and they needed to be checked as often as every fifteen minutes. A very high-speed load flow was needed, and much work went into developing one. Since the power system network matrix is sparse (i.e., lines connect only a small fraction of all possible bus pairs), sparse matrix solution techniques were developed to speed up the solution. Another technique, developed by Brian Stott, Mark Enns and others, was the Decoupled Power Flow, in which the real and reactive power portions of the solution were separated during the iterations. The result was simplified load flows that solved in seconds for large networks, which allowed the Security Analysis function to be accomplished. Later additions to this group of functions included the Optimal Power Flow, which allowed production cost to be minimized while considering line losses, security constraints, etc. Configurations: EMS configurations were made up of various numbers of pairs of computers. Redundancy was required in order to achieve high availability; a typical specification required 99.8% or better availability for critical functions. One processor was generally the on-line processor, and the other the standby.
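The least squares idea behind the State Estimator can be sketched with a linearized (DC) measurement model, in which line flows and bus injections are linear in the bus voltage angles. This is an assumed simplification: practical estimators used the full AC model, and the bad-data step here (drop the measurement with the largest weighted residual and re-estimate) is a simplified stand-in for the normalized-residual tests used in practice. The network, measurement labels and readings are hypothetical; the line 0-2 flow reading of 0.700 per unit is a deliberately planted gross error.

```python
def solve(a, b):
    """Gaussian elimination without pivoting; adequate for the symmetric
    positive-definite gain matrix of a well-observed system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def wls(meas):
    """Weighted least squares: minimize sum(((z - h.x) / sigma)**2)
    by solving the normal equations (H^T W H) x = H^T W z."""
    n = len(meas[0][1])
    gain = [[sum(h[i] * h[j] / s**2 for _, h, _, s in meas) for j in range(n)]
            for i in range(n)]
    rhs = [sum(h[i] * z / s**2 for _, h, z, s in meas) for i in range(n)]
    return solve(gain, rhs)


def estimate(meas, threshold=3.0):
    """Re-estimate after dropping the worst measurement while any weighted
    residual exceeds `threshold` standard deviations."""
    meas = list(meas)
    rejected = []
    while True:
        x = wls(meas)
        scores = [abs(z - sum(hi * xi for hi, xi in zip(h, x))) / s
                  for _, h, z, s in meas]
        worst = max(range(len(meas)), key=scores.__getitem__)
        if scores[worst] <= threshold or len(meas) <= len(x) + 1:
            return x, rejected
        rejected.append(meas.pop(worst)[0])


# State x = [angle at bus 1, angle at bus 2]; bus 0 is the reference; all lines x = 0.1 pu
MEAS = [
    ("P01", [-10.0, 0.0], 0.535, 0.01),    # flow bus 0 -> 1
    ("P02", [0.0, -10.0], 0.700, 0.01),    # flow bus 0 -> 2 (planted gross error)
    ("P12", [10.0, -10.0], -0.065, 0.01),  # flow bus 1 -> 2
    ("P1", [20.0, -10.0], -0.600, 0.01),   # injection at bus 1
    ("P2", [-10.0, 20.0], -0.400, 0.01),   # injection at bus 2
]
theta, rejected = estimate(MEAS)
print([round(t, 4) for t in theta], rejected)  # [-0.0534, -0.0467] ['P02']
```

With redundant measurements the planted error stands out, is rejected, and the remaining four readings give a consistent set of angles, which is the sense in which the estimator both rebuilt the model and verified the telemetered data base.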
The standby processor was kept up to date by a “checkpoint” process that ran at frequent intervals. Some vendors' configurations were made up of as few as two main computers, while others used as many as six dual processors of different sizes. One technique used by CDC and others was to use smaller process-control-oriented computers for the “front end” functions such as SCADA, and larger computers, more capable of engineering calculations, for network analysis and forecast and scheduling functions. Often the user interface functions were also distributed to two or more separate computers. It was not uncommon to see configurations with up to a dozen computers, and the configuration control software had to be very sophisticated to manage these large distributed configurations. In the late 80’s and 90’s, very powerful server-oriented processors became available, and the trend toward distributed processing increased; some configurations now used thirty or more of these processors. Consultants: As these systems became larger and more complex, it became more and more difficult for a utility to specify and purchase a system. Starting in the late sixties to early seventies, a group of consulting companies developed that were capable of helping the utility specify, purchase, and in some cases implement these systems. The consultants developed expertise that was difficult for a utility to acquire, since a utility went through the process of acquiring a new system only every 10 years or so, while the consultants worked on several systems a year. Most of the consulting companies in this business were started by people who had worked for vendors, as that was where the detailed work was initially done. One early consulting firm was MACRO, which was formed mostly from people who left Leeds and Northrup. ECC was formed from people who left Westinghouse, and EMCA from people who left CDC. Other smaller firms, such as Stagg, were formed by people who used to work at GE.
Systems Control Incorporated (SCI) was associated with CDC, then became a consultant, and later a vendor. ESCA, a spin-off from SCI, likewise evolved from a consultant into a vendor. In the late 90’s and later, there was much consolidation among these consulting firms, and many were bought out by European companies. ECC and Macro were purchased by KEMA, a Dutch company, and ESCA by a French company. Vendors: Systems in the class to be called EMS developed in the seventies. The EMS market grew rapidly in the seventies and seemed to peak during the late eighties. At its peak, in the eighties, it was not uncommon for there to be a dozen bidders on an EMS specification, and the specifications could have come from as many as eight different consultants. Westinghouse, General Electric, and Leeds and Northrup moved into the market from their involvement with System Operation computers, as did Control Data Corporation. CDC became a driver in the market because its Cyber computers offered the large-scale computing capacity required by the network analysis functions. Harris Controls got involved in the middle seventies, and IBM was a factor several times during the period of heaviest EMS activity, becoming active and then fading out. TRW was a major vendor throughout the period. During the energy crisis of the late seventies, and the subsequent reduction in government defense contracts, several defense contractors tried to gain a foothold. Boeing and North American Rockwell took several large contracts each during this period, but left quickly when they found out utilities were not as apt to forgive cost overruns as the government was. Other vendors who were active for a while were Stagg Systems and Systems Control Inc. Later in the period, ESCA became a major factor in the market. There were close to a dozen vendors active by the late eighties. By the early nineties there was consolidation, as the market could not sustain that many vendors.
Westinghouse and GE left, and foreign vendors began to buy out some of the US vendors. This was during the energy crisis, at a time when electric utility load decreased, and traditional US utility apparatus vendors such as Westinghouse and GE either got out of the business or combined with foreign vendors. ESCA was bought out by the French, and CDC by the Germans. By the mid nineties there were fewer vendors: ESCA, Siemens (CDC), Harris, and other foreign vendors. However, as the market consolidated, some smaller companies that were spin-offs of other companies became active. Two of these that evolved from CDC are OATI and OSI. Projects These projects were large and expensive. Some large EMS systems cost over 40 million dollars, and projects would go on for five or more years. A typical project could take up to a year for the specification to be released, and the negotiation period with the vendors often lasted another year before the contract was awarded. Then the detailed project definition took another year or so, and implementation and testing up to three years. After the system was shipped, installation and testing often went on for several years. Therefore the total involvement between the utility, consultant and vendor on a project could last as long as ten years, and involve four or five consultants, 10 to 15 utility personnel and up to fifty vendor people. At different stages of a project, consultants and utility personnel lived at the vendor's site, and later vendor people stayed at the utility's location. As a result, close relationships developed among the various project staffs that lasted for long periods of time. As the project went on, all of the people involved usually took ownership of it, and they all worked together toward a satisfactory conclusion.
Similar government projects usually suffered large delays and cost overruns; however, because of this mutual involvement, such problems were usually avoided on these projects.