PPT'02 format - The High performance Computing and Simulation


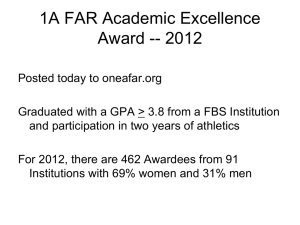

UPC Research Activities at UF
Presentation for UPC Workshop ’04
Alan D. George, Hung-Hsun Su, Burton C. Gordon, Bryan Golden, Adam Leko
HCS Research Laboratory, University of Florida

Outline
- FY04 research activities
  - Objectives
  - Overview
  - Design
  - Experimental Setup
  - Results
  - Conclusions
- New activities for FY05
  - Introduction
  - Approach
  - Conclusions

Research Objective (FY04)
- Goal
  - Extend Berkeley UPC support to SCI with a new SCI conduit.
  - Compare UPC performance on platforms with various interconnects using existing and new benchmarks.
- Figure: Berkeley UPC software stack.
  - Layers, top to bottom: UPC Code Translator, Translator-Generated C Code, Berkeley UPC Runtime System, GASNet Communication System, Network Hardware.
  - Side labels: platform-independent, network-independent, compiler-independent, language-independent.

GASNet SCI Conduit - Design
- Figure: SCI space layout between Node X (importing) and Node Y (exporting). Labels recoverable from the diagram:
  - Control Segments (N total): Control 1 ... Control X ... Control N.
  - Command Segments (N*N total): Command 1-X ... Command X-X ... Command N-X.
  - Payload Segments (N total): Payload 1 ... Payload X ... Payload N.
  - Message contents: AM Header, Medium AM Payload, Long AM Payload.
  - Other labels: Physical Address, Virtual Address, DMA Queues (Local), Local (in use), Local (free), Memory.
- Figure: polling flowchart. Box labels (arrow order is not recoverable from the extraction): Start Polling; Check Message Ready Flags; New Message Available?; Extract new message information; Enqueue all new messages; 1st Message Pointer = NULL?; Dequeue 1st message; Handle message; Process reply message; AM reply or control reply; Wait for Completion; Other processing; Exit Polling.
- The queue is constructed by placing a next pointer in the header that points to the next available message.

Experimental Testbed
- Elan, VAPI (Xeon), MPI, and SCI conduits
  - Nodes: Dual 2.4 GHz Intel Xeons, 1GB DDR PC2100 (DDR266) RAM, Intel SE7501BR2 server motherboard with E7501 chipset.
  - SCI: 667 MB/s (300 MB/s sustained) Dolphin SCI D337 (2D/3D) NICs, using PCI 64/66, 4x2 torus.
  - Quadrics: 528 MB/s (340 MB/s sustained) Elan3, using PCI-X in two nodes with QM-S16 16-port switch.
  - InfiniBand: 4x (10 Gb/s, 800 MB/s sustained) Infiniserv HCAs, using PCI-X 100, InfiniIO 2000 8-port switch from Infinicon.
  - RedHat 9.0 with gcc compiler V 3.3.2; SCI uses MP-MPICH beta from RWTH Aachen Univ., Germany. Berkeley UPC runtime system 1.1.
- VAPI (Opteron)
  - Nodes: Dual AMD Opteron 240, 1GB DDR PC2700 (DDR333) RAM, Tyan Thunder K8S server motherboard.
  - InfiniBand: Same as in VAPI (Xeon).
- GM (Myrinet) conduit (c/o access to cluster at MTU)
  - Nodes*: Dual 2.0 GHz Intel Xeons, 2GB DDR PC2100 (DDR266) RAM.
  - Myrinet*: 250 MB/s Myrinet 2000, using PCI-X, on 8 nodes connected with 16-port M3FSW16 switch.
  - RedHat 7.3 with Intel C compiler V 7.1, Berkeley UPC runtime system 1.1.
- ES80 AlphaServer (Marvel)
  - Four 1 GHz EV7 Alpha processors, 8GB RD1600 RAM, proprietary inter-processor connections.
  - Tru64 5.1B Unix, HP UPC V2.1 compiler.
* via testbed made available courtesy of Michigan Tech

IS (Class A) from NAS Benchmark
- IS (Integer Sort): lots of fine-grain communication, low computation.
- Poor performance in the GASNet communication system does not necessarily indicate poor performance in a UPC application.
- Figure: execution time (sec) for 1, 2, 4, and 8 threads on GM, Elan, GigE mpi, VAPI (Xeon), SCI mpi, SCI, and Marvel.

DES Differential Attack Simulator
- S-DES (8-bit key) cipher (integer-based).
- Creates basic components used in differential cryptanalysis: S-Boxes, Difference Pair Tables (DPT), and Differential Distribution Tables (DDT).
- Bandwidth-intensive application.
- Designed for high cache miss rate, so very costly in terms of memory access.
- Figure: execution time (msec) for sequential, 1, 2, and 4 threads on GM, Elan, GigE mpi, VAPI (Xeon), SCI mpi, SCI, and Marvel.

DES Analysis
- With increasing number of nodes, bandwidth and NIC response time become more important.
- Interconnects with high bandwidth and fast response times perform best.
  - Marvel shows near-perfect linear speedup, but processing time of integers is an issue.
  - VAPI shows constant speedup.
  - Elan shows near-linear speedup from 1 to 2 nodes, but more nodes are needed in the testbed for better analysis.
  - GM does not begin to show any speedup until 4 nodes, then minimal.
  - SCI conduit performs well for high-bandwidth programs but with the same speedup problem as GM.
  - MPI conduit is clearly inadequate for high-bandwidth programs.

Differential Cryptanalysis for CAMEL Cipher
- Uses 1024-bit S-Boxes.
- Given a key, encrypts data, then tries to guess the key based solely on the encrypted data using a differential attack.
- Has three main phases:
  - Compute optimal difference pair based on S-Box (not very CPU-intensive).
  - Perform main differential attack (extremely CPU-intensive). Gets a list of candidate keys and checks all candidate keys using brute force in combination with the optimal difference pair computed earlier.
  - Analyze data from differential attack (not very CPU-intensive).
- Computationally intensive (independent processes) plus several synchronization points.
- Parameters: MAINKEYLOOP = 256, NUMPAIRS = 400,000, Initial Key: 12345.
- Figure: execution time (s) for 1, 2, 4, 8, and 16 threads on SCI (Xeon), VAPI (Opteron), and Marvel.

CAMEL Analysis
- Marvel
  - Attained almost perfect speedup.
  - Synchronization cost very low.
- Berkeley UPC
  - Speedup decreases with increasing number of threads.
  - Run time varied greatly as number of threads increased.
  - Cost of synchronization increases with number of threads.
  - Hard to get consistent timing readings.
  - Still decent performance for 32 threads (76.25% efficiency, VAPI).
  - Performance is more sensitive to data affinity.
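
The 76.25% figure quoted for 32 threads on VAPI is a parallel efficiency. The slides do not state the formula used, so the following is only a minimal sketch assuming the conventional definitions (speedup = single-thread time / parallel time, efficiency = speedup / thread count). The function names are illustrative and do not come from the presentation.

```python
def speedup(t_single, t_parallel):
    """Conventional speedup: single-thread time over parallel time."""
    return t_single / t_parallel


def efficiency(t_single, t_parallel, threads):
    """Conventional parallel efficiency: speedup divided by thread count."""
    return speedup(t_single, t_parallel) / threads


def implied_speedup(eff, threads):
    """Invert the efficiency definition to recover the speedup it implies."""
    return eff * threads


if __name__ == "__main__":
    # Only values quoted on the slide are used here: 76.25% at 32 threads.
    s = implied_speedup(0.7625, 32)
    print(f"Implied speedup at 32 threads: {s:.1f}x")
```

Under these assumed definitions, 76.25% efficiency at 32 threads corresponds to a speedup of roughly 24.4 over a single thread.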

Conclusions (FY04)
- SCI conduit
  - Functional, optimized version is available.
  - Although limited by the current driver from the vendor, it is able to achieve performance comparable to other conduits.
  - Enhancements to resolve the driver limitation are being investigated in close collaboration with Dolphin.
    - Support access of all virtual memory on remote node.
    - Minimize transfer setup overhead.
  - Paper accepted by 2004 IEEE Workshop on High-Speed Local Networks.
- Performance comparison
  - Marvel
    - Provides better compiler warnings.
    - Has better speedup.
  - Berkeley UPC system is a promising COTS cluster tool
    - Performance on par with HP UPC.
    - VAPI and Elan are initially found to be strongest.

Introduction to New Activity (FY05)
- UPC Performance Analysis Tool (PAT)
- Motivations
  - UPC program does not yield the expected performance. Why?
  - Due to the complexity of parallel computing, the cause is difficult to determine without tools for performance analysis.
  - Discouraging for users, new and old; few options for shared-memory computing in UPC and SHMEM communities.
- Goals
  - Identify important performance "factors" in UPC computing.
  - Develop framework for a performance analysis tool.
    - As new tool or as extension/redesign of existing non-UPC tools.
  - Design with both performance and user productivity in mind.
  - Attract new UPC users and support improved performance.

Approach
- Define layers to divide the workload.
- Conduct existing-tool study and performance layers study in parallel to:
  - Minimize development time
  - Maximize usefulness of PAT
- Develop model to predict optimal performance.
- Figure: workflow diagram. Labels and their descriptions:
  - Tool Study: survey of existing tools with list of features as end result.
  - Literature Study / Intuition: survey of existing literature plus ideas from past experience.
  - Layers: Application Layer, Language Layer, Compiler Layer, Middleware Layer, Hardware Layer.
  - Measurable Factor List: features from tool study plus analyses and factors from literature study that are measurable.
  - Experimental Study I: preliminary experiments designed to test the validity of each factor.
  - Relevant Factor List: updated list including factors shown to have effect on program performance.
  - Irrelevant Factor List: factors shown not to be applicable to performance.
  - Experimental Study II: additional experiments to understand the degree of sensitivity, condition, etc. of each factor.
  - Major Factor List: collection of factors shown to have significant effect on program performance.
  - Minor Factor List: collection of factors shown NOT to have significant effect on program performance.
  - Performance Analysis Tool (PAT).

Conclusions (FY05)
- PAT development cannot be successful without UPC developer and user input.
- Develop a UPC user pool to obtain user input.
- Require extensive knowledge on how each UPC compiler works to support each of them successfully.
  - Compilation strategies.
  - Optimization techniques.
  - List of current and future platforms.
- Propose the idea of a standard set of performance measurements for all UPC platforms and implementations.
- What kind of information is important?
- Familiarity with any existing PAT? Preference if any? Why?
- Past experience on program optimization.
- Computation (local, remote).
- Communication.
- Develop a repository of known performance bottleneck issues.

Comments and Questions?

Appendix - Sample User Survey
- Are you currently using any performance analysis tools? If so, which ones? Why?
- What features do you think are most important in deciding which tool to use?
- What kind of information is most important for you when determining performance bottlenecks?
- Which platforms do you target most? Which compiler(s)?
- From past experience, what coding practices typically lead to most of the performance bottlenecks (for example, bad data affinity to node)?

Appendix - GASNet Latency on Conduits
- Figure: round-trip latency (usec) versus message size (1 byte to 1K bytes) for put and get on GM, VAPI, HCS SCI, Elan, and MPI SCI.

Appendix - GASNet Throughput on Conduits
- Figure: throughput (MB/s) versus message size (128 bytes to 256K bytes) for put and get on GM, VAPI, HCS SCI, Elan, and MPI SCI.
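
The two appendix plots report round-trip latency in microseconds and throughput in MB/s per message size. The slides do not describe the measurement code, so the following is only a rough sketch of how such quantities are commonly derived from a timed loop of transfers. It assumes 1 MB = 10^6 bytes, and `time_transfers` and `fake_transfer` are hypothetical stand-ins, not GASNet calls.

```python
import time


def round_trip_latency_usec(elapsed_s, iterations):
    """Average time per round trip, in microseconds."""
    return elapsed_s / iterations * 1e6


def throughput_mb_per_s(message_bytes, iterations, elapsed_s):
    """Bytes moved per second, in MB/s (assuming 1 MB = 10**6 bytes)."""
    return message_bytes * iterations / elapsed_s / 1e6


def time_transfers(transfer, message_bytes, iterations):
    """Time `iterations` calls of a caller-supplied transfer function."""
    start = time.perf_counter()
    for _ in range(iterations):
        transfer(message_bytes)
    return time.perf_counter() - start


if __name__ == "__main__":
    def fake_transfer(nbytes):
        # Hypothetical stand-in: allocates a local buffer instead of
        # performing a real remote put or get.
        bytes(nbytes)

    iterations = 1000
    for size in (128, 1024, 65536):
        elapsed = time_transfers(fake_transfer, size, iterations)
        print(size,
              round_trip_latency_usec(elapsed, iterations),
              throughput_mb_per_s(size, iterations, elapsed))
```

A real measurement would replace `fake_transfer` with the put or get operation under test and would normally include warm-up iterations before timing.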