The LVI kNN Analysis Process (Mar 27 2014)


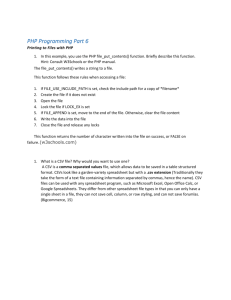

The Landscape Vegetation Inventory (LVI) Nearest Neighbour Analysis Process¹

1. Overview

These instructions describe how to complete nearest neighbour assignments for the purpose of estimating missing Y-variables in a Target Dataset (which contains X- but not Y-variables). X-variables are selected from a Reference Dataset (which contains both X- and Y-variables) to best estimate combinations of Y-variables. In this particular version of the process, combinations of Y-variables are grouped into classes. The next step is to explore different numbers and combinations of X-variables for the purpose of explaining differences among these classes (which are based on the Y-variable set). Certain metrics are produced to help the analyst determine the best number and combination of variables to use, and what weights (if any) to apply.

Having selected a preferred option, the chosen variables (and weights) are used to identify the k = 1 to n nearest neighbours (and associated distances) for each observation in the Target Dataset (where Y-variables are absent or missing). A Y-variable dataset is then generated for each observation in the Target Dataset, using its k = 1 to n nearest neighbours and their associated Y-variables in the Reference Dataset.

The entire process may also be applied to the Reference Dataset, and further analysis (bias; signed relative bias, or SRB; root mean squared prediction error, or RMSPE) carried out to compare different numbers and combinations of variables; different distance metrics (Euclidean, Mahalanobis, and a slightly twisted Most Similar Neighbour, or MSN); with or without distance weighting; with or without variable weighting; with or without variable transformations; with or without normalizing the data; and including or excluding the first (k = 1) nearest neighbour (when the process is run on the Reference Dataset, the k = 1 neighbour of each observation is normally the observation itself).
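The neighbour assignment and imputation step described above can be illustrated with a short numpy sketch. It assumes Euclidean distance on X-variables that have already been transformed and normalized, a plain mean of the k neighbours' Y-values (optionally inverse-distance weighted), and bias defined as predicted minus observed. The function names are hypothetical and are not part of the LVI code; the Mahalanobis and MSN metrics, variable weights and SRB are not shown.

```python
import numpy as np


def knn_impute(X_ref, Y_ref, X_target, k=3, exclude_self=False, weighted=False):
    """Estimate Y for each target row from its k nearest reference rows (Euclidean distance).

    Hypothetical helper for illustration; returns (Y_hat, neighbour indices, distances).
    """
    X_ref = np.asarray(X_ref, dtype=float)
    X_target = np.asarray(X_target, dtype=float)
    Y_ref = np.asarray(Y_ref, dtype=float)
    if Y_ref.ndim == 1:
        Y_ref = Y_ref[:, None]  # treat a single Y-variable as one column

    # Pairwise Euclidean distances, shape (n_target, n_ref).
    d = np.sqrt(((X_target[:, None, :] - X_ref[None, :, :]) ** 2).sum(axis=2))
    order = np.argsort(d, axis=1, kind="stable")

    # When the targets ARE the reference rows, position 0 is the row itself (distance 0),
    # so exclude_self skips it (assumes no exact duplicates in X).
    start = 1 if exclude_self else 0
    idx = order[:, start:start + k]
    dist = np.take_along_axis(d, idx, axis=1)

    if weighted:
        w = 1.0 / np.maximum(dist, 1e-12)  # inverse-distance weights (an assumption)
    else:
        w = np.ones_like(dist)
    w = w / w.sum(axis=1, keepdims=True)

    # Weighted mean of the neighbours' Y-values: (t, k) x (t, k, q) -> (t, q).
    Y_hat = np.einsum("tk,tky->ty", w, Y_ref[idx])
    return Y_hat, idx, dist


def bias_and_rmspe(Y_obs, Y_hat):
    """Per-variable bias (mean of predicted - observed) and RMSPE."""
    err = np.asarray(Y_hat, dtype=float) - np.asarray(Y_obs, dtype=float)
    return err.mean(axis=0), np.sqrt((err ** 2).mean(axis=0))
```

Passing the Reference Dataset as both the reference and the target with `exclude_self=True` reproduces the "excluding the k = 1 neighbour" comparison in a leave-one-out style.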
There is room for improvement in this process, including:
a) Developing testing procedures that make use of independent Training and Test datasets, wrapped within a Monte Carlo process that extracts different subsets of the Reference Data to serve as Training and Test datasets. This can already be done on a small scale by manually creating Training and Test datasets and running them through the process.
b) Extending the process to make use of ensemble modeling, i.e. multiple models for determining nearest neighbours, with heuristics to select the k nearest neighbours based on distance or on some kind of voting procedure (much like Random Forests).
c) Integrating other procedures, such as Random Forests, into the overall framework.
d) Refactoring some legacy code so that it is fully compliant with the design standards for this system (discussed briefly below).

One of the key strengths of this process is its programming framework, which makes use of both R and Python. R has tremendous depth in terms of the statistical packages available, but its data handling routines are not as flexible as Python's. Within the Python environment, extensive use is made of numpy, which was developed to handle array and matrix algebra and provides significant advantages in processing speed. The two coding environments are integrated by launching Python code from within R (which is relatively simple to do), with communication by way of input and output files that follow standard naming conventions.

¹ Prepared by: Ian Moss PhD, Tesera Systems Inc., 619 Goldie Avenue, Victoria, BC V9B 6C1. Phone: 250.391.0975. Email: ian.moss@tesera.com. April 10, 2014.
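Improvement (a) could be prototyped without changing the existing workflow. The sketch below is one possible reading of a Monte Carlo Training/Test procedure, not an existing part of the framework: it draws repeated random partitions of the Reference Dataset's row indices and summarizes whatever score a caller-supplied `evaluate(train_idx, test_idx)` function returns (for example an RMSPE obtained by finding neighbours among the Training rows for each Test row). The function names, the 30% test fraction and the 100 repetitions are illustrative assumptions.

```python
import numpy as np


def monte_carlo_splits(n_obs, test_fraction=0.3, n_reps=100, seed=0):
    """Yield (train_idx, test_idx) pairs, each a random partition of range(n_obs)."""
    rng = np.random.default_rng(seed)
    n_test = int(round(n_obs * test_fraction))
    for _ in range(n_reps):
        perm = rng.permutation(n_obs)
        # First n_test shuffled rows form the Test set; the rest form the Training set.
        yield np.sort(perm[n_test:]), np.sort(perm[:n_test])


def monte_carlo_score(n_obs, evaluate, **split_kwargs):
    """Apply evaluate(train_idx, test_idx) -> float to every split; return (mean, sample std)."""
    scores = np.array(
        [evaluate(tr, te) for tr, te in monte_carlo_splits(n_obs, **split_kwargs)]
    )
    return scores.mean(), scores.std(ddof=1)  # needs n_reps >= 2
```

The spread of the scores across repetitions gives a rough indication of how stable a given variable set or distance option is, which a single manual Training/Test split cannot show.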
The modular approach to the development of this code, along with a few basic coding standards, allows individuals to add to the framework. These standards are: use of configuration files; internal (program) and external (application) documentation; separation of routines designed to manage workflows from routines designed to carry out particular functions or analyses; and use of a few standard data management files, such as the data dictionary and the files used to manage variable selection. Individuals can add to the framework by reusing existing code with a minimum of additional new code, and new code should be developed along similar lines to further enhance the toolset, continue the spirit of sharing and save collective time (how many times does one have to write a read.csv statement in R and attach variable names?). The concept is that the framework could continue to be built and shared with others in an open source environment. Of course, one (or more) gatekeeper(s) are needed to ensure that the code remains reasonably well organized and that any new code does indeed meet the requirements. Ultimately, the design of this system has also been established with the idea that it can be web-enabled.

2. The Process
2.1. Data preparation: Y-Variable Dataset (Reference Data)
2.1.1. Natural log and log odds transformations
2.2. Data preparation: X-Variable Dataset (Reference & Target Data)
2.2.1. Remote sensing data
2.2.2. Ancillary variables/datasets
2.2.2.1. Stage Terrain Variables (Slope, Aspect, Elevation):
/Rwd/Python/Config/stageTerrainConfig.txt
/Rwd/Python/ComputeStageAspectElevVariables.py
To join variables to the original dataset:
/Rwd/Python/Config/innerJoinConfig.txt
/Rwd/Python/innerJoinLargeDataset.py
2.2.2.2. ClimateWNA Variables: http://climatewna.com/ [Accessed April 3 2014]
2.2.2.3. Biogeoclimatic Ecosystem Classification (BEC): Terrestrial Ecosystem Mapping (TEM), Predictive Ecosystem Mapping (PEM): http://www.env.gov.bc.ca/ecology/tem/ [Accessed April 3 2014]
2.2.2.4. Existing Vegetation Resource Inventory (VRI) Data
2.2.2.5. UTM Coordinates and/or latitude and longitude
2.2.2.6. Terrain Indices²
2.2.3. About Config Files
2.2.3.1. Detailed descriptions for each data entry are contained in: /Rwd/Python/…ConfigInfo.txt
2.2.4. Natural log and log odds transformations:
2.2.4.1. Natural log transformations (A is an untransformed variable); recommended for HT³, AGE⁴, G⁵, N⁶, V⁷:
A' = LN(A + 1)
This transformation works as long as A is non-negative.
2.2.4.2. Log odds transformations (for proportions between 0 and 1); recommended for CC⁸:
if p = 0 then: lodds = ln(0.01/0.99)
else if p = 1 then: lodds = ln(0.99/0.01)
else: lodds = ln(p/(1 - p))
2.2.5. Data dictionary standard format (* = mandatory; may be required for quality control/error detection):
TVID*, TABLENAME*, VARNAME*, NEWVARNAME, VARTYPE*, VARDEFAULT, SELECT, DESCRIPTION

² Wilson, J.P. 2012. Digital terrain modeling. Geomorphology 137:107-121. Hijmans, R.J. and van Etten, J. 2013. Package raster. http://cran.at.r-project.org/web/packages/raster/raster.pdf [Accessed April 3 2014]
³ Height (m)
⁴ Age (y)
⁵ Basal area (m² ha⁻¹)
⁶ Number of trees per hectare (ha⁻¹)
⁷ Volume (m³ ha⁻¹)
⁸ Crown closure expressed as a proportion rather than a percent.

2.3. Fuzzy C-Means Classification
When applying this process for the purpose of selecting an X-Variable set to best explain differences in selected Y-Variables, it is recommended (as a general guideline) that 4 systems of classification be developed, each with 5 (4-6) classes. The next step (after 2.4 and 2.5) involves using these classifications in Multiple Discriminant Analysis (MDA) to select an X-Variable set.
2.3.1. Classifications⁹:
SPECIES (proportions)
HEIGHT-AGE (log transformed and normalized)
STRUCTURE¹⁰ (G, N, CC, …; log transformed and normalized; log odds for CC)
DEAD¹¹ (G, N; log transformed and normalized)

⁹ A system of site productivity classification (e.g. site index) should also be mentioned as a potential Y-variable representation, but in the LVI case this system is included as part of the X-variable dataset.
¹⁰ Live trees
¹¹ Dead trees

2.3.2. Variable Selection:
2.3.2.1. XVARSELV1.csv: TVID, VARNAME, VARSEL (enter X, Y or N or …)
2.3.3. Fuzzy C-Means Algorithm:
\Rwd\Python\config\cmeansConfig.txt
\Rwd\Python\lviClassification_v2.py
2.3.4. Outputs:
xCLASS.csv: assignment of classes to each observation
xCENTROID.csv: centroid statistics
xMEMBERSHIP.csv: class memberships for each observation
2.4. Join classes (xCLASS.csv) to the (Reference) dataset and produce box plots:
2.4.1. Join datasets:
/Rwd/Python/Config/innerJoinConfig.txt
/Rwd/Python/innerJoinLargeDataset.py
2.4.2. Produce box plots
2.4.2.1. Indicate the (Y) variables to be displayed with reference to (each) classification:
2.4.2.1.1. Fill out XVARSELV1.csv (for the Reference Dataset).
2.4.2.1.2. Run the R box plot routine: /Rwd/RScript/XCreateXorYVariableClassificationBoxPlots.R
Complete the definition of the user inputs at the top of the routine, then run the script.
2.5. Variable Selection
The objective of this process is to identify the X-Variables that best explain the Y-Variables, where the Y-Variables have been recast as classifications in accordance with 2.3.
2.5.1. Select the X-Variables eligible for use in MDA (i.e. available in both the Reference and Target data) using the XVARSELV1.csv file format: enter an X in the VARSEL column if eligible; otherwise enter Y or N.
2.5.2. Make a copy of XVARSELV1.csv; the next R script will make changes to it.
2.5.3. Using the Reference dataset, run the variable selection procedure: /Rwd/RScript/XIterativeVarSelCorVarElimination.R
Review and complete the user inputs, then run the script. See Appendix A for details regarding this procedure.
2.6. Run linear discriminant analysis for multiple X-variable datasets.
/Rwd/RScript/XRunLinearDiscriminantAnalysisForMultipleXVariableSets.R
The analyst uses the results of this process to select a (set of) discriminant function(s) with n variables to determine similarities and differences among the observations with respect to one (or more) systems of classification. In most cases the number of variables included in a given set of discriminant functions (for a given classification) will be between 5 and 10. The goal is to find the largest KHAT statistic (Appendix B, Step 12) associated with a relatively small number of variables (the latter to reduce the potential for overfitting). In addition, when looking at the variance explained in association with each classification (BTWTWCR4 in BWRATIO.csv; Appendix B, Step 11), a better solution is one in which the variance explained is spread across a range of discriminant functions rather than heavily weighted toward the first axis, and one with a greater total variance explained across all of the functions. Lastly, it is useful to review the variables included in the equations and to look at how the selected variables distinguish among the classes. This can be done as follows:
2.6.1. Create X- or Y-variable classification box plots: /Rwd/RScript/XCreateXorYVariableClassificationBoxPlots.R
2.6.1.1. Input the file name (lviFileName); e.g.
lviFileName <- "E:\\Rwd\\QNREFFIN10.csv"
2.6.1.2. Input excludeRowValue and excludeRowVarName (in lviFileName); e.g.
excludeRowValue = -1
excludeRowVarName <- 'SORTGRP'
2.6.1.3. Input the variable (in lviFileName) containing the class assigned to each observation; e.g.
classVariableName <- 'CLASS5'
2.6.1.4. Input the name of the file containing the X or Y variable name selections; e.g.
xVarSelectFileName <- "E:\\Rwd\\XVARSELV1_BoxPlot.csv"
Note that this is the standard XVARSELV1.csv format with a filename suffix (_BoxPlot or some other user-defined suffix) before the .csv extension, to indicate that the file is to be used for this purpose only.
2.6.1.5. Enter the variable selection criteria ("X", "Y" or some other criteria); e.g.
xVarSelectCriteria <- "Y"
The routine produces box plots for viewing only; each box plot describes the range of data associated with each of the classes listed under classVariableName. This can help the analyst understand the influence of each indicator in explaining similarities and differences among the classes and, as a result, provides a more intuitive understanding of what the discriminant functions are doing.
2.7. Select the preferred discriminant functions (VARSET) for each classification (including or excluding dead tree attributes).
2.7.1.

Appendix A. The Variable Selection Process
/Rwd/RScript/xIterativeVarSelCorVarElimination.R
This routine requires some explanation because it has three layers: the top layer initializes and manages an iterative routine; the middle layer manages the main routine, including running both Python and R scripts; and the bottom layer contains the individual scripts that are activated as directed by the middle layer.
There are a few restrictions on the current code that should be noted in advance:
1. Certain file names are identified as being flexible in naming and location, but as a result of prior decisions it is suggested that these names not be changed.
2. Similarly, all files that are shared between R and Python routines should keep the same name and location, i.e. the top of the "\\Rwd" directory.
3. Flexibility has been built into the process in anticipation of future developments.
The following is a more detailed description of what this routine is doing.
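The four subset criteria listed under item g) of the Top Layer below are all functions of the effects (H) and error (E) sums-of-squares-and-cross-products matrices. As an illustration only, the following numpy sketch computes them for one candidate set of X-variables using the textbook definitions. It does not call the subselect package, the function names are hypothetical, and ccr12 is taken here as the first squared canonical correlation, λ1/(1 + λ1), which increases with Roy's largest root λ1 of HE^(-1).

```python
import numpy as np


def sscp_matrices(X, groups):
    """Return (T, E, H): total, within-class (error) and between-class (effects) SSCP matrices."""
    X = np.asarray(X, dtype=float)
    groups = np.asarray(groups)
    centred = X - X.mean(axis=0)
    T = centred.T @ centred
    E = np.zeros_like(T)
    for g in np.unique(groups):
        Xg = X[groups == g]
        dev = Xg - Xg.mean(axis=0)
        E += dev.T @ dev
    H = T - E  # T = H + E
    return T, E, H


def lda_criteria(X, groups):
    """Compute ccr12, tau2, xi2 and zeta2 for one candidate variable subset (columns of X)."""
    T, E, H = sscp_matrices(X, groups)
    r = np.linalg.matrix_rank(H)
    # Eigenvalues of E^(-1)H equal those of HE^(-1); keep the real parts, largest first.
    lam = np.sort(np.linalg.eigvals(np.linalg.solve(E, H)).real)[::-1]
    wilks = np.linalg.det(E) / np.linalg.det(T)  # Wilks' lambda
    pillai = np.sum(lam / (1.0 + lam))           # Bartlett-Pillai trace
    V = np.sum(lam)                               # trace(HE^(-1))
    return {
        "ccr12": lam[0] / (1.0 + lam[0]),
        "tau2": 1.0 - wilks ** (1.0 / r),
        "xi2": pillai / r,
        "zeta2": V / (V + r),
    }
```

All four indices lie between 0 and 1 and larger is better; with only two classes (r = 1) they reduce to the same value, which is a convenient check on any implementation.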
TOP LAYER
User inputs
a) The name of the file containing the data:
lviFileName <- "E:\\Rwd\\fileName.csv"
The user enters information to the right of <- (the R assignment operator, equivalent to an equals sign, "="). Always include the drive letter followed by a colon (best practice). Always use double quotes when specifying file locations, and always use a double backslash to separate directories. File names include the full path of where the file is located plus the file extension. The file must be comma delimited.
b) The user may choose to exclude certain rows (observations, according to a criterion):
excludeRowValue <- 1
excludeRowVarName <- 'SORTGRP'
The user enters information to the right of <- (this will not be repeated again). If the file does not have a column named excludeRowVarName, or there is no value equal to excludeRowValue, then nothing will be excluded. This program only allows simple exclusions (by one variable name and one value); more complicated exclusions should be done when preprocessing the data if users are going to use this script.
c) Indicate the classification (Y) variable to be used in the discriminant analysis:
classVariableName <- 'aClassification'
The classes are assigned to each observation according to those listed under a column named 'aClassification'. The classes may be numeric or character in this case. R requires that numeric class assignments be declared as factors; this declaration is always applied to the variable name entered here.
d) Identify the file containing the (X) variables to be selected for inclusion in the analysis:
xVarSelectFileName <- "E:\\Rwd\\XVARSELV1.csv"
xVarSelectCriteria <- "X"
This is a standard variable selection file used in many different analyses and workflows. This document refers to the file as XVARSELV1.csv; the name can be changed in general, but for this specific routine it should not be changed.
This file must contain the following column names: TVID; VARNAME; VARSEL.
TVID is a unique id assigned to each row; it must be an integer.
VARNAME must be a variable name that is also contained in the data file. Not all of the variable names in the data file need to be included in XVARSELV1.csv, but any variable you want to select for analysis must be included here.
VARSEL: use an X to indicate the variables that are to be selected as X-Variables and a Y to indicate variables selected as Y-Variables (this applies generally but not in this case); otherwise use an N to indicate that the variable is not selected.
e) Set the minimum and maximum number of variables to select:
minNvar <- 1
maxNVar <- 20
The variable selection routine will explore different numbers of variables ranging from the minimum, minNvar, to the maximum, maxNVar, as defined by the user (both must be integers). It is generally recommended that minNvar always be set to 1, since this provides a benchmark for the single most important variable for explaining most of the differences among the classes. Generally speaking, 20 variables will be too many, in part because as the number of variables increases there is a danger of overfitting (i.e. variables appear to add some explanatory power when in reality they add nothing and may in fact produce worse results; see http://en.wikipedia.org/wiki/Overfitting).
f) Set the number of solutions to be generated for each number of variables:
nSolutions <- 10
For each number of variables between minNvar and maxNVar, the routine generates a number of variable selections equal to nSolutions. The routine uses the R subselect package: ldaHmat sets up the discriminant analysis matrices, and the search itself is run by the package's improve routine (see Middle Layer, Step 8). The search selects a specified number of variables at random and then substitutes other variables, also at random, for those already in the subset. If a substitution produces a gain according to a given criterion (see below), the new variable replaces the old one; the old one is then precluded from further inclusion. With only 1 variable the tendency is, of course, to produce the same result for all nSolutions, but as the number of variables to be included in the model increases, so too does the number of different combinations of variables.
g) Set the criterion by which one variable is identified as preferred over another, which therefore also determines when there is no further gain to be found:
criteria <- "xi2"
There are 4 different criteria by which variables may be selected. The current process only allows 1 criterion to be used at a time. Each criterion is to be entered in quotation marks as indicated above. The xi2 criterion was adopted as the preferred choice after some early testing, but this could be further explored. There would be nothing wrong with running this routine repeatedly, starting with different criteria, and then combining the results. The different criteria are as follows (H is the effects matrix, E the error (residual) matrix, T = H + E the total matrix, and r the rank of H):
o "ccr12": maximize Roy's first root statistic (i.e. the largest eigenvalue of HE^(-1)).
o "tau2": minimize Wilks' lambda, where lambda = det(E)/det(T) (equivalently, maximize tau2 = 1 - lambda^(1/r)).
o "xi2": maximize the xi-squared (xi2) index, based on the Bartlett-Pillai trace test statistic.
o "zeta2": maximize the zeta-squared (zeta2) coefficient, where V = trace(HE^(-1)) and zeta2 = V/(V + r).
h) Enter the path and name of the unique variable file:
uniqueVarPath <- "E:\\Rwd\\UNIQUEVAR.csv"
This file path and name should not be changed; it is included here only in anticipation that this restriction will someday be removed. The file is used to identify the unique variable names among all of the variable combinations, and it is one of those files (like XVARSELV1.csv) that is referred to in both the Python and R environments.
i) Enter the name of the correlation matrix print file:
printFileName <- "UCORCOEF.csv"
This is the file that contains the Pearson correlation coefficients among all pairs of variables contained in UNIQUEVAR.csv. This file name should not be changed. Note that the file path is not included; the file will be written to the current working directory (see k below).
j) Enter the name of the file containing a count of the number of eligible X-variables in XVARSELV1.csv:
xVarCountFileName <- "E:\\Rwd\\XVARSELV1_XCOUNT.csv"
The use of this file is explained below. The file will not exist at the start of the run; it is initiated as part of the process, and the file name should not change.
k) Set the working directory:
wd <- "E:\\Rwd"
The working directory is where R reads files from, unless otherwise directed, and where it writes files. Note that there is no double backslash, "\\", at the end in this case. Lastly, files used by both Python and R should generally be at the top of the Rwd directory; files that are read only into R, such as the data file, can be located anywhere. The reason goes back to the original design of the process integrating R and Python code, which started with the idea that all of the input and output files would be placed in a common directory and that interactions between the Python and R code would be based on a standard set of file names. Recent developments have been moving toward a more flexible input-output file naming and location convention, but this has not been fully implemented, so the recommendation is that the older standards and design rules be maintained until such time as they change.

Begin Processing
Step 1. Initialize the XVARSELV1_XCOUNT.csv file.
This simply counts the number of X-variables in the XVARSELV1.csv file. The count is needed to stop the routine when there are no more variables to be removed as a result of high correlations. The file consists of only 1 number. A Python module (COUNT_XVAR_IN_XVARSELV1.py) is called to do this.
Step 2. Read XVARSELV1_XCOUNT.csv.
This step reads XVARSELV1_XCOUNT.csv to initialize the count of X-variables within the R programming environment.
Step 3. (A) Initialize the variable selection process.
This step calls another R script (the Middle Layer): /Rwd/RScript/ZCompleteVariableSelectionPlusRemoveCorrelationVariables.r
This routine is described in more detail below. It runs through the entire variable selection process, exploring solutions with a number of variables ranging from minNvar to maxNVar, with nSolutions generated for each number of variables. The criterion used to select the best variable set is also defined in the User Inputs section. Once the task is completed: the results are written to XVARSELV.csv; a unique list of variables is extracted and written to UNIQUEVAR.csv (or as defined by users); the variables are ranked according to their relative importance and written to VARRANK.csv; the Pearson correlation coefficients are calculated for each pair of variables in UNIQUEVAR.csv; where a correlation is ≥ 0.8 or ≤ -0.8, the less important variable of the pair (according to VARRANK.csv) is deselected in XVARSELV1.csv so that it is no longer eligible for selection the next time around; and XVARSELV1_XCOUNT.csv is updated with the new X-variable count.
Step 3. (B) Bring the newly updated X-variable count into the R environment.
Step 4. Iterate Steps 3(A) and 3(B). Stop when there are no more changes in the number of selected X-variables indicated in XVARSELV1_XCOUNT.csv, i.e. when the correlations among the unique set of variables are all < 0.8 and > -0.8.
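The top-layer loop and the correlation screen it drives (Middle Layer Steps 16 to 20, described below) can be summarized in a short Python sketch. It is not the code in REMOVE_HIGHCORVAR_FROM_XVARSELV.py or COUNT_XVAR_IN_XVARSELV1.py: it works on in-memory dictionaries rather than on XVARSELV1.csv, UCORCOEF.csv and VARRANK.csv, and `run_selection` is a hypothetical placeholder for the subselect search. The ±0.8 limit and the IMPORTANCE formula are taken from this document; judging each pair independently and the tie-breaking rule are assumptions.

```python
import numpy as np


def importance(rank, p):
    """IMPORTANCE = SQRT(1/RANK * SQRT(p)), as defined for VARRANK.csv."""
    return float(np.sqrt((1.0 / rank) * np.sqrt(p)))


def deselect_correlated(data, unique_vars, imp, varsel, limit=0.8):
    """Step 19 analogue: for every pair in unique_vars with r >= limit or r <= -limit,
    change the less important variable's VARSEL flag from 'X' to 'N'."""
    if len(unique_vars) < 2:
        return varsel
    corr = np.corrcoef(np.column_stack([data[v] for v in unique_vars]), rowvar=False)
    for i in range(len(unique_vars)):
        for j in range(i + 1, len(unique_vars)):  # j > i skips VARNAME1 == VARNAME2
            if corr[i, j] >= limit or corr[i, j] <= -limit:
                a, b = unique_vars[i], unique_vars[j]
                # Each pair is judged on its own; on a tie the second variable is dropped.
                loser = a if imp[a] < imp[b] else b
                varsel[loser] = "N"
    return varsel


def count_x(varsel):
    """Steps 1 and 20 analogue: number of variables flagged 'X'."""
    return sum(flag == "X" for flag in varsel.values())


def iterate_until_stable(data, varsel, run_selection, limit=0.8, max_iter=50):
    """Steps 3-4 analogue: repeat selection and elimination until the X count stops changing.

    run_selection(data, varsel) -> (unique_vars, importance_by_var) is a hypothetical
    stand-in for Middle Layer Steps 1-15 (subselect search, UNIQUEVAR.csv, VARRANK.csv).
    """
    count = count_x(varsel)
    for _ in range(max_iter):
        unique_vars, imp = run_selection(data, varsel)
        varsel = deselect_correlated(data, unique_vars, imp, varsel, limit)
        new_count = count_x(varsel)
        if new_count == count:  # no change: stop, as in Step 4
            break
        count = new_count
    return varsel
```

Because the selection is re-run after every pass, a variable removed early can change which variables appear in UNIQUEVAR.csv on the next pass; the loop only ends once a pass removes nothing.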
MIDDLE LAYER
/Rwd/RScript/ZCompleteVariableSelectionPlusRemoveCorrelationVariables.R
The user does not generally need to see this layer unless there are errors that are hard to detect. All of the user inputs to this layer are set in the top layer. However, if the process fails to run to completion, it can be helpful to open and run this script to reveal more about the probable source of the error. Running the top layer initializes the user inputs; beyond that point, errors are more easily isolated to specific steps by (re)running the middle layer. Diagnostics will then indicate where the failure occurred according to each of the steps highlighted below, and if necessary the individual R scripts or Python modules can be run in an interpreter to further diagnose the cause of the error. Because this code has been tested and used on many different machines, the error will generally be associated with the data or the user-defined inputs rather than with the programming. Most of this experience is in a Windows 7 operating environment.
As an additional point, routines prefixed with Z are scripts that are called by other scripts; they should not be altered unless they are also renamed and incorporated into a new workflow process (outside of the routine currently under discussion).
Step 1. Load the dataset (as assigned to lviFileName under User Inputs, above).
source("E:\\Rwd\\RScript\\ZLoadDatasetAndAttachVariableNames.r")
This middle layer consists almost entirely of source (or system) commands that call specific R scripts (or Python modules, in the case of the system command). This script loads the dataset into R and "attaches" the variable names in the first row to the corresponding columns.
It is generally recommended that the first column consist of unique (primary) identification numbers or names assigned to each row (observation).
Step 2. Exclude rows with excludeRowValue associated with excludeRowVarName.
source("E:\\Rwd\\RScript\\ZExcludeRowsWithCertainVariableValues.r")
Step 3. Declare the classification variable as a factor (as assigned to classVariableName under User Inputs).
source("E:\\Rwd\\RScript\\ZDeclareClassificationVariableAsFactor.r")
Step 4. Complete variable selection (xVarSelectFileName; XVARSELV1.csv) according to the criteria (xVarSelectCriteria).
source("E:\\Rwd\\RScript\\ZSelectXVariableSubset_v1.r")
Step 5. Remove variables with 0 standard deviation.
source("E:\\Rwd\\RScript\\ZRemoveVariablesWithZeroStandardDeviation.r")
Step 6. Load the subselect R package.
source("E:\\Rwd\\RScript\\Loadsubselect-R-Package.r")
This is the R package containing the key routines used to do the variable selection. A number of variable selection routines are available in this package, all of which are loaded into memory when the package is loaded, as in this case.
Step 7. Run the subselect linear discriminant analysis.
source("E:\\Rwd\\RScript\\RunLinearDiscriminantAnalysis_subselect_ldaHmat.r")
This routine initializes linear discriminant analysis using the selected X-Variable data from lviFileName and the class assignments made according to classVariableName. The classes in classVariableName form the Y-variable in the multiple discriminant analysis.
Step 8. Run the subselect linear discriminant analysis variable improvement routine.
source("E:\\Rwd\\RScript\\ZRun-ldaHmat-VariableSelection-Improve.r")
This routine implements the variable selection procedure.
Step 9. Extract the variable name subsets from the variable selection process.
source("E:\\Rwd\\RScript\\ExtractVariableNameSubsets.r")
This script creates the data that are written to VARSELECT.csv in the next step.
Step 10. Overwrite VARSELECT.csv.
source("E:\\Rwd\\RScript\\ZWriteVariableSelectFileToCsvFile.r")
Note that if VARSELECT.csv already exists in the R working directory (/Rwd/), it will be overwritten. VARSELECT.csv contains the results of the variable selection as follows:
UID: a unique id associated with each row.
MODELID: the model number, representing a particular solution defined by a certain number and kind of variables selected. A particular solution (in terms of the number and kinds of variables) may occur more than once and therefore be represented under more than 1 MODELID.
SOLTYPE: the number of variables associated with each MODELID.
SOLNUM: 1 to nSolutions (user defined; see Top Layer, f) for each solution type, to which a unique MODELID has been assigned.
KVAR: the variable number within a given MODELID.
VARNUM: the number of the variable identified as being in the equation by the subselect R package; it relates to the location of the variable in the data frame.
VARNAME: the variable name associated with VARNUM.
Step 11. Run the Python code EXTRACT_RVARIABLE_COMBOS_v2.py.
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\EXTRACT_RVARIABLE_COMBOS_v2.py")
This module identifies the unique variable sets and organizes them into a new file, VARSELV.csv. The purpose of this file is to allow all of the unique variable selection models to be processed using discriminant analysis for comparison purposes. Another file, UNIQUEVAR.csv, provides a list of all of the variables referred to in VARSELV.csv.
Note that this is the first example of the use of a system command to call a program written in another language. It is critical that the full path of the interpreter be given at the start of the statement, followed by the full path of the Python (or other) routine. Note the use of double backslashes and of double quotation marks, not single quotation marks.
These rules can be violated and the command may still work on any given machine, but it has been found that they are needed to ensure that the program works properly on most (if not all) machines.
Step 12. Run the Python code RANKVAR.py.
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\RANKVAR.py")
VARSELECT.csv (output from Step 10) is used as input to this module. This module ranks variables based on their position, i.e. where they first appear in the process, starting from the user-defined minNvar (Top Layer, e) and moving toward variable selections with an increasing number of variables, up to maxNVar. The results are written to VARRANK.csv. Note that if the file already exists in the working directory (/Rwd/), it will be overwritten. VARRANK.csv contains the following fields:
VARNAME: the name of each unique variable identified in all of the model runs. Note that this is somewhat redundant with the listing of variables in UNIQUEVAR.csv; this routine was developed at a later stage and performs a different function.
RANK: the rank, based on the number of variables in the equation at the variable's first appearance among all of the models. The lowest number of variables is recognized as the highest rank, e.g. 1 is ranked higher than 2.
p: the number of models that contain a given variable divided by the total number of variables.
IMPORTANCE: equal to SQRT(1/RANK × SQRT(p)), ranging between 0 and 1, with a high number indicating high importance.
The highest ranked variables also tend to occur more frequently and are therefore of greatest importance, but as the number of variables increases this relationship starts to break down. This breakdown is related to the notion of overfitting, reinforcing the idea that a smaller number of X-variables may provide the most consistent and reliable indicators of the class to which a given observation should belong.
Step 13. Reload the LVI dataset.
source("E:\\Rwd\\RScript\\ZLoadDatasetAndAttachVariableNames.r")
This is a repeat of Step 1 in the Middle Layer. It may not be necessary, but it is designed to ensure that all of the data are available to (re)select the unique variables listed in UNIQUEVAR.csv (see steps below).
Step 14. Exclude rows with excludeRowValue associated with excludeRowVarName.
source("E:\\Rwd\\RScript\\ZExcludeRowsWithCertainVariableValues.r")
Repeat of Step 2 above.
Step 15. Select the variable set identified in UNIQUEVAR.csv.
source("E:\\Rwd\\RScript\\ZSelectUniqueXVariableSubset.r")
This is a new step, using UNIQUEVAR.csv instead of XVARSELV1.csv to do the variable selection.
Step 16. Generate Pearson correlations among each and every pair of variables.
source("E:\\Rwd\\RScript\\CompileUniqueXVariableCorrelationMatrixSubset.r")
Step 17. Create a correlation matrix for printing.
source("E:\\Rwd\\RScript\\CreateUniqueVarCorrelationMatrixFileForPrinting.r")
Step 18. Write the correlation matrix (to the current working directory).
source("E:\\Rwd\\RScript\\ZWriteUniqueVarCorrelationMatrix.r")
This routine creates a new file, UCORCOEF.csv, with the following fields:
VARNAME1: the first variable name.
VARNAME2: the second variable name. Note that the first variable name can be the same as the second. When eliminating variables with high correlations (≥ 0.8 or ≤ -0.8; implemented in the steps below), the rows where VARNAME1 and VARNAME2 are the same are ignored.
CORCOEF: the Pearson correlation coefficient.
When the program has finished iterating to a final solution, there should be no correlation coefficients ≥ 0.8 except those where VARNAME1 = VARNAME2, and there should be no correlation coefficients ≤ -0.8. As a result, one way to verify whether the process has run to completion is to check this file to ensure that this condition has been met.
If the condition has not been met, then some problem was encountered that stopped the process before completion.

Step 19. Update XVARSELV1.csv to remove the less important variable from each variable pair that exceeds the correlation coefficient limits
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\REMOVE_HIGHCORVAR_FROM_XVARSELV.py")
This routine uses UCORCOEF.csv, VARRANK.csv, and XVARSELV1.csv as inputs. XVARSELV1.csv is modified and then replaced by a new file with the same name. The process checks for correlation coefficients ≥ 0.8 (excluding cases where VARNAME1 and VARNAME2 are the same) or ≤ -0.8. Where one of these conditions holds, it compares the IMPORTANCE of the two variables and changes the assignment of the less important variable from X to N, effectively deselecting it.

Step 20. Count the number of X-variables remaining in XVARSELV1.csv
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\COUNT_XVAR_IN_XVARSELV1.py")
This routine counts the number of variables with an X assigned and writes that number to XVARSEV1_COUNT.csv. In the step that follows, this number is picked up by the routines to determine whether there has been any change from the previous run as a result of having found (once again, more) X-variables that were highly correlated at the end of the current run. If there is no change between the previous and current runs, then the iterations are stopped in the TOP LAYER.

BOTTOM LAYER
The bottom layer is described in sufficient detail under the middle layer. If you want to know more, please refer to the individual pieces of underlying code that make up each of the steps in the middle and top layers.

Appendix B.
Discriminant Analysis for Multiple Variable Sets
/Rwd/RScript/XRunLinearDiscriminantAnalysisForMultipleXVariableSets.R

TOP LAYER
User inputs
a) Identify the reference dataset:
lviFileName <- "E:\\Rwd\\interp_c2.csv"
b) Exclude certain rows from the dataset:
excludeRowValue <- -1
excludeRowVarName <- 'SORTGRP'
If there is a variable name that corresponds with excludeRowVarName ('SORTGRP'), and if there are one or more rows where that variable is equal to excludeRowValue (-1), then those rows will be excluded from the analysis.
c) Enter the name of the classification variable containing the class assigned to each observation:
classVariableName <- 'CDEADL5'
d) Enter 'UNIFORM' or 'SAMPLE' to indicate the type of prior distribution:
priorDistribution <- 'SAMPLE'
Note that the 'SAMPLE' distribution is usually indicated when the data represent a random sample from the population. A 'UNIFORM' distribution is used to indicate that the distribution of responses within the population is unknown. The prior distribution is used to calculate a weighted covariance matrix. Another way to think of this is that the prior distribution determines the relative weight assigned to each class in the process of developing the discriminant functions.
e) Indicate the file name used to select the various sets of X-variables:
xVarSelectFileName <- "E:\\Rwd\\XVARSELV.csv"
This file was an output from 2.5.3.
f) Set the working directory:
wd <- "E:\\Rwd"

Begin Processing
Step 0. Set working directory
setwd("E:\\Rwd")

Step 1. Load dataset called lvinew
source("E:\\Rwd\\RScript\\ZLoadDatasetAndAttachVariableNames.r")

Step 2. Exclude rows with excludeRowValue associated with excludeRowVarName
source("E:\\Rwd\\RScript\\ZExcludeRowsWithCertainVariableValues.r")

Step 3. Declare classification variable as factor (i.e. identify the variable as the classification variable)
source("E:\\Rwd\\RScript\\ZDeclareClassificationVariableAsFactor.r")

Step 4.
Get prior distribution to be used in Discriminant Analysis
source("E:\\Rwd\\RScript\\ZComputePriorClassProbabilityDistribution.r")

Step 5. Write prior distribution to file
source("E:\\Rwd\\RScript\\WritePriorDistributionToFile.r")

Step 6. Load MASS package
source("E:\\Rwd\\RScript\\LoadMASS-R-Package.r")

Step 7. Get the multiple variable subsets from XVARSELV.csv
source("E:\\Rwd\\RScript\\ZSelectXVariableSubset_v2.1.r")

Step 8. Run Multiple Discriminant Analysis: Take-One-Leave-One
source("E:\\Rwd\\RScript\\RunMultipleLinearDiscriminantAnalysis_MASS_lda_TakeOneLeaveOne.r")

Step 9. Write two new files to the working directory: CTABULATION.csv and POSTERIOR.csv
source("E:\\Rwd\\RScript\\WriteMultipleLinearDiscriminantAnalysis_MASS_lda_TOLO_to_File.r")
CTABULATION.csv is a cross tabulation of the reference data class versus the predicted class produced by a take-one-leave-one analysis. It contains the following fields:
VARSET: Refers to VARSET in XVARSELV.csv.
REFCLASS: The class as originally identified in the reference data.
PREDCLASS: The predicted class.
CTAB: The number of observations associated with the REFCLASS-PREDCLASS combination.
POSTERIOR.csv reports the UERROR associated with the take-one-leave-one routine. It contains the following fields:
VARSET: Refers to VARSET in XVARSELV.csv.
NVAR: The number of X-variables associated with the variable set.
UERROR: The posterior error of estimation, calculated following the procedures of Hora and Wilcox (1982¹²; Equation 9):
$$\hat{e} = 1 - N^{-1} \sum_{i=1}^{N} \max_{Y} \left[ P(Y \mid X_i) \right] \qquad \text{(Eq. 1)}$$
where
$\hat{e}$ is the estimated (posterior) error;
$N$ is the total number of observations;
$P(Y \mid X_i)$ is the probability of class $Y$, where $Y$ equals 1 to $m$ classes, given a set of variables $X_i$, where $i$ equals 1 to $N$ observations.
Hora and Wilcox (1982; pp. 57-58) explain the calculation of this error as follows: “… A superior alternative and one that is most frequently mentioned in the marketing literature (Crask and Perreault 1977; Dillon 1979) is the U-method or hold-one out method.
The ERE (error rate estimator) with the U-method is calculated by reserving one observation from the training sample, calculating the classification rule without the reserved observation, and then classifying the reserved observation and noting whether the classification is correct. This process is repeated for each available observation. A very small amount of bias is introduced because the classification rule is calculated with n-1 rather than n observations. For large samples the ERE is nearly unbiased.”
“The posterior probability ERE for the jth population is simply 1 minus the average posterior probabilities of all observations assigned to the jth population by the discriminant function.”
Note that:
$$\hat{e}_j = 1 - (N p_j)^{-1} \sum_{i=1}^{N} Y_{ij} \qquad \text{(Eq. 2)}$$
where
$p_j$ is the a priori probability of belonging to population $j$;
$Y_{ij}$ equals the probability that observation $i$ belongs to class $j$ given $X_i$ when observation $i$ is assigned to class $j$ by the discriminant function, and 0 when it is assigned to another class (as described in the quotation above).
And
$$\hat{e} = \sum_{j=1}^{m} p_j \hat{e}_j \qquad \text{(Eq. 3)}$$
Hora and Wilcox (1982) point out that although Equation 1 does not explicitly include the a priori probabilities ($p_j$), they are implied by Equations 2 and 3, which were used to derive Equation 1.

¹² Hora, S.C. and Wilcox, W.B. 1982. Estimation of error rates in several-population discriminant analysis. Journal of Marketing Research 19(1): 57-61.

It is assumed that Equation 1 is implemented using the normal distribution and the variances associated with each class, based on the class assignments derived from the take-one-leave-one process. In general, as the number of variables increases the UERROR decreases; this is the opposite of Cohen's (1960) Coefficient of Agreement (also referred to as the KHAT statistic, described in more detail below), which generally increases.

Step 10. Run Multiple Discriminant Analysis: All Data
source("E:\\Rwd\\RScript\\RunMultipleLinearDiscriminantAnalysis_MASS_lda.r")

Step 11.
Write files: CTABALL.csv, VARMEANS.csv, DFUNCT.csv and BWRATIO.csv
source("E:\\Rwd\\RScript\\WriteMultipleLinearDiscriminantAnalysis_MASS_lda.r")
CTABALL contains the contingency table data from which Cohen's (1960) Coefficient of Agreement can be calculated:
VARSET: Refers to the variable sets in XVARSELV.
REFCLASS: Refers to the reference or actual class.
PREDCLASS: Contains the predicted class.
CTAB: Provides a cross tabulation indicating the number of observations in the associated combination of REFCLASS and PREDCLASS.
VARMEANS contains the mean values for each variable by class:
VARSET2: Refers to VARSET in XVARSELV.
CLASS2: The class as originally assigned (not predicted).
VARNAMES2: Refers to the variable names in the original dataset, as selected for inclusion in the X-variable set.
MEANS2: Refers to the mean value associated with each variable (VARNAMES2) and class (CLASS2) combination. Note that the same variable may occur in several variable sets, producing redundancies.
DFUNCT contains the discriminant functions for each axis and combination of variables:
VARSET3: Refers to VARSET in XVARSELV.
VARNAMES3: Refers to the variable names in the original dataset, as selected for inclusion in the X-variable set.
FUNCLABEL3: Refers to the discriminant functions in order of priority, ranked from highest to lowest eigenvalue, as follows: LN1, LN2, … up to the lesser of n-1 (where n is the number of classes) and the number of variables selected for inclusion in an equation.
BWRATIO contains the between-to-within variance ratios of the differences in class Z-statistics associated with each discriminant function:
VARSET4: Refers to VARSET in XVARSELV.
FUNCLABEL4: Identical to FUNCLABEL3 in DFUNCT.
BTWTWCR4: The ratios of between- to within-group variances explained by each discriminant function (FUNCLABEL4). These can be converted to proportions by dividing each ratio by the sum of all ratios for each variable set¹³.
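The conversion of BTWTWCR4 ratios to proportions within each variable set can be sketched as follows. This is a hypothetical helper, not part of the LVI scripts; it assumes BWRATIO.csv has a header row with the columns VARSET4, FUNCLABEL4 and BTWTWCR4, and it keeps VARSET4 values as strings, exactly as read from the file.

```python
import csv
import io
from collections import defaultdict


def bwratio_proportions(bwratio_text):
    """Return {VARSET4: {FUNCLABEL4: proportion}} from BWRATIO.csv-style text.

    Each BTWTWCR4 ratio is divided by the sum of all ratios in its variable set,
    so the proportions within one variable set sum to 1. A variable set whose
    ratios sum to zero would raise ZeroDivisionError.
    """
    ratios = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(bwratio_text)):
        ratios[row["VARSET4"]][row["FUNCLABEL4"]] = float(row["BTWTWCR4"])

    proportions = {}
    for varset, by_function in ratios.items():
        total = sum(by_function.values())
        proportions[varset] = {label: r / total for label, r in by_function.items()}
    return proportions
```

Grouping by VARSET4 before dividing matters because BWRATIO.csv stacks the functions of every variable set in one file; normalizing over the whole file would mix variable sets.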
These ratios are similar to, but not the same as, eigenvectors¹⁴.

Step 12. Generate Cohen's (1960) Coefficient of Agreement (KHAT statistic¹⁵,¹⁶)
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\COHENS_KHAT_R.py")
$$\mathrm{KHAT} = \frac{N_{..} \sum_{i=1}^{n} N_{ij=i} - \sum_{i=1}^{n} R_i C_{j=i}}{N_{..}^{2} - \sum_{i=1}^{n} R_i C_{j=i}} \qquad \text{(Eq. 4)}$$
where
$N_{..}$ is the total number of observations across all categories;
$N_{ij}$ is the total number of observations assigned to (user) class $i$ and (producer) class $j$;
$N_{ij=i}$ is the number of observations assigned to the same class ($j = i$) by both the user and the producer, i.e. the diagonal of the table;
$R_i$ is the total number of observations assigned to (user) class $i$ (in rows), where $i$ equals 1 to $n$;
$C_j$ is the total number of observations assigned to (producer) class $j$ (in columns), where $j$ equals 1 to $m$.
Note that the user in this case refers to the original class assigned to a given observation, and the producer refers to the class estimated by discriminant analysis using a take-one-leave-one type of process.
CTABSUM.csv contains the following fields:
VARSET: Refers to VARSET in XVARSELV.
OA: Overall accuracy (the proportion of observations where users and producers agree on classification).
KHAT: Overall accuracy minus the proportion of observations that could have been agreed to by chance, divided by one minus that chance proportion (Eq. 4).
MINPA: Minimum producer accuracy.
MAXPA: Maximum producer accuracy.
MINUA: Minimum user accuracy.
MAXUA: Maximum user accuracy.

¹³ http://www.r-bloggers.com/computing-and-visualizing-lda-in-r/ [accessed April 23, 2014]
¹⁴ http://en.wikipedia.org/wiki/Singular_value_decomposition [accessed April 23, 2014]
¹⁵ Cohen, J. 1960. A coefficient of agreement for nominal scales. Educational and Psychological Measurement 20(1): 37-46.
¹⁶ Paine, D.P. and Kiser, J.D. 2003. Aerial photography and image interpretation. Second edition. John Wiley and Sons, Hoboken, NJ, US. pp. 465-480.

Step 13.
Combine evaluation datasets into one file: ASSESS.csv
system("E:\\Anaconda\\python.exe E:\\Rwd\\Python\\COMBINE_EVALUATION_DATASETS_R.py")
ASSESS.csv contains information assembled from CTABSUM.csv, POSTERIOR.csv, and XVARSELV.csv.
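The two measures that ASSESS.csv brings together, UERROR (Eq. 1, from POSTERIOR.csv) and OA/KHAT (Eq. 4, from CTABSUM.csv), can be sketched with NumPy. This is an illustrative re-implementation of the equations only, not the code in COHENS_KHAT_R.py or the R scripts; the function names are hypothetical. It assumes the posterior matrix has one row per observation and one column per class, with each row coming from a rule fitted without that observation (the U-method), and that the contingency table is square with user (reference) classes in rows and producer (predicted) classes in columns, in the same class order.

```python
import numpy as np


def posterior_error(posterior):
    """UERROR per Eq. 1: 1 minus the mean, over observations, of the largest class posterior.

    posterior: array-like of shape (N, m); row i holds P(Y | X_i) for the m classes.
    """
    posterior = np.asarray(posterior, dtype=float)
    return float(1.0 - posterior.max(axis=1).mean())


def overall_accuracy_and_khat(table):
    """Return (OA, KHAT) per Eq. 4 from a square contingency table of counts.

    Rows are user classes i and columns producer classes j, in the same order,
    so the diagonal holds N_{ij=i}.
    """
    table = np.asarray(table, dtype=float)
    if table.ndim != 2 or table.shape[0] != table.shape[1]:
        raise ValueError("contingency table must be square (same classes on both axes)")
    n_total = table.sum()                    # N..
    agree = np.trace(table)                  # sum over i of N_{ij=i}
    row_totals = table.sum(axis=1)           # R_i
    col_totals = table.sum(axis=0)           # C_j
    chance = np.dot(row_totals, col_totals)  # sum over i of R_i * C_{j=i}
    oa = agree / n_total
    khat = (n_total * agree - chance) / (n_total ** 2 - chance)
    return float(oa), float(khat)
```

Dividing the numerator and denominator of Eq. 4 by $N_{..}^2$ gives (OA - chance agreement) / (1 - chance agreement), which is why KHAT is reported alongside OA rather than replacing it.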
