
Parallel Computing

Fundamentals

Course TBD

Lecture TBD

Term TBD

Module developed Fall 2014

by Apan Qasem

This module was created with support from NSF under grant # DUE 1141022

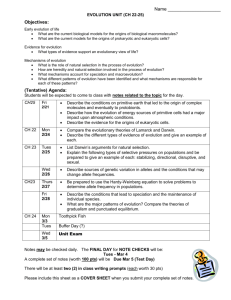

Why Study Parallel Computing?

[Figure: theoretical maximum processor performance (millions of operations per second, log scale) by year, 1986 to 2010. Early gains come from increases in clock speed (16 MHz up to 3.3 GHz) and improvements in chip architecture (instruction pipeline, internal memory cache, full-speed level 2 cache, multiple instructions per cycle, MMX multimedia extensions, speculative out-of-order execution, hyper-threading); later gains come from dual-core, quad-core and hex-core designs, which place more responsibility on software.]

Source: Scientific American 2005, “A Split at the Core”

Extended by Apan Qasem

TXST TUES Module : #

Why Study Parallel Computing?

Parallelism is mainstream

"In future, all software will be parallel"

"We are dedicating all of our future product development to multicore designs. … This is a sea change in computing"

"Requires fundamental change in almost all layers of abstraction"

Paul Otellini, President, Intel (2004)

Andrew Chien, CTO, Intel (~2007)

Dirk Meyer, CEO, AMD (2006)

Why Study Parallel Computing?

Parallelism is Ubiquitous


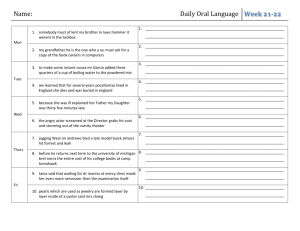

Basic Computer Architecture

image source: Gaddis, Starting Out with C++

Program Execution

```c
int main() {
    int x, y, result;
    x = 17;
    y = 13;
    result = x + y;
    return result;
}
```

```asm
        .text
.globl _main
_main:
LFB2:
        pushq   %rbp
LCFI0:
        movq    %rsp, %rbp
LCFI1:
        movl    $17, -4(%rbp)
        movl    $13, -8(%rbp)
        movl    -8(%rbp), %eax
        addl    -4(%rbp), %eax
        movl    %eax, -12(%rbp)
        movl    -12(%rbp), %eax
        leave
        ret
```

…11001010100…

execute

Program Execution

```c
int main() {
    int x, y, result;
    x = 17;
    y = 13;
    result = x + y;
    return result;
}
```

compile

10101010100   x = 17;

00001010100   y = 13;

11001110101   result = x + y;

11001010100   return result;

Processor executes one instruction at a time*

Instruction execution follows program order

Program Execution

```c
int main() {
    int x, y, result;
    x = 17;
    y = 13;
    result = x + y;
    return result;
}
```

compile

x = 17;    y = 13;

result = x + y;

return result;

The two assignment statements x = 17; and y = 13; do not depend on each other, so they can execute in parallel

Parallel Program Execution

```c
int main() {
    int x, y, result;
    x = 17;
    y = 13;
    result = x + y;
    return result;
}
```

x = 17;    y = 13;    result = x + y;    return result;

Cannot arbitrarily assign instructions to processors: result = x + y; cannot run until both x = 17; and y = 13; have completed
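The slides above can be sketched with POSIX threads (pthreads, one of the APIs listed later in this module). This is a minimal illustration under stated assumptions, not production code: the names assign_x, assign_y and compute_result are hypothetical, and starting a thread for a single assignment costs far more than the assignment itself. The two independent assignments run in separate threads, and pthread_join makes the dependent statement result = x + y wait until both have finished.

```c
#include <pthread.h>

/* Shared variables written by the two threads (hypothetical names). */
static int x, y;

/* Thread body for the statement x = 17; */
static void *assign_x(void *arg) {
    (void)arg;
    x = 17;
    return NULL;
}

/* Thread body for the statement y = 13; */
static void *assign_y(void *arg) {
    (void)arg;
    y = 13;
    return NULL;
}

/* Runs x = 17; and y = 13; in parallel, then the dependent statement.
   Returns -1 if a thread could not be created. */
int compute_result(void) {
    pthread_t tx, ty;
    if (pthread_create(&tx, NULL, assign_x, NULL) != 0)
        return -1;
    if (pthread_create(&ty, NULL, assign_y, NULL) != 0) {
        pthread_join(tx, NULL);
        return -1;
    }
    /* result = x + y depends on both assignments: wait for both threads.
       pthread_join also makes their writes visible to this thread. */
    pthread_join(tx, NULL);
    pthread_join(ty, NULL);
    int result = x + y;
    return result;
}
```

Calling compute_result() returns 30 no matter which thread finishes first; what must not happen is computing x + y before both joins, which is exactly the arbitrary assignment of instructions the slide warns against.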

Dependencies in Parallel Code

• If statement $s_i$ needs the value produced by statement $s_j$, then $s_i$ is said to be dependent on $s_j$

$s_j$: y = 17;

…

$s_i$: x = y + foo();

• All dependencies in the program must be preserved

• This means that if $s_i$ is dependent on $s_j$, then we need to ensure that $s_j$ completes execution before $s_i$ starts

Responsibility lies with the programmer (and software)
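The definition above can be modelled in a few lines of C. This is a hypothetical sketch: the Stmt type and the depends_on function are illustrative, each statement is reduced to the single variable it writes and the variables it reads, and only the case described on the slide is checked ($s_i$ reads a value that $s_j$ writes). Real dependence analysis must also handle other cases, such as two statements writing the same variable, as well as arrays and pointers.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_READS 4

/* Hypothetical model of a statement: one written variable and up to
   MAX_READS read variables, all identified by name. */
typedef struct {
    const char *writes;
    const char *reads[MAX_READS];
    int n_reads;
} Stmt;

/* Returns true if s_i needs the value produced by s_j,
   i.e. s_i reads the variable that s_j writes. */
bool depends_on(const Stmt *s_i, const Stmt *s_j) {
    for (int k = 0; k < s_i->n_reads; k++) {
        if (strcmp(s_i->reads[k], s_j->writes) == 0)
            return true;
    }
    return false;
}
```

For the slide's example, describing y = 17; as {"y", {0}, 0} and x = y + foo(); as {"x", {"y"}, 1} (assuming foo() neither reads nor writes y) makes depends_on(&si, &sj) return true, while the assignments x = 17; and y = 13; from the earlier slides come out independent.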

Running Quicksort in Parallel

```c
void quickSort(int values[], int left, int right) {
    if (left < right) {
        int pivot = (left + right) / 2;
        int pivotNew = partition(values, left, right, pivot);
        /* The two recursive calls sort disjoint parts of the array,
           so neither depends on the other */
        quickSort(values, left, pivotNew - 1);
        quickSort(values, pivotNew + 1, right);
    }
}
```

...partition()...

quickSort(values, left, pivotNew - 1);

quickSort(values, pivotNew + 1, right);

To benefit from parallelism, we want to run “big chunks” of code in parallel: here, each recursive call can run on its own processor

We also need to balance the load: the two partitions can differ greatly in size
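One way to run the two recursive calls in parallel is sketched below with pthreads. Assumptions: partition is a hypothetical implementation (the slide elides it) using the Lomuto scheme, which moves the chosen pivot to the end and returns its final index; the names quickSortPar, sortTask, parallelQuickSort and MAX_DEPTH are illustrative. A new thread is created only for the first few levels of recursion, so each thread gets a “big chunk” of work; below that depth the sort runs sequentially.

```c
#include <pthread.h>

/* Levels of recursion that spawn threads: at most 2^3 = 8 threads,
   counting the caller. */
#define MAX_DEPTH 3

static void swap(int *a, int *b) {
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Hypothetical Lomuto partition: places values[pivot] at its final
   sorted position within [left, right] and returns that index. */
static int partition(int values[], int left, int right, int pivot) {
    int pivotValue = values[pivot];
    swap(&values[pivot], &values[right]);   /* move pivot out of the way */
    int store = left;
    for (int i = left; i < right; i++) {
        if (values[i] < pivotValue) {
            swap(&values[i], &values[store]);
            store++;
        }
    }
    swap(&values[store], &values[right]);   /* pivot into final place */
    return store;
}

/* Arguments for sorting one subarray in a separate thread. */
typedef struct {
    int *values;
    int left, right, depth;
} SortTask;

static void quickSortPar(int values[], int left, int right, int depth);

static void *sortTask(void *arg) {
    SortTask *t = arg;
    quickSortPar(t->values, t->left, t->right, t->depth);
    return NULL;
}

static void quickSortPar(int values[], int left, int right, int depth) {
    if (left >= right)
        return;
    int pivot = left + (right - left) / 2;
    int pivotNew = partition(values, left, right, pivot);

    if (depth > 0) {
        /* Left part in a new thread, right part here; the parts are
           disjoint, so the two threads never touch the same element. */
        SortTask task = { values, left, pivotNew - 1, depth - 1 };
        pthread_t tid;
        if (pthread_create(&tid, NULL, sortTask, &task) == 0) {
            quickSortPar(values, pivotNew + 1, right, depth - 1);
            pthread_join(tid, NULL);   /* task lives on this stack frame */
            return;
        }
        /* Thread creation failed: fall back to sorting sequentially. */
    }
    quickSortPar(values, left, pivotNew - 1, depth);
    quickSortPar(values, pivotNew + 1, right, depth);
}

/* Sorts values[0..n-1] in ascending order. */
void parallelQuickSort(int values[], int n) {
    quickSortPar(values, 0, n - 1, MAX_DEPTH);
}
```

Because the pivot is simply the middle element, the two parts are not guaranteed to be the same size, so some threads can finish long before others; this is the load-balancing problem mentioned on the slide.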

Parallel Programming Tools

• Most languages have extensions that support parallel programming

• A collection of APIs

• pthreads, OpenMP, MPI

• Java Threads

• Some APIs will perform some of the dependence checks for you

• Some languages are specifically designed for parallel programming

• Cilk, Charm++, Chapel
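As a small taste of one of the listed APIs, the sketch below uses OpenMP, assuming a C compiler with OpenMP support (for GCC, the -fopenmp flag). The function name sumArray is hypothetical. The loop carries a dependence through sum, so its iterations cannot simply be handed to different threads; the reduction clause tells OpenMP to give each thread a private partial sum and combine the partial sums at the end.

```c
/* Sums values[0..n-1]. With OpenMP enabled, loop iterations are divided
   among threads; without it, the pragma is ignored and the loop runs
   sequentially with the same result. */
long sumArray(const int values[], long n) {
    long sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < n; i++)
        sum += values[i];
    return sum;
}
```

Leaving out reduction(+:sum) would let several threads update sum at the same time, a data race; it is up to the programmer to add the clause.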

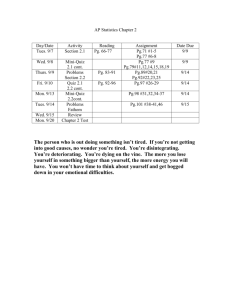

Parallel Performance

• In the ideal case, the more processors we add to the system, the higher the performance speedup

• If a sequential program runs in T seconds on one processor, then the parallel program should run in T/N seconds on N processors

• In reality this almost never happens: almost all parallel programs have some parts that must run sequentially

The amount of speedup obtained is limited by the amount of parallelism available in the program

• aka Amdahl’s Law

Gene Amdahl

image source: Wikipedia
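Writing p for the fraction of the sequential running time T that can be parallelized (p and T_N are notation introduced here for illustration), Amdahl's Law follows directly: the sequential part still takes (1 - p)T, and only the parallel part shrinks by a factor of N. With p = 1 this reduces to the ideal T/N case.

$$
T_N = (1 - p)\,T + \frac{p\,T}{N},
\qquad
S(N) = \frac{T}{T_N} = \frac{1}{(1 - p) + p/N},
\qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - p}
$$

For example, if 90% of the running time can be parallelized (p = 0.9), then 10 processors give a speedup of 1/(0.1 + 0.09) ≈ 5.3, and no number of processors can push the speedup past 10.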

Reality is often different!

[Figure: maximum speedup in relation to the number of processors, compared with the maximum theoretical speedup]
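To see how quickly the speedup flattens, here is a small C sketch that evaluates Amdahl's Law, S(N) = 1 / ((1 - p) + p/N), where p is the assumed fraction of the sequential running time that can be parallelized. The function names amdahlSpeedup and printSpeedupTable are hypothetical.

```c
#include <stdio.h>

/* Amdahl's Law: maximum speedup on n processors when a fraction p
   (0 <= p <= 1) of the sequential running time can be parallelized. */
double amdahlSpeedup(double p, int n) {
    return 1.0 / ((1.0 - p) + p / n);
}

/* Prints the speedup for 1, 2, 4, ..., up to maxProcs processors,
   followed by the limit 1 / (1 - p) when p < 1. */
void printSpeedupTable(double p, int maxProcs) {
    printf("processors  speedup (p = %.2f)\n", p);
    for (int n = 1; n <= maxProcs; n *= 2)
        printf("%10d  %7.2f\n", n, amdahlSpeedup(p, n));
    if (p < 1.0)
        printf("limit as processors grow: %.2f\n", 1.0 / (1.0 - p));
}
```

For instance, printSpeedupTable(0.9, 1024) shows the speedup climbing quickly at first (about 1.82 on 2 processors, 4.71 on 8) and then levelling off just below 10, the 1/(1 - p) limit, which is why adding processors eventually stops paying off.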