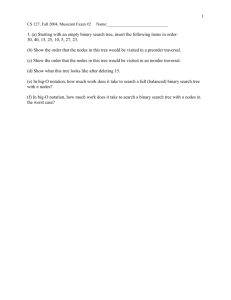

23FL20heap[1] - Department of Computer Science

advertisement

![23FL20heap[1] - Department of Computer Science](http://s3.studylib.net/store/data/009684679_1-26e16be240ff6e6bd1bb6593392860bb-768x994.png)

Heaps and Heapsort

Prof. Sin-Min Lee

Department of Computer Science

San Jose State University

Heaps

• Heap Definition.
• Adding a Node.
• Removing a Node.
• Array Implementation.
• Analysis.
• Source Code.

What is a Heap?

A heap is like a binary search tree. It is a binary tree whose entries can be compared using the six comparison operators, which form a total order semantics. But the arrangement of the elements in a heap follows new rules that are different from those of a binary search tree.

Heaps

A heap is a certain kind of complete binary tree. It is defined by the following properties.

Heaps (Priority Queues)

A heap is a data structure that supports efficient implementation of three operations: insert, findMin, and deleteMin. (Such a heap is, to be more precise, a min-heap.)

Two obvious implementations are based on an array (assuming the size n is known) and on a linked list. Their relative time complexities compare as follows:

• Sorted array (sorted from large to small): insert O(n) (to move elements); findMin O(1) (find at end); deleteMin O(1) (delete the end).
• Linked list: insert O(1) (append); findMin O(1) (a pointer to Min); deleteMin O(n) (reset Min pointer).

Heaps

1. All leaf nodes occur at adjacent levels.

When a complete binary tree is built, its first node must be the root.

Heaps

2. All levels of the tree, except for the bottom level, are completely filled. All nodes on the bottom level occur as far left as possible.

The second node is always the left child of the root.

Heaps

3. The key of the root node is greater than or equal to the key of each child. Each subtree is also a heap.

The third node is always the right child of the root.

Heaps

The next nodes always fill the next level from left to right.


Heaps

Complete binary tree.

Heap Storage Rules

• A heap is a binary tree whose node entries can be compared using a total order semantics. In addition, these two rules are followed:
  • The entry contained by a node is greater than or equal to the entries of the node's children.
  • The tree is a complete binary tree, so that every level except the deepest must contain as many nodes as possible; and at the deepest level, all the nodes are as far left as possible.
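The two storage rules can be checked mechanically. This is a minimal Python sketch, assuming the tree is stored level by level in a list with the root at index 0 (the array layout used later for heapsort, where the children of index i are at 2i + 1 and 2i + 2). Storing the tree that way makes it complete automatically, so only the ordering rule needs to be tested; the name is_max_heap is our own.

```python
def is_max_heap(data):
    """Check the heap ordering rule on a list laid out level by level (index 0 = root)."""
    n = len(data)
    for i in range(n):
        for child in (2 * i + 1, 2 * i + 2):   # 0-based children of node i
            if child < n and data[i] < data[child]:
                return False                     # a child is larger than its parent
    return True

print(is_max_heap([45, 35, 23, 27, 21, 22, 4, 19]))  # True
print(is_max_heap([45, 27, 23, 35, 21]))              # False: 27 sits above 35
```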

Heaps

A heap is a certain kind of complete binary tree.

[Figure: an example heap with the keys 45, 35, 27, 19, 23, 21, 22, 4; the root is 45]

The "heap property" requires that each node's key is >= the keys of its children.

Heaps

[Figure: a tree with the keys 45, 35, 27, 19, 23]

This tree is not a heap. It breaks rule number 1.

Heaps

[Figure: a tree with the keys 45, 35, 23, 21]

This tree is not a heap. It breaks rule number 2.

Heaps

[Figure: a tree with the keys 45, 27, 35, 23, 21]

This tree is not a heap. It breaks rule number 3.

Heap implementation of priority queue

• Each node of the heap contains one entry along with the entry's priority, and the tree is maintained so that it follows the heap storage rules, using the entries' priorities to compare nodes. Therefore:
  • The entry contained by a node has a priority that is greater than or equal to the priority of the entries of the node's children.
  • The tree is a complete binary tree.
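For comparison, Python's standard heapq module keeps a list in heap order, but as a min-heap. A common way to get the "highest priority on top" behaviour described above is to store (-priority, entry) pairs. This is a sketch of that technique, not the course's own implementation; the task names and priorities are made up for illustration.

```python
import heapq

# heapq maintains a *min*-heap, so we store (-priority, entry) pairs:
# the entry with the highest priority then sits at index 0.
pq = []
for entry, priority in [("write report", 23), ("fix bug", 45), ("lunch", 4)]:
    heapq.heappush(pq, (-priority, entry))

order = []
while pq:
    neg_priority, entry = heapq.heappop(pq)   # removes the highest-priority entry
    order.append((entry, -neg_priority))
print(order)  # [('fix bug', 45), ('write report', 23), ('lunch', 4)]
```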

Adding a Node to a Heap

Put the new node in the next available spot.

[Figure: a heap with root 45; the new node 42 has been placed in the next available spot]

Adding a Node to a Heap

Push the new node upward, swapping with its parent until the new node reaches an acceptable location.

[Figure: the heap after the new node 42 has been swapped with its parent]


Adding a Node to a Heap

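The new node stops rising when either:
• the parent has a key that is >= the new node's key, or
• the node reaches the root.

The process of pushing the new node upward is called reheapification upward.

[Figure: the heap after reheapification upward; 42 now sits just below the root 45]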

Removing the Top of a Heap

Move the last node onto the root.

[Figure: a heap with root 45, before the last node is moved]


Removing the Top of a Heap

Push the out-of-place node downward, swapping with its larger child until the node reaches an acceptable location.

[Figure: the heap during reheapification downward]


Removing the Top of a Heap

The out-of-place node stops sinking when either:
• all the children have keys <= the out-of-place node, or
• the node reaches a leaf.

The process of pushing the node downward is called reheapification downward.

[Figure: the heap after reheapification downward, with 42 at the root]
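The removal steps above can be sketched in Python as follows, assuming the heap is a list with the root at index 0 and the children of index i at 2i + 1 and 2i + 2 (the layout the slides use later for heapsort). The function name remove_top is our own, and the example heap is a small illustrative one.

```python
def remove_top(heap):
    """Remove and return the largest entry of a non-empty max-heap stored in a list."""
    top = heap[0]
    last = heap.pop()              # take the last node off the array
    if heap:                       # if anything is left, move the last node onto the root
        heap[0] = last
        i, n = 0, len(heap)
        while True:                # reheapification downward
            left, right = 2 * i + 1, 2 * i + 2
            larger = i
            if left < n and heap[left] > heap[larger]:
                larger = left
            if right < n and heap[right] > heap[larger]:
                larger = right
            if larger == i:        # both children are <= the node, or it is a leaf
                break
            heap[i], heap[larger] = heap[larger], heap[i]   # swap with the larger child
            i = larger
    return top

h = [45, 42, 23, 35, 21, 22, 4, 19, 27]
print(remove_top(h), h)  # 45 [42, 35, 23, 27, 21, 22, 4, 19]
```

Here 27 is moved onto the root, swapped with 42, then with 35, and stops above 19.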

Adding an Entry to a Heap

[Figure: a heap with the priorities 45, 35, 27, 19, 23, 21, 22, 4, 5]

As an example, suppose that we already have nine entries that are arranged in a heap with the above priorities.

Suppose that we are adding a new entry with priority 42. The first step is to add this entry in a way that keeps the binary tree complete. In this case the new entry will be the left child of the entry with priority 21.

[Figure: the new entry 42 added as the left child of 21]

The structure is no longer a heap, since the node with priority 21 has a child with a higher priority. The new entry (with priority 42) rises upward until it reaches an acceptable location. This is accomplished by swapping the new entry with its parent.

[Figure: the heap after 42 is swapped with its parent 21]

The new entry is still bigger than its parent, so a second swap is done.

[Figure: the heap after the second swap, with 42 as a child of the root 45]

Adding an entry to a priority queue

Pseudocode for Adding an entry

The priority queue has been implemented as a heap.

1. Place the new entry in the heap in the first available location. This keeps the structure as a complete binary tree, but it might no longer be a heap since the new entry might have a higher priority than its parent.
2. while (the new entry has a priority that is higher than its parent)
       swap the new entry with its parent.

Reheapification upward

Now the new entry stops rising, because its parent (with priority 45) has a higher priority than the new entry. In general, the "new entry rising" stops when the new entry has a parent whose priority is higher than or equal to its own, or when the new entry reaches the root. The rising process is called reheapification upward.
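The two pseudocode steps translate directly into a short Python sketch, assuming a list with the root at index 0 so that the parent of index i is (i - 1) // 2. The function name heap_insert is our own. The starting list is one level-by-level layout of the nine-entry example that agrees with the text: 42 lands as the left child of 21, swaps with 21, then with 35, and stops under 45.

```python
def heap_insert(heap, entry):
    """Add entry to a max-heap stored in a list (root at index 0)."""
    heap.append(entry)                # 1. first available location keeps the tree complete
    i = len(heap) - 1
    while i > 0:                      # 2. reheapification upward
        parent = (i - 1) // 2
        if heap[i] <= heap[parent]:   # parent's priority is >= the new entry: stop
            break
        heap[i], heap[parent] = heap[parent], heap[i]
        i = parent

h = [45, 35, 23, 27, 21, 22, 4, 19, 5]   # the nine-entry example, level by level
heap_insert(h, 42)
print(h)  # [45, 42, 23, 27, 35, 22, 4, 19, 5, 21]
```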

Implementing a Heap

• We will store the data from the nodes in a partially-filled array.

[Figure: a heap with the keys 42, 35, 27, 23, 21 next to an array of data]

Implementing a Heap

• Data from the root goes in the first location of the array.

[Figure: 42 placed in the first location of the array of data]

Implementing a Heap

• Data from the next row goes in the next two array locations.

[Figure: the array of data now holds 42, 35, 23]

Implementing a Heap

• Any node's two children reside at indexes 2i and 2i + 1 in the array, where i is the node's own index (the array locations are numbered from 1).

[Figure: the array of data now holds 42, 35, 23, 27, 21]

We don't care what's in the unused part of the array after the last entry.

Important Points about the Implementation

• The links between the tree's nodes are not actually stored as pointers, or in any other way.
• The only way we "know" that "the array is a tree" is from the way we manipulate the data.

[Figure: the heap 42, 35, 23, 27, 21 and its array of data]

Important Points about the Implementation

• If you know the index of a node, then it is easy to figure out the indexes of that node's parent and children.

[Figure: the array of data 42, 35, 23, 27, 21 stored in locations [1] through [5]]

Removing an entry from a Heap

• When an entry is removed from a priority queue, we must always remove the entry with the highest priority: the entry that stands "on top of the heap".
• For example, the entry at the root, with priority 45, will be removed.

[Figure: the highest-priority item, at the root (45), will be removed from the heap]

Removing an entry from a heap

• During the removal, we must ensure that the resulting structure remains a heap. If the root entry is the only entry in the heap, then there is really no more work to do except to decrement the member variable that is keeping track of the size of the heap. But if there are other entries in the tree, then the tree must be rearranged, because a heap is not allowed to run around without a root. The rearrangement begins by moving the last entry in the last level up to the root, like this:

Removing an entry from a Heap

[Figure: the heap before the move, with 45 at the root, and after the move, with 21 at the root]

The last entry in the last level has been moved to the root.

Heapsort

• Heapsort combines the time efficiency of mergesort and the storage efficiency of quicksort. Like mergesort, heapsort has a worst-case running time of O(n log n), and like quicksort it does not require an additional array. Heapsort works by first transforming the array to be sorted into a heap.

Heap representation

A heap is a complete binary tree, and an efficient method of representing a complete binary tree in an array follows these rules:

Data from the root of the complete binary tree goes in the [0] component of the array.

The data from depth one nodes is placed in the next two components of the array.

We continue in this way, placing the four nodes of depth two in the next four components of the array, and so forth. For example, a heap with ten nodes can be stored in a ten-element array as shown below:

[Figure: a ten-node heap and its ten-element array: 45 27 42 21 23 22 35 19 4 5]

Rules for location of data in the array

• The data from the root always appears in the [0] component of the array.
• Suppose that the data for a nonroot node appears in component [i] of the array. Then the data for its parent is always at location [(i - 1) / 2] (using integer division).
• Suppose that the data for a node appears in component [i] of the array. Then its children (if they exist) always have their data at these locations:
    Left child at component [2i + 1]
    Right child at component [2i + 2]
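These location rules are just integer arithmetic on the index. A small Python sketch (the helper names parent, left_child and right_child are our own), applied to the ten-node heap shown earlier:

```python
def parent(i):
    return (i - 1) // 2      # integer division; only meaningful for a nonroot node (i > 0)

def left_child(i):
    return 2 * i + 1

def right_child(i):
    return 2 * i + 2

data = [45, 27, 42, 21, 23, 22, 35, 19, 4, 5]   # the ten-node heap in array form
i = data.index(42)                               # 42 is at [2]
print(data[parent(i)], data[left_child(i)], data[right_child(i)])  # 45 22 35
```

No links are stored anywhere: the tree shape exists only through these three formulas.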

• Recall that the largest value in a heap is stored in the root, and that in our array representation of a complete binary tree, the root node is stored in the first array position. Thus, since the array represents a heap, we know that the largest array element is in the first array position. To get the largest value into its correct array position, we simply interchange the first and the final array elements. After interchanging these two array elements, we know that the largest array element is in the final array position, as shown below:

• The dark vertical line in the array separates the sorted side of the array (on the right) from the unsorted side (on the left). Moreover, the left side still represents a complete binary tree, which is almost a heap.

[Figure: the array after the interchange: 5 27 42 21 23 22 35 19 4 | 45]

When we consider the unsorted side as a complete binary tree, only the root violates the heap storage rule: this root is smaller than its children. The next step of the heapsort is to reposition this out-of-place value in order to restore the heap. The process, called reheapification downward, begins by comparing the value in the root with the value of each of its children. If one or both children are larger than the root, then the root's value is exchanged with the larger of the two children. This moves the troublesome value down one level in the tree. For example:

[Figure: after 5 is exchanged with its larger child 42, the array is 42 27 5 21 23 22 35 19 4 | 45]

• In the example, 5 is still out of place, so we will once again exchange it with its larger child, resulting in the array and heap shown below:

[Figure: the array 42 27 35 21 23 22 5 19 4 | 45 and the corresponding heap]

The unsorted side of the array must be maintained as a heap. When the unsorted side of the array is once again a heap, the heapsort continues by exchanging the largest element in the unsorted side with the rightmost element of the unsorted side. For example, 42 is exchanged with 4 as shown below:

[Figure: the array 4 27 35 21 23 22 5 19 | 42 45]

• The sorted side now has the two largest elements, and the unsorted side is once again almost a heap, as shown below:

[Figure: the unsorted side 4 27 35 21 23 22 5 19 drawn as a tree with root 4, followed by the sorted side 42 45]

Only the root (4) is out of place, and that may be fixed by another reheapification downward. After the reheapification downward, the largest value of the unsorted side will once again reside at location [0], and we can continue to pull out the next largest value.

Pseudocode for the heapsort algorithm

// Heapsort for the array called data with n elements
1. Convert the array of n elements into a heap.
2. unsorted = n; // The number of elements in the unsorted side
3. while (unsorted > 1)
   {
       // Reduce the unsorted side by one
       unsorted--;
       Swap data[0] with data[unsorted].
       // The unsorted side of the array is now a heap with the root out of place.
       Do a reheapification downward to turn the unsorted side back into a heap.
   }
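A runnable Python version of this pseudocode is sketched below. The pseudocode does not say how step 1 builds the heap; this sketch assumes it is done by running reheapification downward on every node that has children, from the last one back to the root (the bottom-up method discussed at the end of these notes). The helper name reheapify_down is our own.

```python
def reheapify_down(data, i, size):
    """Sift data[i] down within data[:size] until neither child is larger."""
    while True:
        left, right = 2 * i + 1, 2 * i + 2
        larger = i
        if left < size and data[left] > data[larger]:
            larger = left
        if right < size and data[right] > data[larger]:
            larger = right
        if larger == i:
            return
        data[i], data[larger] = data[larger], data[i]
        i = larger

def heapsort(data):
    n = len(data)
    # 1. Convert the array into a heap (assumed here: bottom-up reheapification).
    for i in range(n // 2 - 1, -1, -1):
        reheapify_down(data, i, n)
    # 2-3. Repeatedly move the largest element to the end of the unsorted side.
    unsorted = n
    while unsorted > 1:
        unsorted -= 1
        data[0], data[unsorted] = data[unsorted], data[0]
        reheapify_down(data, 0, unsorted)   # only the root is out of place

a = [45, 27, 42, 21, 23, 22, 35, 19, 4, 5]
heapsort(a)
print(a)  # [4, 5, 19, 21, 22, 23, 27, 35, 42, 45]
```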

Analysis

• While heapsort is typically a constant factor slower than quicksort on average data sets, it still has a complexity of O(n log n) for best, worst and average data.
• Reheapification is the process by which an out-of-place node is exchanged with its parent (upward) or with its children (downward) until the node's key is <= its parent's key and >= its children's keys. Then reheapification is complete.

A simpler but related problem: find the smallest and the second smallest elements in an array (of size n):

• A straightforward method: find the smallest using n - 1 comparisons; swap it to the end of the array, then find the next smallest in n - 2 comparisons, for a total of 2n - 3 comparisons.
• A method that "saves" the results of some earlier comparisons:
  (1) Divide the array into two halves and find the smallest element of each half; call them min1 and min2, respectively;
  (2) Compare min1 and min2 to find the smallest;
  (3) If min1 is the smallest, compare min2 with the remaining elements of the first half to determine the second smallest; similarly for the case where min2 is the smallest.
  Steps (1) and (2) take 2(n/2 - 1) + 1 = n - 1 comparisons and step (3) takes n/2 - 1, so the number of comparisons is (n - 1) + (n/2 - 1) = 3n/2 - 2.

The second method can be depicted in the following figure:

[Figure: min at the top, linked down to min1 and min2; min1 is linked down to the elements of the first half of the array (H1), and min2 to the elements of the second half (H2)]

In the figure, a link connects a smaller element to a larger one going downward; for example, min → min1 and min → min2, and min1 → each element in the first half of the array; similarly for min2. This organization facilitates finding the smallest and second smallest elements.
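The halving method can be checked by counting comparisons. This Python sketch assumes n >= 2 and routes every element comparison through a counter, so for even n it should report exactly 3n/2 - 2 comparisons. The names two_smallest and index_of_min are our own.

```python
def two_smallest(a):
    """Return (smallest, second smallest, number of comparisons) for len(a) >= 2."""
    count = 0

    def less(x, y):                   # every element comparison goes through here
        nonlocal count
        count += 1
        return x < y

    def index_of_min(lo, hi):         # index of the minimum of a[lo:hi]
        best = lo
        for i in range(lo + 1, hi):
            if less(a[i], a[best]):
                best = i
        return best

    n = len(a)
    mid = n // 2
    i1 = index_of_min(0, mid)         # (1) min1 of the first half
    i2 = index_of_min(mid, n)         #     min2 of the second half
    if less(a[i2], a[i1]):            # (2) compare min1 and min2
        win, lo, hi, other = i2, mid, n, i1
    else:
        win, lo, hi, other = i1, 0, mid, i2
    second = a[other]                 # (3) the loser only competes with the winner's half
    for i in range(lo, hi):
        if i != win and less(a[i], second):
            second = a[i]
    return a[win], second, count

print(two_smallest([5, 3, 8, 1, 9, 2, 7, 4]))  # (1, 2, 10)
```

With n = 8 the count is 10 = 3·8/2 - 2, compared with 2·8 - 3 = 13 for the straightforward method.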

A binary tree data structure for Min-heaps:

A binary tree is called a left-complete binary tree if
(1) the tree is full at each level except possibly at the maximum level (depth), where the tree being full at level i means there are $2^i$ nodes at that level (recall the root is at level 0); and
(2) at the tree's maximum level h, if there are fewer than $2^h$ nodes, then the missing nodes are on the right side of the tree.

If there are n nodes in a left-complete binary tree of depth h, then $2^h \le n \le 2^{h+1} - 1$. Thus, $h \le \lg n < h + 1$. In particular, $h = O(\lg n)$.

[Figure: a left-complete binary tree whose missing nodes are on the right side]
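The bound follows because levels 0 through h - 1 are full (2^h - 1 nodes) and level h holds between 1 and 2^h nodes, so the depth is exactly ⌊lg n⌋. A quick Python check of that claim (the function name left_complete_height is our own):

```python
import math

def left_complete_height(n):
    """Depth h of a left-complete binary tree with n >= 1 nodes, i.e. floor(lg n)."""
    return n.bit_length() - 1        # exact integer floor(log2(n)) for n >= 1

for n in (1, 2, 3, 7, 8, 1000):
    h = left_complete_height(n)
    assert 2 ** h <= n <= 2 ** (h + 1) - 1   # the bound from the text
    assert h <= math.log2(n) < h + 1
    print(n, h)
```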

A binary tree satisfies the (min-)heap-order property if the value at each node is less than or equal to the value at each of its child nodes (if they exist).

A binary min-heap is a left-complete binary tree that also satisfies the heap-order property.

[Figure: a binary min-heap containing the keys 13, 21, 16, 24, 65, 31, 26, 19, 32, 68, with 13 at the root]

Note that there is no definite order between values in sibling nodes; also, it is obvious that the minimum value is at the root.

Two basic operations on binary min-heaps:

(1) Suppose the value at a node is decreased, which causes a violation of the heap-order property (because the value is now smaller than its parent's). The operation to restore the heap property is named percolateUp (siftUp).
(2) Suppose the value at a node is increased so that it becomes larger than the value of either (or both) of its children. The operation to restore the heap order is named percolateDown (siftDown).

Both operations can be completed in time proportional to the tree height, and thus have time complexity O(lg n), where n is the total number of nodes. These operations are crucial in supporting the heap operations insert and deleteMin.

The percolateUp (siftUp) operation:

[Figure: percolateUp on a min-heap in which the heap order is violated at the node valued 12]

Note that the time of each step (each level) is constant, i.e., compare with the parent and swap.

The percolateDown (siftDown) operation:

[Figure: percolateDown on a min-heap in which the heap order is violated at the node valued 43; at each level the question is "Who is smaller?" among its children]

Each step of percolateDown finds the smaller of the two children (if they exist), then swaps it with the violating node.

Implementation of the heap operations:

insert(): insert a node next to the last node, call percolateUp

from it, treating the new node as a violation.

deleteMin(): delete the root node, move the last node to the

root, then call percolateDown from the root.

findMin(): return the root’s value.

Finally, a binary heap can be implemented using an array storing the heap elements from the root towards the leaves, level by level and from left to right within each level. The left-completeness property guarantees that there are no "holes" in the array. Also, if we use array indexes 1..n to store n elements, the parent's index (if it exists) of node i is ⌊i/2⌋, the left child's index is 2i, and the right child's is 2i + 1 (if they exist). The time complexity of both deleteMin and insert is O(lg n); the time complexity of findMin is O(1).
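Putting insert, deleteMin and findMin together with percolateUp and percolateDown gives a compact min-heap. This is a Python sketch under the 1-based convention just described: index 0 of the list is an unused placeholder so that the parent of i is i // 2 and the children are 2i and 2i + 1. The class and method names are our own, and delete_min assumes the heap is non-empty.

```python
class MinHeap:
    """Binary min-heap kept in a Python list using indexes 1..n (index 0 is unused)."""

    def __init__(self):
        self.a = [None]                      # placeholder so the root lives at index 1

    def __len__(self):
        return len(self.a) - 1

    def find_min(self):
        return self.a[1]                     # O(1): the minimum is at the root

    def insert(self, x):
        self.a.append(x)                     # new node goes next to the last node
        self._percolate_up(len(self.a) - 1)

    def delete_min(self):
        root = self.a[1]                     # assumes the heap is non-empty
        last = self.a.pop()                  # take the last node off the array
        if len(self.a) > 1:                  # if nodes remain, move it to the root
            self.a[1] = last
            self._percolate_down(1)
        return root

    def _percolate_up(self, i):
        # parent of i is i // 2; stop at the root or when the parent is not larger
        while i > 1 and self.a[i] < self.a[i // 2]:
            self.a[i], self.a[i // 2] = self.a[i // 2], self.a[i]
            i //= 2

    def _percolate_down(self, i):
        n = len(self.a) - 1
        while 2 * i <= n:                    # node i has at least a left child
            c = 2 * i
            if c + 1 <= n and self.a[c + 1] < self.a[c]:
                c += 1                       # use the smaller of the two children
            if self.a[i] <= self.a[c]:
                break
            self.a[i], self.a[c] = self.a[c], self.a[i]
            i = c


h = MinHeap()
for x in [13, 21, 16, 24, 31, 19, 68, 65, 26, 32]:
    h.insert(x)
print(h.find_min())                          # 13
out = [h.delete_min() for _ in range(len(h))]
print(out)                                   # values come out in ascending order
```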

An array implementation of a binary heap:

Number the nodes (starting at 1) by levels, from top to bottom and left to right within each level. Parent to children: index i to 2i and 2i + 1.

[Figure: a heap with nodes numbered 1 to 6 and the matching array: [1] is the root, [2] and [3] are the level 1 nodes, [4], [5], [6], ... are the level 2 nodes]

Convert an array T[1..n] into a heap (known as heapify):

A top-down method: repeatedly call insert(), inserting T[i] into the heap T[1..(i - 1)], for i = 2 to n.

[Figure: successive states of T during top-down heapify]
• Initially, T[1] by itself is a heap.
• Insert 12 into T[1..1], making T[1..2] a heap.
• Insert 9 into T[1..2], making T[1..3] a heap.
• Insert 13 into T[1..3], making T[1..4] a heap.
• Insert 7 into T[1..4], making T[1..5] a heap.

The total time complexity of top-down heapify is O(n lg n).
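Top-down heapify is just percolateUp applied to T[2], T[3], ..., T[n] in turn, since T[1..i-1] is already a heap when T[i] is considered. In this Python sketch T is a 1-based list with T[0] unused, and the input 21, 12, 9, 13, 7 is our assumption about the array behind the captions (it reproduces their insertion order 12, 9, 13, 7). The function names are our own.

```python
def percolate_up(T, i):
    """Min-heap percolateUp on a 1-based list T (T[0] unused)."""
    while i > 1 and T[i] < T[i // 2]:
        T[i], T[i // 2] = T[i // 2], T[i]
        i //= 2

def heapify_top_down(T):
    """Make T[1..n] a min-heap by inserting T[i] into the heap T[1..i-1], i = 2..n."""
    for i in range(2, len(T)):
        percolate_up(T, i)   # T[1..i-1] is already a heap; T[i] is the new node

T = [None, 21, 12, 9, 13, 7]   # T[0] is a placeholder
heapify_top_down(T)
print(T[1:])                   # [7, 9, 12, 21, 13]
```

Each of the n - 1 insertions may climb O(lg n) levels, which is where the O(n lg n) total comes from.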

A bottom-up heapify method:

[Figure: the array T with n = 5 keys, 21, 12, 13, 9, 7, drawn as a complete binary tree]

With n = 5, start at node ⌊n/2⌋, the node of the highest index that has any children:

For i = ⌊n/2⌋ down to 1
    call percolateDown(i)   /* percolate down T[i], making the subtree rooted at i a heap */

Complexity analysis: node 1 travels down h levels during percolateDown, nodes 2 and 3 each (h - 1) levels, nodes 4 through 7 each (h - 2) levels, etc. The total number of levels traveled is

$$h + 2(h-1) + 4(h-2) + \cdots + 2^{h-1}\bigl(h-(h-1)\bigr) = 2^{h-1}\left(1 + 2\cdot\tfrac{1}{2} + 3\left(\tfrac{1}{2}\right)^{2} + \cdots + h\left(\tfrac{1}{2}\right)^{h-1}\right) \le 2^{h-1}\cdot\frac{1}{\left(1-\tfrac{1}{2}\right)^{2}} \le 2n,$$

because $2^{h-1} \le n/2$ (since $h \le \lg n$), and the infinite series $1 + 2x + 3x^2 + \cdots = 1/(1-x)^2$ when $|x| < 1$. Thus, the time complexity of heapify is O(n).
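A Python sketch of the bottom-up method, again with a 1-based list (T[0] unused). To make the analysis concrete, percolate_down here also returns how many levels the node traveled (one per swap), and the loop at the end checks the 2n bound on random inputs. The function names are our own, and the example array is the one read from the figure.

```python
import random

def percolate_down(T, i, n):
    """Min-heap percolateDown of T[i] within T[1..n]; returns the levels traveled."""
    levels = 0
    while 2 * i <= n:
        c = 2 * i
        if c + 1 <= n and T[c + 1] < T[c]:
            c += 1                      # the smaller child
        if T[i] <= T[c]:
            break
        T[i], T[c] = T[c], T[i]
        i = c
        levels += 1
    return levels

def heapify_bottom_up(T):
    """Make T[1..n] a min-heap; returns the total number of levels traveled."""
    n = len(T) - 1
    return sum(percolate_down(T, i, n) for i in range(n // 2, 0, -1))

T = [None, 21, 12, 13, 9, 7]
heapify_bottom_up(T)
print(T[1:])   # [7, 9, 13, 21, 12]

# The O(n) bound: the total number of levels traveled never exceeds 2n.
for n in (10, 100, 1000):
    U = [None] + random.sample(range(10 * n), n)
    assert heapify_bottom_up(U) <= 2 * n
```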