Works by Masataka Goto

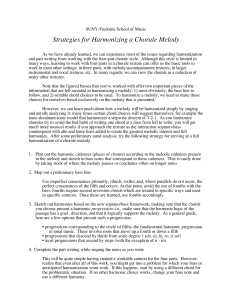

Dr. Masataka Goto (photo taken from Goto's home page)

National Institute of Advanced Industrial Science and Technology (AIST)

Home page: http://staff.aist.go.jp/m.goto/

Presented by Beinan Li, Music Tech @ McGill, 2005-02-10

Content

- Goto's personal info
- MIR / music understanding
- Speech interface
- Speech Completion
- Speech Spotter
- Interactive music system
- Real-time Beat Tracking System for Musical Acoustic Signals
- Real-time F0 Estimation of Melody and Bass Lines in Musical Audio Signals
- SmartMusicKIOSK: Music Listening Station with Chorus-Search Function
- A Distributed Cooperative System to Play MIDI Instruments
- Interactive Performance of a Music-controlled CG Dancer
- VirJa Session (A Virtual Jazz Session System)
- Music database

Masataka Goto

- A researcher at the National Institute of Advanced Industrial Science and Technology (AIST), a newly established Japanese public research organization formed from 15 former research institutes
- A researcher of Precursory Research for Embryonic Science and Technology (PRESTO), "Information and Human Activity" research area, Japan Science and Technology Corporation (JST)
- Doctoral degree from Waseda University, 1998
- Research interests

Real-time Beat Tracking System (2001)

The system recognizes a hierarchical beat structure (quarter-note, half-note, and measure levels) in real-world audio signals sampled from popular-music compact discs.
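The sketch below makes the three levels concrete by grouping quarter-note beat times into half-note and measure levels. It is a minimal illustration, not Goto's implementation. It assumes 4/4 time and takes two things as given: the beat times and the index of one beat that starts a measure. The actual system instead infers the half-note and measure levels from cues such as chord changes and drum patterns. The names `BeatStructure` and `build_hierarchy` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BeatStructure:
    quarter: list[float]  # quarter-note (beat) times in seconds
    half: list[float]     # half-note times: every 2nd beat
    measure: list[float]  # measure-start times: every 4th beat (4/4 assumed)


def build_hierarchy(beat_times: list[float], downbeat_index: int = 0) -> BeatStructure:
    """Derive half-note and measure levels from quarter-note beats.

    Hypothetical inputs: assumes 4/4 time and that beat_times[downbeat_index]
    starts a measure. Beats are labeled by their phase relative to that beat.
    """
    half = [t for i, t in enumerate(beat_times) if (i - downbeat_index) % 2 == 0]
    measure = [t for i, t in enumerate(beat_times) if (i - downbeat_index) % 4 == 0]
    return BeatStructure(list(beat_times), half, measure)


if __name__ == "__main__":
    beats = [0.5 * i for i in range(8)]  # 120 BPM: one beat every 0.5 s
    s = build_hierarchy(beats, downbeat_index=1)
    print(s.half)     # prints [0.5, 1.5, 2.5, 3.5]
    print(s.measure)  # prints [0.5, 2.5]
```

Python's `%` returns a non-negative result for a positive modulus. Beats that fall before the given downbeat are therefore still assigned to the correct level.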
It works with or without drums, assumes a 4/4 time signature and a roughly constant tempo, and uses selectively applied musical knowledge (heuristics). It succeeded on 43 of 45 songs.

Main issues of beat tracking from acoustic signals:

- detecting beat-tracking cues in audio signals
- interpreting the cues to infer the beat structure
- dealing with the ambiguity of interpretation

Cues:

- onset times in different frequency ranges
- chord-change possibilities, computed over strips delimited by provisional beat times
- drum patterns of the bass and snare drums

Rhythmic difficulty is quantified from power transition, and beat hypotheses are evaluated by multiple agents.

The chord-change possibility is derived from the dominant frequencies, found as histogram peaks within each time strip. (Picture taken from Goto, 2001)

Selectively Used Musical Knowledge

Onset time:

- (a-1) "A frequent inter-onset interval is likely to be the inter-beat interval."
- (a-2) "Onset times tend to coincide with beat times (i.e., sounds are likely to occur on beats)."

Chord change:

- (b-1) "Chords are more likely to change on beat times than on other positions."
- (b-2) "… on half-note times than on other positions of beat times."
- (b-3) "… at the beginnings of measures than at other positions of half-note times."

Drum pattern (used to re-evaluate hypotheses):

- (c-1) "The beginning of the input drum pattern indicates a half-note time."
- (c-2) "The input drum pattern has the appropriate inter-beat interval."

Real-time F0 Estimation of Melody and Bass Lines (2004)

- Music scene description based on a subsymbolic representation
- Finds the predominant harmonic structure, rather than a single fundamental frequency, within a restricted frequency range
- Melody lines are played by a voice or a single-tone mid-range instrument; bass lines by a bass guitar or contrabass
- Average detection rate: 88.4% for the melody line and 79.9% for the bass line

Main problem: which F0 in a polyphonic mixture belongs to the melody or the bass line? The number of sound sources is unknown.
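A toy mixture model can illustrate the core idea: finding the most predominant harmonic structure without fixing the number of sound sources. The observed spectrum is treated as a probability distribution over frequency bins. It is explained as a weighted sum of harmonic tone models, one per candidate F0 in a restricted range, and the weights are fitted with a plain expectation-maximization (EM) loop. This is a simplified sketch under stated assumptions, not the method of the 2004 paper in the references. It assumes:

- linear frequency bins;
- tone models with weight 1/h exactly at the h-th harmonic bin;
- a synthetic two-source input;
- hypothetical function names (`harmonic_tone_model`, `estimate_f0_weights`, `synth`).

```python
def harmonic_tone_model(f0_bin: int, n_bins: int) -> dict[int, float]:
    """Toy tone model (assumption): weight 1/h at bin h*f0_bin for every harmonic
    below n_bins, normalized into a probability distribution over bins."""
    raw = {h * f0_bin: 1.0 / h for h in range(1, n_bins // f0_bin + 1) if h * f0_bin < n_bins}
    total = sum(raw.values())
    return {k: v / total for k, v in raw.items()}


def estimate_f0_weights(spectrum: dict[int, float], candidates: range,
                        n_bins: int, iters: int = 300) -> dict[int, float]:
    """Fit mixture weights w(F) for every candidate F0 with EM.

    The spectrum is modeled as sum_F w(F) * p(x | F); the number of sources
    is never specified, so every candidate competes for the observed energy.
    """
    total = sum(spectrum.values())
    obs = {x: v / total for x, v in spectrum.items() if v > 0}
    models = {f0: harmonic_tone_model(f0, n_bins) for f0 in candidates}
    w = {f0: 1.0 / len(models) for f0 in models}  # start from uniform weights
    for _ in range(iters):
        # Mixture density at each observed bin under the current weights.
        mix = {x: sum(w[f] * models[f].get(x, 0.0) for f in models) for x in obs}
        # E-step (responsibilities) and M-step (new weights) in one pass:
        # each candidate collects its responsibility-weighted share of the energy.
        w = {f: sum(p * w[f] * models[f].get(x, 0.0) / mix[x]
                    for x, p in obs.items() if mix[x] > 0)
             for f in models}
    return w


def synth(sources: list[tuple[int, float]], n_bins: int) -> dict[int, float]:
    """Hypothetical test signal: a sum of toy tone models with given amplitudes."""
    spectrum: dict[int, float] = {}
    for f0, amp in sources:
        for x, v in harmonic_tone_model(f0, n_bins).items():
            spectrum[x] = spectrum.get(x, 0.0) + amp * v
    return spectrum


if __name__ == "__main__":
    N_BINS = 200  # hypothetical: 200 linear-frequency bins
    spec = synth([(20, 0.6), (33, 0.4)], N_BINS)                # two sources, one louder
    weights = estimate_f0_weights(spec, range(15, 61), N_BINS)  # restricted F0 range
    print(max(weights, key=weights.get))  # prints 20 (the predominant F0 bin)
```

The largest weight marks the predominant F0, and no source count is ever passed in. A real system would also need the temporal-continuity step to choose a stable trajectory across frames.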
The system must select among several candidates.

Assumptions:

- The melody and bass have a harmonic structure, regardless of whether the F0's own frequency component is present.
- Within its own frequency range, the melody (or bass) has the most predominant harmonic structure ("MPHS").
- The melody and bass lines have temporally continuous F0 trajectories during a musical note.

Method:

- Limit the frequency range: the middle- and high-frequency regions for the melody, the low-frequency region for the bass. The limit applies to the frequency components considered, whether or not the F0 itself lies within the limited range.
- Find the MPHS and its F0 by viewing the observed frequency components as a weighted mixture of all possible harmonic-structure tone models, without assuming the number of sound sources.
- Deal with ambiguity by considering the candidates' temporal continuity and selecting the most dominant and stable F0 trajectory.

SmartMusicKIOSK: Music Listening Station with Chorus-Search Function (2004)

- Music-playback interface for trial listening and for general music selection and sampling
- Function for jumping to the chorus section
- Visualization of the song structure (picture taken from Goto's home page)

Speech Completion (2002)

- Helps the user recall uncertain phrases and saves effort when the input phrase is long.
- Based on a natural phenomenon: a hesitating speaker lengthens a vowel, uttering a filled pause (e.g., "Er…").
- Displays completion candidates that acoustically resemble the uttered fragment, for the user to choose from.
- Filled pauses show small fundamental-frequency (voice-pitch) transitions and small spectral-envelope deformations.
- Uses a vocabulary tree and an HMM-based speech recognizer.
- English with a Japanese accent? (vowel -> consonant)

Speech Spotter (2004)

- Allows the user to enter voice commands into a speech recognizer in the midst of natural human-human conversation.
- Based on detecting a voice cue: a filled pause and high-pitched speech.
- Application: an on-demand information system for assisting human-human conversation (e.g., a weather inquiry during a talk)
- Application: a music-playback system for enriching telephone conversation (i.e.
BGM jukebox)

A Distributed Cooperative System to Play MIDI Instruments (2002)

- Remote Music Control Protocol (RMCP), an extension of MIDI
- Transmits symbolized multimedia information over a network
- UDP/IP, client-server communication over Ethernet or the Internet
- Information sharing by broadcast, and time scheduling using time stamps

Interactive Performance of a Music-controlled CG Dancer (1997)

- A CG character enhances communication between musicians in a real jam session by drawing their visual attention.
- A successful CG dance depends on the interactions between each musician and the CG character. For example, even when the guitarist plays, the CG character does not move unless the drummer determines the motion timing.

VirJa Session (A Virtual Jazz Session System) (1999)

- Enables distributed computer players to listen to the performances of other computer players, as well as the human's performance, and to interact with each other.
- Built on top of the two preceding systems.

RWC Music Database (2002-)

- RWC (Real World Computing) Music Database: copyright-cleared
- A common foundation for research, and a benchmark
- Built by the RWC Music Database Sub-Working Group (chaired by Goto) of the Real World Computing Partnership (RWCP) of Japan
- The world's first large-scale music database compiled specifically for research purposes
- Six original collections, 315 pieces, with:
  - original audio signals
  - MIDI files
  - text files of lyrics
  - individual sounds at half-tone intervals
  - variations of playing styles, dynamics, etc.

References

- Goto's home page: http://staff.aist.go.jp/m.goto/
- Masataka Goto: A Real-time Music-scene-description System: Predominant-F0 Estimation for Detecting Melody and Bass Lines in Real-world Audio Signals. Speech Communication (ISCA Journal), Vol. 43, No. 4, pp. 311-329, 2004.
- Masataka Goto: An Audio-based Real-time Beat Tracking System for Music With or Without Drum-sounds. Journal of New Music Research, Vol. 30, No. 2, pp. 159-171, June 2001.
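Speech Completion detects filled pauses by two properties: small F0 transitions and small spectral-envelope deformations. Speech Spotter also relies on filled pauses as a voice cue. The sketch below turns the Speech Completion criterion into a frame-wise stability test, assuming several inputs are already computed:

- per-frame F0 values in Hz, with 0 marking unvoiced frames;
- per-frame spectral envelopes;
- hypothetical thresholds: 0.5 semitone, a Euclidean envelope distance of 1.0, and 20 frames (200 ms at an assumed 10 ms hop).

The function names are hypothetical, and this is not Goto's detector. It only shows how the two stated criteria combine: a run of frames counts as a candidate filled pause when every consecutive pair is stable and the run is long enough.

```python
import math


def is_stable_transition(prev_f0: float, f0: float, prev_env: list[float], env: list[float],
                         max_semitones: float, max_env_dist: float) -> bool:
    """True when both pitch and spectral envelope barely change between two frames."""
    if prev_f0 <= 0 or f0 <= 0:
        return False  # unvoiced frame (F0 = 0) cannot belong to a filled pause
    pitch_change = abs(12.0 * math.log2(f0 / prev_f0))  # pitch change in semitones
    env_change = math.dist(env, prev_env)                # Euclidean envelope change
    return pitch_change < max_semitones and env_change < max_env_dist


def detect_filled_pauses(f0s: list[float], envs: list[list[float]], min_frames: int = 20,
                         max_semitones: float = 0.5,
                         max_env_dist: float = 1.0) -> list[tuple[int, int]]:
    """Return (start, end) frame ranges, end exclusive, of stable runs >= min_frames long."""
    segments: list[tuple[int, int]] = []
    start = None
    for i in range(1, len(f0s)):
        if is_stable_transition(f0s[i - 1], f0s[i], envs[i - 1], envs[i],
                                max_semitones, max_env_dist):
            if start is None:
                start = i - 1  # run begins at the earlier frame of the first stable pair
        else:
            if start is not None and i - start >= min_frames:
                segments.append((start, i))
            start = None
    if start is not None and len(f0s) - start >= min_frames:
        segments.append((start, len(f0s)))
    return segments


if __name__ == "__main__":
    # Hypothetical frames: 10 frames of rising pitch, a 30-frame held vowel
    # at 120 Hz, then 10 unvoiced frames; envelopes kept constant.
    f0s = [100.0 * 1.05 ** i for i in range(10)] + [120.0] * 30 + [0.0] * 10
    envs = [[0.0, 0.0, 0.0, 0.0]] * len(f0s)
    print(detect_filled_pauses(f0s, envs))  # prints [(10, 40)]
```

Requiring both criteria at once reflects the slide's description. A held vowel keeps both pitch and envelope steady, while ordinary speech changes at least one of them from frame to frame.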