01-Intro - Fordham University Computer and Information


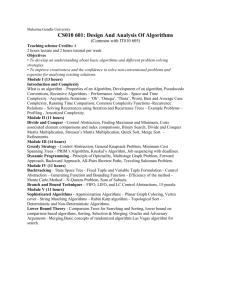

Computer Algorithms
Lecture 1: Introduction
Some of these slides are courtesy of D. Plaisted, UNC and M. Nicolescu, UNR

Class Information
• Instructor: Elena Filatova
• E-mail: filatova@fordham.edu
• Office: 334
• Office hours:
  – Tuesday, Friday 12:30 – 2:00pm
  – Thursday 4:00 – 5:00pm
  – Additional office hours: by appointment
  – E-mail: put 4080 at the beginning of the subject line
• Web page: Blackboard
  – It is your responsibility to check the class Blackboard regularly
  – Any questions related to course material should be posted on the Blackboard discussion board
• Main textbook: Introduction to Algorithms by Cormen et al. (3rd ed.)

Grading
• Homework assignments: 30%
  – Electronic submission through Blackboard
  – Done individually
• Midterm: 25%
• Final: 35%
• In-class quizzes: 5%
  – Without prior notification
  – Based on the material from the previous class
  – Absolutely no make-up quizzes
  – Two worst scores will be dropped
• Class participation: 5%
  – Attendance is mandatory
  – No electronic devices are allowed in the classroom (unless with special permission)

Pre-requisites
• Programming (CS I)
  – C
  – C++
• Data Structures (2200)
• Discrete math: not necessary but very helpful

Approach
• Analytical
• Build a mathematical model of a computer
• Study properties of algorithms on this model
• Reason about algorithms
• Prove facts about time taken for algorithms

Course Outline
Intro to algorithm design, analysis, and applications
• Algorithm Analysis
  – Proof of algorithm correctness, Asymptotic Notation, Recurrence Relations, Probability & Combinatorics, Proof Techniques, Inherent Complexity.
• Data Structures
  – Lists, Heaps, Graphs, Trees, Balanced Trees
• Sorting & Ordering
  – Mergesort, Heapsort, Quicksort, Linear-time Sorts (bucket, counting, radix sort), Selection, Other sorting methods.
• Algorithmic Design Paradigms
  – Divide and Conquer, Dynamic Programming, Greedy Algorithms, Graph Algorithms, Randomized Algorithms

Goals
• Be very familiar with a collection of core algorithms.
  – CS classics
  – A lot of examples on-line for most languages/data structures
• Be fluent in algorithm design paradigms: divide & conquer, greedy algorithms, randomization, dynamic programming, approximation methods.
• Be able to analyze the correctness and runtime performance of a given algorithm.
• Be intimately familiar with basic data structures.
• Be able to apply techniques in practical problems.

Algorithms
• Informally,
  – A tool for solving a well-specified computational problem.
  – One formal definition ~ Turing Machine (4090 Theory of Computation)
Input → Algorithm → Output
• Example: sorting
  – input: A sequence of numbers.
  – output: An ordered permutation of the input.
  – issues: correctness, efficiency, storage, etc.

Why Study Algorithms?
• Necessary in any computer programming problem
  – Improve algorithm efficiency: run faster, process more data, do something that would otherwise be impossible
  – Solve problems of significantly large size
  – Technology only improves things by a constant factor
• Compare algorithms
• Algorithms as a field of study
  – Learn about a standard set of algorithms
  – New discoveries arise
  – Numerous application areas
• Learn techniques of algorithm design and analysis

Roadmap
• Different problems
  – Sorting
  – Searching
  – String processing
  – Graph problems
  – Geometric problems
  – Numerical problems
• Different design paradigms
  – Divide-and-conquer
  – Incremental
  – Dynamic programming
  – Greedy algorithms
  – Randomized/probabilistic

Analyzing Algorithms
• Predict the amount of resources required:
  – memory: how much space is needed?
  – computational time: how fast the algorithm runs?
• FACT: running time grows with the size of the input
• Input size (number of elements in the input)
  – Size of an array, polynomial degree, # of elements in a matrix, # of bits in the binary representation of the input, vertices and edges in a graph
Def: Running time = the number of primitive operations (steps) executed before termination
  – Arithmetic operations (+, -, *), data movement, control, decision making (if, while), comparison

Algorithm Efficiency vs. Speed
E.g.: sorting n numbers. Sort $10^6$ numbers ($n = 10^6$)!
• Friend’s computer = $10^9$ instructions/second
• Friend’s algorithm = $2n^2$ instructions
• Your computer = $10^7$ instructions/second
• Your algorithm = $50 n \lg n$ instructions
Your friend: $\frac{2 \cdot (10^6)^2 \text{ instructions}}{10^9 \text{ instructions/second}} = 2000 \text{ seconds}$
You: $\frac{50 \cdot 10^6 \lg 10^6 \text{ instructions}}{10^7 \text{ instructions/second}} \approx 100 \text{ seconds}$
20 times better!!

Algorithm Analysis: Example
• Alg.: MIN (a[1], …, a[n])
    m ← a[1];
    for i ← 2 to n
      if a[i] < m then m ← a[i];
• Running time:
  – the number of primitive operations (steps) executed before termination
  T(n) = 1 [first step] + (n) [for loop] + (n-1) [if condition] + (n-1) [the assignment in then] = 3n - 1
• Order (rate) of growth:
  – The leading term of the formula
  – Expresses the asymptotic behavior of the algorithm

Typical Running Time Functions
• 1 (constant running time):
  – Instructions are executed once or a few times
• $\log N$ (logarithmic)
  – A big problem is solved by cutting the original problem into smaller sizes, by a constant fraction at each step
• $N$ (linear)
  – A small amount of processing is done on each input element
• $N \log N$
  – A problem is solved by dividing it into smaller problems, solving them independently and combining the solutions
• $N^2$ (quadratic)
  – Typical for algorithms that process all pairs of data items (double nested loops)
• $N^3$ (cubic)
  – Processing of triples of data (triple nested loops)
• $N^K$ (polynomial)
• $2^N$ (exponential)
  – Few exponential algorithms are appropriate for
    practical use

Why Faster Algorithms?
[Plot: time units versus problem size (n) for f(n) = n, f(n) = log(n), and f(n) = n log(n)]

Asymptotic Notations
• A way to describe behavior of functions in the limit
  – Abstracts away low-order terms and constant factors
  – How we indicate running times of algorithms
  – Describe the running time of an algorithm as n grows to ∞
• O notation: asymptotic “less than or equal”: f(n) “≤” g(n)
• Ω notation: asymptotic “greater than or equal”: f(n) “≥” g(n)
• Θ notation: asymptotic “equality”: f(n) “=” g(n)

Mathematical Induction
• Used to prove a sequence of statements (S(1), S(2), … S(n)) indexed by positive integers.
  S(n): $\sum_{i=1}^{n} i = \frac{n(n+1)}{2}$
• Proof:
  – Basis step: prove that the statement is true for n = 1
  – Inductive step: assume that S(n) is true and prove that S(n+1) is true for all n ≥ 1
• The key to a proof by mathematical induction is to find case n “within” case n+1
• Correctness of an algorithm containing a loop

Recurrences
Def.: Recurrence = an equation or inequality that describes a function in terms of its value on smaller inputs, and one or more base cases
• E.g.: T(n) = T(n-1) + n
• Useful for analyzing recursive algorithms
• Methods for solving recurrences
  – Iteration method
  – Substitution method
  – Recursion tree method
  – Master method

Sorting
Iterative methods:
• Insertion sort
• Bubble sort
• Selection sort
2, 3, 4, 5, 6, 7, 8, 9, 10, J, Q, K, A
Divide and conquer
• Merge sort
• Quicksort
Non-comparison methods
• Counting sort
• Radix sort
• Bucket sort

Types of Analysis
• Worst case (e.g.
  cards in reverse order)
  – Provides an upper bound on running time
  – An absolute guarantee that the algorithm would not run longer, no matter what the inputs are
• Best case (e.g., cards already ordered)
  – Input is the one for which the algorithm runs the fastest
• Average case (general case)
  – Provides a prediction about the running time
  – Assumes that the input is random

Specialized Data Structures
• Problem:
  – Schedule jobs in a computer system
  – Process the job with the highest priority first
• Solution: HEAPS
  – all levels are full, except possibly the last one, which is filled from left to right
  – for any node x: Parent(x) ≥ x
• Operations:
  – Build
  – Insert
  – Extract max
  – Increase key

Graphs
• Applications that involve not only a set of items, but also the connections between them
  – Maps
  – Schedules
  – Hypertext
  – Computer networks
  – Circuits

Searching in Graphs
• Graph searching = systematically follow the edges of the graph so as to visit the vertices of the graph
[Figure: example graph with vertices u, v, w, x, y, z]
• Two basic graph methods:
  – Breadth-first search
  – Depth-first search
  – The difference between them is in the order in which they explore the unvisited edges of the graph
• Graph algorithms are typically elaborations of the basic graph-searching algorithms

Minimum Spanning Trees
• A connected, undirected graph:
  – Vertices = houses, Edges = roads
• A weight $w(u, v)$ on each edge $(u, v) \in E$
Find $T \subseteq E$ such that:
1. T connects all vertices
2. $w(T) = \sum_{(u,v) \in T} w(u, v)$ is minimized
[Figure: example weighted graph on vertices a through i]
Algorithms: Kruskal and Prim

Shortest Path Problems
• Input:
  – Directed graph G = (V, E)
  – Weight function w : E → R
• Weight of path $p = \langle v_0, v_1, \ldots, v_k \rangle$:
  $w(p) = \sum_{i=1}^{k} w(v_{i-1}, v_i)$
• Shortest-path weight from u to v:
  $\delta(u, v) = \begin{cases} \min\{ w(p) : u \overset{p}{\leadsto} v \} & \text{if there exists a path from } u \text{ to } v \\ \infty & \text{otherwise} \end{cases}$

Dynamic Programming
• An algorithm design technique (like divide and conquer)
  – Richard Bellman, optimizing decision processes
  – Applicable to problems with overlapping subproblems
E.g.: Fibonacci numbers:
• Recurrence: F(n) = F(n-1) + F(n-2)
• Boundary conditions: F(1) = 0, F(2) = 1
• Compute: F(3) = 1, F(4) = 2, F(5) = 3
• Solution: store the solutions to subproblems in a table
• Applications:
  – Assembly line scheduling, matrix chain multiplication, longest common subsequence of two strings, 0-1 Knapsack problem

Greedy Algorithms
• Similar to dynamic programming, but simpler approach
  – Also used for optimization problems
• Idea: When we have a choice to make, make the one that looks best right now
  – Make a locally optimal choice in hope of getting a globally optimal solution
• Greedy algorithms don’t always yield an optimal solution
• Applications:
  – Activity selection, fractional knapsack, Huffman codes
• Problem
  – Schedule the largest possible set of non-overlapping activities for B21

  #  Start    End      Activity
  1  8:00am   9:15am   Database systems class
  2  8:30am   10:30am  Movie presentation (refreshments served)
  3  9:20am   11:00am  Data structures class
  4  10:00am  noon     Programming club mtg. (Pizza provided)
  5  11:30am  1:00pm   Computer graphics class
  6  1:05pm   2:15pm   Analysis of algorithms class
  7  2:30pm   3:00pm   Computer security class
  8  noon     4:00pm   Computer games contest (refreshments served)
  9  4:00pm   5:30pm   Operating systems class

How to Succeed in this Course
• Start early on all assignments. Don’t procrastinate
• Complete all reading before class
• Participate in class
• Review after each class
• Be formal and precise on all problem sets and in-class exams

Basics
• Introduction to algorithms, complexity, and proof of correctness. (Chapters 1 & 2)
• Asymptotic Notation.
  (Chapter 3.1)
• Goals
  – Know how to write formal problem specifications.
  – Know about computational models.
  – Know how to measure the efficiency of an algorithm.
  – Know the difference between upper and lower bounds and what they convey.
  – Be able to prove algorithms correct and establish computational complexity.

Divide-and-Conquer
• Designing Algorithms. (Chapter 2.3)
• Recurrences. (Chapter 4)
• Quicksort. (Chapter 7)
• Divide-and-conquer and mathematical induction
• Goals
  – Know when the divide-and-conquer paradigm is an appropriate one.
  – Know the general structure of such algorithms.
  – Express their complexity using recurrence relations.
  – Determine the complexity using techniques for solving recurrences.
  – Memorize the common-case solutions for recurrence relations.

Randomized Algorithms
• Probability & Combinatorics. (Chapter 5)
• Quicksort. (Chapter 7)
• Hash Tables. (Chapter 11)
• Goals
  – Be thorough with basic probability theory and counting theory.
  – Be able to apply the theory of probability to the following.
    • Design and analysis of randomized algorithms and data structures.
    • Average-case analysis of deterministic algorithms.
  – Understand the difference between average-case and worst-case runtime, esp. in sorting and hashing.

Sorting & Selection
• Heapsort (Chapter 6)
• Quicksort (Chapter 7)
• Bucket Sort, Radix Sort, etc. (Chapter 8)
• Selection (Chapter 9)
• Goals
  – Know the performance characteristics of each sorting algorithm, when they can be used, and practical coding issues.
  – Know the applications of binary heaps.
  – Know why sorting is important.
  – Know why linear-time median finding is useful.

Search Trees
• Binary Search Trees – Not balanced (Chapter 12)
• Red-Black Trees – Balanced (Chapter 13)
• Goals
  – Know the characteristics of the trees.
  – Know the capabilities and limitations of simple binary search trees.
  – Know why balancing heights is important.
  – Know the fundamental ideas behind maintaining balance during insertions and deletions.
  – Be able to apply these ideas to other balanced tree data structures.

Dynamic Programming
• Dynamic Programming (Chapter 15): an algorithm design technique (like divide-and-conquer)
• Goals
  – Know when to apply dynamic programming and how it differs from divide and conquer.
  – Be able to systematically move from one to the other.

Graph Algorithms
• Basic Graph Algorithms (Chapter 22)
• Goals
  – Know how to represent graphs (adjacency matrix and edge-list representations).
  – Know the basic techniques for graph searching.
  – Be able to devise other algorithms based on graph-searching algorithms.
  – Be able to “cut-and-paste” proof techniques as seen in the basic algorithms.

Greedy Algorithms
• Greedy Algorithms (Chapter 16)
• Minimum Spanning Trees (Chapter 23)
• Shortest Paths (Chapter 24)
• Goals
  – Know when to apply greedy algorithms and their characteristics.
  – Be able to prove the correctness of a greedy algorithm in solving an optimization problem.
  – Understand where minimum spanning trees and shortest path computations arise in practice.
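
To make the greedy paradigm from the outline above concrete, here is a minimal Python sketch of activity selection applied to the room B21 schedule shown earlier in the lecture. It is an illustration, not code from the slides: the names `select_activities`, `minutes`, and `SCHEDULE` are assumptions, times are converted to minutes after midnight, and two activities are treated as compatible when one starts no earlier than the other ends. The locally optimal choice used here is "take the compatible activity that finishes earliest".

```python
def select_activities(activities):
    """Greedy activity selection.

    activities: iterable of (id, start, end) tuples.
    Returns the ids of a largest set of non-overlapping activities.
    """
    chosen = []
    last_end = float("-inf")
    # Greedy choice: consider activities in order of finishing time.
    for ident, start, end in sorted(activities, key=lambda a: a[2]):
        if start >= last_end:  # compatible with everything chosen so far
            chosen.append(ident)
            last_end = end
    return chosen


def minutes(hour, minute=0):
    """Convert a 24-hour clock time to minutes after midnight."""
    return 60 * hour + minute


# The B21 schedule from the slides, as (id, start, end).
SCHEDULE = [
    (1, minutes(8), minutes(9, 15)),       # Database systems class
    (2, minutes(8, 30), minutes(10, 30)),  # Movie presentation
    (3, minutes(9, 20), minutes(11)),      # Data structures class
    (4, minutes(10), minutes(12)),         # Programming club mtg.
    (5, minutes(11, 30), minutes(13)),     # Computer graphics class
    (6, minutes(13, 5), minutes(14, 15)),  # Analysis of algorithms class
    (7, minutes(14, 30), minutes(15)),     # Computer security class
    (8, minutes(12), minutes(16)),         # Computer games contest
    (9, minutes(16), minutes(17, 30)),     # Operating systems class
]

print(select_activities(SCHEDULE))  # prints [1, 3, 5, 6, 7, 9]
```

Sorting dominates the cost, so this sketch runs in O(n log n) time; the single pass after sorting is linear.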

Weekly Reading Assignment
• Chapters 1, 2, and 3
• Appendix A
(Textbook: CLRS)

Insertion Sort
• Good for sorting a small number of elements
• Works like sorting a hand of playing cards
  – Start with an empty hand and the cards face down on the table
  – Then remove one card at a time from the table and insert it into the correct position in the left hand
  – To find the correct position for a card, compare it with each of the cards already in the hand from right to left
  – At all times, the cards that are already in the left hand are sorted, and those cards were originally on the top of the pile
• Example

Pseudo-Code Conventions
• Indentation as block structure
• Loop and conditional constructs similar to those in Pascal or Java, such as while, for, repeat, if-then-else
• Symbol ► starts a comment line (no execution time)
• Using ← instead of = and allowing i ← j ← e
• Variables local to the given procedure
• Page 19 of the textbook

Insertion Sort: Pseudo-Code
Definiteness: each instruction is clear and unambiguous
Visualization: University of San Francisco

Algorithm Analysis
• Assumptions:
  – Random-Access Machine (RAM)
    • Operations are executed one after another
    • No concurrent operations
    • Only primitive instructions
      – Arithmetic (+, -, /, *, floor, ceiling)
      – Data movement (load, store, copy)
      – Control operators (conditional/unconditional branch, subroutine call, return)
    • Primitive instructions take constant time
• Interested in time complexity: amount of time to complete an algorithm

What to measure? How to measure?
• Input size: depends on the problem
  – Number of items for sorting (3 or 1000)
    • Even for sequences of the same size, the sorting time can vary
  – Total number of bits for multiplying two integers
  – Sometimes, more than one input
• Running time: number of primitive operations executed
  – Machine-independent
  – Each line of pseudo-code takes constant time
  – The constant may differ from line to line

Analysis of Insertion Sort

Running Time
• Best-case: the input array is in the correct order
• Worst-case: the input array is in the reverse order
• Average-case

Insertion sort running time
• Best-case: linear function
• Worst-case: quadratic function
• Average-case: best-case or worst-case??
• Order (rate) of growth:
  – The leading term of the formula
  – Expresses the asymptotic behavior of the algorithm
• Given two algorithms (linear and quadratic) which one will you choose?
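
The best-case and worst-case claims above can be checked with a small experiment. The following is a minimal Python sketch of insertion sort, not the textbook pseudo-code itself: the function name `insertion_sort` is an assumption, and counting key comparisons is used here as a simple stand-in for counting primitive operations.

```python
def insertion_sort(items):
    """Sort a copy of items with insertion sort.

    Returns (sorted_list, comparisons), where comparisons counts how many
    times the key was compared against an element already in the "hand".
    """
    a = list(items)
    comparisons = 0
    for j in range(1, len(a)):
        key = a[j]            # the next card picked up from the table
        i = j - 1
        # Scan the sorted prefix a[0..j-1] from right to left.
        while i >= 0:
            comparisons += 1
            if a[i] <= key:   # found the position for key
                break
            a[i + 1] = a[i]   # shift the larger element one slot right
            i -= 1
        a[i + 1] = key
    return a, comparisons


n = 10
_, best = insertion_sort(range(n))          # already sorted input
_, worst = insertion_sort(range(n, 0, -1))  # reverse-sorted input
print(best, worst)  # prints 9 45
```

For sorted input each key needs one comparison, giving n - 1 in total (linear). For reverse-sorted input the j-th key is compared against all j elements before it, giving n(n - 1)/2 in total (quadratic), which matches the 9 and 45 printed for n = 10.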